Abstract

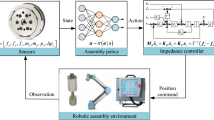

In flexible manufacturing, Deep Reinforcement Learning (DRL) has emerged as a pivotal technology for robotic assembly tasks. Residual reinforcement learning with initial policies has improved sample efficiency and interaction safety, yet context generalization remains difficult in stochastic systems characterized by large random errors and variable backgrounds. To address these challenges, this study introduces a novel framework that integrates task attention-based multimodal fusion with an adaptive error curriculum within a residual reinforcement learning paradigm. First, we formulate a task attention-based multimodal policy that fuses task-centric visual, relative pose, and tactile data into a compact, end-to-end model. This model is explicitly designed to enhance context generalization by improving observability, thereby improving robustness against stochastic errors and variable backgrounds. Second, curriculum residual learning introduces an adaptive error curriculum that modulates the guidance and constraints of a model-based feedback controller. Progressing from perfect to significantly imperfect initial policies incrementally improves policy robustness and the stability of the learning process. Empirical validation shows that our method efficiently acquires a high-precision policy for assembly tasks with clearances as tight as 0.1 mm and error margins up to 20 mm within a 3.5-hour training window, a feat that is challenging for existing RL-based methods. The results indicate a 75% reduction in average completion time and a 34% increase in success rate over the classical two-step approach. An ablation study assesses the contribution of each component of the framework. Real-world task experiments further corroborate the robustness and generalization of our method, which achieves a success rate above 90% in variable contexts.
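
The two mechanisms named in the abstract, a learned residual added to a model-based feedback controller and an error curriculum that grows from zero toward the 20 mm upper bound, can be illustrated with a minimal Python sketch. This is not the authors' implementation: the class `ErrorCurriculum`, the functions `base_action`, `perturbed_target`, and `residual_action`, the proportional controller, the promotion threshold, the window size, and the step size are all hypothetical choices made for illustration. Only the 20 mm bound comes from the abstract.

```python
import random
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class ErrorCurriculum:
    """Hypothetical adaptive curriculum over the error injected into the base controller."""
    max_error_mm: float = 20.0  # upper error bound quoted in the abstract
    step_mm: float = 2.0        # assumed increment per promotion
    promote_at: float = 0.8     # assumed success-rate threshold for promotion
    window: int = 10            # assumed number of recent episodes to average
    level_mm: float = 0.0       # start from a perfect initial policy

    def __post_init__(self) -> None:
        self._recent: List[bool] = []

    def record(self, success: bool) -> float:
        """Log one episode outcome; raise the error level once the policy is reliable."""
        self._recent.append(success)
        self._recent = self._recent[-self.window:]
        full = len(self._recent) == self.window
        if full and sum(self._recent) / self.window >= self.promote_at:
            self.level_mm = min(self.level_mm + self.step_mm, self.max_error_mm)
            self._recent.clear()  # re-evaluate from scratch at the new level
        return self.level_mm


def perturbed_target(target: Sequence[float], level_mm: float,
                     rng: random.Random) -> List[float]:
    """Corrupt the target estimate with uniform noise bounded by the curriculum level."""
    return [t + rng.uniform(-level_mm, level_mm) for t in target]


def base_action(target: Sequence[float], pose: Sequence[float],
                gain: float = 0.5) -> List[float]:
    """Proportional feedback law standing in for the model-based controller."""
    return [gain * (t - p) for t, p in zip(target, pose)]


def residual_action(base: Sequence[float], residual: Sequence[float],
                    limit: float = 1.0) -> List[float]:
    """Add the learned residual to the base action and clip each component."""
    return [max(-limit, min(limit, b + r)) for b, r in zip(base, residual)]
```

In a training loop under these assumptions, each episode would perturb the target with `perturbed_target` at the current `level_mm`, compute `base_action` from the corrupted target, add the policy output through `residual_action`, and report the outcome to `ErrorCurriculum.record`, so the base controller becomes progressively less reliable as the policy improves.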

Data availability and access

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Acknowledgements

This work was supported by the National Key Research and Development Program of China (Grant No. 2021YFB3301400), the National Natural Science Foundation of China (Grant Nos. 52075177 and 52305105), the Research Foundation of Guangdong Province (Grant Nos. 2019A050505001 and 2018KZDXM002), the Guangzhou Research Foundation (Grant Nos. 202002030324 and 201903010028), and the Zhongshan Research Foundation (Grant No. 2020B2020).

Author information

Contributions

Chuang Wang: Conceptualization, Methodology, Software, Writing - original draft. Ze Lin: Software, Investigation. Biao Liu: Visualization, Investigation. Chupeng Su: Writing - review and editing. Gang Chen: Writing - review and editing, Supervision, Funding acquisition. Longhan Xie: Supervision, Funding acquisition. All authors read and approved the final manuscript.

Ethics declarations

Conflicts of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wang, C., Lin, Z., Liu, B. et al. Task attention-based multimodal fusion and curriculum residual learning for context generalization in robotic assembly. Appl Intell 54, 4713–4735 (2024). https://doi.org/10.1007/s10489-024-05417-x

DOI: https://doi.org/10.1007/s10489-024-05417-x