Abstract

Semi-supervised feature selection plays a crucial role in semi-supervised classification tasks by identifying the most informative and relevant features while discarding irrelevant or redundant ones. Many semi-supervised feature selection approaches exploit pairwise constraints. However, these methods either have difficulty automatically determining the appropriate number of features or cannot make full use of the given pairwise constraints. Thus, we propose a constrained feature weighting (CFW) approach for semi-supervised feature selection. CFW has two goals: maximizing a modified hypothesis margin related to cannot-link constraints and minimizing a must-link preserving regularization related to must-link constraints. The former makes the selected features strongly discriminative, and the latter makes samples connected by must-link constraints more similar in the weighted feature space. In addition, L1-norm regularization is incorporated into the objective function of CFW to automatically determine the number of selected features. Extensive experiments on real-world datasets demonstrate that CFW outperforms popular existing supervised and semi-supervised feature selection methods.

Data availability and material

The datasets are openly available in public repositories: http://archive.ics.uci.edu/ml/index.php and https://cam-orl.co.uk/facedatabase.html.

References

Sheikhpour R, Sarram MA, Gharaghani S, Chahooki MAZ (2017) A survey on semi-supervised feature selection methods. Pattern Recogn 64:141–158

Bouchlaghem Y, Akhiat Y, Amjad S (2022) Feature selection: A review and comparative study. E3S Web of Conferences 351:01046

Pang Q, Zhang L (2020) Semi-supervised neighborhood discrimination index for feature selection. Knowl-Based Syst 204:106224

Chen H, Chen H, Li W, Li T, Luo C, Wan J (2022) Robust dual-graph regularized and minimum redundancy based on self-representation for semi-supervised feature selection. Neurocomputing 490:104–123

Jin L, Zhang L, Zhao L (2023) Max-difference maximization criterion: A feature selection method for text categorization. Front Comp Sci 17(1):171337

Jin L, Zhang L, Zhao L (2023) Feature selection based on absolute deviation factor for text classification. Inform Process Manag 60(3):103251

Li Z, Tang J (2021) Semi-supervised local feature selection for data classification. Inf Sci 64(9):192108

Pang Q, Zhang L (2021) A recursive feature retention method for semi-supervised feature selection. Int J Mach Learn Cybern 12(9):2639–2657

Tang B, Zhang L (2019) Multi-class semi-supervised logistic I-Relief feature selection based on nearest neighbor. In: Advances in knowledge discovery and data mining. pp 281–292

Tang B, Zhang L (2020) Local preserving logistic I-Relief for semi-supervised feature selection. Neurocomputing 399:48–64

Sun Y, Todorovic S, Goodison S (2009) Local-learning-based feature selection for high-dimensional data analysis. IEEE Trans Pattern Anal Mach Intell 32(9):1610–1626

Xu J, Tang B, He H, Man H (2016) Semi-supervised feature selection based on relevance and redundancy criteria. IEEE Trans Neural Netw Learn Syst 28(9):1974–1984

Wang C, Hu Q, Wang X, Chen D, Qian Y, Dong Z (2017) Feature selection based on neighborhood discrimination index. IEEE Trans Neural Netw Learn Syst 29(7):2986–2999

He X, Cai D, Niyogi P (2005) Laplacian score for feature selection. Adv Neural Inf Process Syst 18:507–514

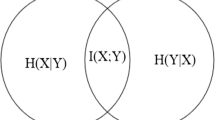

Peng H, Long F, Ding C (2005) Feature selection based on mutual information criteria of max-dependency, max-relevance, and min-redundancy. IEEE Trans Pattern Anal Mach Intell 27(8):1226–1238

Zhao J, Lu K, He X (2008) Locality sensitive semi-supervised feature selection. Neurocomputing 71(10–12):1842–1849

Salmi A, Hammouche K, Macaire L (2020) Similarity-based constraint score for feature selection. Knowl-Based Syst 209:106429

Kalakech M, Biela P, Macaire L, Hamad D (2011) Constraint scores for semi-supervised feature selection: A comparative study. Pattern Recogn Lett 32(5):656–665

Hindawi M, Allab K, Benabdeslem K (2011) Constraint selection-based semi-supervised feature selection. In: 2011 IEEE 11th international conference on data mining. pp 1080–1085

Zhang D, Chen S, Zhou Z-H (2008) Constraint score: A new filter method for feature selection with pairwise constraints. Pattern Recogn 41(5):1440–1451

Benabdeslem K, Hindawi M: Constrained laplacian score for semi-supervised feature selection. In: Joint European conference on machine learning and knowledge discovery in databases. pp 204–218

Hijazi S, Kalakech M, Hamad D, Kalakech A (2018) Feature selection approach based on hypothesis-margin and pairwise constraints. In: 2018 IEEE Middle East and North Africa Communications Conference, pp 1–6

Chen X, Zhang L, Zhao L (2023) Iterative constraint score based on hypothesis margin for semi-supervised feature selection. Knowl-Based Syst 271:110577

Sun Y (2007) Iterative Relief for feature weighting: Algorithms, theories, and applications. IEEE Trans Pattern Anal Mach Intell 29(6):1035–1051

Asuncion A, Newman D (2013) UCI machine learning repository. University of California, Irvine, School of Information and Computer Sciences. http://archive.ics.uci.edu/ml

Pomeroy S, Tamayo P, Gaasenbeek M, Sturla L, Angelo M, McLaughlin M, Kim J, Goumnerova L, Black P, Lau C (2002) Gene expression-based classification and outcome prediction of central nervous system embryonal tumors. Nature 415(24):436–442

Alon U, Barkai N, Notterman DA, Gish K, Ybarra S, Mack D, Levine AJ (1999) Broad patterns of gene expression revealed by clustering analysis of tumor and normal colon tissues probed by oligonucleotide arrays. Proc Natl Acad Sci 96(12):6745–6750

Zhao Z, Zhang K-N, Wang Q, Li G, Zeng F, Zhang Y, Wu F, Chai R, Wang Z, Zhang C (2021) Chinese glioma genome atlas (CGGA): a comprehensive resource with functional genomic data from chinese glioma patients. Genom Proteom Bioinf 19(1):1–12

Ramaswamy S, Tamayo P, Rifkin R, Mukherjee S, Yeang C-H, Angelo M, Ladd C, Reich M, Latulippe E, Mesirov JP (2001) Multiclass cancer diagnosis using tumor gene expression signatures. Proc Natl Acad Sci 98(26):15149–15154

Su AI, Cooke MP, Ching KA, Hakak Y, Walker JR, Wiltshire T, Orth AP, Vega RG, Sapinoso LM, Moqrich A (2002) Large-scale analysis of the human and mouse transcriptomes. Proc Natl Acad Sci 99(7):4465–4470

Gross R (2005) Face databases. In: Handbook of face recognition. Springer, Pittsburgh, USA pp 301–327

Singh D, Febbo PG, Ross K, Jackson DG, Manola J, Ladd C, Tamayo P, Renshaw AA, D’Amico AV, Richie JP (2002) Gene expression correlates of clinical prostate cancer behavior. Cancer Cell 1(2):203–209

Yeoh E-J, Ross ME, Shurtleff SA, Williams WK, Patel D, Mahfouz R, Behm FG, Raimondi SC, Relling MV, Patel A (2002) Classification, subtype discovery, and prediction of outcome in pediatric acute lymphoblastic leukemia by gene expression profiling. Cancer Cell 1(2):133–143

Friedman M (1937) The use of ranks to avoid the assumption of normality implicit in the analysis of variance. J Am Stat Assoc 32(200):675–701

Lai J, Chen H, Li W, Li T, Wan J (2022) Semi-supervised feature selection via adaptive structure learning and constrained graph learning. Knowl-Based Syst 251:109243

Yi Y, Zhang H, Zhang N, Zhou W, Huang X, Xie G, Zheng C (2024) SFS-AGGL: Semi-supervised feature selection integrating adaptive graph with global and local information. Information 15(1):57

Dunn OJ (1961) Multiple comparisons among means. J Am Stat Assoc 56(293):52–64

Chen H, Tiňo P, Yao X (2009) Predictive ensemble pruning by expectation propagation. IEEE Trans Knowl Data Eng 21(7):999–1013

Huang X, Zhang L, Wang B, Li F, Zhang Z (2018) Feature clustering-based support vector machine recursive feature elimination for gene selection. Appl Intell 48(3):594–607

Funding

This work was supported in part by the Natural Science Foundation of the Jiangsu Higher Education Institutions of China under Grant No. 19KJA550002, by the Six Talent Peak Project of Jiangsu Province of China under Grant No. XYDXX-054, and by the Priority Academic Program Development of Jiangsu Higher Education Institutions.

Author information

Contributions

Xinyi Chen: Conceptualization, Methodology, Software, Validation, Formal analysis, Writing - original draft; Li Zhang: Writing - review & editing, Supervision, Project administration; Lei Zhao: Supervision, Project administration; Xiaofang Zhang: Supervision, Project administration.

Ethics declarations

Conflicts of interest

We declare that there have been no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Ethics approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Consent to participate

All authors agreed to participate.

Consent for publication

All authors have consented to publication.

Appendices

Appendix A: Proof of Theorem 1

It is well known that a twice-differentiable function defined on an open convex set is convex if and only if its Hessian matrix is positive semi-definite everywhere on that set. Therefore, to prove that the function \(J_{R}\left( \textbf{w} \right) \) in (19) is convex, it suffices to show that its Hessian matrix is positive semi-definite.

As shown in (21), the must-link preserving regularization can be expressed as \(J_{R}\left( \textbf{w} \right) =\text {trace} \left( 2\textbf{M}^T \textbf{Q} \textbf{M} \right) \), where \(\textbf{Q}=\textbf{F}^T \textbf{L}^{\mathcal {M}} \textbf{F}\). Because the Laplacian matrix \(\textbf{L}^{\mathcal {M}}\) is symmetric and positive semi-definite, \(\textbf{Q}\) is also symmetric and positive semi-definite: for any vector \(\textbf{v}\), \(\textbf{v}^T\textbf{Q}\textbf{v}=\left( \textbf{F}\textbf{v}\right) ^T\textbf{L}^{\mathcal {M}}\left( \textbf{F}\textbf{v}\right) \ge 0\).
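To make the structure of \(J_R\) concrete, the following Python sketch builds a must-link Laplacian, forms \(\textbf{Q}=\textbf{F}^T\textbf{L}^{\mathcal{M}}\textbf{F}\), and evaluates \(\text{trace}(2\textbf{M}^T\textbf{Q}\textbf{M})\). It is illustrative only and rests on assumptions not stated in this section: \(\textbf{F}\) is taken to be the \(n\times d\) data matrix with samples as rows, \(\textbf{L}^{\mathcal{M}}=\textbf{D}-\textbf{A}\) is the unnormalized Laplacian of a binary must-link graph, and \(\textbf{M}=\operatorname{diag}(\textbf{w})\). All function names and data values are hypothetical. Under these assumptions, \(J_R(\textbf{w})\) equals twice the sum of squared distances between must-linked samples in the \(\textbf{w}\)-weighted feature space.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def must_link_laplacian(n: int, pairs: Sequence[Tuple[int, int]]) -> Matrix:
    """Unnormalized Laplacian L = D - A of an (assumed) binary, undirected must-link graph."""
    A = [[0.0] * n for _ in range(n)]
    for i, j in pairs:
        A[i][j] = A[j][i] = 1.0
    return [[(sum(A[i]) if i == j else 0.0) - A[i][j] for j in range(n)] for i in range(n)]


def q_matrix(F: Matrix, L: Matrix) -> Matrix:
    """Q = F^T L F, assuming F is n x d with samples as rows."""
    n, d = len(F), len(F[0])
    LF = [[sum(L[i][k] * F[k][c] for k in range(n)) for c in range(d)] for i in range(n)]
    return [[sum(F[k][r] * LF[k][c] for k in range(n)) for c in range(d)] for r in range(d)]


def quad_form(v: Sequence[float], Q: Matrix) -> float:
    """v^T Q v; nonnegative for every v when Q is positive semi-definite."""
    return sum(v[r] * Q[r][c] * v[c] for r in range(len(v)) for c in range(len(v)))


def j_r(w: Sequence[float], Q: Matrix) -> float:
    """trace(2 M^T Q M) with M = diag(w), which reduces to 2 * sum_r w_r^2 * Q_rr."""
    return 2.0 * sum(w[r] ** 2 * Q[r][r] for r in range(len(w)))


def weighted_must_link_distance(
    w: Sequence[float], F: Matrix, pairs: Sequence[Tuple[int, int]]
) -> float:
    """2 * sum over must-link pairs (i, j) of ||diag(w)(x_i - x_j)||^2."""
    return 2.0 * sum(
        sum((w[r] * (F[i][r] - F[j][r])) ** 2 for r in range(len(w))) for i, j in pairs
    )


F = [[1.0, 0.0, 2.0], [1.5, 3.0, 2.0], [0.0, 1.0, -1.0], [0.2, 1.1, 4.0]]
pairs = [(0, 1), (2, 3)]
w = [0.5, 0.1, 1.0]
Q = q_matrix(F, must_link_laplacian(len(F), pairs))
print(j_r(w, Q), weighted_must_link_distance(w, F, pairs))  # the two values agree up to rounding
```

The equality shows why minimizing \(J_R\) pulls must-linked samples together: only the diagonal entries \(Q_{rr}\), i.e. the per-feature spread of must-linked pairs, enter the regularizer.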

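Then, the first partial derivative of \(J_{R}\left( \textbf{w} \right) \) with respect to \(w_r\) can be calculated as follows:

\[ \frac{\partial J_{R}\left( \textbf{w}\right) }{\partial w_r} = 4 Q_{rr} w_r, \]

where \(Q_{rr}\) is the element in the r-th row and r-th column of \(\textbf{Q}\). The second partial derivative of \(J_{R}\left( \textbf{w} \right) \) with respect to \(w_r\) and \(w_s\) can be expressed as

\[ \frac{\partial ^{2} J_{R}\left( \textbf{w}\right) }{\partial w_r \, \partial w_s} = \begin{cases} 4 Q_{rr}, & r = s, \\ 0, & r \ne s. \end{cases} \]

Therefore, the Hessian matrix \(\textbf{H}\) of \(J_{R}\left( \textbf{w}\right) \) is a diagonal matrix whose diagonal elements are \(H_{rr}=4Q_{rr}\).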

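Since \(\textbf{Q}\) is positive semi-definite, \(Q_{rr}=\textbf{e}_r^T\textbf{Q}\textbf{e}_r \ge 0\) for every r, where \(\textbf{e}_r\) denotes the r-th standard basis vector. A diagonal matrix with nonnegative diagonal entries is positive semi-definite, so the Hessian matrix \(\textbf{H}\) of \(J_{R}\left( \textbf{w}\right) \) is positive semi-definite. Hence, \(J_{R}\left( \textbf{w} \right) \) is a convex function of \(\textbf{w}\), in particular on the feasible region \(\textbf{w} \ge 0 \). This concludes the proof.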
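The diagonal form of the Hessian can be checked numerically. The sketch below again assumes \(\textbf{M}=\operatorname{diag}(\textbf{w})\) (not stated in this section), builds a small symmetric positive semi-definite \(\textbf{Q}=\textbf{B}^T\textbf{B}\) from an arbitrary, hypothetical matrix \(\textbf{B}\), and approximates every Hessian entry of \(J_R\) with central finite differences. Because \(J_R\) is quadratic in \(\textbf{w}\) under this assumption, the approximation should match \(\operatorname{diag}(4Q_{11},\dots,4Q_{dd})\) up to rounding, even though \(\textbf{Q}\) has nonzero off-diagonal entries.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def j_r(w: Sequence[float], Q: Matrix) -> float:
    """trace(2 M^T Q M) with M = diag(w) (assumed), i.e. 2 * sum_r w_r^2 * Q_rr."""
    return 2.0 * sum(w[r] ** 2 * Q[r][r] for r in range(len(w)))


def gram(B: Matrix) -> Matrix:
    """B^T B, which is symmetric positive semi-definite for any real B."""
    rows, cols = len(B), len(B[0])
    return [[sum(B[k][r] * B[k][c] for k in range(rows)) for c in range(cols)] for r in range(cols)]


def fd_hessian(f, w: Sequence[float], h: float = 1e-3) -> Matrix:
    """Central-difference approximation of the Hessian of f at w."""
    d = len(w)

    def shifted(r: int, s: int, a: float, b: float) -> float:
        x = list(w)
        x[r] += a * h
        x[s] += b * h
        return f(x)

    return [
        [
            (shifted(r, s, 1, 1) - shifted(r, s, 1, -1) - shifted(r, s, -1, 1) + shifted(r, s, -1, -1))
            / (4 * h * h)
            for s in range(d)
        ]
        for r in range(d)
    ]


B = [[1.0, 2.0, 0.0], [0.5, -1.0, 3.0], [2.0, 0.0, 1.0]]
Q = gram(B)  # dense PSD matrix with nonzero off-diagonal entries
w = [0.3, 1.2, 0.7]
H = fd_hessian(lambda x: j_r(x, Q), w)
expected = [[4 * Q[r][r] if r == s else 0.0 for s in range(3)] for r in range(3)]
max_err = max(abs(H[r][s] - expected[r][s]) for r in range(3) for s in range(3))
print(max_err)  # close to zero
```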

Appendix B: Proof of Theorem 2

Let \(J_{1}\left( \textbf{w}\right) =\log \left( 1+\exp \left( -\textbf{w}^{T} {\textbf{z}}\right) \right) \) and \(J_{2}\left( \textbf{w}\right) =\lambda _{1}\Vert \textbf{w}\Vert _{1}\); then the objective function (24) can be rewritten as:

Because a nonnegative combination of convex functions is convex, \(J\left( \textbf{w}\right) \) is convex if \(J_{1}\left( \textbf{w}\right) \), \(J_{2}\left( \textbf{w}\right) \), and \(J_{R}\left( \textbf{w} \right) \) are all convex. By Theorem 1, \(J_{R}\left( \textbf{w} \right) \) is convex, so it remains to prove that the other two functions are also convex. As in the proof of Theorem 1, it suffices to show that the Hessian matrices of \(J_{1}\left( \textbf{w}\right) \) and \(J_{2}\left( \textbf{w}\right) \) are positive semi-definite.

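We start by calculating the first and second partial derivatives of \(J_{1}\left( \textbf{w}\right) \) with respect to \(\textbf{w}\):

\[ \frac{\partial J_{1}\left( \textbf{w}\right) }{\partial \textbf{w}} = -\frac{\exp \left( -\textbf{w}^{T} \textbf{z}\right) }{1+\exp \left( -\textbf{w}^{T} \textbf{z}\right) }\, \textbf{z} \]

and

\[ \frac{\partial ^{2} J_{1}\left( \textbf{w}\right) }{\partial \textbf{w}\, \partial \textbf{w}^{T}} = \frac{\exp \left( -\textbf{w}^{T} \textbf{z}\right) }{\left( 1+\exp \left( -\textbf{w}^{T} \textbf{z}\right) \right) ^{2}}\, \textbf{z}\textbf{z}^{T}. \]

Let \(c=\sqrt{\frac{\exp \left( -\textbf{w}^{T} \textbf{z}\right) }{\left( 1+\exp \left( -\textbf{w}^{T} {\textbf{z}}\right) \right) ^{2}}}\), which is well defined because the expression under the square root is positive. Substituting c into the second derivative above, we have

\[ \textbf{H}_1 = c^{2}\textbf{z}\textbf{z}^{T} = \left( c\textbf{z}\right) \left( c\textbf{z}\right) ^{T}, \]

where \(\textbf{H}_1\) is the Hessian matrix of \(J_{1}(\textbf{w})\). Thus, \(\textbf{H}_1\) is the outer product of the column vector \(c{\textbf{z}}\) with its own transpose \(\left( c {\textbf{z}}\right) ^{T}\), so its rank is at most 1 (exactly 1 when \(\textbf{z}\ne \textbf{0}\)). Its only possible non-zero eigenvalue is \(c^2\left\| \textbf{z}\right\| ^2 \ge 0\), with eigenvector \(\textbf{z}\), and all other eigenvalues are 0. Equivalently, for any vector \(\textbf{v}\), \(\textbf{v}^T\textbf{H}_1\textbf{v}=c^2\left( \textbf{z}^T\textbf{v}\right) ^2\ge 0\). Hence \(\textbf{H}_1\) is positive semi-definite, and \(J_{1}\left( \textbf{w}\right) \) is a convex function.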
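The rank-one structure of \(\textbf{H}_1\) can also be verified numerically. This sketch uses arbitrary illustrative values for \(\textbf{w}\) and \(\textbf{z}\) (hypothetical, not taken from the paper's experiments). It compares the closed form \(c^2\textbf{z}\textbf{z}^T\) with an independent finite-difference Hessian of \(J_1\), checks that \(\textbf{H}_1\textbf{z}=c^2\Vert\textbf{z}\Vert^2\textbf{z}\), and confirms that \(\textbf{v}^T\textbf{H}_1\textbf{v}\ge 0\) for a few test vectors.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def j1(w: Sequence[float], z: Sequence[float]) -> float:
    """J_1(w) = log(1 + exp(-w^T z))."""
    return math.log1p(math.exp(-dot(w, z)))


def h1_closed_form(w: Sequence[float], z: Sequence[float]) -> Matrix:
    """H_1 = c^2 z z^T with c^2 = exp(-w^T z) / (1 + exp(-w^T z))^2."""
    e = math.exp(-dot(w, z))
    c2 = e / (1.0 + e) ** 2
    return [[c2 * zr * zs for zs in z] for zr in z]


def h1_finite_difference(w: Sequence[float], z: Sequence[float], h: float = 1e-4) -> Matrix:
    """Central-difference Hessian of J_1, used only as an independent check."""
    d = len(w)

    def f(r: int, s: int, a: float, b: float) -> float:
        x = list(w)
        x[r] += a * h
        x[s] += b * h
        return j1(x, z)

    return [
        [(f(r, s, 1, 1) - f(r, s, 1, -1) - f(r, s, -1, 1) + f(r, s, -1, -1)) / (4 * h * h) for s in range(d)]
        for r in range(d)
    ]


w = [0.4, 0.1, 0.8]
z = [1.0, -2.0, 0.5]
H1 = h1_closed_form(w, z)
H1_fd = h1_finite_difference(w, z)
c2 = math.exp(-dot(w, z)) / (1.0 + math.exp(-dot(w, z))) ** 2
H1z = [dot(row, z) for row in H1]  # equals c^2 * ||z||^2 * z, so z is an eigenvector
```

Note that a vector orthogonal to \(\textbf{z}\), such as \([2, 1, 0]\) here, gives \(\textbf{v}^T\textbf{H}_1\textbf{v}=0\), which is why \(\textbf{H}_1\) is only semi-definite.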

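As for \(J_{2}\left( \textbf{w}\right) \), on the feasible region \(\textbf{w} \ge 0\) we have \(\Vert \textbf{w}\Vert _{1}=\sum _{r} w_r\), so for \(w_r>0\)

\[ \frac{\partial J_{2}\left( \textbf{w}\right) }{\partial w_r} = \lambda _{1} \]

and

\[ \frac{\partial ^{2} J_{2}\left( \textbf{w}\right) }{\partial w_r \, \partial w_s} = 0 . \]

Thus, the Hessian matrix of \(J_{2}\left( \textbf{w} \right) \) is the zero matrix, which is trivially positive semi-definite. Hence, \( J_{2}\left( \textbf{w} \right) \) is also a convex function. More directly, for \(\lambda _{1}\ge 0\), \(J_{2}\left( \textbf{w} \right) \) is a nonnegative multiple of a norm, and every norm is convex by the triangle inequality, so \(J_{2}\left( \textbf{w} \right) \) is convex even at points where it is not differentiable.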

In summary, \(J_{1}\left( \textbf{w} \right) \), \(J_{2}\left( \textbf{w} \right) \), and \(J_{R}\left( \textbf{w} \right) \) are convex functions, and hence their combination \(J\left( \textbf{w} \right) \) is also convex. This completes the proof.
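As a numerical sanity check of Theorem 2 (not a substitute for the proof), the sketch below samples random pairs of nonnegative weight vectors and tests the convexity inequality \(J(\theta\textbf{u}+(1-\theta)\textbf{v})\le\theta J(\textbf{u})+(1-\theta)J(\textbf{v})\). It assumes that \(J\) is the plain sum \(J_1+J_2+J_R\) and that \(J_R(\textbf{w})=2\sum_r Q_{rr}w_r^2\), i.e. \(\textbf{M}=\operatorname{diag}(\textbf{w})\); the values of \(\textbf{z}\), \(\lambda_1\), and the diagonal of \(\textbf{Q}\) are hypothetical. A nonnegative weight on any of the three terms would not change the conclusion.

```python
import math
import random
from typing import Sequence


def objective(w: Sequence[float], z: Sequence[float], q_diag: Sequence[float], lam1: float) -> float:
    """J(w) = J_1(w) + J_2(w) + J_R(w) under the assumptions stated above."""
    j1 = math.log1p(math.exp(-sum(wi * zi for wi, zi in zip(w, z))))
    j2 = lam1 * sum(abs(wi) for wi in w)
    jr = 2.0 * sum(q * wi ** 2 for q, wi in zip(q_diag, w))
    return j1 + j2 + jr


def count_convexity_violations(
    z: Sequence[float], q_diag: Sequence[float], lam1: float, trials: int = 2000, seed: int = 0
) -> int:
    """Count sampled (u, v, theta) triples that break the convexity inequality."""
    rng = random.Random(seed)
    d = len(z)
    violations = 0
    for _ in range(trials):
        u = [rng.uniform(0.0, 3.0) for _ in range(d)]
        v = [rng.uniform(0.0, 3.0) for _ in range(d)]
        theta = rng.random()
        mix = [theta * a + (1.0 - theta) * b for a, b in zip(u, v)]
        lhs = objective(mix, z, q_diag, lam1)
        rhs = theta * objective(u, z, q_diag, lam1) + (1.0 - theta) * objective(v, z, q_diag, lam1)
        if lhs > rhs + 1e-12:  # small slack for floating-point rounding
            violations += 1
    return violations


print(count_convexity_violations(z=[1.5, -0.5, 0.2, 2.0], q_diag=[0.3, 1.0, 0.0, 0.7], lam1=0.1))  # 0
```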

Appendix C: Proof of Theorem 3

Note that \(\textbf{z}(t)\) is computed from \(\textbf{w}(t-1)\) in the t-th iteration. Given \(\textbf{z}(t)\) and \(\textbf{w}(t-1)\), \(\textbf{w}(t)\) is obtained using the gradient descent scheme in (27) and the truncation rule in (28). Because the objective function is convex in \(\textbf{w}\) for fixed \(\textbf{z}(t)\) (Theorem 2), this update attains its minimum \(J(\textbf{w}(t) \mid \textbf{z}(t))\) for fixed \(\textbf{z}(t)\). In other words,

which completes the proof of Theorem 3.
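The inner step described in this proof, a gradient step on \(\textbf{w}\) for fixed \(\textbf{z}(t)\) followed by truncation, can be sketched as projected gradient descent. This is a hedged illustration rather than the paper's algorithm: the exact update (27) and truncation rule (28) are not reproduced in this section, so the sketch assumes the truncation is \(w_r\leftarrow\max(w_r,0)\), that \(J=J_1+J_2+J_R\) with \(J_R(\textbf{w})=2\sum_rQ_{rr}w_r^2\), and a fixed step size \(\eta=1/L\) with \(L=\Vert\textbf{z}\Vert^2/4+4\max_rQ_{rr}\), which bounds the curvature of the objective on \(\textbf{w}\ge 0\). With this step size the objective cannot increase between iterations, and the \(L_1\) term drives the weights of weakly discriminative features exactly to zero. All names and numbers are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def objective(w: Sequence[float], z: Sequence[float], q_diag: Sequence[float], lam1: float) -> float:
    """J(w) = log(1 + exp(-w^T z)) + lam1 * ||w||_1 + 2 * sum_r Q_rr w_r^2 (assumed form)."""
    a = sum(wi * zi for wi, zi in zip(w, z))
    j_r = 2.0 * sum(q * wi ** 2 for q, wi in zip(q_diag, w))
    return math.log1p(math.exp(-a)) + lam1 * sum(abs(wi) for wi in w) + j_r


def gradient(w: Sequence[float], z: Sequence[float], q_diag: Sequence[float], lam1: float) -> List[float]:
    """Gradient of J on the feasible set w >= 0, where ||w||_1 = sum_r w_r."""
    a = sum(wi * zi for wi, zi in zip(w, z))
    sig = 1.0 / (1.0 + math.exp(a))  # equals exp(-a) / (1 + exp(-a))
    return [-sig * zi + lam1 + 4.0 * q * wi for wi, zi, q in zip(w, z, q_diag)]


def projected_gradient_descent(
    z: Sequence[float], q_diag: Sequence[float], lam1: float, w0: Sequence[float], iters: int = 200
) -> Tuple[List[float], List[float]]:
    """Gradient step followed by truncation at zero, with z held fixed."""
    lipschitz = sum(zi * zi for zi in z) / 4.0 + 4.0 * max(q_diag)
    eta = 1.0 / lipschitz
    w = list(w0)
    history = [objective(w, z, q_diag, lam1)]
    for _ in range(iters):
        g = gradient(w, z, q_diag, lam1)
        w = [max(wi - eta * gi, 0.0) for wi, gi in zip(w, g)]  # assumed truncation rule
        history.append(objective(w, z, q_diag, lam1))
    return w, history


w, history = projected_gradient_descent(
    z=[2.0, 0.05, -1.0], q_diag=[0.1, 0.1, 0.1], lam1=0.2, w0=[1.0, 1.0, 1.0]
)
print(w)  # first weight stays positive; the other two are truncated to exactly 0.0
```

In CFW, \(\textbf{z}(t)\) is recomputed from the current weights at every outer iteration; this sketch holds \(\textbf{z}\) fixed to isolate the inner minimization that the proof relies on. The zero weights illustrate how the \(L_1\) term, combined with truncation, determines the number of selected features without a preset count.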

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Chen, X., Zhang, L., Zhao, L. et al. Constrained feature weighting for semi-supervised learning. Appl Intell 54, 9987–10006 (2024). https://doi.org/10.1007/s10489-024-05691-9
