Abstract

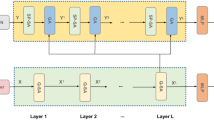

Text Visual Question Answering (TextVQA) task aims to enable models to read and answer questions based on images with text. Existing attention-based methods for TextVQA tasks often face challenges in effectively aligning local features between modalities during multimodal information interaction. This misalignment hinders their performance in accurately answering questions based on images with text. To address this issue, the Multiscale Attention Pre-training Model (MAPM) is proposed to enhance multimodal feature fusion. MAPM introduces the multiscale attention modules, which facilitate finegrained local feature enhancement and global feature fusion across modalities. By adopting these modules, MAPM achieves superior performance in aligning and integrating visual and textual information. Additionally, MAPM benefits from being pre-trained with scene text, employing three pre-training tasks: masked language model, visual region matching, and OCR visual text matching. This pre-training process establishes effective semantic alignment relationships among different modalities. Experimental evaluations demonstrate the superiority of MAPM, achieving a 1.2% higher accuracy compared to state-of-the-art models on the TextVQA dataset, especially when handling numerical data within images.

Graphical abstract

Multiscale Attention Pre-training Model (MAPM) is proposed to enhance local fine-grained features (Joint Attention Module) and effectively addresses redundancy in global features (Global Attention Module) in text VQA task. Three pre-training tasks are designed to enhance the model’s expressive power and address the issue of cross modal semantic alignment

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.References

Almazán J, Gordo A, Fornés A et al (2014) Word spotting and recognition with embedded attributes. IEEE Trans Pattern Anal Mach Intell 36(12):2552–2566

Biten AF, Tito R, Mafla A et al (2019) Scene text visual question answering. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 4291–4301

Biten AF, Litman R, Xie Y et al (2022) Latr: layout-aware transformer for scenetext vqa. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 16548–16558

Chia YK, Han VTY, Ghosal D et al (2024) Puzzlevqa: diagnosing multimodal reasoning challenges of language models with abstract visual patterns. arXiv:2403.13315

Devlin J, Chang MW, Lee K et al (2018) Bert: pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805

Gao D, Li K, Wang R et al (2020) Multi-modal graph neural network for joint reasoning on vision and scene text. In: Proceedingsof the IEEE/CVF conference on computer vision and pattern recognition, pp 12746–12756

Guo Z, Han D (2023) Sparse co-attention visual question answering networks based on thresholds. Appl Intell 53(1):586–600

Hu R, Singh A, Darrell T et al (2020) Iterative answer prediction with pointer-augmented multimodal transformers for textvqa. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 9992–10002

Hu Z, Yang P, Jiang Y et al (2024) Prompting large language model with context and pre-answer for knowledge-based vqa. Pattern Recogn 151:110399

Joulin A, Grave E, Bojanowski P et al (2016) Fasttext.zip: compressing text classification models. arXiv preprint arXiv:1612.03651

Kant Y, Batra D, Anderson P et al (2020) Spatially aware multimodal transformers for textvqa. In: Computer vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part IX, pp 715–732

Liu Y, Zhang S, Jin L et al (2019) Omnidirectional scene text detection with sequential-free box discretization, pp 3052–3058

Lu J, Batra D, Parikh D et al (2019) Vilbert: pretraining task-agnostic visiolinguistic representations for vision-and-language tasks. Adv Neural Inf Process Syst 32

Lu X, Fan Z, Wang Y et al (2021) Localize, group, and select: boosting text-vqa by scene text modeling. 2021 IEEE/CVF International conference on computer vision workshops (ICCVW), pp 2631–2639

Peng L, Yang Y, Wang Z et al (2022) Mra-net: improving vqa via multi-modal relation attention network. IEEE Trans Pattern Anal Mach Intell 44(1):318–329

Shen X, Han D, Guo Z et al (2022) Local self-attention in transformer for visual question answering. Appl Intell, pp 1–18

Singh A, Natarajan V, Shah M et al (2019) Towards vqa models that can read. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 8317–8326

Vaswani A, Shazeer N, Parmar N et al (2017) Attention is all you need. Adv Neural Inf Process Syst 30

Xiao J, Shang X, Yao A et al (2021) Next-qa: next phase of question-answering to explaining temporal actions. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp 9777–9786

Yang Z, Lu Y, Wang J et al (2021) Tap: text-aware pre-training for text-vqa and text-caption. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 8751–8761

Yang Z, Gan Z, Wang J et al (2022) An empirical study of gpt-3 for fewshot knowledge-based vqa. Proceedings of the AAAI Conference on Artificial Intelligence 36(3):3081–3089

Zhu Q, Gao C, Wang P et al (2021) Simple is not easy: a simple strong baseline for textvqa and textcaps. In: Proceedings of the AAAI conference on artificial intelligence, pp 3608–3615

Acknowledgements

This work is supported by National Natural Science Foundation of China (61807002).

Funding

This work is supported by National Natural Science Foundation of China (61807002).

Author information

Authors and Affiliations

Contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by Yingying Li and Yue Yang. The first draft of the manuscript was written by Yue Yang and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Conflict of interest/Competing interests

There is no conflict of interest/Competing interests.

Ethics approval

All authors promise that our work meets the requirements of ethical responsibilities.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Yang, Y., Yu, Y. & Li, Y. MAPM: multiscale attention pre-training model for TextVQA. Appl Intell 54, 10401–10413 (2024). https://doi.org/10.1007/s10489-024-05727-0

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-024-05727-0