Abstract

The application of attention mechanisms, especially channel attention, has achieved great success in computer vision. However, existing methods mainly focus on designing more sophisticated attention modules for better performance while ignoring global and local contexts in the frequency domain. This work focuses on channel relationships and proposes a novel architectural unit called the Frequency Global and Local (FGL) context block. It adaptively recalibrates global-local channel-wise feature responses by explicitly modeling interdependencies between channels in the frequency domain. The proposed FGL module is lightweight, efficient, and generalizes well across different datasets. Meanwhile, the FGL context block significantly improves the performance of existing convolutional neural networks (CNNs) at a slight computational cost. We evaluate the FGL module extensively on image classification, object detection, and semantic segmentation with ResNet, MobileNetV2, and MobileNeXt backbones. The experimental results indicate that our module is more efficient than its counterparts. Our model is open-sourced at https://github.com/YunDuanFei/FGL.
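The abstract does not spell out the block's internals, so the following is only a hypothetical sketch of the general idea, not the FGL implementation (the linked repository is the authoritative source). It assumes a squeeze-and-excitation-style recalibration in which each channel's descriptor is built from a few low-frequency 2-D DCT coefficients, computed once over the whole feature map (global context) and averaged over a grid of patches (local context). It relies on the fact, noted in FcaNet, that the (0, 0) DCT coefficient is proportional to global average pooling. All function names, the chosen frequencies, the 2×2 grid, and the reduction ratio `r` are illustrative assumptions.

```python
import numpy as np


def dct_basis(n: int, k: int) -> np.ndarray:
    """Unnormalized 1-D DCT-II basis vector of frequency k over n samples."""
    i = np.arange(n)
    return np.cos(np.pi * k * (i + 0.5) / n)


def freq_descriptor(x: np.ndarray, u: int, v: int) -> np.ndarray:
    """Project every channel of x (C, H, W) onto the 2-D DCT basis (u, v)."""
    _, h, w = x.shape
    basis = np.outer(dct_basis(h, u), dct_basis(w, v))  # (H, W)
    return np.einsum("chw,hw->c", x, basis)  # one coefficient per channel


def context(x: np.ndarray, freqs) -> np.ndarray:
    """Sum of the selected DCT coefficients per channel, divided by the map area."""
    _, h, w = x.shape
    return sum(freq_descriptor(x, u, v) for u, v in freqs) / (h * w)


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def freq_global_local_attention(x, w1, w2, freqs=((0, 0), (0, 1), (1, 0)), grid=2):
    """Hypothetical SE-style channel recalibration with frequency-domain context.

    x: (C, H, W) feature map; w1: (C//r, C) and w2: (C, C//r) excitation weights.
    """
    _, h, w = x.shape
    # Global context: low-frequency DCT coefficients of the whole map.
    global_ctx = context(x, freqs)
    # Local context: the same coefficients on a grid x grid partition, averaged.
    # Rows/columns that do not divide evenly by `grid` are ignored in this sketch.
    ph, pw = h // grid, w // grid
    patches = [x[:, i * ph:(i + 1) * ph, j * pw:(j + 1) * pw]
               for i in range(grid) for j in range(grid)]
    local_ctx = np.mean([context(p, freqs) for p in patches], axis=0)
    # Excitation: bottleneck MLP + sigmoid yields one weight in (0, 1) per channel.
    z = np.maximum(w1 @ (global_ctx + local_ctx), 0.0)
    a = sigmoid(w2 @ z)
    # Recalibration: rescale each channel by its attention weight.
    return x * a[:, None, None], a


# Usage with random features and random (untrained) excitation weights.
rng = np.random.default_rng(0)
C, H, W, r = 16, 8, 8, 4
x = rng.standard_normal((C, H, W))
w1 = rng.standard_normal((C // r, C)) * 0.1
w2 = rng.standard_normal((C, C // r)) * 0.1
y, a = freq_global_local_attention(x, w1, w2)
print(y.shape, a.shape)
```

With `freqs=((0, 0),)` and `grid=1`, both context terms collapse to global average pooling, so the sketch degenerates (up to a factor of 2 in the descriptor) to a plain SE block; the non-DC frequencies and the patch grid are one plausible way to inject the frequency-domain and local information the abstract describes.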

Data Availability

All authors certify that they have no affiliations with or involvement in any organization or entity with any financial interest or non-financial interest in the subject matter or materials discussed in this manuscript.

References

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: 2016 IEEE conference on computer vision and pattern recognition (CVPR), pp 770–778. https://doi.org/10.1109/CVPR.2016.90

Cao Y, Xu J, Lin S, Wei F, Hu H (2019) Gcnet: non-local networks meet squeeze-excitation networks and beyond. In: 2019 IEEE/CVF international conference on computer vision workshop (ICCVW), pp 1971–1980. https://doi.org/10.1109/ICCVW.2019.00246

Duan K, Bai S, Xie L, Qi H, Huang Q, Tian Q (2023) Centernet++ for object detection. IEEE Trans Pattern Anal Mach Intell, pp 1–14. https://doi.org/10.1109/TPAMI.2023.3342120

Wang X, Girshick R, Gupta A, He K (2018) Non-local neural networks. In: 2018 IEEE/CVF conference on computer vision and pattern recognition, pp 7794–7803. https://doi.org/10.1109/CVPR.2018.00813

Hu J, Shen L, Albanie S, Sun G, Wu E (2020) Squeeze-and-excitation networks. IEEE Trans Pattern Anal Mach Intell 42(8):2011–2023. https://doi.org/10.1109/TPAMI.2019.2913372

Hong F, Kong L, Zhou H, Zhu X, Li H, Liu Z (2024) Unified 3d and 4d panoptic segmentation via dynamic shifting networks. IEEE Trans Pattern Anal Mach Intell, pp 1–16. https://doi.org/10.1109/TPAMI.2023.3349304

Xie J, Cai Y, Chen J, Xu R, Wang J, Li Q (2024) Knowledge-augmented visual question answering with natural language explanation. IEEE Trans Image Process, pp 1–1. https://doi.org/10.1109/TIP.2024.3379900

Krizhevsky A, Sutskever I, Hinton GE (2017) Imagenet classification with deep convolutional neural networks. Commun ACM 60(6):84–90. https://doi.org/10.1145/3065386

Ding Y, Ma Z, Wen S, Xie J, Chang D, Si Z, Wu M, Ling H (2021) Ap-cnn: weakly supervised attention pyramid convolutional neural network for fine-grained visual classification. IEEE Trans Image Process 30:2826–2836. https://doi.org/10.1109/TIP.2021.3055617

Yang G, Rota P, Alameda-Pineda X, Xu D, Ding M, Ricci E (2022) Variational structured attention networks for deep visual representation learning. IEEE Transactions on Image Processing, pp 1–1. https://doi.org/10.1109/TIP.2021.3137647

Chen Z, Yang J, Chen L, Jiao H (2022) Garbage classification system based on improved shufflenet v2. Resour Conserv Recycl 178:106090. https://doi.org/10.1016/j.resconrec.2021.106090

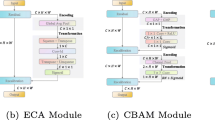

Wang Q, Wu B, Zhu P, Li P, Zuo W, Hu Q (2020) Eca-net: efficient channel attention for deep convolutional neural networks. In: 2020 IEEE/CVF conference on computer vision and pattern recognition (CVPR), pp 11531–11539. https://doi.org/10.1109/CVPR42600.2020.01155

Woo S, Park J, Lee J-Y, Kweon IS (2018) Cbam: convolutional block attention module. In: Computer vision – ECCV 2018: 15th european conference, Munich, Germany, September 8–14, 2018, Proceedings, Part VII, pp 3–19. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-030-01234-2_1

Fu J, Liu J, Tian H, Li Y, Bao Y, Fang Z, Lu H (2019) Dual attention network for scene segmentation. In: 2019 IEEE/CVF conference on computer vision and pattern recognition (CVPR), pp 3141–3149.https://doi.org/10.1109/CVPR.2019.00326

Sagar A (2022) Dmsanet: dual multi scale attention network. In: Image analysis and processing – iciap 2022: 21st international conference, Lecce, Italy, May 23–27, 2022, Proceedings, Part I, pp 633–645. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-031-06427-2_53

Hou Q, Zhou D, Feng J (2021) Coordinate attention for efficient mobile network design. In: 2021 IEEE/CVF conference on computer vision and pattern recognition (CVPR), pp 13708–13717.https://doi.org/10.1109/CVPR46437.2021.01350

Hu J, Shen L, Albanie S, Sun G, Vedaldi A (2018) Gather-excite: exploiting feature context in convolutional neural networks. In: Proceedings of the 32nd international conference on neural information processing systems. NIPS’18, pp 9423–9433. Curran Associates Inc., Red Hook, NY, USA

Liu H, Liu F, Fan X, Huang D (2022) Polarized self-attention: towards high-quality pixel-wise mapping. Neurocomputing 506:158–167. https://doi.org/10.1016/j.neucom.2022.07.054

Qin Z, Zhang P, Wu F, Li X (2021) Fcanet: frequency channel attention networks. In: 2021 IEEE/CVF international conference on computer vision (ICCV), pp 763–772. https://doi.org/10.1109/ICCV48922.2021.00082

Hu L, Kong Y, Li J, Li X (2023) Effective local-global transformer for natural image matting. IEEE Trans Circ Syst Video Technol 33(8):3888–3898. https://doi.org/10.1109/TCSVT.2023.3234983

Li K, Wang Y, Zhang J, Gao P, Song G, Liu Y, Li H, Qiao Y (2023) Uniformer: unifying convolution and self-attention for visual recognition. IEEE Trans Pattern Anal Mach Intell 45(10):12581–12600. https://doi.org/10.1109/TPAMI.2023.3282631

Gao Z, Xie J, Wang Q, Li P (2019) Global second-order pooling convolutional networks. In: 2019 IEEE/CVF conference on computer vision and pattern recognition (CVPR), pp 3019–3028. https://doi.org/10.1109/CVPR.2019.00314

Huang Z, Wang X, Wei Y, Huang L, Shi H, Liu W, Huang TS (2023) Ccnet: criss-cross attention for semantic segmentation. IEEE Trans Pattern Anal Mach Intell 45(6):6896–6908. https://doi.org/10.1109/TPAMI.2020.3007032

Chen Y, Kalantidis Y, Li J, Yan S, Feng J (2018) A \(^{\wedge }\)2-nets: Double attention networks. In: Bengio S, Wallach H, Larochelle H, Grauman K, Cesa-Bianchi N, Garnett R (eds.) Advances in neural information processing systems, vol 31. https://proceedings.neurips.cc/paper_files/paper/2018/file/e165421110ba03099a1c0393373c5b43-Paper.pdf

Bello I, Zoph B, Le Q, Vaswani A, Shlens J (2019) Attention augmented convolutional networks. In: 2019 IEEE/CVF international conference on computer vision (ICCV), pp 3285–3294. https://doi.org/10.1109/ICCV.2019.00338

Zhao H, Zhang Y, Liu S, Shi J, Loy CC, Lin D, Jia J (2018) Psanet: point-wise spatial attention network for scene parsing. In: Ferrari V, Hebert M, Sminchisescu C, Weiss Y (eds) Computer vision - ECCV 2018. Springer, Cham, pp 270–286

Misra D, Nalamada T, Arasanipalai AU, Hou Q (2021) Rotate to attend: convolutional triplet attention module. In: 2021 IEEE winter conference on applications of computer vision (WACV), pp 3138–3147. https://doi.org/10.1109/WACV48630.2021.00318

Li Y, Yao T, Pan Y, Mei T (2023) Contextual transformer networks for visual recognition. IEEE Trans Pattern Anal Mach Intell 45(2):1489–1500. https://doi.org/10.1109/TPAMI.2022.3164083

Zou S, Zuo X, Wang S, Qian Y, Guo C, Cheng L (2023) Human pose and shape estimation from single polarization images. IEEE Trans Multimed 25:3560–3572. https://doi.org/10.1109/TMM.2022.3162469

Liu M, Wu S, Chen R, Lin Z, Wang Y, Meijering E ((2024)) Brain image segmentation for ultrascale neuron reconstruction via an adaptive dual-task learning network. IEEE Transactions on Medical Imaging, pp 1–1. https://doi.org/10.1109/TMI.2024.3367384

Liu N, Nan K, Zhao W, Yao X, Han J (2023) Learning complementary spatial–temporal transformer for video salient object detection. IEEE Transactions on Neural Networks and Learning Systems, pp 1–11. https://doi.org/10.1109/TNNLS.2023.3243246

Zhang S, Yu W, Jiang F, Nie L, Yao H, Huang Q, Tao D (2024) Stereo image restoration via attention-guided correspondence learning. IEEE Trans Pattern Anal Mach Intell, pp 1–17. https://doi.org/10.1109/TPAMI.2024.3357709

Fu J, Xie Q, Meng D, Xu Z (2024) Rotation equivariant proximal operator for deep unfolding methods in image restoration. IEEE Trans Pattern Anal Mach Intell, pp 1–17. https://doi.org/10.1109/TPAMI.2024.3383532

Kim J, Kim S, Kim ST, Ro YM (2022) Robust perturbation for visual explanation: Cross-checking mask optimization to avoid class distortion. IEEE Trans Image Process 31:301–313. https://doi.org/10.1109/TIP.2021.3130526

Ralekar C, Choudhary S, Gandhi TK, Chaudhury S (2024) Development of character recognition model inspired by visual explanations. IEEE Trans Artif Intell 5(3):1362–1372. https://doi.org/10.1109/TAI.2023.3289167

Chu X, Xie X, Ye S, Lu H, Xiao H, Yuan Z, Zhu-Tian C, Zhang H, Wu Y (2022) Tivee: visual exploration and explanation of badminton tactics in immersive visualizations. IEEE Trans Vis Comput Graph 28(1):118–128. https://doi.org/10.1109/TVCG.2021.3114861

Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D (2020) Grad-cam: visual explanations from deep networks via gradient-based localization. Int J Comput Vision 128(2):336–359. https://doi.org/10.1007/s11263-019-01228-7

Sandler M, Howard A, Zhu M, Zhmoginov A, Chen L-C (2018) Mobilenetv2: inverted residuals and linear bottlenecks. In: 2018 IEEE/CVF conference on computer vision and pattern recognition, pp 4510–4520. https://doi.org/10.1109/CVPR.2018.00474

Zhou D, Hou Q, Chen Y, Feng J, Yan S (2020) Rethinking bottleneck structure for efficient mobile network design. In: Computer vision – ECCV 2020: 16th European conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part III, pp 680–697. Springer, Berlin, Heidelberg (. https://doi.org/10.1007/978-3-030-58580-8_40

Paszke A, Gross S, Massa F, Lerer A, Bradbury J, Chanan G, Killeen T, Lin Z, Gimelshein N, Antiga L, Desmaison A, Köpf A, Yang E, DeVito Z, Raison M, Tejani A, Chilamkurthy S, Steiner B, Fang L, Bai J, Chintala S (2019) PyTorch: An Imperative Style, High-performance Deep Learning Library. Curran Associates Inc., Red Hook, NY, USA

Wightman R (2019) PyTorch Image Models. GitHub. https://doi.org/10.5281/zenodo.4414861

Szegedy C, Vanhoucke V, Ioffe S, Shlens J, Wojna Z (2016) Rethinking the inception architecture for computer vision. In: 2016 IEEE conference on computer vision and pattern recognition (CVPR), pp 2818–2826. https://doi.org/10.1109/CVPR.2016.308

Russakovsky O, Deng J, Su H, Krause J, Satheesh S, Ma S, Huang Z, Karpathy A, Khosla A, Bernstein M, Berg AC, Fei-Fei L (2015) Imagenet large scale visual recognition challenge. Int J Comput Vision 115(3):211–252. https://doi.org/10.1007/s11263-015-0816-y

Zhang Q-L, Yang Y-B (2021) Sa-net: shuffle attention for deep convolutional neural networks. In: ICASSP 2021 - 2021 IEEE international conference on acoustics, speech and signal processing (ICASSP), pp 2235–2239. https://doi.org/10.1109/ICASSP39728.2021.9414568

Lee H, Kim H-E, Nam H (2019) Srm: a style-based recalibration module for convolutional neural networks. In: 2019 IEEE/CVF international conference on computer vision (ICCV), pp 1854–1862. https://doi.org/10.1109/ICCV.2019.00194

Zhang Q-L, Rao L, Yang Y (2021) Group-cam: group score-weighted visual explanations for deep convolutional networks. arXiv:2103.13859

Liu W, Anguelov D, Erhan D, Szegedy C, Reed S, Fu C-Y, Berg AC (2016) Ssd: single shot multibox detector. In: Leibe B, Matas J, Sebe N, Welling M(eds.) Computer Vision – ECCV 2016, pp 21–37. Springer, Cham

Chen L, Papandreou G, Schroff F, Adam H (2017) Rethinking atrous convolution for semantic image segmentation. CoRR arXiv:1706.05587

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant U2333209, in part by the National Natural Science Foundation of China under Grant 12126606, in part by the Science and Technology Planning Project of Sichuan Province, China, under Grant 23DYF2913, in part by the R&D project of Pazhou Lab (Huangpu) under Grant 2023K0605, and in part by the Zigong-Sichuan University School Cooperation Program under Grant 2023CDZG-8.

Author information

Contributions

All authors have contributed equally.

Ethics declarations

Competing Interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Ethical Approval

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Liu, Y., Liu, Y., Li, H. et al. FGLNet: frequency global and local context channel attention networks. Appl Intell 54, 11325–11341 (2024). https://doi.org/10.1007/s10489-024-05729-y

DOI: https://doi.org/10.1007/s10489-024-05729-y