Abstract

Recently, the rich content of Chinese legal documents has attracted considerable scholarly attention. Legal Relational Triple Extraction, which identifies the relations between pairs of entities in legal case texts, is a critical means of enabling machines to understand their semantic information, yet it remains a significant challenge in Natural Language Processing. The challenge is compounded by the intricate nature of legal language and the high cost of human annotation. Moreover, existing models often overlook cross-domain features. To address these issues, we introduce LegalATLE, a new method for legal Relational Triple Extraction that integrates active learning and transfer learning, reducing the model’s reliance on annotated data and improving its performance in the target domain. Our model uses active learning to assess and select samples with high information value. At the same time, it applies domain adaptation techniques to transfer knowledge from the source domain, improving the model’s generalization and accuracy. Additionally, we manually annotated a new theft-related triple dataset to serve as the target domain. Comprehensive experiments show that LegalATLE outperforms existing efficient models by approximately 1.5%, reaching 92.90% on the target domain. Notably, when trained on only 4% and 5% of the full dataset, LegalATLE performs about 10% better than other models, demonstrating its effectiveness in data-scarce scenarios.
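The abstract states that the model "assesses and selects samples with high information value" but does not give the selection criterion. As a hedged illustration only, the sketch below uses entropy-based uncertainty sampling, one common active-learning heuristic; it is not necessarily the scoring rule LegalATLE uses. It assumes a trained extractor already outputs one probability distribution per tagging decision (for example, per token pair) for each unlabeled sentence. The function names `entropy`, `sentence_uncertainty` and `select_batch`, and the toy `pool` data, are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (natural log) of one predicted label distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def sentence_uncertainty(tag_distributions: List[Sequence[float]]) -> float:
    """Hypothetical scoring rule: mean entropy over all tagging decisions in a sentence."""
    if not tag_distributions:
        return 0.0
    return sum(entropy(d) for d in tag_distributions) / len(tag_distributions)


def select_batch(pool: Dict[str, List[Sequence[float]]], budget: int) -> List[str]:
    """Return the ids of the `budget` most uncertain unlabeled sentences to send for annotation."""
    ranked = sorted(pool, key=lambda sid: sentence_uncertainty(pool[sid]), reverse=True)
    return ranked[:budget]


if __name__ == "__main__":
    # Toy pool: sentence id -> predicted distributions over three relation labels.
    pool = {
        "s1": [[0.98, 0.01, 0.01], [0.95, 0.03, 0.02]],  # model is confident
        "s2": [[0.34, 0.33, 0.33], [0.50, 0.25, 0.25]],  # model is unsure
        "s3": [[0.70, 0.20, 0.10]],
    }
    print(select_batch(pool, budget=2))  # -> ['s2', 's3']
```

In a full active-learning loop, the selected sentences would be annotated, added to the training set, and the model retrained before the next selection round; averaging entropy (rather than summing it) keeps long and short sentences on a comparable scale.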

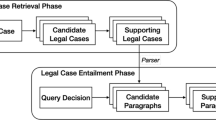

Graphical abstract

Data Availability and Access

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Change history

04 November 2024

A Correction to this paper has been published: https://doi.org/10.1007/s10489-024-05844-w

Acknowledgements

This work is supported by the National Key Research and Development Program of China (No. 2022YFC3301801) and the Fundamental Research Funds for the Central Universities (No. DUT22ZD205).

Author information

Contributions

Conceptualization: Haiguang Zhang and Yuanyuan Sun; Methodology: Haiguang Zhang and Yuanyuan Sun; Formal analysis and investigation: Haiguang Zhang, Yuanyuan Sun and Bo Xu; Writing - original draft preparation: Haiguang Zhang and Bo Xu; Writing - review and editing: Yuanyuan Sun, Bo Xu and Hongfei Lin; Supervision: Yuanyuan Sun, Bo Xu and Hongfei Lin.

Ethics declarations

Competing Interests

The authors have no competing interests to declare that are relevant to the content of this article.

Ethical and Informed Consent for Data Used

All authors consented to participate, and the article was submitted with their consent.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original version of this article contained an error in the Figure 7 image.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhang, H., Sun, Y., Xu, B. et al. LegalATLE: an active transfer learning framework for legal triple extraction. Appl Intell 54, 12835–12850 (2024). https://doi.org/10.1007/s10489-024-05842-y

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-024-05842-y