Abstract

Semantic decoding, the prediction of the semantic information carried by a stimulus from the neural signals it evokes in a subject, is an active area of research. Previous studies have focused mainly on visual perception and have paid relatively little attention to complex auditory decoding. Moreover, simple linear models do not achieve optimal performance when mapping between brain signals and natural sounds. Therefore, a robust approach is proposed that combines a pretrained audio tagging model with a nonlinear multilayer perceptron to map non-invasively measured brain activity onto deep learning features, from which sound semantics are generated. The model is evaluated on previously unseen subjects: it is trained without any data from the target subjects and then predicts natural-sound semantics from their fMRI data. In a study with 30 subjects, the proposed framework achieves 23.21% Top-1 and 51.88% Top-5 accuracy, significantly exceeding both the baseline and other classical algorithms. The approach advances the decoding of auditory neural activity with the help of deep neural networks, and the proposed model successfully completes a challenging cross-subject decoding task.
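
The Top-1 and Top-5 figures quoted in the abstract are top-k accuracies: a prediction counts as correct when the true sound category is among the k highest-scoring categories. The following is a minimal sketch of that metric, assuming the decoder outputs one score per category for each fMRI sample. The function name `top_k_accuracy` and the toy scores are hypothetical illustrations, not the authors' evaluation code or data.

```python
def top_k_accuracy(scores, labels, k):
    """Fraction of samples whose true label is among the k highest-scoring classes.

    scores: list of per-sample score lists (one score per category).
    labels: list of true category indices, one per sample.
    """
    hits = 0
    for row, label in zip(scores, labels):
        # Rank category indices from highest to lowest score.
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return hits / len(labels)


# Toy data (hypothetical): 4 samples scored over 6 sound categories.
scores = [
    [0.10, 0.50, 0.20, 0.05, 0.08, 0.07],  # true category 1 is ranked 1st
    [0.30, 0.10, 0.40, 0.08, 0.07, 0.05],  # true category 0 is ranked 2nd
    [0.05, 0.10, 0.15, 0.20, 0.30, 0.19],  # true category 0 is ranked last
    [0.02, 0.03, 0.05, 0.60, 0.20, 0.10],  # true category 3 is ranked 1st
]
labels = [1, 0, 0, 3]

print(top_k_accuracy(scores, labels, k=1))  # 0.5
print(top_k_accuracy(scores, labels, k=2))  # 0.75
```

In a cross-subject setting such as the one described, the scores passed to this function would come only from subjects held out of training.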

Data availability

The data that support the findings of this study are available from the authors upon reasonable request and with permission of corresponding authors of the dataset.

References

Kell AJE, Yamins DLK, Shook EN, Norman-Haignere SV, McDermott JH (2018) A task-optimized neural network replicates human auditory behavior, predicts brain responses, and reveals a cortical processing hierarchy. Neuron 98(1):630-644(e16)

Norman-Haignere SV, McDermott JH (2018) Neural responses to natural and model-matched stimuli reveal distinct computations in primary and nonprimary auditory cortex. PLoS Biol 16(7):e2005127

Casey MA (2017) Music of the 7ths: Predicting and decoding multivoxel fmri responses with acoustic, schematic, and categorical music features. Front Psychol 8(7):01179

Nakai T, Koide-Majima N, Nishimoto S (2021) Correspondence of categorical and feature-based representations of music in the human brain. Brain Behav 11(1):e01936

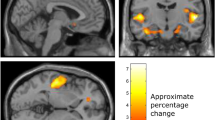

Santoro R, Moerel M, De Martino F, Valente G, Ugurbil K, Yacoub E, Formisano E (2017) Reconstructing the spectrotemporal modulations of real-life sounds from fMRI response patterns. Proc Natl Acad Sci USA 114(18):4799–4804

Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, Erhan D, Vanhoucke V, Rabinovich A (2015) Going deeper with convolutions. In: Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR), pp 1–9

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR), pp 770–778

Huang G, Liu Z, Van Der Maaten L, Weinberger KQ (2017) Densely connected convolutional networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR), pp 2261–2269

Bahmei B, Birmingham E, Arzanpour S (2022) CNN-RNN and Data Augmentation Using Deep Convolutional Generative Adversarial Network for Environmental Sound Classification. IEEE Signal Process Lett 29:682–686

Hershey S, Chaudhuri S, Ellis DPW, Gemmeke JF, Jansen A, Moore RC, Plakal M, Platt D, Saurous RA, Seybold B, Slaney M, Weiss RJ, Wilson K (2017) CNN architectures for large-scale audio classification. In: Proceedings of the international conference on acoustics, speech and signal processing (ICASSP), pp 131–135

Kong Q, Cao Y, Iqbal T, Wang Y, Wang W, Plumbley MD (2020) Panns: Large-scale pretrained audio neural networks for audio pattern recognition. IEEE/ACM Trans Audio Speech Lang Process 28:2880–2894

Gemmeke JF, Ellis DPW, Freedman D, Jansen A, Lawrence W, Moore RC, Plakal M, Ritter M (2017) Audio set: an ontology and human-labeled dataset for audio events. In: Proceedings of the international conference on acoustics, speech and signal processing (ICASSP), pp 776–780

Guclu U, van Gerven MAJ (2017) Increasingly complex representations of natural movies across the dorsal stream are shared between subjects. Neuroimage 145:329–336

Huth AG, de Heer WA, Griffiths TL, Theunissen FE, Gallant JL (2016) Natural speech reveals the semantic maps that tile human cerebral cortex. Nature 532(7600):453–458

Pereira F, Lou B, Pritchett B, Ritter S, Gershman SJ, Kanwisher N, Botvinick M, Fedorenko E (2018) Toward a universal decoder of linguistic meaning from brain activation. Nat Commun 9:963

Nishida S, Nishimoto S (2018) Decoding naturalistic experiences from human brain activity via distributed representations of words. Neuroimage 180:232–242

Vodrahalli K, Chen P-H, Liang Y, Baldassano C, Chen J, Yong E, Honey C, Hasson U, Ramadge P, Norman KA, Arora S (2018) Mapping between fmri responses to movies and their natural language annotations. Neuroimage 180:223–231

Matsuo E, Kobayashi I, Nishimoto S, Nishida S, Asoh H (2018) Describing semantic representations of brain activity evoked by visual stimuli. In: Proceedings of the IEEE international conference on systems, man, and cybernetics (SMC), pp 576–583

Wen H, Shi J, Zhang Y, Lu K-H, Cao J, Liu Z (2018) Neural Encoding and Decoding with Deep Learning for Dynamic Natural Vision. Cereb Cortex 28(12):4136–4160

Yotsutsuji S, Lei M, Akama H (2021) Evaluation of Task fMRI Decoding With Deep Learning on a Small Sample Dataset. Front Neuroinform 15:577451

Piczak KJ (2015) ESC: Dataset for environmental sound classification. In: Proceedings of the acm international conference on multimedia (ACM), pp 1015–1018

Berezutskaya J, Freudenburg Z, Ambrogioni VL, Guclu U, van Gerven MAJ, Ramsey NF (2020) Cortical network responses map onto data-driven features that capture visual semantics of movie fragments. Sci Rep 10(1):12077

Zhang H, Ciss M, Dauphin YN, Lopez-Paz D (2018) mixup: Beyond empirical risk minimization. In: Proceedings of the international conference on learning representations (ICLR), pp 1–13

Hastie T, Tibshirani R (1996) Discriminant adaptive nearest neighbor classification. IEEE Trans Pattern Anal Mach Intell 18(6):607–616

Surhone LM, Tennoe MT, Henssonow SF (2010) Orthogonal Procrustes Problem. Betascript Publishing, Publisher

Hoerl AE, Kennard RW (2000) Ridge regression: Biased estimation for nonorthogonal problems. Technometrics 42(1):80–86

Bruno LG, Michele E, Giancarlo V et al (2023) Intermediate acoustic-to-semantic representations link behavioral and neural responses to natural sounds. Nat Neurosci 4:26

Vincent KMC, Lana O, Kazuhisa S, Kosetsu T, Masataka G, Shinichi F (2023) Decoding drums, instrumentals, vocals, and mixed sources in music using human brain activity With fMRI. In: Proceedings of the international symposium conference on music information retrieval (ISMIR), pp 197–206

Aslam MS, Radhika T, Chandrasekar A et al (2024) Improved Event-Triggered-Based Output Tracking for a Class of Delayed Networked T-S Fuzzy Systems. Int J Fuzzy Syst 26(4):1247–1260

Cao Y, Chandrasekar A, Radhika T, Vijayakumar V (2023) Input-to-state stability of stochastic Markovian jump genetic regulatory networks. Math Comput Simul 08:007

Horikawa T, Kamitani Y (2017) Generic decoding of seen and imagined objects using hierarchical visual features. Nat Commun 8(1):1–15

Acknowledgements

This work was supported by the National Natural Science Foundation of China (No. U2133218), the National Key Research and Development Program of China (No. 2018YFB0204304), and the Fundamental Research Funds for the Central Universities of China (No. FRF-MP-19-007 and No. FRF-TP-20-065A1Z). We would like to thank Dr. Yuanyuan Zhang and Prof. Renxin Chu for their valuable contributions to this research.

Ethics declarations

Competing interests

The authors declare no competing interests, whether financial, non-financial, or arising from other associations with individuals or organizations, that could improperly influence this work.

Ethical and informed consent for data used

The data used in this study were legally obtained. The experimental procedure was approved by the local ethics committee, and all participants signed informed consent before the experiment, in compliance with ethical standards.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhao, M., Liu, B. An fMRI-based auditory decoding framework combined with convolutional neural network for predicting the semantics of real-life sounds from brain activity. Appl Intell 55, 118 (2025). https://doi.org/10.1007/s10489-024-05873-5

DOI: https://doi.org/10.1007/s10489-024-05873-5