Abstract

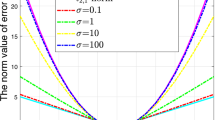

Unsupervised feature selection (UFS) has attracted growing research interest in domains such as machine learning and data mining. Many recent UFS methods are based on matrix factorization, but two issues remain. First, most of them measure the loss term with the squared Frobenius norm, which makes them sensitive to outliers. An \( \ell_{2,1} \)-norm-based loss improves robustness to outliers, but it is sensitive to small losses. Second, most existing matrix factorization-based methods preserve the local structure of the data with a fixed graph, so they cannot accurately capture the intrinsic local structure. To address these drawbacks, we present a novel robust UFS model, matrix factorization with adaptive loss via bi-stochastic graph regularization (MFALBS). Specifically, MFALBS decomposes the projected data points into two orthogonal matrices to facilitate discriminative feature selection. It measures the loss term with an adaptive loss function to enhance robustness, and it adaptively learns an optimal bi-stochastic graph to better preserve the local structure. Finally, an optimization algorithm is developed to solve the model. Experimental results on real-world datasets demonstrate the effectiveness and superiority of MFALBS.
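The abstract names the adaptive loss without defining it. The sketch below assumes the form proposed by Nie et al. (2013) in the reference list, \( \sum_i (1+\sigma)\|\textbf{e}^i\|_2^2/(\|\textbf{e}^i\|_2+\sigma) \), applied to the rows of a residual matrix \( \textbf{E} \). The matrix `E`, the parameter name `sigma`, and the one-row-per-sample orientation are assumptions of this sketch, not details taken from MFALBS. The weight function shows how such a loss is commonly minimized by iterative reweighting; whether the MFALBS solver uses exactly these weights is not stated in this section.

```python
import numpy as np


def row_norms(E: np.ndarray) -> np.ndarray:
    """Euclidean norm of each row of a residual matrix E (assumed: one row per sample)."""
    return np.linalg.norm(E, axis=1)


def squared_frobenius_loss(E: np.ndarray) -> float:
    """sum_i ||e_i||^2: every row is penalized quadratically, so outliers dominate."""
    return float(np.sum(E ** 2))


def l21_loss(E: np.ndarray) -> float:
    """sum_i ||e_i||: every row is penalized linearly, including small residuals."""
    return float(np.sum(row_norms(E)))


def adaptive_loss(E: np.ndarray, sigma: float) -> float:
    """sum_i (1 + sigma) ||e_i||^2 / (||e_i|| + sigma), after Nie et al. (2013).

    Rows with ||e_i|| much smaller than sigma cost about (1 + sigma) / sigma * ||e_i||^2,
    rows with ||e_i|| much larger than sigma cost about (1 + sigma) * ||e_i||.
    """
    r = row_norms(E)
    return float(np.sum((1.0 + sigma) * r ** 2 / (r + sigma)))


def adaptive_loss_weights(E: np.ndarray, sigma: float) -> np.ndarray:
    """Row weights d_i = f'(r_i) / (2 r_i) with f(r) = (1 + sigma) r^2 / (r + sigma).

    These are the weights of an iteratively reweighted least-squares step.
    They stay finite at r_i = 0, unlike the l2,1 reweighting 1 / (2 r_i).
    """
    r = row_norms(E)
    return (1.0 + sigma) * (r + 2.0 * sigma) / (2.0 * (r + sigma) ** 2)


if __name__ == "__main__":
    sigma = 1.0
    for r in [0.01, 0.1, 1.0, 10.0, 100.0]:
        row = np.array([[r, 0.0]])  # one residual row whose norm is exactly r
        print(
            f"r={r:>6}: squared={squared_frobenius_loss(row):.4g}, "
            f"l21={l21_loss(row):.4g}, adaptive={adaptive_loss(row, sigma):.4g}"
        )
```

With `sigma = 1`, the adaptive column roughly tracks \( 2r^2 \) for small residuals and \( 2r \) for large ones. Increasing \( \sigma \) moves the loss toward the squared Frobenius norm, and \( \sigma \to 0 \) recovers the \( \ell_{2,1} \) loss.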

Data Availability and Access

Data will be made available on request.

Notes

The code and datasets will be made available at: https://github.com/xiangfasong/MFALBS.

References

Georgiou T, Liu Y, Chen W, Lew MS (2020) A survey of traditional and deep learning-based feature descriptors for high dimensional data in computer vision. Int J Multim Inf Retr 9(3):135–170. https://doi.org/10.1007/S13735-019-00183-W

Bommert A, Welchowski T, Schmid M, Rahnenführer J (2022) Benchmark of filter methods for feature selection in high-dimensional gene expression survival data. Briefings Bioinform 23(1). https://doi.org/10.1093/BIB/BBAB354

Mostafa RR, Khedr AM, Aghbari ZA, Afyouni I, Kamel I, Ahmed N (2024) An adaptive hybrid mutated differential evolution feature selection method for low and high-dimensional medical datasets. Knowl Based Syst 283:111218. https://doi.org/10.1016/J.KNOSYS.2023.111218

Omuya EO, Okeyo GO, Kimwele MW (2021) Feature selection for classification using principal component analysis and information gain. Expert Syst Appl 174:114765. https://doi.org/10.1016/J.ESWA.2021.114765

Liu T, Lu Y, Zhu B, Zhao H (2023) Clustering high-dimensional data via feature selection. Biometrics 79(2):940–950. https://doi.org/10.1111/biom.13665

Lu X, Long J, Wen J, Fei L, Zhang B, Xu Y (2022) Locality preserving projection with symmetric graph embedding for unsupervised dimensionality reduction. Pattern Recognit 131:108844. https://doi.org/10.1016/J.PATCOG.2022.108844

Zhou J, Zhang Q, Zeng S, Zhang B, Fang L (2024) Latent linear discriminant analysis for feature extraction via isometric structural learning. Pattern Recognit 149:110218. https://doi.org/10.1016/J.PATCOG.2023.110218

He X, Cai D, Niyogi P (2005) Laplacian score for feature selection. In: International conference on neural information processing systems, pp 507–514

Miao J, Zhao J, Yang T, Fan C, Tian Y, Shi Y, Xu M (2024) Explicit unsupervised feature selection based on structured graph and locally linear embedding. Expert Syst Appl 255:124568. https://doi.org/10.1016/J.ESWA.2024.124568

Xie X, Cao Z, Sun F (2023) Joint learning of graph and latent representation for unsupervised feature selection. Appl Intell 53(21):25282–25295. https://doi.org/10.1007/S10489-023-04893-X

Mozafari M, Seyedi SA, Mohammadiani RP, Tab FA (2024) Unsupervised feature selection using orthogonal encoder-decoder factorization. Inf Sci 663:120277. https://doi.org/10.1016/J.INS.2024.120277

Lin X, Guan J, Chen B, Zeng Y (2022) Unsupervised feature selection via orthogonal basis clustering and local structure preserving. IEEE Trans Neural Networks Learn Syst 33(11):6881–6892. https://doi.org/10.1109/TNNLS.2021.3083763

Moslemi A, Ahmadian A (2023) Dual regularized subspace learning using adaptive graph learning and rank constraint: Unsupervised feature selection on gene expression microarray datasets. Comput Biol Medicine 167:107659. https://doi.org/10.1016/J.COMPBIOMED.2023.107659

Wang X, Wu P, Xu Q, Zeng Z, Xie Y (2021) Joint image clustering and feature selection with auto-adjoined learning for high-dimensional data. Knowl Based Syst 232:107443. https://doi.org/10.1016/J.KNOSYS.2021.107443

Dhal P, Azad C (2024) A fine-tuning deep learning with multi-objective-based feature selection approach for the classification of text. Neural Comput Appl 36(7):3525–3553. https://doi.org/10.1007/S00521-023-09225-1

Wu X, Xu X, Liu J, Wang H, Hu B, Nie F (2021) Supervised feature selection with orthogonal regression and feature weighting. IEEE Trans Neural Networks Learn Syst 32(5):1831–1838. https://doi.org/10.1109/TNNLS.2020.2991336

Li Z, Nie F, Wu D, Wang Z, Li X (2024) Sparse trace ratio LDA for supervised feature selection. IEEE Trans Cybern 54(4):2420–2433. https://doi.org/10.1109/TCYB.2023.3264907

Lai J, Chen H, Li T, Yang X (2022) Adaptive graph learning for semi-supervised feature selection with redundancy minimization. Inf Sci 609:465–488. https://doi.org/10.1016/J.INS.2022.07.102

Sheikhpour R, Berahmand K, Forouzandeh S (2023) Hessian-based semi-supervised feature selection using generalized uncorrelated constraint. Knowl Based Syst 269:110521. https://doi.org/10.1016/J.KNOSYS.2023.110521

Li Z, Yang Y, Liu J, Zhou X, Lu H (2012) Unsupervised feature selection using nonnegative spectral analysis. In: AAAI conference on artificial intelligence, pp 1026–1032. https://doi.org/10.1609/AAAI.V26I1.8289

Wang C, Wang J, Gu Z, Wei J, Liu J (2024) Unsupervised feature selection by learning exponential weights. Pattern Recognit 148:110183. https://doi.org/10.1016/J.PATCOG.2023.110183

Li J, Cheng K, Wang S, Morstatter F, Trevino RP, Tang J, Liu H (2018) Feature selection: A data perspective. ACM Comput Surv 50(6):94:1–94:45. https://doi.org/10.1145/3136625

Solorio-Fernández S, Carrasco-Ochoa JA, Martínez-Trinidad JF (2020) A review of unsupervised feature selection methods. Artif Intell Rev 53(2):907–948. https://doi.org/10.1007/S10462-019-09682-Y

Hou C, Nie F, Li X, Yi D, Wu Y (2014) Joint embedding learning and sparse regression: A framework for unsupervised feature selection. IEEE Trans Cybern 44(6):793–804. https://doi.org/10.1109/TCYB.2013.2272642

Zhu P, Zuo W, Zhang L, Hu Q, Shiu SCK (2015) Unsupervised feature selection by regularized self-representation. Pattern Recognit 48(2):438–446. https://doi.org/10.1016/J.PATCOG.2014.08.006

Liu Y, Ye D, Li W, Wang H, Gao Y (2020) Robust neighborhood embedding for unsupervised feature selection. Knowl Based Syst 193:105462. https://doi.org/10.1016/J.KNOSYS.2019.105462

Du L, Shen Y (2015) Unsupervised feature selection with adaptive structure learning. In: International conference on knowledge discovery and data mining, pp 209–218. https://doi.org/10.1145/2783258.2783345

Nie F, Zhu W, Li X (2016) Unsupervised feature selection with structured graph optimization. In: AAAI conference on artificial intelligence, pp 1302–1308. https://doi.org/10.1609/AAAI.V30I1.10168

Huang Y, Shen Z, Cai F, Li T, Lv F (2021) Adaptive graph-based generalized regression model for unsupervised feature selection. Knowl Based Syst 227:107156. https://doi.org/10.1016/J.KNOSYS.2021.107156

Zhao H, Li Q, Wang Z, Nie F (2022) Joint adaptive graph learning and discriminative analysis for unsupervised feature selection. Cogn Comput 14(3):1211–1221. https://doi.org/10.1007/S12559-021-09875-0

Tang C, Zheng X, Zhang W, Liu X, Zhu X, Zhu E (2023) Unsupervised feature selection via multiple graph fusion and feature weight learning. Sci China Inf Sci 66(5). https://doi.org/10.1007/S11432-022-3579-1

Zhou Q, Wang Q, Gao Q, Yang M, Gao X (2024) Unsupervised discriminative feature selection via contrastive graph learning. IEEE Trans Image Process 33:972–986. https://doi.org/10.1109/TIP.2024.3353572

Wang S, Pedrycz W, Zhu Q, Zhu W (2015) Subspace learning for unsupervised feature selection via matrix factorization. Pattern Recognit 48(1):10–19. https://doi.org/10.1016/J.PATCOG.2014.08.004

Shang R, Wang W, Stolkin R, Jiao L (2016) Subspace learning-based graph regularized feature selection. Knowl Based Syst 112:152–165. https://doi.org/10.1016/J.KNOSYS.2016.09.006

Shang R, Xu K, Shang F, Jiao L (2020) Sparse and low-redundant subspace learning-based dual-graph regularized robust feature selection. Knowl Based Syst 187. https://doi.org/10.1016/J.KNOSYS.2019.07.001

Han D, Kim J (2015) Unsupervised simultaneous orthogonal basis clustering feature selection. In: IEEE conference on computer vision and pattern recognition, pp 5016–5023. https://doi.org/10.1109/CVPR.2015.7299136

Qian M, Zhai C (2013) Robust unsupervised feature selection. In: International joint conference on artificial intelligence, pp 1621–1627

Du S, Ma Y, Li S, Ma Y (2017) Robust unsupervised feature selection via matrix factorization. Neurocomputing 241:115–127. https://doi.org/10.1016/J.NEUCOM.2017.02.034

Luo C, Zheng J, Li T, Chen H, Huang Y, Peng X (2022) Orthogonally constrained matrix factorization for robust unsupervised feature selection with local preserving. Inf Sci 586:662–675. https://doi.org/10.1016/J.INS.2021.11.068

Nie F, Wang H, Huang H, Ding CHQ (2013) Adaptive loss minimization for semi-supervised elastic embedding. In: International joint conference on artificial intelligence, pp 1565–1571

Nie F, Huang H, Cai X, Ding CHQ (2010) Efficient and robust feature selection via joint ℓ2,1-norms minimization. In: International conference on neural information processing systems, pp 1813–1821

Sun Z, Xie H, Liu J, Gou J, Yu Y (2023) Dual-graph with non-convex sparse regularization for multi-label feature selection. Appl Intell 53(18):21227–21247. https://doi.org/10.1007/S10489-023-04515-6

Wang Q, He X, Jiang X, Li X (2022) Robust bi-stochastic graph regularized matrix factorization for data clustering. IEEE Trans Pattern Anal Mach Intell 44(1):390–403. https://doi.org/10.1109/TPAMI.2020.3007673

Boyd SP, Parikh N, Chu E, Peleato B, Eckstein J (2011) Distributed optimization and statistical learning via the alternating direction method of multipliers. Found Trends Mach Learn 3(1):1–122. https://doi.org/10.1561/2200000016

Li S, Tang C, Liu X, Liu Y, Chen J (2019) Dual graph regularized compact feature representation for unsupervised feature selection. Neurocomputing 331:77–96. https://doi.org/10.1016/J.NEUCOM.2018.11.060

Shi Z, Liu J (2023) Noise-tolerant clustering via joint doubly stochastic matrix regularization and dual sparse coding. Expert Syst Appl 222:119814. https://doi.org/10.1016/J.ESWA.2023.119814

von Neumann J (1950) Functional Operators, Volume 2: The Geometry of Orthogonal Spaces (AM-22). Princeton University Press, Princeton

Zhang X (2020) A Matrix Algebra Approach to Artificial Intelligence. Springer, Singapore. https://doi.org/10.1007/978-981-15-2770-8

Author information

Contributions

Xiangfa Song: Conceptualization, Formal analysis, Methodology, Software, Validation, Writing – review & editing.

Ethics declarations

Competing Interests

The author declares that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Ethical and Informed Consent for Data Used

The datasets used are publicly available, and there are no ethical implications.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A

Lemma 3

The closed-form solution to the optimization problem (15) is \( \textbf{S}=\textbf{R}+\frac{n+\textbf{1}_{n}^{T} \textbf{R} \textbf{1}_{n}}{n^{2}} \textbf{1}_{n} \textbf{1}_{n}^{T}-\frac{1}{n} \textbf{R} \textbf{1}_{n} \textbf{1}_{n}^{T}-\frac{1}{n} \textbf{1}_{n} \textbf{1}_{n}^{T}\textbf{R} \), where \( \textbf{R}=\frac{\textbf{A}+\textbf{A}^T}{2} \).
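The closed form in Lemma 3 can be checked numerically. The sketch below evaluates it as stated; the function names are hypothetical. Lemma 3 enforces symmetry and unit row sums but not nonnegativity, so the second helper alternates the closed form with clipping negative entries to zero. That alternation is a standard alternating-projection scheme assumed here for illustration, not necessarily the procedure MFALBS uses to obtain its bi-stochastic graph.

```python
import numpy as np


def lemma3_closed_form(A: np.ndarray) -> np.ndarray:
    """Closed form stated in Lemma 3.

    S = R + (n + 1^T R 1) / n^2 * 1 1^T - (1/n) R 1 1^T - (1/n) 1 1^T R,
    with R = (A + A^T) / 2. S is symmetric with unit row sums but may
    contain negative entries.
    """
    n = A.shape[0]
    R = (A + A.T) / 2.0
    J = np.ones((n, n))  # 1_n 1_n^T
    c = R.sum()          # scalar 1_n^T R 1_n
    return R + (n + c) / n**2 * J - (R @ J) / n - (J @ R) / n


def alternate_with_nonnegativity(A: np.ndarray, max_iter: int = 5000,
                                 tol: float = 1e-10) -> np.ndarray:
    """Hypothetical helper (not from the paper): alternate Lemma 3 with
    clipping negative entries to zero until the row sums are within tol of 1.

    The limit is a symmetric, nonnegative matrix with unit row sums
    (a bi-stochastic graph). It is feasible, but it is not guaranteed to be
    the nearest such matrix to A; Dykstra's method would be needed for that.
    """
    S = (A + A.T) / 2.0
    for _ in range(max_iter):
        S = np.maximum(lemma3_closed_form(S), 0.0)  # affine step, then clip
        if np.max(np.abs(S.sum(axis=1) - 1.0)) < tol:
            break
    return S


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    A = rng.random((5, 5))  # toy nonnegative affinity matrix
    S = lemma3_closed_form(A)
    print("row sums:", S.sum(axis=1))
    print("symmetric:", np.allclose(S, S.T))
    print("min entry:", S.min())
    G = alternate_with_nonnegativity(A)
    print("bi-stochastic row sums:", G.sum(axis=1), "min entry:", G.min())
```

Applying the closed form to a matrix that already satisfies both constraints returns it unchanged, which is a quick sanity check that the formula is a projection.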

Proof

The Lagrangian function for problem (15) can be formulated as follows:

where \(\eta \in \mathbb {R}^{n}\) and \(\Xi \in \mathbb {R}^{n \times n}\) are Lagrange multipliers.

Differentiating with respect to \( \textbf{S} \) and setting the derivative to zero, we obtain

Taking the transpose of both sides of (A2), we get:

By combining (A2) with (A3), we obtain:

By combining (A2) with (A4), we further have:

where \( \textbf{R}=\frac{\textbf{A}+\textbf{A}^T}{2} \). Multiplying both sides of (A5) on the right by \( \textbf{1}_n \) and using the constraint \( \textbf{S}\textbf{1}_n=\textbf{1}_n \), we obtain

from which we get

Applying the Woodbury formula [48], we get

Combining (A5), (A7), and (A8) yields the solution of problem (15):

\(\square \)
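The steps of the proof can be written out in one self-consistent form. This sketch assumes that problem (15) is \( \min_{\textbf{S}} \|\textbf{S}-\textbf{A}\|_F^2 \) subject to \( \textbf{S}=\textbf{S}^T \) and \( \textbf{S}\textbf{1}_n=\textbf{1}_n \). The factor \( \tfrac{1}{2} \) and the signs of the multiplier terms are choices made here, so the individual lines need not coincide with (A1)–(A8), and the shorthand \( c \) is introduced only for this sketch. The inverse uses the Sherman–Morrison formula, the rank-one case of the Woodbury formula.

```latex
\begin{aligned}
\mathcal{L}(\textbf{S},\eta,\Xi) &= \tfrac{1}{2}\|\textbf{S}-\textbf{A}\|_F^2-\eta^T(\textbf{S}\textbf{1}_n-\textbf{1}_n)-\operatorname{tr}\big(\Xi^T(\textbf{S}-\textbf{S}^T)\big)\\
\frac{\partial \mathcal{L}}{\partial \textbf{S}} &= \textbf{S}-\textbf{A}-\eta\textbf{1}_n^T-\Xi+\Xi^T=\textbf{0}\\
\textbf{S} &= \textbf{R}+\tfrac{1}{2}\big(\eta\textbf{1}_n^T+\textbf{1}_n\eta^T\big) \quad \text{(add the transpose, use } \textbf{S}=\textbf{S}^T\text{)}\\
(n\textbf{I}+\textbf{1}_n\textbf{1}_n^T)\,\eta &= 2(\textbf{1}_n-\textbf{R}\textbf{1}_n) \quad \text{(right-multiply by } \textbf{1}_n\text{, use } \textbf{S}\textbf{1}_n=\textbf{1}_n\text{)}\\
(n\textbf{I}+\textbf{1}_n\textbf{1}_n^T)^{-1} &= \tfrac{1}{n}\Big(\textbf{I}-\tfrac{1}{2n}\textbf{1}_n\textbf{1}_n^T\Big) \quad \text{(Sherman--Morrison)}\\
\eta &= \tfrac{2}{n}(\textbf{1}_n-\textbf{R}\textbf{1}_n)-\tfrac{n-c}{n^2}\textbf{1}_n, \qquad c=\textbf{1}_n^T\textbf{R}\textbf{1}_n\\
\textbf{S} &= \textbf{R}+\frac{n+c}{n^{2}}\textbf{1}_n\textbf{1}_n^T-\frac{1}{n}\textbf{R}\textbf{1}_n\textbf{1}_n^T-\frac{1}{n}\textbf{1}_n\textbf{1}_n^T\textbf{R}
\end{aligned}
```

The last line matches Lemma 3 because \( \tfrac{2}{n}-\tfrac{n-c}{n^2}=\tfrac{n+c}{n^2} \) and, since \( \textbf{R} \) is symmetric, \( (\textbf{R}\textbf{1}_n)^T=\textbf{1}_n^T\textbf{R} \).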

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Song, X. Robust unsupervised feature selection based on matrix factorization with adaptive loss via bi-stochastic graph regularization. Appl Intell 55, 55 (2025). https://doi.org/10.1007/s10489-024-05876-2

DOI: https://doi.org/10.1007/s10489-024-05876-2