Abstract

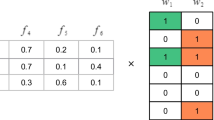

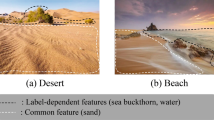

In the era of big data, fuzzy labels and high-dimensional feature spaces are common characteristics of data. As a mathematical framework for handling uncertainty, Dempster-Shafer evidence theory has been widely applied in artificial intelligence, pattern recognition, and decision analysis. However, it has received little attention in label distribution learning (LDL). This paper studies feature selection for LDL using Dempster-Shafer evidence theory. First, for an LDL dataset, distance maps are defined in the feature space and in the label space, respectively, and a tunable parameter is introduced to control the proximity level of features or labels. Then, the \(\alpha \)-upper and \(\alpha \)-lower approximations in the LDL dataset are proposed. Subsequently, to reduce the influence of uncertainty on classification performance, two robust feature evaluation measures for an LDL dataset, the "belief map" and the "plausibility map", are defined on the basis of these approximations. Next, feature selection algorithms based on the belief and plausibility maps are designed. Finally, experimental results and statistical analyses show that the belief and plausibility maps effectively measure the uncertainty of LDL data, and that the proposed feature selection algorithms outperform five existing algorithms in classification performance.
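For readers unfamiliar with the underlying theory, the following minimal sketch illustrates the classical Dempster-Shafer belief and plausibility functions computed from a mass function. It is not the paper's belief map or plausibility map, whose definitions depend on the \(\alpha \)-approximations and are not given in the abstract. The names `belief`, `plausibility`, and `mass`, as well as the numeric values, are hypothetical and chosen only for illustration.

```python
from typing import Dict, FrozenSet

# A mass function assigns a weight to each focal set; the weights sum to 1.
Mass = Dict[FrozenSet[str], float]


def belief(mass: Mass, a: FrozenSet[str]) -> float:
    """Bel(A): total mass of the non-empty focal sets contained in A."""
    return sum(m for b, m in mass.items() if b and b <= a)


def plausibility(mass: Mass, a: FrozenSet[str]) -> float:
    """Pl(A): total mass of the focal sets that intersect A."""
    return sum(m for b, m in mass.items() if b & a)


# Hypothetical mass function on the frame {"x", "y", "z"} (illustrative values).
mass: Mass = {
    frozenset({"x"}): 0.5,
    frozenset({"x", "y"}): 0.25,
    frozenset({"x", "y", "z"}): 0.25,
}

target = frozenset({"x", "y"})
bel_target = belief(mass, target)        # focal sets {x} and {x, y} lie inside the target
pl_target = plausibility(mass, target)   # every focal set intersects the target
print(bel_target, pl_target)
```

In classical evidence theory, belief never exceeds plausibility for the same set, and the gap between the two values reflects how much of the evidence remains uncommitted.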

Data availability and access

The data used or analyzed during the current study are available from the corresponding author after the paper is accepted for publication.

Acknowledgements

The authors would like to thank the editors and the anonymous reviewers for their valuable comments and suggestions, which have greatly helped to improve the quality of the paper. This work was supported by the startup fund for doctoral research of Guangdong University of Science and Technology (GKY-2024BSQDK-11) and the Science Foundation of Guangdong University of Science and Technology (GKY-2023KYZDK-1).

Author information

Contributions

Zhengwei Zhao: Methodology, Writing-Original draft; Rongrong Wang: Software, Writing-Original draft; Wei Pang: Editing, Investigation; Zhaowen Li: Validation, Editing.

Ethics declarations

Competing Interests

The authors declare that they have no conflict of interest.

Ethical and informed consent for data used

The data used or analyzed during the current study are available from the corresponding author after the paper is accepted for publication.

About this article

Cite this article

Zhao, Z., Wang, R., Pang, W. et al. Feature selection for label distribution learning using Dempster-Shafer evidence theory. Appl Intell 55, 259 (2025). https://doi.org/10.1007/s10489-024-05879-z

DOI: https://doi.org/10.1007/s10489-024-05879-z