Abstract

Distance correlation is a novel class of multivariate dependence measure, taking values between 0 and 1, and applicable to random vectors of arbitrary, not necessarily equal, dimensions. It offers several advantages over the well-known Pearson correlation coefficient, the most important being that distance correlation equals zero if and only if the random vectors are independent. Two different estimators of the distance correlation are available in the literature. The first, proposed by Székely et al. (Ann Stat 35:2769–279 2007), is based on an asymptotically unbiased estimator of the distance covariance, which is a V-statistic. The second builds on an unbiased estimator of the distance covariance proposed in Székely and Rizzo (Stat 42:2382–2412 2014), shown to be a U-statistic by Huo and Székely (Technometrics 58:435–447 2016). This study evaluates their efficiency (mean squared error) and compares the computational times of both methods under different dependence structures. Under independence or near-independence, the V-estimates are biased, while the U-estimator frequently cannot be computed because the estimated squared distance covariance is negative. To address this challenge, a convex linear combination of the two estimators is proposed and studied, yielding good results regardless of the level of dependence. Additionally, a medical database is analyzed and discussed.


1 Introduction

The concept of dependence among random observations plays a central role in many fields, including statistics, medicine, biology and engineering. Given the complexity of fully characterizing dependence, its strength is often summarized in a single number: the correlation coefficient. Many correlation coefficients exist, but perhaps the most widely known is Pearson's correlation coefficient. Other measures of correlation also exist. For instance, rank correlation assesses the relationship between the rankings of two variables, or between the rankings of the same variable under different conditions. Examples of rank correlation coefficients include Spearman's rank correlation coefficient, Kendall's tau and Goodman and Kruskal's gamma.

Pearson's correlation coefficient has some disadvantages. For example, a zero Pearson correlation does not imply that two variables are independent, because the coefficient only captures linear dependence and the variables may be related non-linearly. In recent years, a novel measure of dependence between random vectors, called distance correlation, was introduced by [1]. According to these authors, distance covariance and distance correlation are analogous to product-moment covariance and correlation. However, unlike the classical correlation, distance correlation is zero only when the random vectors are independent. Moreover, distance correlation can detect both linear and nonlinear dependence between variables. Essentially, for all distributions with finite first moments, distance correlation (\(\mathcal {R}\)) extends the notion of correlation in two key ways:

1. \(\mathcal {R}(X,Y)\) is defined for X and Y in arbitrary dimensions;
2. \(\mathcal {R}(X,Y) = 0\) characterizes the independence of X and Y.

Distance correlation satisfies \(0 \le \mathcal {R}\le 1\), and \(\mathcal {R}= 0\) if and only if X and Y are independent.

Distance correlation has been applied and extended to a wide variety of fields, such as variable selection [7,8,9,10], as well as in disciplines like biology [11, 12] and medicine [13, 14], among others. It has also been explored within high-dimensional contexts [15, 16]. Furthermore, the applicability of distance correlation was extended to address the challenge of testing the independence of high-dimensional random vectors in [6]. Similarly, the concept of partial distance correlation was introduced by [2]. Distance correlation has been extended to encompass conditional distance correlation [16,17,18,19], and it has also been examined in the realm of survival data [20,21,22,23,24]. Lastly, [25] have demonstrated that for any fixed Pearson correlation coefficient strictly between -1 and 1, the distance correlation coefficient can attain any value within the open unit interval (0,1).

The estimation of distance correlation relies on the estimation of distance covariance. [5] demonstrated the uniqueness of distance covariance. [1] proposed a sample distance covariance estimator, and they showed that it is a V-statistic. This estimator of the distance covariance gives rise to the so-called V-estimator of the distance correlation. Moreover, intermediate results provided by [2] led to an unbiased estimator of the squared distance covariance. This unbiased estimator was further identified as a U-statistic in [3]. This distance covariance estimator results in the U-estimator of the distance correlation.

The two estimators of the distance correlation, the U-estimator and the V-estimator, have different properties. A direct implementation of the V-estimator has computational complexity \(\mathcal {O}(n^2)\), whereas the U-estimator can be computed in \(\mathcal {O}(n \log n)\) operations. In fact, both the U-statistic and the V-statistic versions of distance correlation can be computed in \(\mathcal {O}(n \log n)\) steps, because the algorithm described by [3] extends straightforwardly to the V-statistic. Both estimators have good asymptotic properties, although these are easier to derive for the U-estimator.

One scenario of particular interest when working with distance correlation is independence, where both distance correlation and distance covariance are zero. It is under independence, or close to it, that the two estimators differ most. On the one hand, the U-estimator of the squared distance covariance is unbiased, so it sometimes takes negative values, which precludes the computation of the U-estimator of distance correlation. On the other hand, the V-estimator can only take non-negative values and is therefore biased. However, to the best of our knowledge, the choice between the two estimators, and the trade-offs between them, do not seem to follow any specific criterion. For example, while [23] employ the U-estimator to propose an extension for right-censored data, [17] suggest an extension for conditional distance correlation using both estimators.

This paper describes a simulation study conducted to assess the practical behavior and efficiency of each estimator under different dependence models. The results show that neither estimator is consistently better, which makes choosing between them in practice a challenging task. To address this, a new approach, namely a convex linear combination of the two estimators, is introduced and studied.

The remainder of this paper is organized as follows. Section 2 introduces the preliminaries, defining distance covariance and distance correlation and presenting the estimators of both described in the literature; some existing R and Python packages are also reviewed. Because the squared distance covariance estimator can take negative values, which prevents computation of the U-estimator of distance correlation, two modifications of the U-estimator are proposed to handle this problem. Section 3 introduces a convex linear combination approach to address the choice of estimator. Section 4 presents the results of the simulation study for three models: the Farlie-Gumbel-Morgenstern (FGM) model, a bivariate normal model and a non-linear model. The estimators are compared in terms of efficiency (mean squared error, MSE), bias, variance and computational time, and a comprehensive comparison between the original estimators and the proposed alternatives is presented. Section 5 analyzes and discusses an application to a real database. Finally, Section 6 provides concluding remarks.

2 Distance correlation estimation

Let \(X \in \mathbb {R}^p\) and \(Y \in \mathbb {R}^q\) be random vectors, where p and q are positive integers. The characteristic functions of X and Y are denoted as \(\phi _X\) and \(\phi _Y\), respectively, and the joint characteristic function of X and Y is denoted as \(\phi _{X,Y}\).

Definition 1

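The distance covariance between random vectors X and Y with finite first moments is the non-negative square root of the number \(\mathcal {V}^2(X, Y)\), defined by

\[
\mathcal{V}^2(X,Y) = \|\phi_{X,Y}(t,s) - \phi_X(t)\phi_Y(s)\|^2 = \frac{1}{c_p c_q}\int_{\mathbb{R}^{p+q}} \frac{|\phi_{X,Y}(t,s) - \phi_X(t)\phi_Y(s)|^2}{|t|_p^{1+p}\,|s|_q^{1+q}}\,dt\,ds,
\]

where \(c_d = \frac{\pi ^{(1+d)/2}}{\Gamma ((1+d)/2)}\), \(|t|^{1+p}_p = ||t||^{1+p}\) and \(|s|^{1+q}_q = ||s||^{1+q}\), with \(||\cdot||\) the Euclidean norm. Similarly, the distance variance is defined as the square root of

\[
\mathcal{V}^2(X) = \mathcal{V}^2(X,X) = \|\phi_{X,X}(t,s) - \phi_X(t)\phi_X(s)\|^2 .
\]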

Definition 2

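The distance correlation between two random vectors X and Y with finite first moments is the non-negative square root of the number \(\mathcal {R}^2(X,Y)\) defined by

\[
\mathcal{R}^2(X,Y) =
\begin{cases}
\dfrac{\mathcal{V}^2(X,Y)}{\sqrt{\mathcal{V}^2(X)\,\mathcal{V}^2(Y)}}, & \mathcal{V}^2(X)\,\mathcal{V}^2(Y) > 0,\\[4pt]
0, & \mathcal{V}^2(X)\,\mathcal{V}^2(Y) = 0.
\end{cases}
\tag{1}
\]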

An equivalent expression for the distance covariance in terms of expectations is given by [1]: if \(E|X|^2_p < \infty \) and \(E|Y|^2_q < \infty \), then \(E[|X|_p|Y|_q] < \infty \), and

\[
\mathcal{V}^2(X,Y) = E\big[|X_1-X_2|_p\,|Y_1-Y_2|_q\big] + E|X_1-X_2|_p\,E|Y_1-Y_2|_q - 2E\big[|X_1-X_2|_p\,|Y_1-Y_3|_q\big],
\tag{2}
\]

where \((X_1,Y_1),(X_2,Y_2)\) and \((X_3,Y_3)\) are independent and identically distributed as (X, Y). Note that (2) can be used to compute the distance covariance directly from the density function, without needing the characteristic function. Similarly, the distance variance of X can be computed as the square root of

\[
\mathcal{V}^2(X) = E|X_1-X_2|_p^2 + \big(E|X_1-X_2|_p\big)^2 - 2E\big[|X_1-X_2|_p\,|X_1-X_3|_p\big],
\tag{3}
\]

and similarly for \(\mathcal {V}^2\)(Y). As a result, the exact distance correlation \(\mathcal {R}(X,Y)\) can be determined using (2), (3) and (1).
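As a worked check of (3), offered only as an illustration, take \(X \sim U(0,1)\), the marginal distribution of the FGM model in Section 4.1. For i.i.d. \(X_1, X_2, X_3 \sim U(0,1)\), \(E|X_1-X_2|^2 = \mathrm{Var}(X_1-X_2) = 1/6\) and \(E|X_1-X_2| = 1/3\). Moreover, for fixed \(x \in [0,1]\), \(E|x - X_2| = x^2/2 + (1-x)^2/2 = x^2 - x + 1/2\), so \(E[|X_1-X_2|\,|X_1-X_3|] = E[(X_1^2 - X_1 + 1/2)^2] = 1/5 - 1/2 + 2/3 - 1/2 + 1/4 = 7/60\). Substituting into (3):

\[
\mathcal{V}^2(X) = \frac{1}{6} + \left(\frac{1}{3}\right)^2 - 2\cdot\frac{7}{60} = \frac{15 + 10 - 21}{90} = \frac{2}{45} \approx 0.0444 .
\]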

For an observed random sample \((\varvec{\textrm{X},\textrm{Y}}) = \{(X_k,Y_k): k = 1, \dots ,n\}\) from the joint distribution of random vectors \(X \in \mathbb {R}^p\) and \(Y \in \mathbb {R}^q\), [1] proposed the following empirical estimator of distance covariance.

Definition 3

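Empirical distance covariance \(\mathcal {V}_n(\varvec{\textrm{X}}, \varvec{\textrm{Y}})\) is defined as the non-negative square root of

\[
\mathcal{V}_n^2(\varvec{\textrm{X}},\varvec{\textrm{Y}}) = \frac{1}{n^2}\sum_{k,l=1}^{n} A_{kl}B_{kl},
\tag{4}
\]

where \(A_{kl}\) and \(B_{kl}\) denote the entries of the corresponding double-centered distance matrices, defined as

\[
A_{kl} = a_{kl} - \bar{a}_{k\cdot} - \bar{a}_{\cdot l} + \bar{a}_{\cdot\cdot}, \qquad
\bar{a}_{k\cdot} = \frac{1}{n}\sum_{l=1}^{n} a_{kl}, \quad
\bar{a}_{\cdot l} = \frac{1}{n}\sum_{k=1}^{n} a_{kl}, \quad
\bar{a}_{\cdot\cdot} = \frac{1}{n^2}\sum_{k,l=1}^{n} a_{kl},
\]

where \(a_{kl} = |X_k - X_l|\) are the pairwise distances of the X observations. The terms \(B_{kl}\) are defined in a similar manner, using \(b_{kl} = |Y_k-Y_l|\) instead of \(a_{kl}\). Similarly, the sample distance variance \(\mathcal {V}_n(\varvec{\textrm{X}})\) is defined as the square root of

\[
\mathcal{V}_n^2(\varvec{\textrm{X}}) = \mathcal{V}_n^2(\varvec{\textrm{X}},\varvec{\textrm{X}}) = \frac{1}{n^2}\sum_{k,l=1}^{n} A_{kl}^2 .
\]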

Theorem 1 in [1] establishes that \(\mathcal {V}_n^2(\varvec{\textrm{X},\textrm{Y}}) \ge 0\). Moreover, under independence, \(\mathcal {V}_n^2(\varvec{\textrm{X},\textrm{Y}})\) is a V-statistic with a degenerate kernel.

The first estimator of distance correlation in [1], dCorV, is based on empirical distance variance and covariance, giving the empirical distance correlation.

Definition 4

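The empirical distance correlation \(\mathcal {R}_n(\varvec{\textrm{X}}, \varvec{\textrm{Y}})\), denoted dCorV(\(\varvec{\textrm{X},\textrm{Y}}\)), is the square root of

\[
\mathcal{R}_n^2(\varvec{\textrm{X}},\varvec{\textrm{Y}}) =
\begin{cases}
\dfrac{\mathcal{V}_n^2(\varvec{\textrm{X}},\varvec{\textrm{Y}})}{\sqrt{\mathcal{V}_n^2(\varvec{\textrm{X}})\,\mathcal{V}_n^2(\varvec{\textrm{Y}})}}, & \mathcal{V}_n^2(\varvec{\textrm{X}})\,\mathcal{V}_n^2(\varvec{\textrm{Y}}) > 0,\\[4pt]
0, & \mathcal{V}_n^2(\varvec{\textrm{X}})\,\mathcal{V}_n^2(\varvec{\textrm{Y}}) = 0.
\end{cases}
\]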
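Definitions 3 and 4 translate directly into a naive \(\mathcal{O}(n^2)\) computation. The following is a minimal sketch in pure Python (standard library only, an assumption made to keep it dependency-free; the function names are hypothetical and not taken from any package). It builds the Euclidean distance matrix, double-centers it, and forms \(\mathcal{V}_n^2\) from (4) and dCorV from Definition 4.

```python
import math


def _double_centered(z):
    """A_kl = a_kl - row mean_k - column mean_l + grand mean (Definition 3)."""
    # Accept a list of scalars (p = 1) or a list of equal-length tuples (p > 1).
    pts = [tuple(p) if isinstance(p, (list, tuple)) else (p,) for p in z]
    n = len(pts)
    d = [[math.dist(p, q) for q in pts] for p in pts]  # a_kl = |X_k - X_l|
    m = [sum(row) / n for row in d]  # row means = column means (d is symmetric)
    g = sum(m) / n                   # grand mean
    return [[d[k][l] - m[k] - m[l] + g for l in range(n)] for k in range(n)]


def dcov_v_sq(x, y):
    """V-statistic V_n^2(X, Y) = (1/n^2) * sum_{k,l} A_kl * B_kl, in O(n^2)."""
    a, b = _double_centered(x), _double_centered(y)
    n = len(a)
    return sum(a[k][l] * b[k][l] for k in range(n) for l in range(n)) / n ** 2


def dcor_v(x, y):
    """dCorV = R_n(X, Y) from Definition 4 (0 when V_n^2(X) V_n^2(Y) = 0)."""
    vxy, vx, vy = dcov_v_sq(x, y), dcov_v_sq(x, x), dcov_v_sq(y, y)
    if vx * vy <= 0:
        return 0.0
    # V_n^2(X, Y) >= 0 in exact arithmetic; max() only guards against round-off.
    return math.sqrt(max(vxy, 0.0) / math.sqrt(vx * vy))
```

For example, `dcor_v(x, [3 * v - 2 for v in x])` equals 1 up to rounding for any non-constant sample `x`, because an affine map with a positive scale multiplies every distance by the same factor.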

Likewise, [2] proposed an alternative estimator for the distance covariance \(\mathcal {V}(X,Y)\) based on a \(\mathcal {U}\)-centered matrix.

Definition 5

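Let \(A = (a_{kl})\) be a symmetric, real-valued \(n \times n\) matrix with zero diagonal, \(n > 2\). Define the \(\mathcal {U}\)-centered matrix \(\tilde{A}\) as follows. Let the (k, l)th entry of \(\tilde{A}\) be defined by

\[
\tilde{A}_{kl} =
\begin{cases}
a_{kl} - \dfrac{1}{n-2}\displaystyle\sum_{i=1}^{n} a_{il} - \dfrac{1}{n-2}\displaystyle\sum_{j=1}^{n} a_{kj} + \dfrac{1}{(n-1)(n-2)}\displaystyle\sum_{i,j=1}^{n} a_{ij}, & k \neq l,\\[4pt]
0, & k = l.
\end{cases}
\]

Here "\(\mathcal {U}\)-centered" is so named because, for \(n > 3\), the inner product

\[
\mathcal{U}_n^2(\varvec{\textrm{X}},\varvec{\textrm{Y}}) = (\tilde{A}\cdot\tilde{B}) := \frac{1}{n(n-3)}\sum_{k\neq l}\tilde{A}_{kl}\tilde{B}_{kl},
\tag{6}
\]

where \(\tilde{B}\) is obtained from \(b_{kl}\) in the same way, defines an unbiased estimator of the squared distance covariance \(\mathcal {V}^2(X,Y)\). [3] established that the estimator in (6) can be expressed as a U-statistic. Thus, an alternative estimator of the distance correlation can be defined through \(\mathcal {U}_n(\varvec{\textrm{X},\textrm{Y}})\) and the distance variances of \(\varvec{\textrm{X}}\) and \(\varvec{\textrm{Y}}\), \(\mathcal {U}_n(\varvec{\textrm{X}})\) and \(\mathcal {U}_n(\varvec{\textrm{Y}})\), respectively, as follows.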

Definition 6

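The estimator dCorU of the distance correlation \(\mathcal {R}(X,Y)\), based on U-statistics, is the square root of

\[
\text{dCorU}^2(\varvec{\textrm{X}},\varvec{\textrm{Y}}) = \frac{\mathcal{U}_n^2(\varvec{\textrm{X}},\varvec{\textrm{Y}})}{\sqrt{\mathcal{U}_n^2(\varvec{\textrm{X}})\,\mathcal{U}_n^2(\varvec{\textrm{Y}})}}, \qquad \mathcal{U}_n^2(\varvec{\textrm{X}})\,\mathcal{U}_n^2(\varvec{\textrm{Y}}) > 0.
\tag{7}
\]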
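The U-centering of Definition 5 and the inner product (6) can also be sketched in a few lines. This is a naive \(\mathcal{O}(n^2)\) illustration in pure Python, not the fast algorithm of [3]; the names are hypothetical. Note that \(n > 3\) is required by the factor \(1/(n(n-3))\), and that \(\mathcal{U}_n^2(\varvec{\textrm{X}})\) is a sum of squares divided by \(n(n-3)\), so only the numerator of (7) can be negative.

```python
import math


def _u_centered(z):
    """U-centered distance matrix of Definition 5 (zero diagonal)."""
    pts = [tuple(p) if isinstance(p, (list, tuple)) else (p,) for p in z]
    n = len(pts)
    d = [[math.dist(p, q) for q in pts] for p in pts]  # a_kl = |X_k - X_l|
    r = [sum(row) for row in d]  # row sums = column sums (d is symmetric)
    t = sum(r)                   # sum over all i, j
    return [[0.0 if k == l else
             d[k][l] - r[l] / (n - 2) - r[k] / (n - 2) + t / ((n - 1) * (n - 2))
             for l in range(n)] for k in range(n)]


def dcov_u_sq(x, y):
    """Unbiased U_n^2(X, Y) = (A~ . B~) from (6); it can be negative."""
    n = len(x)
    if n <= 3 or len(y) != n:
        raise ValueError("need paired samples with n > 3")
    a, b = _u_centered(x), _u_centered(y)
    s = sum(a[k][l] * b[k][l] for k in range(n) for l in range(n) if k != l)
    return s / (n * (n - 3))


def dcor_u_sq(x, y):
    """dCorU^2 from (7); returns None when U_n^2(X) U_n^2(Y) is not positive."""
    uxy, ux, uy = dcov_u_sq(x, y), dcov_u_sq(x, x), dcov_u_sq(y, y)
    if ux * uy <= 0:
        return None
    return uxy / math.sqrt(ux * uy)
```

Unlike the V-statistic, `dcov_u_sq` may return a negative value for weakly dependent samples; `dcor_u_sq` then returns a negative number, whose square root is undefined.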

This reformulation yields a fast algorithm for estimating distance covariance (for univariate X and Y), with computational complexity \(\mathcal {O}(n \log n)\), whereas the original estimator (4) has complexity \(\mathcal {O}(n^2)\). Alternatively, [26] proposed an algorithm consisting mainly of two sorting steps for computing the distance correlation estimator in (7). This design makes it simple to implement, and it also has computational complexity \(\mathcal {O}(n \log n)\).

These results have prompted the development of many software packages in R [30] and Python [31]. A comprehensive comparison of the performance of these packages in both languages is presented by [32]. In Python, [33] introduced dcor, an open-source package for distance correlation and related statistics; the other Python libraries studied are statsmodels [34], hyppo [35] and pingouin [36]. In R, the energy package [27] implements the dcov and dcor functions, which return \(\mathcal {V}_n(\varvec{\textrm{X},\textrm{Y}})\) and dCorV(\(\varvec{\textrm{X},\textrm{Y}}\)), respectively. For the U-estimator, it implements the dcovU and bcdcor functions, which return \(\mathcal {U}_n^2\)(\(\varvec{\textrm{X},\textrm{Y}}\)) and dCorU\(^2\)(\(\varvec{\textrm{X},\textrm{Y}}\)), respectively; that is, they do not take the square root. The dcortools package, in contrast, provides the distcov and distcor functions, whose argument bias.corr selects the V-estimator (bias.corr = FALSE) or the U-estimator (bias.corr = TRUE). Additionally, the Rfast package [28] includes functions such as dvar, dcov, dcor and bcdcor, which use the fast method proposed by [3]; its bcdcor function computes the bias-corrected distance correlation of two matrices.

A notable aspect, as previously mentioned, is that \(\mathcal {U}_n^2(\varvec{\textrm{X},\textrm{Y}})\) in (6) can take negative values under independence, or under very weak dependence with small sample sizes. In that case dCorU\(^2\) in Definition 6 is also negative, so dCorU cannot be computed as its square root. This issue arises because \(\mathcal {U}_n^2(\varvec{\textrm{X},\textrm{Y}})\) is an unbiased estimator of the squared distance covariance \(\mathcal {V}^2(X,Y)\), which is 0 under independence: an estimator with expectation zero that is not identically zero must take negative values with positive probability. To the best of our knowledge, this problem has not been discussed in the literature. However, software implementations in R and Python handle it in different ways. Some [27, 28] return dCorU\(^2\) without computing the square root, while others, such as [29], return for dCorU the square root of the absolute value of dCorU\(^2\), with the sign of dCorU\(^2\). As shown in Table 2, under independence approximately 60% of the estimates are negative. For this reason, this study explores two proposals to address this challenge, resulting in the following alternative estimators of the distance correlation:

- Replace the values of \(\mathcal {U}_n^2(X,Y)\) with their absolute value. This U-estimator of the distance correlation is denoted by dCorU(A).
- Consider \(\max (\mathcal {U}_n^2(X,Y), 0)\), so that negative values of \(\mathcal {U}_n^2(X,Y)\) are truncated to zero. This U-estimator of \(\mathcal {R}\) is denoted by dCorU(T).
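The two proposals, together with the signed convention of [29], amount to three ways of taking a square root of dCorU\(^2\). Because the denominator of (7) is positive whenever dCorU\(^2\) is defined, applying the absolute value or the truncation to \(\mathcal{U}_n^2(X,Y)\) is equivalent to applying it to dCorU\(^2\). A minimal sketch (the function name is hypothetical):

```python
import math


def dcor_u_conventions(dcor_u_sq):
    """Map an estimate of dCorU^2 to three distance-correlation estimates.

    signed: sign(dCorU^2) * sqrt(|dCorU^2|)  (the signed convention of [29])
    A:      sqrt(|dCorU^2|)                   (dCorU(A), absolute value)
    T:      sqrt(max(dCorU^2, 0))             (dCorU(T), truncation at zero)
    """
    magnitude = math.sqrt(abs(dcor_u_sq))
    return {
        "signed": math.copysign(magnitude, dcor_u_sq),
        "A": magnitude,
        "T": math.sqrt(max(dcor_u_sq, 0.0)),
    }
```

For a negative input such as `-0.04`, the signed value is about -0.2, dCorU(A) is about 0.2 and dCorU(T) is 0. The signed value and dCorU(A) differ only in sign, which is why they have the same squared error when \(\mathcal{R} = 0\).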

Under independence, dCorU (computed with the signed convention above) and dCorU(A) yield identical MSE. Since the two estimates differ at most in sign and \(\mathcal {R}= 0\), the squared error \((\text {dCorU} - \mathcal {R})^2\) coincides with \((\text {dCorU(A)} - \mathcal {R})^2\).

3 New proposal for estimating the distance correlation

Under independence or near-independence (\(\mathcal {R}\approx 0\)), the V-estimator is biased, since it always yields non-negative results. The U-estimator, in turn, often cannot be computed because dCorU\(^2(X,Y)\) is negative. The preference between the two estimators also seems to depend on the nature (linear or non-linear) of the relationship between X and Y (see the results in Section 4). While simulations allow the behavior of the estimators to be studied and provide insight into when each should be used, in real-world applications it can be difficult to determine whether the relationship between X and Y is linear or non-linear, or to assess the level of dependence. Consequently, selecting the appropriate estimator is difficult. To overcome this limitation, this paper introduces a new estimator of distance correlation, a convex linear combination of dCorU and dCorV, denoted as

\[
\text{dCor}_{\lambda}(X,Y) = \lambda\,\text{dCorU}(X,Y) + (1-\lambda)\,\text{dCorV}(X,Y),
\]

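where \(\lambda \in [0,1]\) is a weighting parameter that determines the balance between the two estimators. The convex linear combination inherits many of the asymptotic properties of the original estimators. In particular, its bias equals \(\lambda\) times the bias of dCorU plus \(1-\lambda\) times the bias of dCorV, so for \(\lambda > 0\) it is less biased than dCorV whenever dCorU is, as expected because dCorU is built on an unbiased estimator of the squared distance covariance.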

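An alternative to this estimator is the square root of

\[
\text{dCor}^2_{\lambda}(X,Y) = \lambda\,\text{dCorU}^2(X,Y) + (1-\lambda)\,\text{dCorV}^2(X,Y),
\]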

which yields a lower percentage of negative values (see Appendix A). In this case, the square root of \(dCor^2_{\lambda }\) must still be obtained when it is negative; this can be handled with the options already mentioned for dCorU. Note that neither combination guarantees a positive estimate; however, both reduce the percentage of negative values.
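Both combinations are simple to compute once dCorU (or dCorU\(^2\)) and dCorV are available. In the sketch below (hypothetical names), the `handle` argument reuses the truncation, absolute-value and signed conventions discussed for dCorU when \(\text{dCor}^2_\lambda\) is negative.

```python
import math


def dcor_lambda(lam, dcor_u, dcor_v):
    """dCor_lambda = lam * dCorU + (1 - lam) * dCorV for a weight lam in [0, 1]."""
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must lie in [0, 1]")
    return lam * dcor_u + (1.0 - lam) * dcor_v


def dcor_lambda_from_squares(lam, dcor_u_sq, dcor_v, handle="truncate"):
    """Square root of dCor^2_lambda = lam * dCorU^2 + (1 - lam) * dCorV^2.

    A negative combination is handled like dCorU: "truncate" (as in dCorU(T)),
    "abs" (as in dCorU(A)) or "sign" (signed square root).
    """
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must lie in [0, 1]")
    s = lam * dcor_u_sq + (1.0 - lam) * dcor_v ** 2
    if handle == "truncate":
        return math.sqrt(max(s, 0.0))
    if handle == "abs":
        return math.sqrt(abs(s))
    if handle == "sign":
        return math.copysign(math.sqrt(abs(s)), s)
    raise ValueError(f"unknown handle: {handle!r}")
```

For instance, with dCorU\(^2 = -0.01\), dCorV = 0.2 and \(\lambda = 0.5\), the combination of squares is \(0.015 > 0\), so \(\text{dCor}_\lambda\) is defined even though dCorU itself is not.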

For the parameter \(\lambda \), the optimal value \(\lambda _0\), which minimizes the mean squared error (MSE) of the new estimator, is proposed. As shown in Lemma 1, \(\lambda _0\) depends on unknown quantities: the variances, covariance and biases of dCorU and dCorV.

Lemma 1

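Let \(X \in \mathbb {R}^p\) and \(Y \in \mathbb {R}^q\) be random vectors, where p and q are positive integers, and define \(\hat{\theta }^U = \)dCorU(X,Y) and \(\hat{\theta }^V = \)dCorV(X,Y). For the convex linear combination \(\lambda \hat{\theta }^U + (1-\lambda )\hat{\theta }^V\), the value of \(\lambda \) that minimizes the MSE is \(\lambda _0\), defined as

\[
\lambda_0 = \frac{\mathrm{Var}(\hat{\theta}^V) - \mathrm{Cov}(\hat{\theta}^U,\hat{\theta}^V) + \mathrm{Bias}(\hat{\theta}^V)^2 - \mathrm{Bias}(\hat{\theta}^U)\,\mathrm{Bias}(\hat{\theta}^V)}{\mathrm{Var}(\hat{\theta}^U) + \mathrm{Var}(\hat{\theta}^V) - 2\,\mathrm{Cov}(\hat{\theta}^U,\hat{\theta}^V) + \big(\mathrm{Bias}(\hat{\theta}^U) - \mathrm{Bias}(\hat{\theta}^V)\big)^2},
\tag{8}
\]

where \(\mathrm{Bias}(\hat{\theta}) = E[\hat{\theta}] - \mathcal{R}(X,Y)\).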

Proof

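Let \(\hat{\theta }^C_{\lambda } = \lambda \hat{\theta }^U + (1-\lambda )\hat{\theta }^V\) be the convex linear combination. Then

\[
\mathrm{MSE}(\hat{\theta}^C_{\lambda}) = \mathrm{Var}(\hat{\theta}^C_{\lambda}) + \mathrm{Bias}(\hat{\theta}^C_{\lambda})^2,
\]

where

\[
\mathrm{Var}(\hat{\theta}^C_{\lambda}) = \lambda^2\,\mathrm{Var}(\hat{\theta}^U) + (1-\lambda)^2\,\mathrm{Var}(\hat{\theta}^V) + 2\lambda(1-\lambda)\,\mathrm{Cov}(\hat{\theta}^U,\hat{\theta}^V)
\]

and

\[
\mathrm{Bias}(\hat{\theta}^C_{\lambda}) = \lambda\,\mathrm{Bias}(\hat{\theta}^U) + (1-\lambda)\,\mathrm{Bias}(\hat{\theta}^V).
\]

Therefore,

\[
\mathrm{MSE}(\hat{\theta}^C_{\lambda}) = \lambda^2 M_U + (1-\lambda)^2 M_V + 2\lambda(1-\lambda)\,C_{UV},
\]

with \(M_U = \mathrm{Var}(\hat{\theta}^U) + \mathrm{Bias}(\hat{\theta}^U)^2\), \(M_V = \mathrm{Var}(\hat{\theta}^V) + \mathrm{Bias}(\hat{\theta}^V)^2\) and \(C_{UV} = \mathrm{Cov}(\hat{\theta}^U,\hat{\theta}^V) + \mathrm{Bias}(\hat{\theta}^U)\,\mathrm{Bias}(\hat{\theta}^V)\). The optimal value of \(\lambda \) is given by the solution to the following equation:

\[
\frac{\partial\,\mathrm{MSE}(\hat{\theta}^C_{\lambda})}{\partial\lambda} = 2\lambda M_U - 2(1-\lambda) M_V + 2(1-2\lambda)\,C_{UV} = 0 .
\]

Solving this equation yields \(\lambda_0 = (M_V - C_{UV})/(M_U + M_V - 2C_{UV})\), which is the expression of \(\lambda _0\) in (8). Since \(M_U + M_V - 2C_{UV} = E[(\hat{\theta}^U - \hat{\theta}^V)^2] \ge 0\), the MSE is a convex quadratic function of \(\lambda\), so this stationary point is a minimum whenever \(\hat{\theta}^U \neq \hat{\theta}^V\) with positive probability. \(\square \)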
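In a simulation, where \(\mathcal{R}\) is known, the variances, covariance and biases in (8) can be replaced by their Monte Carlo counterparts computed from paired replicates of dCorU and dCorV, as in the second scenario of Section 4.4. The sketch below (hypothetical name `optimal_lambda`) does this with 1/M moments, an assumption under which the plug-in value exactly minimizes the empirical MSE of the combination over the replicates. Clipping to [0, 1] is also our assumption; because the MSE is convex in \(\lambda\), the clipped value is the constrained minimizer.

```python
import statistics


def optimal_lambda(theta_u, theta_v, theta_true, clip=True):
    """Plug-in lambda_0 from (8) using paired replicate estimates of dCorU and dCorV.

    theta_u, theta_v: paired estimates (e.g. Monte Carlo replicates on the same samples).
    theta_true: the true distance correlation R, known in a simulation.
    """
    if len(theta_u) != len(theta_v) or not theta_u:
        raise ValueError("need two non-empty paired sequences")
    m = len(theta_u)
    mu_u, mu_v = statistics.fmean(theta_u), statistics.fmean(theta_v)
    var_u = statistics.pvariance(theta_u, mu_u)  # 1/M normalization
    var_v = statistics.pvariance(theta_v, mu_v)
    cov_uv = sum((a - mu_u) * (b - mu_v) for a, b in zip(theta_u, theta_v)) / m
    b_u, b_v = mu_u - theta_true, mu_v - theta_true  # Monte Carlo biases
    # Numerator and denominator of (8).
    num = var_v + b_v ** 2 - cov_uv - b_u * b_v
    den = var_u + var_v - 2.0 * cov_uv + (b_u - b_v) ** 2
    if den == 0.0:
        return 0.5  # both estimators coincide, so every lambda gives the same MSE
    lam = num / den
    return min(max(lam, 0.0), 1.0) if clip else lam
```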

Because the exact values of the terms in the expression of \(\lambda _0\) in (8) are unknown in practice, \(\lambda _0\) is estimated by bootstrap, specifically by the smoothed bootstrap (see Algorithm 1).

The smoothed bootstrap relies on a kernel function K (typically a density symmetric around zero) and a smoothing parameter \(h > 0\), referred to as the bandwidth; here there are two bandwidths, \(h_1\) and \(h_2\). The bandwidth controls the size of the neighborhood used for estimation. It is customary to require the kernel function K to be non-negative and to integrate to one, and K is usually also taken to be symmetric. While the choice of K has little influence on the properties of the estimator (apart from regularity conditions such as continuity or differentiability), the choice of the smoothing parameter is crucial for accurate estimation: the neighborhood used for nonparametric estimation must be neither too large nor too small.
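Algorithm 1 itself is not reproduced in this section, so the following is only a hypothetical sketch of how a smoothed-bootstrap estimate of \(\lambda_0\) could be organized for univariate X and Y. Pairs are resampled with replacement, each coordinate is perturbed by \(h_1\varepsilon\) or \(h_2\varepsilon\) with \(\varepsilon\) drawn from a Gaussian kernel K, dCorU (signed square root) and dCorV are recomputed, and the bootstrap moments are plugged into (8). The reference value used for the biases (the truncated dCorU of the original sample) and the clipping to [0, 1] are our assumptions, not taken from Algorithm 1.

```python
import math
import random
import statistics


def _dcov_sq(dx, dy, unbiased):
    """U_n^2 (U-centering, Definition 5) or V_n^2 (double centering, Definition 3)."""
    n = len(dx)

    def center(d):
        r = [sum(row) for row in d]  # row sums = column sums (symmetric matrix)
        t = sum(r)
        if unbiased:
            return [[0.0 if k == l else d[k][l] - (r[k] + r[l]) / (n - 2) + t / ((n - 1) * (n - 2))
                     for l in range(n)] for k in range(n)]
        return [[d[k][l] - (r[k] + r[l]) / n + t / n ** 2 for l in range(n)] for k in range(n)]

    a, b = center(dx), center(dy)
    s = sum(a[k][l] * b[k][l] for k in range(n) for l in range(n))
    return s / (n * (n - 3)) if unbiased else s / n ** 2


def dcor_pair(x, y):
    """(dCorU, dCorV) for univariate samples; dCorU uses the signed square root."""
    dx = [[abs(a - b) for b in x] for a in x]
    dy = [[abs(a - b) for b in y] for a in y]
    out = []
    for unbiased in (True, False):
        vxy, vx, vy = (_dcov_sq(p, q, unbiased) for p, q in ((dx, dy), (dx, dx), (dy, dy)))
        r2 = vxy / math.sqrt(vx * vy) if vx * vy > 0 else 0.0
        out.append(math.copysign(math.sqrt(abs(r2)), r2))
    return out[0], out[1]


def smoothed_bootstrap_lambda(x, y, h1, h2, n_boot=200, seed=0):
    """Hypothetical smoothed-bootstrap estimate of lambda_0 in (8), clipped to [0, 1]."""
    rng = random.Random(seed)
    n = len(x)
    theta_ref = max(dcor_pair(x, y)[0], 0.0)  # assumed stand-in for the unknown R
    us, vs = [], []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]           # resample pairs
        xb = [x[i] + h1 * rng.gauss(0.0, 1.0) for i in idx]  # Gaussian kernel, bandwidth h1
        yb = [y[i] + h2 * rng.gauss(0.0, 1.0) for i in idx]  # Gaussian kernel, bandwidth h2
        u, v = dcor_pair(xb, yb)
        us.append(u)
        vs.append(v)
    mu_u, mu_v = statistics.fmean(us), statistics.fmean(vs)
    var_u, var_v = statistics.pvariance(us, mu_u), statistics.pvariance(vs, mu_v)
    cov = sum((a - mu_u) * (b - mu_v) for a, b in zip(us, vs)) / n_boot
    b_u, b_v = mu_u - theta_ref, mu_v - theta_ref
    den = var_u + var_v - 2.0 * cov + (b_u - b_v) ** 2
    lam = (var_v + b_v ** 2 - cov - b_u * b_v) / den if den > 0 else 0.5
    return min(max(lam, 0.0), 1.0)
```

The cost is dominated by the \(\mathcal{O}(n^2)\) distance computations in each of the `n_boot` replicates; a fast \(\mathcal{O}(n \log n)\) implementation would be preferable for large samples.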

In R, the density() function of the stats package can be used to obtain a kernel density estimate, with the bandwidth determined by the bw argument. The available bandwidth selectors include Silverman's [37] and Scott's [38] rules of thumb, unbiased and biased cross-validation, and direct plug-in methods, among others; implementations from other packages can also be used.
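As an illustration of a rule-of-thumb bandwidth, the sketch below implements Silverman's rule \(h = 0.9\,\min(s, \mathrm{IQR}/1.34)\,n^{-1/5}\), the rule behind the default bw = "nrd0" of R's density(). The quartiles use Python's `statistics.quantiles` with `method="inclusive"`, which we assume matches R's default quantile definition; the fallback for a zero interquartile range is simplified relative to R.

```python
import statistics


def silverman_bandwidth(x):
    """Silverman's rule of thumb: h = 0.9 * min(sd, IQR / 1.34) * n^(-1/5)."""
    n = len(x)
    if n < 2:
        raise ValueError("need at least two observations")
    sd = statistics.stdev(x)
    q1, _, q3 = statistics.quantiles(x, n=4, method="inclusive")
    spread = min(sd, (q3 - q1) / 1.34)
    if spread <= 0:  # e.g. IQR = 0 because of repeated values: fall back to sd
        spread = sd
    if spread <= 0:
        raise ValueError("bandwidth undefined for constant data")
    return 0.9 * spread * n ** (-0.2)
```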

4 Simulation study

The simulation study uses the distcor function of the dcortools package. To estimate the distance correlation through \(\textrm{dCorU}\), the code used is distcor(X,Y,bias.corr = T). For computing \(\textrm{dCorV}\), there are two options: distcor(X,Y,bias.corr = F) or simply distcor(X,Y). The study is divided into three main parts: first, a comparison between the original estimators; second, a comparison between the dCorU estimator and the alternatives proposed to mitigate negative values under independence or very weak dependence and small sample sizes; and finally, a comprehensive comparison encompassing all the scenarios, including the original estimators, the proposed alternatives for dCorU, and the proposed convex linear combination.

For each scenario, the mean, bias, variance and mean squared error (MSE) are computed (see Appendix A). Each simulation uses 1000 Monte Carlo iterations and three sample sizes: 100, 1000 and 10000. To compare the efficiency (MSE) and computational time of each estimator, three models (FGM, bivariate normal and a non-linear model) with varying levels of dependence are considered, defined as follows.
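The Monte Carlo summaries can be computed as below. This is a minimal sketch with a hypothetical name; the 1/M normalization of the variance is our assumption (the paper's Appendix A may use another convention), chosen so that MSE = variance + bias\(^2\) holds exactly up to rounding.

```python
import statistics


def mc_summary(estimates, true_value):
    """Monte Carlo mean, bias, variance (1/M) and MSE of an estimator."""
    est = [float(e) for e in estimates]
    mean = statistics.fmean(est)
    bias = mean - true_value
    variance = statistics.pvariance(est, mean)
    mse = statistics.fmean([(e - true_value) ** 2 for e in est])
    return {"mean": mean, "bias": bias, "variance": variance, "mse": mse}
```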

4.1 Models

FGM model. The first model corresponds to a copula. One of the most popular parametric families of copulas is the Farlie-Gumbel-Morgenstern (FGM) family, which is defined by

with copula density given by

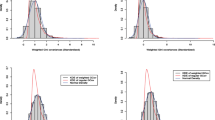

where X and Y have U(0,1) marginal distributions. A well-known limitation of this family is that it cannot model strong dependence, since Pearson's correlation coefficient is restricted to \(\rho = \frac{\theta }{3}\in \left[ -\frac{1}{3},\frac{1}{3}\right] \). The exact distance covariance is obtained from (2), with the density given by (9), and \(\mathcal {V}(X)\) and \(\mathcal {V}(Y)\) are computed analogously. This yields \(\mathcal {R}(X,Y) = \frac{|\theta |}{\sqrt{10}}\), so the dependence is never strong: \(0 \le \mathcal {R}\le 1/\sqrt{10} \approx 0.3162\) (see Fig. 1).
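To simulate from the FGM model, the copula \(C(u,v) = uv[1 + \theta(1-u)(1-v)]\) can be sampled by conditional inversion: given \(U = u\), the conditional distribution function of V is \(\partial C/\partial u = v + \theta v(1-v)(1-2u)\), a quadratic in v that can be inverted in closed form. The sketch below (hypothetical names, not the authors' simulation code) uses the numerically stable root \(v = 2w/\big((1+a) + \sqrt{(1+a)^2 - 4aw}\big)\) with \(a = \theta(1-2u)\) and \(w \sim U(0,1)\), and returns the exact value \(\mathcal{R} = |\theta|/\sqrt{10}\) stated above.

```python
import math
import random


def fgm_exact_dcor(theta):
    """Exact distance correlation of the FGM copula, R = |theta| / sqrt(10), as stated above."""
    if not -1.0 <= theta <= 1.0:
        raise ValueError("theta must lie in [-1, 1]")
    return abs(theta) / math.sqrt(10.0)


def sample_fgm(n, theta, seed=None):
    """Draw n pairs (U, V) from the FGM copula by conditional inversion."""
    if not -1.0 <= theta <= 1.0:
        raise ValueError("theta must lie in [-1, 1]")
    rng = random.Random(seed)
    pairs = []
    for _ in range(n):
        u, w = rng.random(), rng.random()
        a = theta * (1.0 - 2.0 * u)
        # Root in [0, 1] of a*v**2 - (1 + a)*v + w = 0, written in a form that
        # avoids dividing by a (and reduces to v = w when a = 0).
        denom = (1.0 + a) + math.sqrt((1.0 + a) ** 2 - 4.0 * a * w)
        v = 2.0 * w / denom if denom > 0 else 0.0
        pairs.append((u, v))
    return pairs
```

As a sanity check, \(E[UV] = 1/4 + \theta/36\) under this copula, which matches \(\rho = \theta/3\) for U(0,1) marginals.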

Bivariate normal model. The exact distance correlation in this case is provided by [1] as a function of the Pearson correlation coefficient \(\rho\). If (X, Y) has a bivariate normal distribution with unit variances, the squared distance correlation is given by

\[
\mathcal{R}^2(X,Y) = \frac{\rho\arcsin\rho + \sqrt{1-\rho^2} - \rho\arcsin(\rho/2) - \sqrt{4-\rho^2} + 1}{1 + \pi/3 - \sqrt{3}} .
\]

In contrast to the previous model, the bivariate normal model allows complete dependence, resulting in \(\mathcal {R}= 1\) when \(\rho =1\) or \(\rho =-1\). This feature allows the performance of the estimators to be evaluated under high levels of dependence.
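The exact value for the bivariate normal model is easy to evaluate numerically. The sketch below (hypothetical names) implements the formula of [1] and a sampler based on \(Y = \rho X + \sqrt{1-\rho^2}\,Z\) with independent standard normal X and Z. For example, the formula gives \(\mathcal{R} \approx 0.224\) for \(\rho = 0.25\) and \(\mathcal{R} \approx 0.454\) for \(\rho = 0.5\), and \(\mathcal{R} = 1\) for \(\rho = \pm 1\).

```python
import math
import random


def bvn_exact_dcor(rho):
    """Exact R(X, Y) for a bivariate normal with unit variances and correlation rho."""
    if not -1.0 <= rho <= 1.0:
        raise ValueError("rho must lie in [-1, 1]")
    num = (rho * math.asin(rho) + math.sqrt(1.0 - rho ** 2)
           - rho * math.asin(rho / 2.0) - math.sqrt(4.0 - rho ** 2) + 1.0)
    den = 1.0 + math.pi / 3.0 - math.sqrt(3.0)
    return math.sqrt(max(num / den, 0.0))  # max() guards against round-off near rho = 0


def sample_bvn(n, rho, seed=None):
    """n pairs from a standard bivariate normal via Y = rho*X + sqrt(1 - rho^2)*Z."""
    rng = random.Random(seed)
    s = math.sqrt(1.0 - rho ** 2)
    out = []
    for _ in range(n):
        x = rng.gauss(0.0, 1.0)
        out.append((x, rho * x + s * rng.gauss(0.0, 1.0)))
    return out
```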

Nonlinear model. Let (X, Y) be a bivariate random variable with density

where \(k \in \mathbb {Z}\) controls the degree of dependence (which increases with k) and c is a constant that depends on k. For this model, the values k = 0 (independence), 2, 4, 8 and 16 (strong dependence) are used. Even at a relatively low level of dependence, such as \(k = 4\), the structure of the model is clearly visible (see Fig. 1). Importantly, in this model the distance correlation under total dependence (i.e. as \(k \rightarrow \infty \)) is not 1 but \(\mathcal {R}\approx 0.41\). Thus, unlike in the linear models (FGM and bivariate normal), low values of \(\mathcal {R}\) (\(0.22 \le \mathcal {R}\le 0.41\)) can indicate a strong relationship between X and Y. Figure 1 shows four samples from the model together with their respective distance correlations; the lines represent the conditional mean \(E[Y|X = x]\).

4.2 Comparison between dCorU and dCorV estimators

This part of the simulation study compares the original estimators, dCorU and dCorV. Figure 2 shows the MSE of dCorU and dCorV for the three models, across the different sample sizes and distance correlation values. Because the dcortools package is used, dCorU is computed as dCorU = sign(dCorU\(^2\)) \(\sqrt{|\text {dCorU}^2|}\).

For the FGM model (Fig. 2a), the differences between the two estimators are pronounced for all values of \(\theta \). Under independence (\(\mathcal {R}= 0\)), dCorU outperforms dCorV in terms of MSE for all three sample sizes. However, when there is dependence, even weak, dCorV performs better. As the level of dependence increases, the MSE of both estimators decreases and the differences vanish.

The MSE obtained for the bivariate normal model is shown in Fig. 2b. The conclusions are similar to those for the previous model. Under independence (\(\mathcal {R}= 0\)), dCorU is the better estimator. However, as soon as there is any dependence, the opposite holds, and dCorV is the better option. As dependence increases (\(\mathcal {R}\ge 0.454\)), sample size becomes less influential, and both estimators converge to the same value. Furthermore, for larger sample sizes, a moderate dependence (\(\mathcal {R}> 0.224\)) is sufficient for the two estimators to behave similarly. Under complete dependence (\(\mathcal {R}= 1\)), the estimates were the same for each sample size.

The results for the non-linear model are shown in Fig. 2c. As in the previous models, under independence the best estimate is provided by dCorU. However, dCorV is not systematically the better choice at any specific level of dependence. As in the previous models, both estimators behave similarly for large sample sizes and under strong dependence.

The bias and variance of both estimators are presented in Appendix A and are consistent with the conclusions drawn from the MSE analysis. In particular, the largest difference between the two estimators is observed for a small sample size (\(n = 100\)) under weak dependence or independence: dCorU exhibits a negative bias that approaches zero as the level of dependence increases, while dCorV also shows a decreasing bias, although it is always positive. The variance of dCorV is smaller than that of dCorU. In contrast, for larger sample sizes (\(n = 1000, 10000\)), the differences between the two estimators are negligible.

The computational times for each estimator are shown in Table 1. The characteristics of the computer equipment used are as follows: Intel(R) Core(TM) i7-1280P 12th Gen CPU with 2.00 GHz and 16 GB RAM. There are no significant differences in the computation times between the estimators. It is important to highlight that these times were obtained with the dcortools package, with which both the U-statistic and the V-statistic of distance correlation can be calculated in \(\mathcal {O}(n \log n)\) steps. In fact, the algorithm in [3] can be straightforwardly extended to the V-statistic version. If another package were used, for example the energy package, the computation times of both methods would be different.

4.3 Alternatives to dCorU

As mentioned above, under independence or very weak dependence, the dCorU estimator often cannot be computed because the estimate of the squared distance covariance is negative. Table 2 presents the percentage of negative values obtained for different sample sizes across the three models under varying levels of dependence. This section compares the dCorU estimator with the alternatives proposed in Section 2, namely dCorU(A) and dCorU(T). Figure 3 compares the MSE of dCorU and the proposed alternatives for each model.

The FGM model produces negative values for all the studied values of \(\theta \) when \(n = 100\). As the sample size increases, the problem of negative values only appears when the dependence is very weak (\(n = 100\)) or under independence (\(n = 10000\)), see Table 2. Despite the high percentage of negative values under independence, the differences between the estimators are small. Under independence, dCorU(T) gives the most accurate estimates, because truncating negative values at zero moves them toward the true value \(\mathcal {R}= 0\). Conversely, under dependence, dCorU(A) is more accurate. As the dependence strengthens, the MSE values of the three estimators converge.

In the case of the bivariate normal model (Fig. 3), negative values occur only under independence (\(\mathcal {R}= 0\)) or weak dependence (\(\mathcal {R}= 0.22\)), both with \(n=100\). Under strong dependence or with large sample sizes, the computation of dCorU does not suffer from negative values (at least with \(n \ge 100\)). The conclusions are in line with those for the previous model: under independence, dCorU(T) is the best estimator, while in one other scenario dCorU(A) is. In the remaining cases, all three estimators yield comparable MSE. Furthermore, for \(n=10000\), negative values arise in the independence scenario (Table 2), but the differences remain negligible.

Finally, in the case of the non-linear model, negative values are observed for weak dependence scenarios (\(k = 0,2,4\)) with \(n = 100\). However, as the sample size increases (\(n = 1000, 10000\)), the problem only arises under independence (\(k = 0\)). It is important to highlight that the conclusions are similar to those in the two previous cases. Under independence, the optimal estimator is dCorU(T), whereas in one of the other cases, it is dCorU(A). For the remaining cases, all three estimators yield the same MSE (Fig. 3).

Fig. 3 MSE values for dCorU, dCorU with absolute value (dCorU(A)) and truncated value (dCorU(T)) across three sample sizes (n) and three models: the FGM model in the first row, the bivariate normal model in the second row and the nonlinear model in the last row. The corresponding distance correlation values are also shown.

4.4 New estimator for distance correlation

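This section compares the original estimators (dCorU and dCorV) and the proposed variants dCorU(A) and dCorU(T) with their corresponding convex combinations, computed with the optimal value of the parameter, \(\lambda _0\) (8), as follows:

\[
\text{dCor}_{\lambda_0} = \lambda_0\,\text{dCorU} + (1-\lambda_0)\,\text{dCorV}, \qquad
\lambda_0\,\text{dCorU(A)} + (1-\lambda_0)\,\text{dCorV}, \qquad
\lambda_0\,\text{dCorU(T)} + (1-\lambda_0)\,\text{dCorV}.
\]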

The comparisons are conducted across two scenarios for the convex linear combination. The first scenario involves estimating the optimal \(\lambda _0\) (8) using Algorithm 1, while the second scenario utilizes the optimal value of \(\lambda _0\) in Lemma 1, where the bias, variance and covariance terms in (8) are approximated using 1000 Monte Carlo repetitions. The study focuses on the behavior of the MSE in each model under different levels of dependence and with a sample size \(n = 100\) (see Fig. 4).

The value of \(\hat{\lambda }_0\) was obtained using Algorithm 1 with 1000 bootstrap iterations. For simplicity, the bandwidths were set to \(h_1 = h_2\), and the value on a grid between 0.0025 and 0.32 with the lowest MSE was chosen. A simulation study of the effect of the bandwidths is included in Appendix A. The bandwidth has a noticeable effect only under independence, where lower bandwidth values give lower MSE. When the level of dependence is moderate, the impact of the bandwidth is smaller, and under strong dependence the results for different bandwidth values converge.

Comparison of the MSE of the different estimators and their respective convex linear combinations, with sample size \(n = 100\). Specifically, \(\text {dCor}_{\hat{\lambda }_0}\) denotes the combination \(\hat{\lambda }_0\)dCorU + \(\left( 1 - \hat{\lambda }_0\right) \)dCorV with \(\hat{\lambda }_0\) estimated using 1000 bootstrap replications. Similarly, \(\text {dCor}_{\lambda _0}\) denotes the combination \(\lambda _0\)dCorU + \((1 - \lambda _0)\)dCorV using the real optimal value of \(\lambda _0\) (8). The same naming convention applies to dCorU(A) and dCorU(T) and their respective combinations. The \(\mathcal {R}\) values shown are rounded from the corresponding values of each model

In Fig. 4, the maximum value of the distance correlation considered is \(\mathcal {R}= 0.31\), the maximum achievable in the FGM model. Note that, even though \(\mathcal {R}\) is approximately the same for the three models, the degree of dependence associated with each value of \(\mathcal {R}\) differs across scenarios, especially for the non-linear model. Consequently, the behavior of the estimators is not comparable across models for a fixed value of \(\mathcal {R}\). The main observations are the following. Under independence (\(\mathcal {R}= 0\)), the proposed estimator \(\text {dCor}_{\lambda _0}\) outperforms dCorU and dCorV when the optimal \(\lambda _0\) is used. Its performance worsens slightly when \(\lambda _0\) is estimated, but it still gives much better results than dCorV. As soon as there is dependence (\(\mathcal {R}> 0\)), the proposed estimator with estimated \(\lambda _0\) gives results comparable to those obtained with the optimal \(\lambda _0\), and it improves on both dCorU and dCorV.

These conclusions can be observed for each of the models in Fig. 13, where the largest differences between the estimators occur under independence. Conversely, as dependence increases, the estimates become more comparable. Specifically, the nonlinear model exhibits a decrease in MSE as \(\mathcal {R}\) increases, whereas the linear models do not show such a marked decrease.

Figure 5 shows the MSE for each model under varying levels of dependence, from independence (\(\mathcal {R}= 0\)) up to the maximum dependence permitted by each model. Note that the nonlinear model reaches a maximum \(\mathcal {R}\) of about 0.4134, which corresponds to complete dependence between X and Y. The behavior observed in the non-linear model is also evident in the bivariate normal model, where the MSE remains quite similar across all cases from \(\mathcal {R}= 0.75\) onward. Moreover, the MSE decreases in each case as \(\mathcal {R}\) increases, eventually reaching 0 under complete dependence (\(\mathcal {R}= 1\)). This is not the case for the nonlinear model, where the MSE does not reach zero in the full-dependence scenario (\(\mathcal {R}= 0.41\)).

It is important to mention that in cases of independence or very low dependence, dCorU(T) and its combination with \(\lambda _0\) exhibit the lowest MSE. Additionally, it is worth noting that the bootstrap approximation of \(\lambda _0\) shows larger discrepancies from the actual value in scenarios of independence or low dependence (see Appendix A). However, when dependence is low (approximately \(\mathcal {R}= 0.1\)), dCorU(A) and its convex combinations \(\left( \text {dCor(A)}_{\lambda _0} \text {and } \text {dCor(A)}_{\hat{\lambda }_0}\right) \) result in a lower MSE. Under moderate or high dependence (\(\mathcal {R}\ge 0.24\)), convex linear combinations tend to converge to the same MSE, consistently approaching the best estimate provided by the individual estimators (dCorU, dCorV, dCorU(A), dCorU(T)).

The convex linear combination using the value \(\hat{\lambda }_0\) obtained through 1000 bootstrap repetitions consistently approximates the best estimates provided by the original estimators (dCorU, dCorV) across all the scenarios examined. Although some alternative combinations yield slightly smaller errors under specific conditions, in practice it may not be possible to distinguish between independence and very low dependence.

5 Application to real data

Rheumatoid arthritis (RA) is a chronic systemic inflammatory disease leading to joint inflammation, functional disability, deformities, and a shortened life expectancy. The goal of treatment in RA is remission in the early stage of the disease before patients develop permanent deformities. Therefore, it is important to identify predictors of relapse, so that physicians can individualize the treatment plan [39].

Obesity is currently thought to be related to flare-ups in rheumatoid arthritis patients who are in remission. To study this hypothesis, a database from the A Coruña University Hospital Complex (CHUAC, Complejo Hospitalario Universitario de A Coruña, Spain) was used. A clinical trial was conducted on 196 patients in remission, who were randomly assigned to a control group or an experimental group to study their response to a reduction in treatment. The control group consisted of 99 patients who received the treatment in the usual manner, whereas in the experimental group the treatment was gradually reduced until it was completely withdrawn.

There were 375 covariates, including age, sex, weight, and genetic information. To study the effect of overweight and obesity on flare-ups, the following information was used:

- Body mass index (BMI): a measure of a person's leanness or corpulence based on height and weight, intended to quantify tissue mass. This information is available at two time points, the beginning of the study and the patient's last visit; the baseline values were used for the dependence study.

- Disease Activity Score in 28 joints (DAS28): a continuous measure of RA disease activity that combines information from swollen joints, tender joints, the acute phase response and general health. As with BMI, DAS28 was recorded at the start of treatment and at the patient's last visit. Here, the difference between the DAS28 scores at the last visit and at the beginning was used to assess whether the score changed between the two time points. The variants DAS28V.CRP, DAS28V.ESR, and DAS28CRP were used in the same way; they differ only in how they are calculated. DAS28-CRP uses the C-reactive protein level, DAS28-ESR uses the erythrocyte sedimentation rate, and DAS28.V indicates that the patient assessment is not included in the calculation.

- Simplified Disease Activity Index (SDAI): a composite index used to assess disease activity in RA. It combines several clinical and laboratory measures to provide a comprehensive evaluation of disease severity, and it is used in the same way as DAS28, comparing the values at the last visit and at the start of the study.

Figure 6 shows the relationship between BMI and the difference in DAS28 (last visit minus start of the study) and in its variants. Patients are shown in two colors according to their group (control or experimental). Similarly, Fig. 7 shows the relationship between the SDAI and the BMI at the start of the study.

Table 3 shows the proportion of patients with a normal weight (BMI < 25), overweight (25 \(\le \) BMI < 30) or obesity (BMI \(\ge \) 30) according to the BMI.
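The BMI categories of Table 3 follow from the usual definition, BMI = weight (kg) / height² (m²). A small Python sketch with hypothetical helper names; note that, as in Table 3, every value below 25 is labeled normal weight (no separate underweight class).

```python
def bmi(weight_kg, height_m):
    """Body mass index: weight in kilograms divided by squared height in meters."""
    return weight_kg / height_m ** 2


def bmi_category(value):
    """Categories of Table 3: normal (< 25), overweight [25, 30), obesity (>= 30)."""
    if value < 25:
        return "normal"
    if value < 30:
        return "overweight"
    return "obesity"


print(bmi_category(bmi(70, 1.75)))
```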

To study whether overweight and/or obesity had any influence on the increase in DAS28, its variants and SDAI, which could indicate that a flare-up was experienced, the distance correlation was computed using both estimators and the proposed convex combination, and it was compared with Pearson's correlation coefficient (see Table 4). Additionally, the dependence between BMI at the beginning of treatment and the vector formed by all the flare-up indicators was studied. In this case, Pearson's coefficient could not be computed, since it is defined only for pairs of scalar variables.
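Because distance correlation only needs pairwise distances within each sample, a scalar such as BMI can be paired with a vector of indicator changes. The minimal Python sketch below computes the V-statistic version (dCorV) for an \(n \times 1\) sample and an \(n \times q\) sample; the function name is hypothetical, and the simulated values are placeholders, not the CHUAC data.

```python
import numpy as np


def dcor_v(x, y):
    """V-statistic distance correlation for samples x (n x p) and y (n x q)."""
    def centered(z):
        z = np.asarray(z, dtype=float).reshape(len(z), -1)          # 1-D input -> n x 1
        a = np.linalg.norm(z[:, None, :] - z[None, :, :], axis=-1)  # Euclidean distances
        return a - a.mean(0, keepdims=True) - a.mean(1, keepdims=True) + a.mean()

    a, b = centered(x), centered(y)
    r = (a * b).mean() / np.sqrt((a * a).mean() * (b * b).mean())
    return float(np.sqrt(max(r, 0.0)))


# Placeholder data: one scalar covariate and changes in five indicators.
rng = np.random.default_rng(0)
bmi_values = rng.normal(27, 4, size=196)
changes = rng.normal(size=(196, 5))
print(round(dcor_v(bmi_values, changes), 3))
```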

The estimates from the two estimators (dCorU and dCorV) differed in most cases. The most noteworthy difference was observed in the control group, where dCorU was consistently negative. However, in the majority of cases, the convex combination was equal to dCorV. As mentioned earlier, small values of distance correlation do not necessarily imply a weak relationship, as total dependence does not always correspond to a distance correlation of 1. On the other hand, a Pearson correlation coefficient close to zero does not necessarily indicate independence. Moreover, the Pearson coefficient was always lower than the distance correlation, suggesting that the dependence relationship is better captured by dCor. From the available information, it can be concluded that the change in DAS28 was not entirely independent of BMI, although the dependence did not appear to be very strong. The dependence seemed stronger in the experimental group.

On the other hand, the proportions of people with a normal weight or overweight were very similar in both groups (control and experimental). However, the dependence between BMI and the difference in DAS28/SDAI was greater in the experimental group. From a medical perspective, this suggests a higher risk of changes in DAS28/SDAI when BMI was outside the normal range and the patient belonged to the experimental group. This result might also suggest a dependence between group membership and the change in DAS28/SDAI, that is, a difference between belonging to the control group and to the experimental group. Table 5 shows the distance correlation between the group (control, experimental) and the change in each indicator of rheumatoid arthritis activity. There is a certain dependence between group membership and the occurrence of a change, particularly in DAS28.

Finally, one of the advantages of distance correlation is that it can measure the dependence between vectors of different dimensions. In this study, BMI showed a certain dependence on the change in the different variables describing the behavior of RA (see Table 4). This dependence was observed with all the data as well as within each group (control and experimental). When distance correlation does not clearly indicate independence or strong dependence, a more thorough study with hypothesis tests is advisable; for example, [1] proposed a test of independence based on distance covariance.
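One common way to carry out such a test is by permutation, using the statistic \(n\,\mathcal {V}_n^2(X,Y)\) and re-pairing the samples at random; [1] also derives asymptotic results that are not shown here. The Python sketch below is a minimal permutation version under these assumptions (NumPy only, hypothetical function names, `n_perm` chosen by the user), not a reproduction of any package's implementation.

```python
import numpy as np


def _centered(z):
    """Double-centered Euclidean distance matrix of an n x p sample."""
    z = np.asarray(z, dtype=float).reshape(len(z), -1)
    a = np.linalg.norm(z[:, None, :] - z[None, :, :], axis=-1)
    return a - a.mean(0, keepdims=True) - a.mean(1, keepdims=True) + a.mean()


def dcov_perm_test(x, y, n_perm=499, seed=0):
    """Permutation test of independence with statistic n * V_n^2(X, Y)."""
    rng = np.random.default_rng(seed)
    a, b = _centered(x), _centered(y)
    n = a.shape[0]
    stat = n * (a * b).mean()
    count = 0
    for _ in range(n_perm):
        p = rng.permutation(n)
        # Permuting the Y sample permutes rows and columns of its centered matrix.
        count += n * (a * b[np.ix_(p, p)]).mean() >= stat
    return float(stat), float((count + 1) / (n_perm + 1))
```

Adding one to both the count and the number of permutations keeps the p-value strictly positive, a usual convention for Monte Carlo p-values.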

6 Conclusions

This research addressed the problem of choosing the best method to estimate the distance correlation between two vectors X and Y. Under independence, dCorU seemed preferable to dCorV for all the models and sample sizes analyzed. However, \(\mathcal {U}_n^2(\varvec{\textrm{X},\textrm{Y}})\) might be negative under independence or low dependence, particularly with small sample sizes, making it impossible to compute dCorU. This observation prompted two alternative proposals, based on truncating \(\mathcal {U}_n^2(\varvec{\textrm{X},\textrm{Y}})\) or taking its absolute value. Under independence, truncation generally gives the best estimates.

In contrast, under dependence, the conclusions differ. The dCorV estimator gives the best results in terms of MSE for the linear models (FGM and bivariate normal). For the non-linear model, dCorU provides better estimates, although the differences are small. Between the two alternatives, dCorU(A) and dCorU(T), the better estimator appeared to be dCorU(A), especially under weak dependence. In terms of computational time, both were similar.

In practice, it is difficult to choose the best estimator of the distance correlation, as the choice depends on whether the relationship between X and Y is linear and whether X and Y are independent, questions that usually remain unanswered in real-life studies. To address this, a new estimator was proposed, based on the convex linear combination of the two estimators dCorU and dCorV, as well as of their extensions dCorU(A) and dCorU(T). In most cases, the proposed estimator with the parameter \(\lambda _0\) estimated by bootstrap, \(\text {dCor}_{\hat{\lambda }_0}\), yielded better results than dCorU or dCorV alone. Note, however, that the computation time increases with the number of bootstrap iterations.

Data Availability and Access

The datasets generated and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Székely GJ, Rizzo ML, Bakirov NK (2007) Measuring and testing dependence by correlation of distances. Ann Stat 35:2769–2794. https://doi.org/10.1214/009053607000000505

Székely GJ, Rizzo ML (2014) Partial Distance Correlation with Methods for Dissimilarities. Ann Stat 42:2382–2412. https://doi.org/10.1214/14-AOS1255

Huo X, Székely GJ (2016) Fast Computing for Distance Covariance. Technometrics 58:435–447. https://doi.org/10.1080/00401706.2015.1054435

Székely GJ, Rizzo ML (2009) Brownian Distance Covariance. Ann Appl Stat 3:1236–1265. https://doi.org/10.1214/09-AOAS312

Székely GJ, Rizzo ML (2012) On the uniqueness of distance covariance. Stat Probab Lett 82:2278–2282. https://doi.org/10.1016/j.spl.2012.08.007

Székely GJ, Rizzo ML (2013) The distance correlation t-test of independence in high dimension. J Multivar Anal 117:193–213. https://doi.org/10.1016/j.jmva.2013.02.012

Yenigün CD, Rizzo ML (2015) Variable selection in regression using maximal correlation and distance correlation. J Stat Comput Simul 85:1692–1705. https://doi.org/10.1080/00949655.2014.895354

Febrero-Bande M, González-Manteiga W, Oviedo De La Fuente M (2019) Variable selection in functional additive regression models. Comput Stat 34:469–487. https://doi.org/10.1007/s00180-018-0844-5

Yang B, Yin X, Zhang N (2019) Sufficient variable selection using independence measures for continuous response. J Multivar Anal 173:480–493. https://doi.org/10.1016/j.jmva.2019.04.006

Wu R, Chen X. MM algorithms for distance covariance based sufficient dimension reduction and sufficient variable selection. Comput Stat Data Anal 155:107089. https://doi.org/10.1016/j.csda.2020.107089

Sun J, Herazo-Maya JD, Huang X, Kaminski N, Zhao H (2018) Distance-correlation based gene set analysis in longitudinal studies. Stat Appl Genet Mol Biol 17:20170053. https://doi.org/10.1515/sagmb-2017-0053

Brankovic A, Hosseini M, Piroddi L (2018) A distributed feature selection algorithm based on distance correlation with an application to microarrays. IEEE/ACM Trans Comput Biol Bioinforma 16:1802–1815. https://doi.org/10.1109/TCBB.2018.2833482

Guo Y, Wu C, Guo M, Liu X, Keinan A (2018) Gene-based nonparametric testing of interactions using distance correlation coefficient in case-control association studies. Genes 9(12):608. https://doi.org/10.3390/genes9120608

Hu W, Zhang A, Cai B, Calhoun V, Wang YP (2019) Distance canonical correlation analysis with application to an imaging-genetic study. J Med Imaging 6:026501. https://doi.org/10.1117/1.JMI.6.2.026501

Yao S, Zhang X, Shao X (2018) Testing mutual independence in high dimension via distance covariance. J R Stat Soc Ser B Stat Methodol 80:455–480. https://doi.org/10.1111/rssb.12259

Lu S, Chen X, Wang H (2021) Conditional distance correlation sure independence screening for ultra-high dimensional survival data. Commun Stat - Theory Methods 50:1936–1953. https://doi.org/10.1080/03610926.2019.1657454

Wang X, Pan W, Hu W, Tian Y, Zhang H (2015) Conditional distance correlation. J Am Stat Assoc 110:1726–1734. https://doi.org/10.1080/01621459.2014.993081

Lu J, Lin L (2020) Model-free conditional screening via conditional distance correlation. Stat Papers 61:225–244. https://doi.org/10.1007/s00362-017-0931-7

Cui H, Liu Y, Mao G, Zhang J (2022) Model-free conditional screening for ultrahigh-dimensional survival data via conditional distance correlation. Biom J 65(3):2200089. https://doi.org/10.1002/bimj.202200089

Chen LP (2021) Feature screening based on distance correlation for ultrahigh-dimensional censored data with covariate measurement error. Comput Stat 36:857–884. https://doi.org/10.1007/s00180-020-01039-2

Chen X, Chen X, Wang H (2018) Robust feature screening for ultra-high dimensional right censored data via distance correlation. Comput Stat Data Anal 119:118–138. https://doi.org/10.1016/j.csda.2017.10.004

Chen LP (2022) Ultrahigh-dimensional sufficient dimension reduction for censored data with measurement error in covariates. J Appl Stat 49:1154–1178. https://doi.org/10.1080/02664763.2020.1856352

Edelmann D, Welchowski T, Benner A (2022) A consistent version of distance covariance for right-censored survival data and its application in hypothesis testing. Biometrics 78:867–879. https://doi.org/10.1111/biom.13470

Zhang J, Liu Y, Cui H (2021) Model-free feature screening via distance correlation for ultrahigh dimensional survival data. Stat Papers 62:2711–2738. https://doi.org/10.1007/s00362-020-01210-3

Edelmann D, Mori TF, Székely GJ (2021) On relationships between the Pearson and the distance correlation coefficients. Stat Probab Lett 169:108960. https://doi.org/10.1016/j.spl.2020.108960

Chaudhuri A, Hu W (2019) A fast algorithm for computing distance correlation. Comput Stat Data Anal 135:15–24. https://doi.org/10.1016/j.csda.2019.01.016

Rizzo ML, Székely GJ (2022) energy: E-Statistics: Multivariate Inference via the Energy of Data. https://CRAN.R-project.org/package=energy, R package version 1.7-11

Papadakis M, Tsagris M, Dimitriadis M, Fafalios S, Tsamardinos I, Fasiolo M, Borboudakis G, Burkardt J, Zou C, Lakiotaki K, Chatzipantsiou C (2022) Rfast: A Collection of Efficient and Extremely Fast R Functions. https://CRAN.R-project.org/package=Rfast, R package version 2.0.6

Edelmann D, Fiedler J (2022) dcortools: Providing Fast and Flexible Functions for Distance Correlation Analysis. https://CRAN.R-project.org/package=dcortools, R package version 0.1.6

R Core Team (2022) R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing. Vienna, Austria. https://www.R-project.org/

Van Rossum G, Drake Jr FL (1995) Python reference manual

Ramos-Carreño C, Torrecilla JL (2023) dcor: Distance correlation and energy statistics in Python. SoftwareX 22:101326. https://doi.org/10.1016/j.softx.2023.101326

Ramos-Carreño C (2022) dcor: distance correlation and energy statistics in Python. https://pypi.org/project/dcor/

Seabold S, Perktold J (2010) Statsmodels: Econometric and statistical modeling with Python. In: 9th Python in Science Conference

Panda S, Palaniappan S, Xiong J, Bridgeford E, Mehta R, Shen C (2021) hyppo: A multivariate hypothesis testing Python package. https://github.com/neurodata/hyppo

Vallat R (2018) Pingouin: statistics in Python. J Open Source Softw 3:1026. https://doi.org/10.21105/joss.01026

Silverman BW (1986) Density estimation. Chapman Hall, London

Scott DW (1992) Multivariate Density Estimation, Theory, Practice and Visualization. Wiley, New York

Katchamart W, Johnson S, Lin H, Phumethum V, Salliot C, Bombardier C (2010) Predictors for remission in rheumatoid arthritis patients: a systematic review. Arthritis Care Res 62:1128–1143. https://doi.org/10.1002/acr.20188

Acknowledgements

The authors would like to thank Dr. Francisco J. Blanco and Vanesa Balboa for their comments on the interpretation of the results obtained in the real-data application. We thank the three reviewers and the editor for their insightful suggestions and comments, which have led to a great improvement of this manuscript.

Funding

This research was supported by the International, Interdisciplinary and Intersectoral Information and Communications Technology PhD programme (3-i ICT) granted to CITIC and supported by the European Union through the Horizon 2020 research and innovation programme under a Marie Sklodowska-Curie agreement (H2020-MSCA-COFUND).

Author information


Contributions

Formal analysis, investigation and visualization: Blanca E. Monroy-Castillo; Writing - original draft preparation: Blanca E. Monroy-Castillo; Writing - review and editing: M. Amalia Jácome, Ricardo Cao; Supervision and discussion: M. Amalia Jácome, Ricardo Cao.


Ethics declarations

Conflict of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Ethical and Informed Consent for Data Used

Clinical trial 2012-004482-40, titled “Evaluation of the clinical utility of a standardized protocol of tapering strategies for patients with rheumatoid arthritis (RA) in persistent clinical remission on biologic therapy: an open-label, randomized, controlled study” by Francisco J. Blanco García.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A Simulation study


This appendix showcases the outcomes investigated and discussed in Section 4. These results are illustrated through various plots depicting bias, variance, and Mean Squared Error (MSE) across the different comparisons explored. Additionally, numerical summaries for the mean, variance, bias, and MSE are provided in tabular format for each model across various scenarios.

A.1 Bias and variance

This section presents the bias and variance results for each model across five levels of dependence and three sample sizes (\(n = 100, 1000, 10000\)). These results have been discussed in detail in Section 4 of the paper. Figure 8 illustrates the results obtained under the FGM model, while Fig. 9 depicts the outcomes under the bivariate normal model. Similarly, Fig. 10 showcases the results for the nonlinear model.

A.2 Convex linear combination

The combination \(\hat{\lambda }_0 \text {dCorU}^2 + (1-\hat{\lambda }_0)\text {dCorV}^2\) can be negative. Figure 11 shows the estimates obtained with the new proposal for the convex linear combination; notably, all of them are positive, as in the original proposal. When \(\lambda _0\) is instead obtained by Monte Carlo simulation, the results are those shown in Fig. 12. In that case, the percentages of negative values are: FGM model, 36.9%; bivariate normal model, 35.9%; and nonlinear model, 38.3%. These percentages are lower than those obtained for the original estimator, \(\text {dCorU}^2\), and for the originally proposed convex combination.
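A small arithmetic sketch of why the squared-scale combination can be negative: when \(\text {dCorU}^2 < 0\), the combination \(\lambda \text {dCorU}^2 + (1-\lambda )\text {dCorV}^2\) is negative whenever \(\lambda |\text {dCorU}^2| > (1-\lambda )\text {dCorV}^2\). For instance, with \(\text {dCorU}^2 = -0.02\), \(\text {dCorV}^2 = 0.01\) and \(\lambda = 0.8\), the combination equals \(0.8(-0.02) + 0.2(0.01) = -0.014\). These values are made up for illustration and the helper names are hypothetical; the second function computes the share of negative combinations over replicates, as in the percentages reported above.

```python
import numpy as np


def combined_sq(dcor_u_sq, dcor_v_sq, lam):
    """lam * dCorU^2 + (1 - lam) * dCorV^2 on the squared scale."""
    return lam * dcor_u_sq + (1.0 - lam) * dcor_v_sq


def negative_share(u_sq, v_sq, lam):
    """Share of replicates in which the squared-scale combination is negative."""
    vals = combined_sq(np.asarray(u_sq, dtype=float), np.asarray(v_sq, dtype=float), lam)
    return float(np.mean(vals < 0))


print(combined_sq(-0.02, 0.01, 0.8))
```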

Estimations for the \(\hat{\lambda }\text {dCorU}^2 + (1-\hat{\lambda })\text {dCorV}^2\) with \(\hat{\lambda }\) obtained through Algorithm 1, using 5 bandwidth values (h = 0.02, 0.04, 0.08, 0.16, 0.32), for the three models (FGM, BN, NLM) under independence (\(\theta = 0, \rho = 0, k = 0\), respectively)

Figure 13 shows the MSE results for various bandwidth values (\(h = 0.0025, 0.005, 0.01, 0.02, 0.04, 0.08, 0.16, 0.32\)) across different models and levels of dependence. The comparison covers the three convex linear combinations: \(\text {dCor}_{\hat{\lambda }_0}\), \(\text {dCor(A)}_{\hat{\lambda }_0}\) and \(\text {dCor(T)}_{\hat{\lambda }_0}\).

Figure 14 presents the MSE results for each model using different estimators, including the original estimators (dCorU, dCorV), alternatives to dCorU (dCorU(A), dCorU(T)), and their convex linear combinations. Specifically, \(\text {dCor}_{\hat{\lambda }_0} = \hat{\lambda }_0\)dCorU + \(\left( 1 - \hat{\lambda }_0\right) \)dCorV with \(\hat{\lambda }_0\) estimated using 1000 bootstrap replications. Similarly, \(\text {dCor}_{\lambda _0} = \lambda _0\)dCorU + \((1 - \lambda _0)\)dCorV using the real optimal value of \(\lambda _0\) computed with 1000 Monte Carlo repetitions.

A.3 Numerical results

This appendix presents complementary results of the simulation study. The tables show the mean, bias, variance, and mean squared error (MSE) for each model with different parameters and sample sizes n.

For \(n = 100\), the proposed dCorU(A) and dCorU(T) estimators were also examined, and the results for the convex linear combinations are provided. These tables include the mean, bias, variance and MSE of each combination, along with the bootstrap estimate \(\hat{\lambda }_0\). Since the real \(\lambda _0\) can be obtained, the results for the combinations using it are also provided. This allows the estimators of the distance correlation \(\text {dCor}_{\lambda }\) based on the bootstrap estimate \(\hat{\lambda }_0\) to be compared with those based on the real value \(\lambda _0\).

For \(n = 1000\) and \(n = 10000\), only the results for the original estimators (dCorU, dCorV) are presented. For these sample sizes, negative values occur only under specific conditions, and the alternative estimators did not differ significantly from the original ones, so their results are omitted.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Monroy-Castillo, B.E., Jácome, M.A. & Cao, R. Improved distance correlation estimation. Appl Intell 55, 263 (2025). https://doi.org/10.1007/s10489-024-05940-x


DOI: https://doi.org/10.1007/s10489-024-05940-x