Abstract

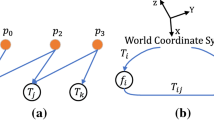

Because they reduce memory and computational overhead, sparse-based 6 degrees-of-freedom (6D) pose estimation methods estimate the pose of an object in an RGB image from a sparse set of two-dimensional (2D) to three-dimensional (3D) correspondences. However, such methods often suffer from degraded accuracy. In this paper, we propose a data field weighting based pixel-wise voting network (DFW-PVNet), which aims to improve the accuracy of 6D pose estimation while keeping memory and computational overheads low. DFW-PVNet first uses data field theory to assign potential weights to pixels at different positions. It then selects the pixels with higher potential weights to vote for and locate the 2D keypoints. The located 2D keypoints and the corresponding predefined 3D keypoints form accurate sparse 2D-3D correspondences, from which a perspective-n-point (PnP) solver calculates the 6D pose of the object. Experiments on the LINEMOD and Occlusion LINEMOD datasets show that the accuracy of the proposed method surpasses that of state-of-the-art sparse-based methods. It is also comparable to that of dense-based methods, with significantly lower memory and computational overheads.
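The abstract names the steps (potential weighting, pixel selection, keypoint voting) without giving formulas. The sketch below is a minimal NumPy illustration under stated assumptions, not the authors' implementation:

- (i) Each object-mask pixel receives the Gaussian-type data-field potential commonly used in data field theory, φ(x_i) = Σ_j m·exp(−(‖x_i − x_j‖/σ)²).
- (ii) A hypothetical rule keeps a fixed fraction of the highest-potential pixels.
- (iii) PVNet-style RANSAC voting intersects pairs of predicted direction rays and scores each hypothesis by the potential-weighted count of agreeing pixels.

The function names, σ, keep ratio, hypothesis count, and cosine threshold are all illustrative assumptions. The direction vectors are synthetic stand-ins for network output, and the PnP step is omitted.

```python
import numpy as np


def data_field_potential(coords, sigma=5.0, mass=1.0):
    """Potential of every pixel in the data field generated by all mask pixels.

    phi(x_i) = sum_j mass * exp(-(||x_i - x_j|| / sigma) ** 2)
    Gaussian-type potential with impact factor sigma (assumed form and values).
    """
    diff = coords[:, None, :] - coords[None, :, :]   # (N, N, 2) pairwise offsets
    dist2 = np.sum(diff ** 2, axis=-1)                # (N, N) squared distances
    return mass * np.exp(-dist2 / sigma ** 2).sum(axis=1)


def select_by_potential(coords, vectors, keep_ratio=0.5, sigma=5.0):
    """Keep the keep_ratio fraction of pixels with the highest potential (hypothetical rule)."""
    phi = data_field_potential(coords, sigma)
    k = max(2, int(round(keep_ratio * len(coords))))
    idx = np.argsort(phi)[::-1][:k]                   # indices of the k largest potentials
    return coords[idx], vectors[idx], phi[idx]


def intersect(p1, v1, p2, v2):
    """Intersection of the lines p1 + t*v1 and p2 + s*v2, or None if (near-)parallel."""
    a = np.stack([v1, -v2], axis=1)                   # columns v1, -v2: a @ [t, s] = p2 - p1
    if abs(np.linalg.det(a)) < 1e-8:
        return None
    t, _ = np.linalg.solve(a, p2 - p1)
    return p1 + t * v1


def vote_keypoint(coords, vectors, weights, n_hyp=64, cos_thresh=0.99, seed=0):
    """RANSAC-style voting: a hypothesis scores the summed weights of pixels pointing at it."""
    rng = np.random.default_rng(seed)
    best, best_score = None, -1.0
    for _ in range(n_hyp):
        i, j = rng.choice(len(coords), size=2, replace=False)
        h = intersect(coords[i], vectors[i], coords[j], vectors[j])
        if h is None:
            continue
        to_h = h - coords
        norm = np.linalg.norm(to_h, axis=1)
        cos = np.zeros(len(coords))
        ok = norm > 1e-6                              # skip pixels sitting on the hypothesis
        cos[ok] = np.sum(to_h[ok] * vectors[ok], axis=1) / norm[ok]
        score = weights[cos > cos_thresh].sum()       # potential-weighted inlier count
        if score > best_score:
            best, best_score = h, score
    return best


# Synthetic example: a disk-shaped object mask and one keypoint.
ys, xs = np.mgrid[0:60, 0:60]
mask = (xs - 30) ** 2 + (ys - 30) ** 2 <= 15 ** 2
coords = np.stack([xs[mask], ys[mask]], axis=1).astype(float)
kp_true = np.array([40.5, 25.5])

# Noisy unit vectors from each pixel toward the keypoint (stand-in for network output).
noise_rng = np.random.default_rng(1)
vectors = kp_true - coords
vectors /= np.linalg.norm(vectors, axis=1, keepdims=True)
vectors += noise_rng.normal(scale=0.02, size=vectors.shape)
vectors /= np.linalg.norm(vectors, axis=1, keepdims=True)

sel_coords, sel_vectors, sel_phi = select_by_potential(coords, vectors, keep_ratio=0.5)
kp_est = vote_keypoint(sel_coords, sel_vectors, sel_phi)
print(kp_est)  # expected to lie close to kp_true
```

With the Gaussian-type potential, pixels near the centre of the mask accumulate more potential than pixels on its boundary. The selection step therefore favours interior pixels, whose direction predictions are usually more reliable than those near occlusion boundaries. In the full pipeline, the located 2D keypoints would then be paired with the predefined 3D keypoints and passed to a PnP solver such as EPnP.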

Data availability

The datasets for this study can be found in the online repository [https://bop.felk.cvut.cz/datasets/].

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China (NSFC) under Grant No. 61772061.

Author information

Contributions

Yinning Lu proposed the initial research idea, conducted the experiments, and wrote the initial draft of the manuscript. Songwei Pei supervised the work and revised the manuscript.

Ethics declarations

Ethics approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Competing interests

The authors declare that there is no conflict of interest regarding the publication of this paper.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Lu, Y., Pei, S. DFW-PVNet: data field weighting based pixel-wise voting network for effective 6D pose estimation. Appl Intell 55, 240 (2025). https://doi.org/10.1007/s10489-024-05942-9

DOI: https://doi.org/10.1007/s10489-024-05942-9