Abstract

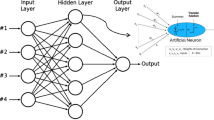

Remaining useful life predictions depend on the quality of health indicators (HIs) generated from condition monitoring sensors, which are evaluated by predefined prognostic metrics such as monotonicity, prognosability, and trendability. Constructing these HIs requires effective models capable of automatically selecting and fusing features from pertinent measurements, given the inherent noise in sensory data. While deep learning approaches can extract features automatically without significant specialist knowledge, these features lack a clear (physical) interpretation. Furthermore, the evaluation metrics for HIs are nondifferentiable, which limits the application of supervised networks. This research aims to develop an intrinsically interpretable artificial neural network (ANN) that produces high-quality HIs with significantly lower complexity. A semi-supervised paradigm is employed, simulating labels inspired by the physics of progressive damage. This approach implicitly incorporates the nondifferentiable criteria into the learning process. The architecture comprises additive and newly modified multiplicative layers that combine features to better represent the system’s characteristics. The developed multiplicative neurons are not restricted to pairwise interactions, and they can handle both division and multiplication. To extract a compact HI equation, making the model mathematically interpretable, the number of parameters is further reduced by discretizing the weights to a ternary set. This weight discretization simplifies the extracted equation while gently controlling the number of weights that are ignored. The developed methodology is specifically tailored to construct interpretable HIs for commercial turbofan engines, and the results show that the generated HIs are of high quality and interpretable.
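To make the idea of a multiplicative neuron with ternary weights more concrete, the following is a minimal sketch and not the authors' implementation. It assumes the ternary set is {-1, 0, +1}, that a weight of +1 multiplies by a feature, -1 divides by it, and 0 ignores it, and that discretization is a simple magnitude threshold. The function names, the `threshold` value, and the sensor names `s1`, `s2`, `s3` are hypothetical.

```python
def ternarize(weights, threshold=0.5):
    """Map each real-valued weight to -1, 0, or +1.

    The fixed magnitude threshold is an assumption for illustration only.
    """
    return [0 if abs(w) < threshold else (1 if w > 0 else -1) for w in weights]


def product_unit(features, ternary_weights):
    """Multiplicative neuron: product over i of x_i ** w_i, w_i in {-1, 0, +1}.

    Features paired with a weight of -1 must be nonzero, since they are divided by.
    """
    out = 1.0
    for x, w in zip(features, ternary_weights):
        out *= x ** w
    return out


def to_equation(names, ternary_weights):
    """Render the neuron as a readable fraction of feature names."""
    num = [n for n, w in zip(names, ternary_weights) if w == 1]
    den = [n for n, w in zip(names, ternary_weights) if w == -1]
    top = " * ".join(num) if num else "1"
    return top if not den else f"({top}) / ({' * '.join(den)})"


names = ["s1", "s2", "s3"]            # hypothetical sensor features
raw_weights = [0.9, -0.7, 0.1]        # hypothetical trained real-valued weights
w = ternarize(raw_weights)            # [1, -1, 0]
hi = product_unit([4.0, 2.0, 5.0], w) # 4.0 / 2.0 = 2.0
eq = to_equation(names, w)            # "(s1) / (s2)"
print(w, hi, eq)
```

In this sketch the zero weight drops `s3` from the expression entirely, which is how ternary weights can shorten the extracted equation; a single neuron can also combine more than two features, with any mix of multiplication and division.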

Graphical abstract

Data Availability

The dataset used in this study is publicly available.

Acknowledgements

This project has received funding from the European Union’s Horizon 2020 research and innovation programme under the Marie Sklodowska-Curie grant agreement No 859957 “ENHAnCE, European training Network in intelligent prognostics and Health mAnagement in Composite structurEs”.

Author information

Contributions

Morteza Moradi: Conceptualization, Methodology, Software, Validation, Data curation, Formal analysis, Investigation, Visualization, Writing - Original Draft, Writing - Review & Editing. Panagiotis Komninos: Methodology, Software, Formal analysis, Investigation, Data curation, Writing - Review & Editing. Dimitrios Zarouchas: Writing - Review & Editing, Supervision, Funding acquisition.

Ethics declarations

Competing interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

About this article

Cite this article

Moradi, M., Komninos, P. & Zarouchas, D. Constructing explainable health indicators for aircraft engines by developing an interpretable neural network with discretized weights. Appl Intell 55, 143 (2025). https://doi.org/10.1007/s10489-024-05981-2

DOI: https://doi.org/10.1007/s10489-024-05981-2