Abstract

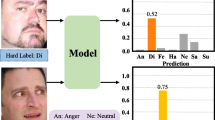

Facial expression recognition (FER) is a significant challenge in computer vision with numerous applications. However, existing FER methods lack generalization ability and robustness when dealing with complex datasets that contain noisy labels. We propose a label distribution learning model, RA-ARNet, with novel reliability-aware (RA) and attention-rectified (AR) modules to handle noisy labels. Specifically, the RA module evaluates the reliability of an image’s neighboring instances in the valence-arousal space and, based on this evaluation, constructs a corresponding label distribution as auxiliary supervision, enhancing the model’s robustness and generalization on various FER datasets with noisy labels. The AR module gradually improves the model’s ability to extract attention features of facial landmarks by introducing consistency detection between the attention feature maps of images and landmarks during training, thereby improving the model’s FER accuracy. Experimental results on public datasets validate the effectiveness of the proposed method, which is competitive with current state-of-the-art methods. RA-ARNet reaches a classification performance of 91.36% on RAF-DB and 61.47% on AffectNet (8 classes) and shows potential for handling occluded images.
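To make the idea behind the RA module more concrete, the following is a minimal sketch of how a label distribution might be built from an image's neighbors in the valence-arousal space. It is not the authors' implementation: the function name `neighbor_label_distribution`, the Gaussian reliability score, and the parameters `k`, `sigma`, and `self_weight` are all assumptions introduced here for illustration.

```python
import math


def neighbor_label_distribution(anchor_va, anchor_label, neighbors,
                                num_classes, k=3, sigma=0.5, self_weight=0.5):
    """Build a soft label distribution for one sample (illustrative only).

    anchor_va:    (valence, arousal) of the sample.
    anchor_label: the sample's possibly noisy class index.
    neighbors:    list of ((valence, arousal), label) pairs.
    """
    # Keep the k nearest neighbors by Euclidean distance in the VA space.
    nearest = sorted(
        (math.dist(anchor_va, va), label) for va, label in neighbors
    )[:k]

    # Assumed reliability score: a Gaussian kernel of the distance,
    # so closer neighbors count as more reliable.
    scored = [(math.exp(-d * d / (2 * sigma ** 2)), label)
              for d, label in nearest]
    total = sum(w for w, _ in scored)

    # Start from the sample's own label, then spread the remaining
    # probability mass over the neighbors' labels.
    dist = [0.0] * num_classes
    dist[anchor_label] += self_weight
    if total > 0:
        for w, label in scored:
            dist[label] += (1 - self_weight) * w / total
    else:
        # No usable neighbors: fall back to a one-hot distribution.
        dist[anchor_label] += 1 - self_weight
    return dist
```

In a training loop, a distribution like this could serve as an auxiliary target alongside the original label, for example through a KL-divergence term; how RA-ARNet actually combines the two is not stated in the abstract.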

Data availability and access

The dataset and the source code generated during this study are available on request from the corresponding author.

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grant (61902225), the Joint Funds of Natural Science Foundation of Shandong Province under Grant (ZR2021LZL011), and the Fundamental Research Funds for the Central Universities (2022ZYGXZR020).

Author information

Contributions

Liyuan Peng: data curation, conceptualization, design, implementation, and draft writing; Yanbing Liu: visualization and validation; Yuyun Wei: validation; Jia Cui: editing and supervision; Meng Qi: formal analysis, resources, writing-review, and supervision.

Ethics declarations

Ethical and informed consent for data used

The data supporting this study’s findings are available in RAF-DB and AffectNet datasets.

Competing interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Peng, L., Liu, Y., Wei, Y. et al. Reliability-aware label distribution learning with attention-rectified for facial expression recognition. Appl Intell 55, 18 (2025). https://doi.org/10.1007/s10489-024-05999-6

DOI: https://doi.org/10.1007/s10489-024-05999-6