Abstract

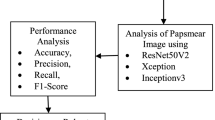

Cervical cancer is the fourth most prevalent cancer among women worldwide and a major contributor to cancer-related mortality in women. Manual classification of cytopathology screening slides remains one of the most important and widely used methods for diagnosing cervical cancer. However, this method depends on medical experts and is highly labor-intensive, so prompt diagnosis is difficult in regions with limited medical resources. To address this issue, this work proposes BiNext-Cervix, a deep learning framework for rapid and accurate diagnosis of cervical cancer from Pap smear images. BiNext-Cervix applies Tokenlearner in the initial stage to facilitate interaction between pixels within the image, which helps the subsequent network interpret the image features. It then integrates the recently introduced ConvNext and BiFormer models to extract image information from both local and global perspectives. A fully connected layer fuses the extracted features and performs the classification. The experimental results show that combining ConvNext and BiFormer achieves higher accuracy than using either model individually, and that BiNext-Cervix outperforms other commonly used deep learning models.
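The fusion step described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation. It assumes that fusion means concatenating the two branch outputs before a single fully connected layer, and it uses random vectors as stand-ins for the ConvNext and BiFormer features. The feature sizes, the class count, the weights, and all function names are hypothetical.

```python
import math
import random


def linear(x, weights, bias):
    """Apply a fully connected layer: y = W x + b."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def softmax(logits):
    """Convert logits to class probabilities in a numerically stable way."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_and_classify(local_feats, global_feats, weights, bias):
    """Concatenate the two branch outputs, then classify with one linear layer."""
    fused = list(local_feats) + list(global_feats)
    return softmax(linear(fused, weights, bias))


# Hypothetical sizes: 4 local features, 4 global features, 5 classes.
rng = random.Random(0)
local_feats = [rng.uniform(-1, 1) for _ in range(4)]   # stand-in for the ConvNext branch
global_feats = [rng.uniform(-1, 1) for _ in range(4)]  # stand-in for the BiFormer branch
num_classes = 5
weights = [[rng.uniform(-0.5, 0.5) for _ in range(8)] for _ in range(num_classes)]
bias = [0.0] * num_classes

probs = fuse_and_classify(local_feats, global_feats, weights, bias)
predicted_class = max(range(num_classes), key=probs.__getitem__)
```

In a trained model the weights would be learned jointly with both branches. Concatenation is only one plausible reading of "fuse", and the abstract does not specify the exact operation.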

Data Availability and Access

All datasets used in this study are derived from publicly available sources.

Acknowledgements

This work was supported by JST SPRING, Grant Number JPMJSP2135.

Author information

Contributions

Minhui Dong: Conceptualization, Methodology, Software, Writing - Original draft. Yu Wang: Data curation, Validation. ZeYu Zhang: Data curation, Validation. Yuki Todo: Supervision, Writing - Reviewing and Editing.

Ethics declarations

Ethical and informed consent

The study was conducted in accordance with the ethical standards of the institutional and national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards. Informed consent was obtained from all individual participants included in the study.

Competing Interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

About this article

Cite this article

Dong, M., Wang, Y., Zang, Z. et al. BiNext-Cervix: A novel hybrid model combining BiFormer and ConvNext for Pap smear classification. Appl Intell 55, 144 (2025). https://doi.org/10.1007/s10489-024-06025-5
