Abstract

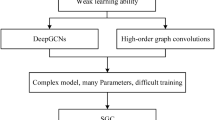

Graph convolutional neural networks (GCNs) are deep learning methods for processing graph-structured data. GCNs typically model only pairwise connections and ignore higher-order interactions between nodes. Simplices have recently been shown to encode both pairwise relations and higher-order interactions between nodes, which has motivated work on simplicial convolutional neural networks. Existing simplicial neural networks perform well on tasks such as missing value imputation, graph classification, and node classification. However, because of vanishing gradients, over-smoothing, and over-fitting, they are typically limited to very shallow models. We therefore propose DeepSCNN, a simplicial convolutional neural network for deep learning. First, a simplicial edge sampling (SES) technique is introduced to prevent the over-fitting caused by adding network layers. Second, initial residual connections are added to the simplicial convolutional layers. To validate DeepSCNN, we conduct missing data imputation and node classification experiments on citation networks and compare its performance with that of simplicial neural networks (SNN) and simplicial convolutional networks (SCNN). The results show that DeepSCNN outperforms both SNN and SCNN.
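The abstract names the two ingredients but does not give their formulas, so the following is only a minimal sketch under stated assumptions, not the authors' implementation. It assumes that edge sampling keeps each edge independently with a fixed probability during training, and that an initial residual connection mixes the propagated features with the input features through a hypothetical weight `alpha`. The names `sample_edges`, `initial_residual_layer`, `keep_prob`, and `alpha` are illustrative and do not come from the paper.

```python
import random


def sample_edges(edges, keep_prob, rng):
    """Keep each edge independently with probability keep_prob.

    Assumption: this random-drop scheme stands in for simplicial edge
    sampling (SES); the actual SES rule is not given in the abstract.
    """
    return [edge for edge in edges if rng.random() < keep_prob]


def matmul(a, b):
    """Multiply two dense matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def initial_residual_layer(op, h, h0, w, alpha):
    """Compute ReLU(((1 - alpha) * op @ h + alpha * h0) @ w).

    op    : propagation operator (for example a normalized Laplacian)
    h     : features entering this layer
    h0    : features of the first layer (the "initial" residual)
    w     : layer weight matrix
    alpha : assumed mixing weight between propagated and initial features
    """
    propagated = matmul(op, h)
    mixed = [[(1 - alpha) * p + alpha * z for p, z in zip(p_row, z_row)]
             for p_row, z_row in zip(propagated, h0)]
    return [[max(0.0, v) for v in row] for row in matmul(mixed, w)]


if __name__ == "__main__":
    rng = random.Random(0)
    edges = [(0, 1), (1, 2), (0, 2), (2, 3)]
    kept = sample_edges(edges, keep_prob=0.8, rng=rng)
    print("edges kept this epoch:", kept)
```

Under these assumptions, resampling the edges at every training epoch changes the operator passed to each layer, while the `alpha * h0` term keeps every layer tied to the input features however deep the stack becomes.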

Data availability and access

The data that support the findings of this study are available on request from the author Chunyang Tang. The data are not publicly available because they contain information that could compromise research participant privacy and consent.

Acknowledgements

This work was supported by the National Key Research and Development Program of China (No. 2020YFC1523300) and the Innovation Platform Construction Project of Qinghai Province (2022-ZJ-T02).

Author information

Contributions

All authors contributed to the study conception and design. Material preparation, data collection, revision and analysis were performed by Chunyang Tang, Zhonglin Ye, Haixing Zhao, Libing Bai, and Jinjin Lin. All authors read and approved the final manuscript.

Ethics declarations

Competing Interests

The authors declare that they have no conflict of interest.

Ethical and informed consent for data used

We adhere to ethical principles when using data. We verbally explained the process of data collection and use to all individuals involved in the study and obtained their informed consent.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Tang, C., Ye, Z., Zhao, H. et al. DeepSCNN: a simplicial convolutional neural network for deep learning. Appl Intell 55, 281 (2025). https://doi.org/10.1007/s10489-024-06121-6

DOI: https://doi.org/10.1007/s10489-024-06121-6