Abstract

With the widespread application of time series data, the study of classification techniques has become an important topic. Although existing multivariate time series classification (MTSC) methods have made progress, they often rely on one-dimensional (1D) time series, which limits their ability to capture complex temporal dynamics and multiscale features. To address these challenges, a Spectral Convolutional Network (SCNet) is introduced in this work. SCNet effectively transforms 1D time series data into the frequency domain using an enhanced Discrete Fourier Transform (enhanced_DFT), revealing periodicity and key frequency components while reshaping the data into a two-dimensional (2D) time series for better representation. Furthermore, it uses a Spectral Energy Prioritization method to optimize frequency domain energy distribution and a multiscale convolutional module to capture features at different scales, improving the model’s ability to analyze short-term and long-term trends. To validate the effectiveness and superiority, we conducted extensive experiments on 10 sub-datasets from the well-known UEA dataset. The results show that our proposed SCNet achieved the highest average accuracy of 74.3%, which is 2.2% higher than the current state-of-the-art models, demonstrating its potential for practical application and efficiency in MTSC task.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Driven by the accelerated advancements in information technology, a large amount of time series data is being widely generated and applied across various fields, such as financial market analysis [1, 2], healthcare monitoring [3, 4], and industrial process control [5,6,7]. This data typically consists of a series of points recorded in chronological order, reflecting the dynamic changes of various natural or social phenomena and holding irreplaceable value due to its rich information. Based on this, multivariate time series (MTS) data comprises multiple related or independent time series recorded simultaneously, with each series capturing the temporal changes of a specific variable. The inherent complex correlations and rich information make MTSC a challenging task, particularly in terms of accurately capturing and leveraging the dynamic relationships between these series.

In recent years, the rapid development of artificial intelligence technologies has led to significant progress across various domains [8, 9]. MTSC research has also benefited, giving rise to three main types of models: Transformer-based models, self-supervised learning models, and other deep learning models.

Transformer-based models utilize self-attention mechanisms to effectively handle time series data [10, 11].

Self-supervised learning approaches extract features from time series data through innovative learning strategies, addressing the issue of limited labeled data [12, 13].

Deep learning models’ powerful representation and automatic feature learning capabilities have led to many methods aimed at abstracting representations from time series data [14,15,16,17,18].

Although these models have shown significant advantages in MTSC task, they generally struggle with frequency domain features. Moreover, the extracted features are not comprehensive enough, which are alwants based on the original 1D data.

To address these limitations, recent approaches have focused on transforming 1D time series data into 2D representations for more effective feature extraction. Methods such as the Relative Position Matrix (RPM) [19], Gramian Angular Field (GAF) [20], Markov Transition Field (MTF) [21], Recurrence Plot (RP) [22], and Motif Difference Field (MDF) [23] have been proposed to convert time series into 2D formats, capturing key temporal relationships and improving classification accuracy.

While these methods show good performance in transforming 1D time series into 2D formats, they may struggle to capture periodicity and fully reflect the underlying spectral characteristics of the time series. Many of these methods rely on simple transformations or fixed representations, which may not fully adapt to the complexity of the data, especially in MTSC task, and their efficiency tends to be relatively low.

To overcome the limitations of current methods in MTSC task, this paper introduces SCNet, an innovative model designed to handle the frequency domain properties and multiscale characteristics of MTS data.

SCNet uses advanced time-frequency transformation techniques, including an enhanced_DFT. Subsequent normalization enhances sensitivity to critical frequencies. This 1D-to-2D transformation is not only simpler but also more adaptive, providing a richer representation of the data. Additionally, SCNet employs a multiscale convolutional structure to capture features across different scales, from short-term fluctuations to long-term trends. This integration significantly improves the accuracy and efficiency of MTSC, addressing traditional models’ deficiencies in processing frequency domain features. The main contributions of this work are summarized as follows:

-

(1)

A novel network architecture is proposed, combining time-spectral processing and multiscale convolution techniques. This architecture is specifically designed to enhance the recognition of periodic and frequency features in MTS data. Additionally, the flexibility and accuracy in handling MTSC tasks are effectively improved through a multi-level feature extraction strategy.

-

(2)

Advanced time-frequency processing technique is em-ployed to transform MTS data from the time domain to the frequency domain, which is then converted into a 2D format. A 2D image processing method is introduced for feature extraction, after which the data is converted back to 1D. This process reveals the periodicity and primary frequency components of the data, thereby providing multi-perspective features.

-

(3)

A multiscale convolutional module is implemented to process MTS features across various scales. This module captures both short-term fluctuations and long-term trends, facilitating a comprehensive analysis of dynamic changes in MTS data and enhancing the model’s capability to manage its features.

The remainder of this paper is organized as follows. Section 2 introduces related work, Section 3 provides a detailed description of the proposed SCNet model and its various components, Section 4 presents the experimental setup and results, and Section 5 concludes the paper.

2 Related work

This section briefly reviews the latest advancements in MTSC task.

2.1 Problem definition

Definition 1

(MTS) An MTS is a sequence of real-valued vectors \( X \in \mathbb {R}^{T \times M} \), where \( X = \{x_1, x_2, \ldots , x_T\} \). Each \( x_t \in \mathbb {R}^{M} \) represents the observations at time \( t \), with \( M \) being the number of features and \( T \) the length of the time series.

Definition 2

(MTSC) MTSC is the process of mapping an MTS dataset \( X = \{X_1, X_2, \ldots , X_N\} \in \mathbb {R}^{N \times T \times M} \) to a predefined set of labels \( Y = \{y_1, y_2, \ldots , y_N\} \). Here, \( N \) represents the number of samples. The classification task \( f: X \rightarrow Y \) aims to predict the class label for each MTS by learning a model that can identify and utilize underlying patterns in MTS data.

2.2 Multivariate time series classification

Methods for MTSC are generally categorized into three major types: distance-based methods, feature-based methods, and deep learning-based methods.

To begin with, distance-based methods primarily classify MTS by evaluating the similarity between them. The core of these methods lies in defining an appropriate distance measure, such as Euclidean distance, which is suitable for direct comparison between sequences of the same dimension. More complex algorithms like Dynamic Time Warping (DTW) [24] allow for nonlinear mapping between MTS, enabling the comparison of sequences of different lengths. It is particularly useful in fields where time series may need flexible alignment due to varying durations. Additionally, an improved method, as proposed in a recent study [25], uses DTW barycenter averaging to effectively handle positive and unlabeled data, enhancing classification accuracy in MTSC task.

In addition, feature-based methods focus on extracting key features from time series using signal processing and data transformation techniques. For example, Wavelet Transform [26] can reveal local variations in time series at different scales, while Symbolic Aggregate approXimation (SAX) [27] transforms time series into discrete symbols, simplifying the data structure. Moreover, innovative methods such as ensemble learners with shapelet [28] representations allow for more precise capturing of distinguishing patterns and relational information in MTS.

lastly, deep learning-based methods leverage complex network architectures to automatically learn from data. These methods are particularly well-suited for handling large-scale and high-dimensional time series data. The MLSTM-FCN [29] is a notable model, combining Long Short-Term Memory (LSTM) and stacked CNN layer to effectively capture long-term dependencies and spatial features. TapNet [17] optimizes the feature extraction process through its unique random grouping alignment method and deep convolutional networks, making it especially suitable for datasets with scarce labels. ShapeNet [30] employs deep learning techniques, combining shapelets with Dilated Causal Convolutional Neural Network (Dilated Causal CNN) to tackle MTSC task. It extracts key features by applying shapelets to time series data of varying lengths and dimensions, and enhances the processing of these features using dilated causal networks.

These methods perform efficient classification, although most of them are based on 1D processing, which presents challenges when dealing with MTS data.

Due to the limitations of 1D time series data in capturing high- dimensional features, there has been increasing interest in transforming 1D time series into 2D representations to improve feature extraction for MTSC tasks. Notable methods include the RPMCNN [19], which uses a RPM to convert 1D time series into 2D images, capturing temporal relationships with CNNs. Similarly, GAF [20] and MTF [21] utilize angular transformations to encode time series data into 2D representations, enhancing feature extraction. Other approaches, like RP [22], visualize periodicity in the phase space of time series, while MDF [23] focuses on motifs and their differences, capturing long-range patterns by comparing the relative amplitudes of adjacent values. These methods transform time series into 2D formats, providing richer representations for MTSC task.

However, while these 2D transformation techniques have demonstrated effectiveness in many cases, they face certain limitations. One key challenge is their inability to fully capture the frequency domain properties and multi-scale features of the data. Many of these methods rely on simple transformations or fixed representations that do not adapt well to the complex and dynamic nature of multivariate time series. For example, methods like GAF and MTF may struggle with accurately reflecting underlying spectral characteristics, while RP and MDF, despite providing rich feature representations, often lack the flexibility to capture long-range dependencies and multi-dimensional interactions in data. Moreover, these approaches typically do not scale well with high-dimensional multivariate time series, which are common in real-world MTSC task. Consequently, while these methods enhance feature extraction, they still face challenges in fully leveraging the richness and complexity of MTS data.

2.3 Multiscale and spectral methods in time series classification

Multiscale convolutional structures and spectral analysis techniques are widely applied across various fields. In medical image analysis, they help in disease identification by analyzing images at different scales [31,32,33]. In audio processing, these techniques facilitate sound recognition and music classification through frequency analysis [34, 35]. They are also used in industrial applications for predictive maintenance by analyzing vibrational signals [36,37,38]. Recently, these techniques have been applied to MTSC task.

Spectral Temporal Graph Neural Network (STGNN) [39] combines graph convolutional networks and frequency domain analysis, effectively capturing complex spatiotemporal relationships in time series data by learning the topological structure of the series at different frequency levels. Alongside STGNN, the PCA-LSTM [40] approach uses principal component analysis and LSTM networks to integrate and exploit multi-temporal and spectral information from time series spectral imaging datasets. Additionally, multiscale convolutional models like InceptionTime [15] operate on multiple scales by designing convolutional kernels of varying sizes, allowing for the simultaneous capture of short-term and long-term dependencies. Meanwhile, TimesNet [18] employs Fast Fourier Transform (FFT) to capture periodic changes in the data, enhancing the understanding of temporal-frequency features. It is suitable for tasks such as classification, prediction, and anomaly detection.

Although multiscale convolutional structures and spectral analysis methods have shown great potential in MTSC task [41,42,43], most models always employ these techniques independently rather than integrating them effectively. For instance, STGNN and InceptionTime primarily focus on feature extraction in the spectral or temporal domains, limiting their effectiveness in handling complex data patterns. While TimesNet utilizes spectral information to capture periodic changes, it fails to deeply integrate this with multiscale convolution, which may result in a limited analytical perspective and omission of features.

3 The proposed method

In this section, we introduce the architecture of SCNet at first. Subsequently, we detail the time-spectral processing module (SpecProcessBlock) and the multiscale convolution module (ConvScaleBlock). Finally, we present the classification processing module within SCNet, and we describe and analyze the loss function.

3.1 Overview of SCNet

As shown in Fig. 1, the workflow of SCNet involves converting 1D variations into 2D variations, variations image processing techniques for feature extraction to obtain 2D feature representations. The data is then converted back to 1D, where multi-scale convolutions are applied to further extract prominent features, resulting in more comprehensive and multi-perspective features.

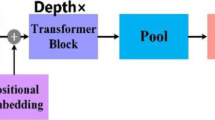

Building on this workflow, the core of the SCNet model, illustrated in Fig. 2, consists of three main components: the time-spectral processing module (SpecProcessBlock), the multiscale convolution module (ConvScaleBlock), and the classification processing module. These components work in synergy to form a powerful network capable of handling MTSC task.

First, the SpecProcessBlock is responsible for transforming the MTS data from the time domain to the spectral domain. By performing an enhanced_DFT, the module not only captures the fundamental periodicity and primary frequency components but also enhances the sensitivity to key frequencies through subsequent normalization processes. This transformation is crucial as it allows to utilize information in the spectral domain, which cannot be directly recognized in the original time-domain signal.

Next, the ConvScaleBlock further processes these spectral features. By using convolutional kernels of different sizes, this module captures features of MTS across multiple scales, ranging from fine-grained short-term fluctuations to broader long-term trends. Each layer is designed to optimize feature extraction, ensuring an understanding of the dynamic changes in the data across various time scales.

Finally, the integrated classification processing module makes the final decision based on the output from the ConvScaleBlock. This module processes the extracted features through a series of fully connected layers and activation functions, such as GELU, mapping them to predefined categories. The design of the classification module takes into account the prevention of overfitting and the enhancement of the generalization capability, ensuring robust classification performance on unseen data.

3.2 SpecProcessBlock

3.2.1 Conversion of 1D variations to 2D variations

This section aims to explain the transformation of 1D MTS into a 2D format, achieved through the collaborative use of enhanced_DFT and Spectral Energy Prioritization. These steps ensure critical frequency components are highlighted, thereby facilitating effective feature extraction and subsequent model learning. We explain their roles and implementation in the transformation process as follows:

Enhanced_DFT

The SpecProcessBlock processes the 1D data using an enhanced_DFT. This conversion is executed through a Real Fast Fourier Transform (rFFT) to shift the MTS from the time domain to the spectral domain.

The calculation process is as follows:

Transformation using rFFT: apply the rFFT to the 1D time series \(X_{1D}\). This process reveals the periodic characteristics and primary frequency components of the series. Additionally, the results are normalized to ensure that the output is independent of the input sequence length, which is particularly important when handling time series data of varying lengths. Here, T represents the length of the input time series.

Processing of frequency data: after performing rFFT on the MTS data, further process the frequency domain data to enhance its effectiveness in model analysis. Therefore, compute statistical features of the frequency domain data, including the mean and standard deviation.

Let \(|X_{freq,i}|\) denote the magnitude of the i-th element of \(X_{freq}\), where n is the total number of elements in \(X_{freq}\). The mean \(\mu \) and standard deviation \(\sigma \) are calculated as follows:

Normalization: normalized \(X_{freq}\). This processed frequency domain data provides a standardized feature scale, helping the model effectively extract useful information without being interfered with by outliers or extreme values in the raw amplitudes. The standardized data directly influences the subsequent weighted aggregation, thereby affecting the final model performance.

Spectral Energy Prioritization

To highlight the most representative frequency components, the module applies a Spectral Energy Prioritization strategy after obtaining spectral data through rFFT. This approach uses raw frequency amplitudes before normalization to preserve the original proportions, ensuring accurate identification of the most impactful frequencies on time series variations. The core idea of this method is to calculate the energy of each frequency component, then sort them by energy magnitude, and select the k components with the highest energy (\(top_k\)). This selection focuses on the frequencies most influential in the time series variations, thereby enhancing the accuracy and efficiency of subsequent processing steps.

For frequency domain data \(X_{\textrm{freq}}\) obtained through the rFFT, calculate the energy of each frequency, where \(\omega \) represents the frequency:

Next, select the \(top_k\) largest frequency components \(\omega _{top_k}\) from the calculated energies:

Once the \(top_k\) frequencies are determined, the model can utilize these frequencies for further learning. The steps are as follows:

Selection of Frequencies: a set of key frequencies, \(\omega _1,\) \( \ldots , \omega _k\), is chosen based on the characteristics of the data. These frequencies are used to extract periodic information from the original 1D time series, aiding the model in capturing periodic variations within the data.

Determination of Period Length: for each key frequency \(\omega _i\), the corresponding period length \(L_i\) is calculated. Here, \(i \in \{1, \ldots , k\}\), T represents the length of the time series:

Construction of 2D Tensors: based on the period length \(L_i\) and key frequency \(\omega _i\), the original 1D time series \(X_{1D}\) is reshaped into 2D shape \(X_i^{2D}\). This involves padding the original data to fit the shape of the 2D tensors and reshaping according to the period length.

Through the above steps, the 1D MTS is effectively transformed into 2D shape that contain rich information. These tensors provide a foundation for further data analysis.

In the processed 2D tensors, each column and each row respectively reveal two key types of variation in the MTS data. One is the intraperiod variation, where each column represents the values at the same time point across different periods. This organization enables the model to identify and learn patterns of variation at the same time point in different periods. Another is the interperiod variation, shown in each row, which displays the values at different time points within the same period. This allows the model to analyze and understand the trend of changes over the course of a period.

3.2.2 Extraction using ConvNeXt Block

After converting 1D MTS into 2D format, the SpecProcessBlock employs the ConvNeXt Block to further extract and refine features. Specifically, the process begins with a ConvNeXt Block layer that expands the input channel number to an intermediate layer channel number, enhancing the feature representation capabilities. This is followed by the application of the GELU activation function, which further improves feature processing capabilities. Finally, another ConvNeXt Block layer compresses the channel number from the intermediate layer back to the original dimension, completing the integration and refinement of information. This architecture design is inspired by the bottleneck structure commonly found in modern deep learning networks, aiming to optimize learning efficiency and representational capacity without significantly increasing computational costs.

Actually, ConvNeXt is a network architecture that employs depthwise separable convolution techniques, optimizing the computational efficiency and the number of parameters compared to traditional convolution operations. This architecture utilizes two types of convolution operations: depthwise convolution, which handles the spatial relationships in the input feature maps, and pointwise convolution, which addresses the inter-channel relationships. Its design is particularly useful for handling 2D data, making it an excellent choice for enhancing 2D feature extraction in our model.

Depthwise convolution operations use separate convolutional kernels to process each channel of the input independently. This differs from traditional convolutions, which use convolutional kernels across all channels. Depthwise convolution primarily extracts spatial features without increasing the complexity of inter-channel interactions. After completing depthwise convolution, pointwise convolution uses \(1 \times 1\) convolutional kernels to process the results. The main function of pointwise convolution is to transform the channel dimension while keeping the spatial dimensions of the feature maps unchanged. Let \(X_{l,i}^{2D}\) denote the 2D features output from the i-th processing block of a certain layer l, where i ranges from 1 to k:

Subsequently, Adaptive Average Pooling 2D (AAP2D) is applied to adjust the dimensions, ensuring that the output dimension aligns with the target length. Finally, the feature maps are reshaped into a 1D form to facilitate subsequent analysis and learning processes. Let \(X_{l,i}^{2D}\) denote the 2D features output from the i-th processing block of layer l, where i ranges from 1 to k. The process can be expressed as follows:

At the end of the SpecProcessBlock, we implement an adaptive aggregation mechanism. The energy E obtained from Spectral Energy Prioritization is used to reflect the relative importance of the selected frequencies and periods. Therefore, this process first evaluates the importance of each frequency component using the Softmax function and normalizes these scores. Based on these weights, the module calculates the weighted sum of all features, thus integrating information from various processing stages. This method allows the model to dynamically adjust the influence of each feature, optimizing the understanding and processing of MTS. The equation is as follows:

Finally, each SpecProcessBlock is connected via residual connections. It takes a 1D feature representation \(X_{l-1}^{1D}\) from the previous layer, processes it to generate \(\text {SpecProcessBlock}\) \((X_{l-1}^{1D})\), and then adds the processed features to the original input to form the output of the current layer \(X_l^{1D}\):

The structure allows to retain the original input information while combining it with the transformed features, thereby enhancing representational capacity.

3.3 ConvScaleBlock

The ConvScaleBlock is positioned at the end of a series of SpecProcessBlocks that uses residual connections. The module includes several parallel convolutional layers, each equipped with kernels of different sizes, allowing for the effective identification and extraction of data features ranging from fine-grained, rapid fluctuations to broader, long-term trends.

Each convolutional layer uses advanced dynamic masking techniques to precisely control the active regions of the convolutional kernels, ensuring each operates only within its designated area. This involves generating specific masks for each layer, which define where the kernels should function. These masks are determined by the kernel size and a predefined maximum kernel length, ensuring only selected data regions are processed, thereby reducing unnecessary computation and minimizing noise interference. This multi-level, multi-scale approach enhances the model’s interpretability of MTS data and improves classification accuracy.

This approach not only significantly reduces computational load, especially with large convolutional kernels where the savings are most noticeable, but also enhances processing flexibility and efficiency. Smaller kernels capture details and short-term variations, while larger kernels identify long-term patterns. This hierarchical structure enables the module to adapt to features across different time scales without excessive computational overhead, playing a crucial role in analyzing complex MTS data.

The application of the ConvScaleBlock is as follows. This step is applied after the last SpecProcessBlock for further feature extraction, \(spec\_out\) is the index of the last SpecProcessBlock:

Here, \(X_{conv}^{1D}\) is the feature output after ConvScaleBlock, which will be used for the next stage of the model’s processing.

At this point, through ConvNeXt Block and ConvScaleBlock, the model has obtained sufficient and comprehensive feature representations from both 2D and 1D format data, providing deeper data support for subsequent analysis.

3.4 Classification processing module

The output from the ConvScaleBlock is initially subjected to layer normalization. Layer normalization acts on each feature of each sample, independently adjusting the statistical properties of each feature to accelerate training and improve the model’s generalization performance. The formula for layer normalization is as follows, where \(X_{conv}^{1D}\) is the output from the multiscale convolution module, and \(X_{ln}\) is the normalized feature representation:

Subsequently, GELU activation function is applied to introduce non-linearity, enhancing the ability to handle complex patterns. The activated output is then fed into a fully connected layer, which maps the normalized features to the final classification output. This linear layer effectively transforms the processed features into predicted categories, where \(y'\) represents the final output:

This process not only optimizes the representation of information extracted from the MTS data but also ensures that the model can make accurate classification decisions based on these features.

3.5 Loss function

To optimize classification performance, the cross-entropy loss function is used to compute the difference between the input logits and the target labels [43,44,45]. The computation of the cross-entropy loss can be broken down into three steps as follows:

Softmax Function

For each sample, the Softmax function is applied to the predicted logits \( y'_{i,j} \) to obtain the predicted probabilities. The Softmax function for the j-th class of the i-th sample is defined as:

Negative Log-Likelihood

Next, the negative log-likelihood is computed for each sample based on its true class label \(y_i\). The negative log-likelihood for the i-th sample, denoted as NLL, is:

Total Loss

Finally, the total cross-entropy loss is computed as the average of the negative log-likelihoods across all N samples:

By minimizing this loss function, the model optimizes its parameters to enhance prediction accuracy and generalization.

4 Experiments

In this section, we provide a detailed description of the comprehensive experiments conducted with SCNet to evaluate its performance.

First of all, we describe the UEA datasets [46] used, the evaluation metrics adopted, and the baseline methods chosen for comparison. Then, we conducted comparative and ablation experiments to test the effectiveness and importance of different components of SCNet. What’s more, we presented visualizations to illustrate the details of the learning process for features, providing an intuitive assessment of the performance. Finally, we evaluated the efficiency of different models during training and test phases through runtime analysis.

4.1 Experimental setting

4.1.1 Datasets

We conducted comprehensive experiments on the UEA datasets to evaluate the performance of SCNet. These datasets span multiple domains, including Human Activity Recognition (HAR), Electroencephalogram (EEG), Audio, and other categories.

The UEA datasets we selected have a wide range of feature dimensions, ranging from 3 to 963, with time steps varying from 29 to 1751. The number of classes also varies significantly, from 2 to 26. The dataset sizes are also diverse. For more details, please refer to Table 1, which provides detailed information for each dataset.

4.1.2 Evaluation metrics

In our experiments, we used classification accuracy as the primary evaluation metric, as it directly reflects the model’s performance. We also assessed average accuracy, total best accuracy, average rank, and one-to-one comparison outcomes.

A win occurs when SCNet’s accuracy exceeds that of the benchmark model, a draw happens when the accuracies are equal, and a loss occurs when SCNet’s accuracy is lower. This framework quantifies the model’s performance across different datasets, with more wins and fewer draws and losses indicating better performance.

The average rank shows the model’s competitiveness against multiple benchmarks, calculated by averaging rankings across datasets. Overall, SCNet consistently outperforms all baseline methods in average rank.

4.1.3 Comparison methods

We compared SCNet with several outstanding models, including three deep learning methods (MLSTM-FCN [29], TapNet [17], and TimesNet [18]), two Transformer-based methods (TST [10] and Pyraformer [11]), and two self-supervised learning methods (TS2Vec [12] and MICOS [13]). We introduce the main features and performance advantages of these models as follows:

-

(1)

MLSTM-FCN is built upon the LSTM-FCN model, enhancing its ability to capture important features by incorporating a channel attention mechanism.

-

(2)

TapNet uses random grouping alignment for low-dimen-sional feature extraction and integrates multilayer convolutional networks to enhance this process. To address the issue of limited labeled data, it introduces an attention-based prototype network.

-

(3)

TimesNet effectively captures complex patterns and dynamic changes by analyzing the multi-periodicity of time series data and transforming 1D time series into 2D tensors, processed through the Inception block.

-

(4)

TST employs a Transformer-based encoder to handle MTS, with a robust unsupervised pre-training component that enables excellent performance across various domains, particularly in complex time series analysis involving long-range dependencies.

-

(5)

Pyraformer is a Transformer model based on PAM. It conveys information in a multi-resolution graph structure, effectively representing time series features across different scales.

-

(6)

TS2Vec (self-supervised) is a versatile framework that learns time series representations through hierarchical contrastive learning on augmented contextual views, demonstrating notable superiority across various tasks.

-

(7)

MICOS is a framework that uses a mixed supervised contrastive learning approach to process and analyze spatiotemporal data. This strategy combines self-supervised learning with intra-class and inter-class supervised learning, effectively extracting key spatiotemporal features from complex data.

4.1.4 Parameter settings

Our experiments were conducted using the PyTorch framework in Python on a computing system equipped with a single NVIDIA GeForce RTX 3090 GPU with 24GB memory and an Intel(R) Xeon(R) Silver 4210R CPU.

In SCNet, the ConvNeXtBlock is configured with two layers: the first layer has a kernel size of 5 with a padding of 2, followed by a GELU activation function, and the second layer has a kernel size of 7 with a padding of 3. The ConvScaleBlock is configured with three layers, with kernel sizes of 3, 5, and 7, respectively.

For model training, we used the Adam optimizer with a typical learning rate of 1e-3. To prevent overfitting and enhance generalization, we implemented an early stopping mechanism based on validation loss. Training was set to run for a maximum of 30 epochs, but it would halt early if the validation loss did not significantly decrease for 10 consecutive epochs, as determined by the predefined “patience” value. The same criterion was applied to all comparative methods to ensure consistency and fairness in the results.

4.2 Classification performance evaluation

The classification accuracies, average ranks, and win/draw/loss counts for each method are shown in Table 2, with the best accuracy for each dataset highlighted in bold. It should be noted that the underlined results represent the reproduction of the original experiments. For methods showing substantial differences from the originally reported results due to variations in training parameters, including patience values, random seeds, and hardware configurations, independent implementations and evaluations were performed. This approach aims to maintain a fair and reliable comparison across all methods.

The results indicate that SCNet outperforms all other baseline methods in terms of average ranking and overall performance.

Specifically, SCNet achieves an average accuracy of 0.743 across all ten datasets, significantly surpassing the performance of other models. This result not only indicates that SCNet possesses robust classification capabilities but also demonstrates its wide adaptability in handling various types of MTS data. For instance, on the SelfRegulationSCP1 dataset, SCNet’s accuracy exceeds that of the best competitor by 2.4%, suggesting that SCNet effectively captures key features, thereby enhancing model performance.

Further analysis reveals that SCNet also excels in one-on-one comparisons, winning 9, 10, 10, 8, 10, 9, and 10 times against MLSTM-FCN, TapNet, TimesNet, TST, Pyraformer, TS2Vec, and MICOS, respectively. This underscores SCNet’s superiority in MTSC task. Its average ranking of 1.60 further validates the reliability and stability of SCNet.

Notably, SCNet demonstrates outstanding performance on specific datasets, achieving accuracies of 0.785 and 0.995 on the Heartbeat and SpokenArabicDigits datasets, respectively. This highlights its strong capabilities in processing biological and audio signals. Such impressive performance is attributed not only to SCNet’s architecture but also to its use of spectral analysis and multiscale convolutional structures, which effectively capture the multi-level features within MTS data.

To further substantiate these findings, statistical significance tests were conducted to ensure the reliability of the observed performance differences. The significance test results in Table 2 indicate that SCNet demonstrates statistically significant advantages over the best-performing baseline methods on most datasets, as indicated by p-values below 0.05. Additionally, Fig. 3 displays a critical difference diagram of the results presented in Table 2, illustrating the differences between SCNet and other compared methods across various datasets. As shown in the figure, the performance differences between SCNet and all compared methods are statistically significant, reaching the level of statistical significance.

4.3 Ablation experiments

To evaluate the importance of enhanced_DFT in feature extraction, we replaced it with FFT in the SCNet model, denoting the modified model as \(\textrm{SCNet}_{FFT}\). As shown in the experimental results in Fig. 4, the SCNet model using enhanced_DFT outperformed \(\textrm{SCNet}_{FFT}\) on most datasets. Specifically, enhanced_DFT performs normalization and incorporates Spectral Energy Prioritization, which excels at capturing complex patterns and features in MTS data, thereby improving the model’s classification accuracy.

In the next step, we removed ConvScaleBlock from the SCNet model, naming the new model as \(\textrm{SCNet}_{ out\_conv}\), to explore the effectiveness of this module in MTSC task. The experimental results, as shown in Fig. 5, indicate that the classification accuracy of \(\textrm{SCNet}_{out\_conv}\) decreased on most datasets, suggesting that the ConvScaleBlock captures more useful information during feature extraction, thereby enhancing the model’s performance in MTSC task.

Finally, we present the average accuracy and average ranking of \(\textrm{SCNet}_{FFT}\) and \(\textrm{SCNet}_{out\_conv}\) compared to SCNet on the selected UEA datasets in Table 3. It is evident that SCNet achieved the highest average accuracy and the best average rank compared with all variants, further validating the effectiveness of the proposed components.

4.4 Feature visualization

We utilized the t-SNE algorithm to visualize the features extracted by SCNet and \(\textrm{SCNet}_{out\_conv}\) on three datasets: JapaneseVowels, SpokenArabicDigits, and UWaveGestureLibrary. As shown in Fig. 6, different colors represent data from different classes. The first column displays the features from the original data, the second column shows features learned by \(\textrm{SCNet}_{out\_conv}\), and the third column presents the features extracted by the full SCNet, including ConvScaleBlock.

Figure 6 clearly illustrates that the SCNet, equipped with ConvScaleBlock, demonstrates more distinct and concentrated feature differentiation. This is particularly noticeable in complex classification tasks like JapaneseVowels, where ConvScaleBlock significantly improves the model’s learning capability, facilitating better category distinction. In contrast, although \(\textrm{SCNet}_{out\_conv}\) still demonstrates some classification capability, the boundaries between categories are relatively blurry, highlighting the crucial role of ConvScaleBlock in enhancing the model’s classification performance. This result emphasizes the important role of ConvScaleBlock in enhancing the accuracy of MTSC task.

4.5 Computational cost analysis

In this experiment, we selected TimesNet and Pyraformer as comparison models to evaluate the performance of SCNet in handling classification tasks of varying lengths. These two models were chosen because TimesNet, like SCNet, is a deep learning-based method that ranks closely behind in average accuracy, demonstrating strong data processing capabilities. On the other hand, Pyraformer, with its Transformer-based multi-layer temporal pyramid structure, effectively reduces computational complexity. The PAM ensures that the signal transmission distance between any two nodes in the long time series remains at a constant level, making it representative of high-performance models.

As shown in Table 4, we conducted a detailed analysis of the parameter count (Params) and floating-point operations (FLOPs) of SCNet and the two comparison models. The results indicate that SCNet has significantly fewer Params and FLOPs than Pyraformer across most datasets, while it is slightly higher than TimesNet, but both are within the same order of magnitude, with no significant difference. The slightly higher parameter count and computational complexity of SCNet are primarily due to its innovative design, which introduces a two-step feature extraction process. This design aims to capture more comprehensive feature information, thus enhancing expressiveness and learning capacity. Nevertheless, As shown in Table 5, despite the increase in Params and FLOPs, SCNet demonstrates a significant reduction in training and test time across most datasets, improving computational efficiency without compromising model performance.

In summary, by moderately increasing the parameter count and FLOPs, SCNet successfully achieves a balance between accuracy and efficiency. Particularly for more complex tasks, SCNet not only maintains high classification accuracy but also optimizes the utilization of computational resources, striking an excellent balance between computational demands and model performance. These results indicate that SCNet’s design effectively enhances computational efficiency while ensuring outstanding performance, making it highly scalable and competitive in real-world applications.

5 Conclusion

SCNet is introduced as an innovative MTSC model in this work, leveraging spectral domain analysis and multiscale convolution techniques. The SpecProcessBlock effectively transforms MTS data from the time domain to the frequency domain, capturing key frequency features and converting 1D variations to 2D variations. The ConvScaleBlock further enhances feature extraction, enabling the model to capture dynamics ranging from fine-grained short-term changes to broad long-term trends. Experimental results show that SCNet achieved the highest average classification accuracy of 0.743 compared to seven other advanced methods, and secured the best average ranking of 1.60 on the selected datasets. Additionally, ablation experiments verified the importance of various components of the model.

However, SCNet has room for improvement regarding its sensitivity to input data noise and the risk of overfitting with limited samples. Future work will address these issues by incorporating noise robustness techniques for enhanced stability and using data augmentation to reduce overfitting. We will also continue to explore spectral feature extraction and multiscale convolution structures to improve SCNet’s accuracy and stability with complex time series data.

Data availability and access

The UEA datasets are available at www.timeseriesclassification.com

References

Wu X, Chen H, Wang J et al (2020) Adaptive stock trading strategies with deep reinforcement learning methods. Inf Sci 538:142–158. https://doi.org/10.1016/j.ins.2020.05.066

Wang J, Guo X, Li W et al (2021) Statistical mechanical analysis for unweighted and weighted stock market networks. Pattern Recogn 120:108123. https://doi.org/10.1016/j.patcog.2021.108123

Liao J, Liu D, Su G et al (2021) Recognizing diseases with multivariate physiological signals by a deepcnn-lstm network. Appl Intell 51(11):7933–7945. https://doi.org/10.1007/s10489-021-02309-2

Shuja J, Alanazi E, Alasmary W et al (2021) Covid-19 open source data sets: a comprehensive survey. Appl Intell 51(3):1296–1325. https://doi.org/10.1007/s10489-020-01862-6

Suh WH, Oh S, Ahn CW (2023) Metaheuristic-based time series clustering for anomaly detection in manufacturing industry. Appl Intell 53(19):21723–21742. https://doi.org/10.1007/s10489-023-04594-5

Wang H, Lu W, Tang S et al (2022) Predict industrial equipment failure with time windows and transfer learning. Appl Intell 52(3):2346–2358. https://doi.org/10.1007/s10489-021-02441-z

Chen L, Peng C, Yang C et al (2023) Domain adversarial-based multi-source deep transfer network for cross-production-line time series forecasting. Appl Intell 53(19):22803–22817. https://doi.org/10.1007/s10489-023-04729-8

Wu X, Tao C, Zhang J et al (2023) Space or time for video classification transformers. Appl Intell 53(20):23039–23048. https://doi.org/10.1007/s10489-023-04756-5

Wang J, Liu X, Li W et al (2024) Te-tfn: A text-enhanced transformer fusion network for multimodal knowledge graph completion. IEEE Intell Syst. https://doi.org/10.1109/MIS.2024.3378921

Zerveas G, Jayaraman S, Patel D, et al (2021) A transformer-based framework for multivariate time series representation learning. In: Proceedings of the 27th ACM SIGKDD conference on knowledge discovery & data mining, pp 2114–2124. https://doi.org/10.1145/3447548.3467401

Liu S, Yu H, Liao C, et al (2021) Pyraformer: Low-complexity pyramidal attention for long-range time series modeling and forecasting. In: International conference on learning representations. https://doi.org/10.34726/2945

Yue Z, Wang Y, Duan J, et al (2022) Ts2vec: Towards universal representation of time series. In: Proceedings of the AAAI Conference on Artificial Intelligence, pp 8980–8987. https://doi.org/10.1609/aaai.v36i8.20881

Hao S, Wang Z, Alexander AD et al (2023) Micos: Mixed supervised contrastive learning for multivariate time series classification. Knowl-Based Syst 260:110158. https://doi.org/10.1016/j.knosys.2022.110158

Wen Q, Sun L, Yang F, et al (2020) Time series data augmentation for deep learning: A survey. arXiv preprint arXiv:2002.12478. https://doi.org/10.48550/arXiv.2002.12478

Ismail Fawaz H, Lucas B, Forestier G et al (2020) Inceptiontime: Finding alexnet for time series classification. Data Min Knowl Disc 34(6):1936–1962. https://doi.org/10.1007/s10618-020-00710-y

Karim F, Majumdar S, Darabi H et al (2019) Multivariate lstm-fcns for time series classification. Neural Netw 116:237–245. https://doi.org/10.1016/j.neunet.2019.04.014

Zhang X, Gao Y, Lin J, et al (2020) Tapnet: Multivariate time series classification with attentional prototypical network. In: Proceedings of the AAAI conference on artificial intelligence, pp 6845–6852. https://doi.org/10.1609/aaai.v34i04.6165

Wu H, Hu T, Liu Y, et al (2022) Timesnet: Temporal 2d-variation modeling for general time series analysis. arXiv preprint arXiv:2210.02186. https://doi.org/10.48550/arXiv.2210.02186

Chen W, Shi K (2019) A deep learning framework for time series classification using relative position matrix and convolutional neural network. Neurocomputing 359:384–394. https://doi.org/10.1016/j.neucom.2019.06.032

Fahim SR, Sarker Y, Sarker SK et al (2020) Self attention convolutional neural network with time series imaging based feature extraction for transmission line fault detection and classification. Electr Power Syst Res 187:106437. https://doi.org/10.1016/j.epsr.2020.106437

Fahim M, Fraz K, Sillitti A (2020) Tsi: Time series to imaging based model for detecting anomalous energy consumption in smart buildings. Inf Sci 523:1–13. https://doi.org/10.1016/j.ins.2020.02.069

Li X, Kang Y, Li F (2020) Forecasting with time series imaging. Expert Syst Appl 160:113680. https://doi.org/10.1016/j.eswa.2020.113680

Zhang Y, Gan F, Chen X (2020) Motif difference field: An effective image-based time series classification and applications in machine malfunction detection. In: 2020 IEEE 4th Conference on Energy Internet and Energy System Integration (EI2), IEEE, pp 3079–3083. https://doi.org/10.1109/EI250167.2020.9346704

Li H (2021) Time works well: Dynamic time warping based on time weighting for time series data mining. Inf Sci 547:592–608. https://doi.org/10.1016/j.ins.2020.08.089

Li J, Zhang H, Dong Y et al (2021) An improved self-training method for positive unlabeled time series classification using dtw barycenter averaging. Sensors 21(21):7414. https://doi.org/10.3390/s21217414

Rhif M, Ben Abbes A, Farah IR et al (2019) Wavelet transform application for/in non-stationary time-series analysis: A review. Appl Sci 9(7):1345. https://doi.org/10.3390/app9071345

Yu Y, Zhu Y, Wan D, et al (2019) A novel symbolic aggregate approximation for time series. In: Proceedings of the 13th International Conference on Ubiquitous Information Management and Communication (IMCOM) 2019 13, Springer, pp 805–822. https://doi.org/10.1007/978-3-030-19063-7_65

Zhao H, Pan Z, Tao W (2020) Regularized shapelet learning for scalable time series classification. Comput Netw 173:107171. https://doi.org/10.1016/j.comnet.2020.107171

Karim F, Majumdar S, Darabi H et al (2019) Multivariate lstm-fcns for time series classification. Neural Netw 116:237–245. https://doi.org/10.1016/j.neunet.2019.04.014

Li G, Choi B, Xu J, et al (2021) Shapenet: A shapelet-neural network approach for multivariate time series classification. In: Proceedings of the AAAI conference on artificial intelligence, pp 8375–8383. https://doi.org/10.1609/aaai.v35i9.17018

Teng L, Li H (2019) Karim S (2019) Dmcnn: a deep multiscale convolutional neural network model for medical image segmentation. Journal of Healthcare Engineering 1:8597606. https://doi.org/10.1155/2019/8597606

Shibu DS, Priyadharsini SS (2021) Multi scale decomposition based medical image fusion using convolutional neural network and sparse representation. Biomed Signal Process Control 69:102789. https://doi.org/10.1016/j.bspc.2021.102789

Agnes SA, Anitha J, Pandian SIA et al (2020) Classification of mammogram images using multiscale all convolutional neural network (ma-cnn). J Med Syst 44(1):30. https://doi.org/10.1007/s10916-019-1494-z

Zhu W, Omar M (2023) Multiscale audio spectrogram transformer for efficient audio classification. In: ICASSP 2023-2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), IEEE, pp 1–5. https://doi.org/10.1109/ICASSP49357.2023.10096513

Liu J, Zhang Y, Lv D et al (2022) Birdsong classification based on ensemble multi-scale convolutional neural network. Sci Rep 12(1):8636. https://doi.org/10.1038/s41598-022-12121-8

Wu X, Jin H, Ye X et al (2020) Multiscale convolutional and recurrent neural network for quality prediction of continuous casting slabs. Processes 9(1):33. https://doi.org/10.3390/pr9010033

Yang G, Zhong Y, Yang L et al (2020) Fault detection of harmonic drive using multiscale convolutional neural network. IEEE Trans Instrum Meas 70:1–11. https://doi.org/10.1109/TIM.2020.3024355

Shi Y, Deng A, Deng M et al (2020) Enhanced lightweight multiscale convolutional neural network for rolling bearing fault diagnosis. IEEE Access 8:217723–217734. https://doi.org/10.1109/ACCESS.2020.3041735

Cao D, Wang Y, Duan J, et al (2021) Spectral temporal graph neural network for multivariate time-series forecasting. arXiv preprint arXiv:2103.07719. https://doi.org/10.48550/arXiv.2103.07719

Xu JL, Hugelier S, Zhu H et al (2021) Deep learning for classification of time series spectral images using combined multi-temporal and spectral features. Anal Chim Acta 1143:9–20. https://doi.org/10.1016/j.aca.2020.11.018

Ruiz AP, Flynn M, Large J et al (2021) The great multivariate time series classification bake off: a review and experimental evaluation of recent algorithmic advances. Data Min Knowl Disc 35(2):401–449. https://doi.org/10.1007/s10618-020-00727-3

Iwana BK, Uchida S (2021) An empirical survey of data augmentation for time series classification with neural networks. PLoS ONE 16(7):e0254841. https://doi.org/10.1371/journal.pone.0254841

Ismail Fawaz H, Forestier G, Weber J et al (2019) Deep learning for time series classification: a review. Data Min Knowl Disc 33(4):917–963. https://doi.org/10.1007/s10618-019-00619-1

Demirkaya A, Chen J, Oymak S (2020) Exploring the role of loss functions in multiclass classification. In: 2020 54th annual conference on information sciences and systems (ciss), IEEE, pp 1–5. https://doi.org/10.1109/CISS48834.2020.1570627167

Mao A, Mohri M, Zhong Y (2023) Cross-entropy loss functions: Theoretical analysis and applications. arXiv preprint arXiv:2304.07288. https://doi.org/10.48550/arXiv.2304.07288

Bagnall A, Dau HA, Lines J, et al (2018) The uea multivariate time series classification archive, 2018. arXiv preprint arXiv:1811.00075. https://doi.org/10.48550/arXiv.1811.00075

Acknowledgements

This work is supported by the National Key Research and Development Program of China (2022YFB3707800), the National Natural Science Foundation of China (No. 62172267), the State Key Program of National Natural Science Foundation of China (Grant No. 61936001), the Project of Key Laboratory of Silicate Cultural Relics Conservation (Shanghai University), Ministry of Education (No. SCRC2023ZZ02ZD).

Funding

Open access funding provided by Hong Kong Baptist University Library.

Author information

Authors and Affiliations

Contributions

Xing Wu: Conceptualisation, Methodology, Funding Acquisition, Writing - Review & Editing. Xinyu Xing: Writing - Original Draft, Methodology. Junfeng Yao: Supervision, Validation. Quan Qian: Supervision, Validation. Jun Song: Supervision, Validation.

Corresponding authors

Ethics declarations

Conflict of interest

The authors declare that they have no Conflict of interest.

Ethics approval and consent to participate

All authors give consent to participate.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wu, X., Xing, X., Yao, J. et al. Scnet: spectral convolutional networks for multivariate time series classification. Appl Intell 55, 456 (2025). https://doi.org/10.1007/s10489-025-06352-1

Accepted:

Published:

DOI: https://doi.org/10.1007/s10489-025-06352-1