Abstract

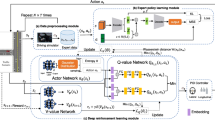

Offline Reinforcement Learning (RL), which optimizes policies from previously collected datasets, is a promising approach for tasks where direct interaction with the environment is infeasible due to the high risk or cost of errors, such as autonomous vehicle (AV) applications. However, offline RL faces a critical challenge: extrapolation errors arising from out-of-distribution (OOD) data. In this paper, we propose Attention Ensemble Mixture (AEM), a novel offline RL algorithm that leverages ensemble learning and an attention mechanism. Ensemble learning increases the confidence of Q-function predictions, while the attention mechanism evaluates the uncertainty of the selected actions. By assigning appropriate attention weights to each Q-head, AEM down-weights OOD actions and up-weights in-distribution actions. We further introduce three key improvements to enhance the robustness and generality of AEM: attention-weighted Bellman backups, KL divergence regularization, and delayed attention updates. Extensive comparative experiments show that AEM outperforms several state-of-the-art ensemble offline RL algorithms, while ablation studies underscore the significance of the proposed enhancements. In AV tasks, AEM outperforms the other methods in both offline and online evaluations.

Notes

Because some ensemble RL algorithms are designed for offline RL tasks, they are classified as offline RL algorithms in the following taxonomy.

Our data and code are available at https://github.com/Mr-XcHan/AEM.

References

Afsar MM, Crump T, Far B (2022) Reinforcement learning based recommender systems: A survey. ACM Comput Surv 55(7). https://doi.org/10.1145/3543846

Mazyavkina N, Sviridov S, Ivanov S, Burnaev E (2021) Reinforcement learning for combinatorial optimization: A survey. Comput Oper Res 134:105400. https://doi.org/10.1016/j.cor.2021.105400

Ouyang L, Wu J, Jiang X, Almeida D, Wainwright C, Mishkin P, Zhang C, Agarwal S, Slama K, Ray A, Schulman J, Hilton J, Kelton F, Miller L, Simens M, Askell A, Welinder P, Christiano PF, Leike J, Lowe R (2022) Training language models to follow instructions with human feedback. In: Koyejo S, Mohamed S, Agarwal A, Belgrave D, Cho K, Oh A (eds) Advances in Neural Information Processing Systems, vol 35, pp 27730–27744. Curran Associates, Inc. https://proceedings.neurips.cc/paper_files/paper/2022/file/b1efde53be364a73914f58805a001731-Paper-Conference.pdf

Han X, Afifi H, Moungla H, Marot M (2024) Leaky PPO: A simple and efficient RL algorithm for autonomous vehicles. In: 2024 International Joint Conference on Neural Networks (IJCNN), pp 1–7. https://doi.org/10.1109/IJCNN60899.2024.10650450

Nikulin A, Kurenkov V, Tarasov D, Akimov D, Kolesnikov S (2023) Q-Ensemble for Offline RL: Don’t Scale the Ensemble, Scale the Batch Size. arXiv:2211.11092

Fang Z, Li Y, Lu J, Dong J, Han B, Liu F (2022) Is out-of-distribution detection learnable? In: Koyejo S, Mohamed S, Agarwal A, Belgrave D, Cho K, Oh A (eds) Advances in Neural Information Processing Systems, vol 35, pp 37199–37213. Curran Associates, Inc. https://proceedings.neurips.cc/paper_files/paper/2022/file/f0e91b1314fa5eabf1d7ef6d1561ecec-Paper-Conference.pdf

Fujimoto S, Meger D, Precup D (2019) Off-policy deep reinforcement learning without exploration. In: Chaudhuri K, Salakhutdinov R (eds) Proceedings of the 36th International Conference on Machine Learning. Proceedings of Machine Learning Research, vol 97, pp 2052–2062. PMLR. https://proceedings.mlr.press/v97/fujimoto19a.html

Kumar A, Fu J, Soh M, Tucker G, Levine S (2019) Stabilizing off-policy Q-learning via bootstrapping error reduction. In: Wallach H, Larochelle H, Beygelzimer A, Alché-Buc F, Fox E, Garnett R (eds) Advances in Neural Information Processing Systems, vol 32. Curran Associates, Inc. https://proceedings.neurips.cc/paper_files/paper/2019/file/c2073ffa77b5357a498057413bb09d3a-Paper.pdf

Wu Y, Tucker G, Nachum O (2019) Behavior Regularized Offline Reinforcement Learning. arXiv:1911.11361

Zhang C, Kuppannagari S, Viktor P (2021) BRAC+: Improved behavior regularized actor critic for offline reinforcement learning. In: Balasubramanian VN, Tsang I (eds) Proceedings of The 13th Asian Conference on Machine Learning. Proceedings of Machine Learning Research, vol 157, pp 204–219. PMLR. https://proceedings.mlr.press/v157/zhang21a.html

Kumar A, Zhou A, Tucker G, Levine S (2020) Conservative Q-learning for offline reinforcement learning. In: Larochelle H, Ranzato M, Hadsell R, Balcan MF, Lin H (eds) Advances in Neural Information Processing Systems, vol 33, pp 1179–1191. Curran Associates, Inc. https://proceedings.neurips.cc/paper_files/paper/2020/file/0d2b2061826a5df3221116a5085a6052-Paper.pdf

An G, Moon S, Kim J-H, Song HO (2021) Uncertainty-based offline reinforcement learning with diversified Q-ensemble. In: Ranzato M, Beygelzimer A, Dauphin Y, Liang PS, Vaughan JW (eds) Advances in Neural Information Processing Systems, vol 34, pp 7436–7447. Curran Associates, Inc. https://proceedings.neurips.cc/paper_files/paper/2021/file/3d3d286a8d153a4a58156d0e02d8570c-Paper.pdf

Bai C, Wang L, Yang Z, Deng Z, Garg A, Liu P, Wang Z (2022) Pessimistic Bootstrapping for Uncertainty-Driven Offline Reinforcement Learning. arXiv:2202.11566

Ghasemipour K, Gu SS, Nachum O (2022) Why so pessimistic? Estimating uncertainties for offline RL through ensembles, and why their independence matters. In: Koyejo S, Mohamed S, Agarwal A, Belgrave D, Cho K, Oh A (eds) Advances in Neural Information Processing Systems, vol 35, pp 18267–18281. Curran Associates, Inc. https://proceedings.neurips.cc/paper_files/paper/2022/file/7423902b5534e2b267438c85444a54b1-Paper-Conference.pdf

Wang Z, Novikov A, Zolna K, Merel JS, Springenberg JT, Reed SE, Shahriari B, Siegel N, Gulcehre C, Heess N, Freitas N (2020) Critic regularized regression. In: Larochelle H, Ranzato M, Hadsell R, Balcan MF, Lin H (eds) Advances in Neural Information Processing Systems, vol 33, pp 7768–7778. Curran Associates, Inc. https://proceedings.neurips.cc/paper_files/paper/2020/file/588cb956d6bbe67078f29f8de420a13d-Paper.pdf

Sheikh H, Phielipp M, Boloni L (2021) Maximizing ensemble diversity in deep reinforcement learning. In: International Conference on Learning Representations. https://openreview.net/forum?id=hjd-kcpDpf2

Csiszár I (1975) I-divergence geometry of probability distributions and minimization problems. Ann Probab 3(1):146–158

Mnih V, Kavukcuoglu K, Silver D, Rusu AA, Veness J, Bellemare MG, Graves A, Riedmiller M, Fidjeland AK, Ostrovski G, et al. (2015) Human-level control through deep reinforcement learning. Nature 518(7540):529–533. https://doi.org/10.1038/nature14236

Anschel O, Baram N, Shimkin N (2017) Averaged-DQN: Variance reduction and stabilization for deep reinforcement learning. In: Precup D, Teh YW (eds) Proceedings of the 34th International Conference on Machine Learning. Proceedings of Machine Learning Research, vol 70, pp 176–185. PMLR. https://proceedings.mlr.press/v70/anschel17a.html

Agarwal R, Schuurmans D, Norouzi M (2020) An optimistic perspective on offline reinforcement learning. In: Daumé III H, Singh A (eds) Proceedings of the 37th International Conference on Machine Learning. Proceedings of Machine Learning Research, vol 119, pp 104–114. PMLR. https://proceedings.mlr.press/v119/agarwal20c.html

Sutton RS, Barto AG (2018) Reinforcement Learning: An Introduction. MIT Press

Williams RJ (1992) Simple statistical gradient-following algorithms for connectionist reinforcement learning. Mach Learn 8:229–256. https://doi.org/10.1007/BF00992696

Schulman J, Levine S, Abbeel P, Jordan M, Moritz P (2015) Trust region policy optimization. In: Bach F, Blei D (eds) Proceedings of the 32nd International Conference on Machine Learning. Proceedings of Machine Learning Research, vol 37, pp 1889–1897. PMLR, Lille, France. https://proceedings.mlr.press/v37/schulman15.html

Schulman J, Wolski F, Dhariwal P, Radford A, Klimov O (2017) Proximal Policy Optimization Algorithms. arXiv:1707.06347

Lillicrap TP, Hunt JJ, Pritzel A, Heess N, Erez T, Tassa Y, Silver D, Wierstra D (2019) Continuous control with deep reinforcement learning. arXiv:1509.02971

Levine S, Kumar A, Tucker G, Fu J (2020) Offline Reinforcement Learning: Tutorial, Review, and Perspectives on Open Problems. arXiv:2005.01643

Figueiredo Prudencio R, Maximo MROA, Colombini EL (2024) A survey on offline reinforcement learning: Taxonomy, review, and open problems. IEEE Trans Neural Netw Learn Syst 35(8):10237–10257. https://doi.org/10.1109/TNNLS.2023.3250269

Watkins CJ, Dayan P (1992) Q-learning. Mach Learn 8:279–292

Huber PJ (1992) In: Kotz S, Johnson NL (eds) Robust Estimation of a Location Parameter, pp 492–518. Springer, New York, NY. https://doi.org/10.1007/978-1-4612-4380-9_35

Han X, Afifi H, Marot M (2025) Projection Implicit Q-Learning with Support Constraint for Offline Reinforcement Learning. arXiv:2501.08907

Chen RY, Sidor S, Abbeel P, Schulman J (2017) UCB Exploration via Q-Ensembles. arXiv:1706.01502

Lee K, Laskin M, Srinivas A, Abbeel P (2021) SUNRISE: A simple unified framework for ensemble learning in deep reinforcement learning. In: Meila M, Zhang T (eds) Proceedings of the 38th International Conference on Machine Learning. Proceedings of Machine Learning Research, vol 139, pp 6131–6141. PMLR. https://proceedings.mlr.press/v139/lee21g.html

Peer O, Tessler C, Merlis N, Meir R (2021) Ensemble bootstrapping for Q-learning. In: Meila M, Zhang T (eds) Proceedings of the 38th International Conference on Machine Learning. Proceedings of Machine Learning Research, vol 139, pp 8454–8463. PMLR. https://proceedings.mlr.press/v139/peer21a.html

Lan Q, Pan Y, Fyshe A, White M (2021) Maxmin Q-learning: Controlling the Estimation Bias of Q-learning. arXiv:2002.06487

Liang L, Xu Y, McAleer S, Hu D, Ihler A, Abbeel P, Fox R (2022) Reducing variance in temporal-difference value estimation via ensemble of deep networks. In: Chaudhuri K, Jegelka S, Song L, Szepesvari C, Niu G, Sabato S (eds) Proceedings of the 39th International Conference on Machine Learning. Proceedings of Machine Learning Research, vol 162, pp 13285–13301. PMLR. https://proceedings.mlr.press/v162/liang22c.html

Fujimoto S, Gu SS (2021) A minimalist approach to offline reinforcement learning. In: Ranzato M, Beygelzimer A, Dauphin Y, Liang PS, Vaughan JW (eds) Advances in Neural Information Processing Systems, vol 34, pp 20132–20145. Curran Associates, Inc. https://proceedings.neurips.cc/paper_files/paper/2021/file/a8166da05c5a094f7dc03724b41886e5-Paper.pdf

Srivastava N, Hinton G, Krizhevsky A, Sutskever I, Salakhutdinov R (2014) Dropout: a simple way to prevent neural networks from overfitting. J Mach Learn Res 15(1):1929–1958

Kumar A, Gupta A, Levine S (2020) DisCor: Corrective feedback in reinforcement learning via distribution correction. In: Larochelle H, Ranzato M, Hadsell R, Balcan MF, Lin H (eds) Advances in Neural Information Processing Systems, vol 33, pp 18560–18572. Curran Associates, Inc. https://proceedings.neurips.cc/paper_files/paper/2020/file/d7f426ccbc6db7e235c57958c21c5dfa-Paper.pdf

Fujimoto S, Hoof H, Meger D (2018) Addressing function approximation error in actor-critic methods. In: Dy J, Krause A (eds) Proceedings of the 35th International Conference on Machine Learning. Proceedings of Machine Learning Research, vol 80, pp 1587–1596. PMLR. https://proceedings.mlr.press/v80/fujimoto18a.html

Brockman G, Cheung V, Pettersson L, Schneider J, Schulman J, Tang J, Zaremba W (2016) OpenAI Gym. arXiv:1606.01540

Li Q, Peng Z, Feng L, Zhang Q, Xue Z, Zhou B (2023) MetaDrive: Composing diverse driving scenarios for generalizable reinforcement learning. IEEE Trans Pattern Anal Mach Intell 45(3):3461–3475. https://doi.org/10.1109/TPAMI.2022.3190471

Acknowledgements

The authors would like to thank the anonymous reviewers for valuable feedback and suggestions.


Appendices

Appendix A

Theorem 1

Assume that \(Q^*(s, a)\) is the optimal Q-function and that the Bellman optimality operator is \((\mathcal {T} Q)(s, a):= r + \gamma \mathbb {E}_{P(s'|s, a)} \left[ \mathop {\max }_{a'} Q(s', a') \right]\). Define \(\zeta _{k}(s, a) = \left| Q_{k}(s, a) - Q^{*}(s, a)\right| \) as the total error at the \(k\)-th iteration, and \(\delta _{k}(s, a) = \left| Q_{k}(s, a) - \mathcal {T} Q_{k-1}(s, a)\right| \) as the Bellman error at the \(k\)-th iteration. Then, we have
\[\zeta _{k}(s, a) \le \delta _{k}(s, a) + \gamma \mathop {\max }_{a'} \mathbb {E}_{P(s'|s, a)}\left[ \zeta _{k-1}(s', a') \right] .\]

Proof

where \(\textcircled {1}\) uses the fixed-point property \(Q^{*} = \mathcal {T} Q^{*}\), and \(\textcircled {2}\) follows from Jensen’s inequality.

Thus, \(\zeta _{k}(s, a) \le \delta _{k}(s, a) + \gamma \mathop {\max }_{a'} \mathbb {E}_{P(s'|s, a)}[\zeta _{k-1}(s', a')]\). \(\square \)
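Taking the supremum over \((s, a)\) turns Theorem 1 into a recursion on sup-norm errors, because \(\mathop {\max }_{a'} \mathbb {E}_{P(s'|s, a)}[\zeta _{k-1}(s', a')] \le \|\zeta _{k-1}\|_{\infty }\). Unrolling it gives the following error-propagation bound, offered as a sketch that additionally assumes \(\gamma < 1\) and bounded errors:

\[
\begin{aligned}
\|\zeta _{k}\|_{\infty } &\le \|\delta _{k}\|_{\infty } + \gamma \|\zeta _{k-1}\|_{\infty } \\
&\le \sum _{t=0}^{k-1} \gamma ^{t} \|\delta _{k-t}\|_{\infty } + \gamma ^{k} \|\zeta _{0}\|_{\infty } \\
&\le \frac{\bar{\delta }}{1-\gamma } + \gamma ^{k} \|\zeta _{0}\|_{\infty },
\end{aligned}
\]

where \(\bar{\delta } = \max _{1 \le t \le k} \|\delta _{t}\|_{\infty }\). The Bellman error made at each iteration can therefore be amplified by up to a factor of \(1/(1-\gamma )\) in the total error, which illustrates why keeping the Bellman errors introduced by OOD actions small matters.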

Appendix B

The advantages of the AWBB loss function over the WQF loss function are illustrated by the simple example shown in Fig. 11. Assume that the agent chooses action \(a\) in state \(s\) and transitions to state \(s'\), and that the Q-function estimate is \(Q(s, a) = 3\). There are three candidate next actions \(a_1, a_2, a_3\), and the ensemble Q-network contains three target Q-networks.

In Fig. 11, the OOD actions (\(a_1\) and \(a_2\)) are marked with dotted lines, and red marks the greedy action of each target Q-network, \(\mathop {\arg \max }_{a_j} Q^{i}(s', a_j)\), for \(i = 1, 2, 3\) and \(j = 1, 2, 3\), where the superscript \(i\) indexes the \(i\)-th target Q-network and the subscript \(j\) indexes the \(j\)-th action.

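For simplicity, we assume that the reward \(r=0\) and the discount factor \(\gamma =1\). According to the Bellman error \(\delta (s, a) = \left| Q(s, a) - \mathcal {T} Q(s, a) \right| \), the Bellman error of the \(i\)-th target Q-network is
\[\delta ^{i}(s, a) = \left| Q(s, a) - \left( r + \gamma \mathop {\max }_{a_j} Q^{i}(s', a_j) \right) \right| = \left| 3 - \mathop {\max }_{a_j} Q^{i}(s', a_j) \right| , \quad i = 1, 2, 3.\]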

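Then, we assume that the trained attention weights are strictly inversely proportional to the Bellman errors, since the attention network should assign lower weights to larger Bellman errors. Thus, writing \(\delta ^{i}(s, a)\) for the Bellman error of the \(i\)-th target Q-network, the normalized attention weight of each target Q-network is
\[\alpha _{i} = \frac{1/\delta ^{i}(s, a)}{\sum _{k=1}^{3} 1/\delta ^{k}(s, a)}, \quad i = 1, 2, 3.\]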

For the WQF loss function \(\mathcal {L}_{WQF}(s, a, s', \alpha )\),

For the AWBB loss function \(\mathcal {L}_{AWBB}(s, a, s', \alpha )\),

The example above leads to two observations. First, even though the attention weights are appropriately assigned to each target Q-network, the agent with \(\mathcal {L}_{WQF}(s, a, s', \alpha )\) selects \(a_1 = \mathop {\arg \max }_{a_j} \sum _{i=1}^{3} \alpha _{i} Q^{i}(s', a_j)\), which is an OOD action. Second, although the agent with \(\mathcal {L}_{AWBB}(s, a, s', \alpha )\) uses the OOD actions \(a_1\) and \(a_2\) to update the target Q-networks \(\text{T-Q-Head}_1\) and \(\text{T-Q-Head}_2\), respectively, the corresponding loss values, 6156/361 and 8208/361, are larger than \(\mathcal {L}_{WQF}\), which means that OOD actions incur a larger loss. Meanwhile, the agent with \(\mathcal {L}_{AWBB}(s, a, s', \alpha )\) uses the in-distribution action \(a_3\) selected by \(\text{T-Q-Head}_3\), which introduces a smaller error than \(\mathcal {L}_{WQF}(s, a, s', \alpha )\). Therefore, \(\mathcal {L}_{AWBB}(s, a, s', \alpha )\) is more consistent with the analysis of Theorem 1.
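The comparison can be replayed numerically. The NumPy sketch below uses hypothetical Q-values chosen to mimic the situation of Fig. 11 (they are not the figure's values), with \(r = 0\), \(\gamma = 1\) and \(Q(s, a) = 3\) as in the example. The loss forms are assumptions for illustration only: WQF is taken as a single squared TD error against the maximum of the attention-weighted mixture, and AWBB as \(\alpha _i\) times the squared TD error of head \(i\) against its own greedy target. The function names are hypothetical.

```python
import numpy as np


def bellman_errors(q_sa, q_next, r=0.0, gamma=1.0):
    """delta_i = |Q(s, a) - (r + gamma * max_j Q^i(s', a_j))| for every target head i."""
    return np.abs(q_sa - (r + gamma * q_next.max(axis=1)))


def inverse_attention(delta):
    """Weights strictly inversely proportional to the Bellman errors, normalized to sum to 1."""
    inv = 1.0 / delta
    return inv / inv.sum()


def wqf_loss(q_sa, q_next, alpha, r=0.0, gamma=1.0):
    """Assumed WQF form: one squared TD error against the max of the attention-weighted mixture."""
    mixed = alpha @ q_next              # sum_i alpha_i * Q^i(s', a_j), one entry per action a_j
    j = int(np.argmax(mixed))           # action selected by the weighted mixture
    return (q_sa - (r + gamma * mixed[j])) ** 2, j


def awbb_losses(q_sa, q_next, alpha, r=0.0, gamma=1.0):
    """Assumed AWBB form: head i bootstraps from its own greedy action, scaled by alpha_i."""
    greedy = np.argmax(q_next, axis=1)  # greedy action index of each target head
    targets = r + gamma * q_next.max(axis=1)
    return alpha * (q_sa - targets) ** 2, greedy


# Hypothetical numbers (not taken from Fig. 11): rows are target heads, columns are a_1, a_2, a_3.
q_sa = 3.0
q_next = np.array([
    [10.0, 2.0, 3.0],  # head 1 overvalues the OOD action a_1
    [4.0, 8.0, 3.0],   # head 2 overvalues the OOD action a_2
    [4.0, 1.0, 5.0],   # head 3 is greedy on the in-distribution action a_3
])

delta = bellman_errors(q_sa, q_next)              # [7, 5, 2]
alpha = inverse_attention(delta)                  # [10, 14, 35] / 59
l_wqf, a_wqf = wqf_loss(q_sa, q_next, alpha)      # a_wqf == 0, i.e. the OOD action a_1
l_awbb, a_awbb = awbb_losses(q_sa, q_next, alpha)

print("WQF action index:", a_wqf, "loss:", round(float(l_wqf), 3))
print("AWBB greedy indices:", a_awbb.tolist(), "losses:", np.round(l_awbb, 3).tolist())
```

With these hypothetical values, the weighted mixture favours the OOD action \(a_1\) (296/59 versus 247/59 for \(a_3\)), giving a WQF loss of \((119/59)^2 \approx 4.07\), whereas the AWBB losses are 490/59, 350/59 and 140/59: the two heads that bootstrap from OOD actions incur larger losses than WQF and the in-distribution head a smaller one, the same qualitative pattern as in the example.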

Appendix C

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Han, X., Afifi, H., Moungla, H. et al. Attention ensemble mixture: a novel offline reinforcement learning algorithm for autonomous vehicles. Appl Intell 55, 508 (2025). https://doi.org/10.1007/s10489-025-06403-7


DOI: https://doi.org/10.1007/s10489-025-06403-7