Abstract

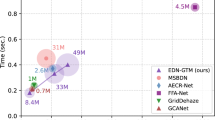

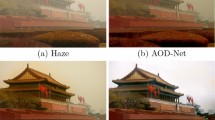

Existing dehazing models perform well on synthetic scenes but remain insufficiently robust in real scenes. In this paper, we propose Dark-ControlNet, a general-purpose dehazing enhancement plug-in that uses the dark channel prior as a control condition. It can be attached to existing dehazing models and, with simple fine-tuning, improves both their robustness in real scenes and their dehazing performance. We first freeze the backbone network to preserve its encoding and decoding capabilities, and feed the highly robust dark channel prior to the plug-in network as conditional information so that it acquires prior knowledge. Then, through the Haze&Dark (HD) module, we fuse the dark channel prior features into the backbone network by mean-variance alignment, and guide the backbone network to decode clear images by fine-tuning the plug-in network. Experimental results show that existing dehazing models enhanced by Dark-ControlNet perform well on both synthetic and real datasets.
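As a rough illustration of the two ingredients named in the abstract, the sketch below computes a dark channel (the per-pixel minimum over colour channels followed by a minimum over a local patch) and applies a mean-variance alignment to a single feature channel. It is a minimal, pure-Python sketch and not the authors' implementation: the function names `dark_channel` and `align_mean_variance`, the patch size, the `eps` constant, and the direction of the alignment (backbone features re-scaled to the statistics of the dark-channel features, in the style of adaptive instance normalization) are all assumptions made for illustration. The internal design of the HD module is not described in this section.

```python
from statistics import fmean, pstdev


def dark_channel(image, patch=3):
    """Dark channel of an H x W image given as nested lists of (r, g, b) tuples.

    Illustrative helper (hypothetical name): takes the minimum over the colour
    channels at each pixel, then the minimum over a square patch around it.
    """
    h, w = len(image), len(image[0])
    r = patch // 2
    # Per-pixel minimum over the three colour channels.
    min_rgb = [[min(px) for px in row] for row in image]
    dark = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Minimum over the local patch, clipped at the image border.
            dark[y][x] = min(
                min_rgb[j][i]
                for j in range(max(0, y - r), min(h, y + r + 1))
                for i in range(max(0, x - r), min(w, x + r + 1))
            )
    return dark


def align_mean_variance(content, condition, eps=1e-5):
    """AdaIN-style alignment of one feature channel (flat list of floats).

    Assumption: `content` stands in for a backbone feature channel and
    `condition` for the matching dark-channel feature channel. The content is
    normalised with its own statistics, then re-scaled and shifted to the
    mean and standard deviation of the condition.
    """
    mu_c, sigma_c = fmean(content), pstdev(content)
    mu_d, sigma_d = fmean(condition), pstdev(condition)
    return [(v - mu_c) / (sigma_c + eps) * sigma_d + mu_d for v in content]


if __name__ == "__main__":
    hazy = [
        [(0.9, 0.8, 0.7), (0.5, 0.6, 0.4)],
        [(0.3, 0.9, 0.9), (1.0, 1.0, 1.0)],
    ]
    print(dark_channel(hazy, patch=1))
    print(align_mean_variance([1.0, 2.0, 3.0], [10.0, 20.0, 30.0]))
```

In a real network the alignment would be applied per channel to feature maps rather than to flat lists, and the statistics would typically be computed with a tensor library; the plain-list version here only makes the arithmetic explicit.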

Data Availability

The authors do not have permission to share data.

Acknowledgements

This work was supported by Public-welfare Technology Application Research of Zhejiang Province in China under Grant LGG22F020032, Wenzhou Basic Industrial Project in China under Grant G2023093, Startup Foundation of Hangzhou Dianzi University under Grant KYS285624344, and Key Research and Development Project of Zhejiang Province in China under Grant 2021C03137.

Author information

Contributions

Yu Yang: Conceptualization, Methodology, Investigation, Formal Analysis, Data Curation, Software, Visualization, Writing; Xuesong Yin: Supervision, Revision; Yigang Wang: Supervision, Revision.

Ethics declarations

Ethics approval and consent to participate

This article does not contain any studies with human participants or animals performed by any of the authors.

Conflict of interests

All the authors certify that there is no conflict of interest with any individual or organization for the present work.

About this article

Cite this article

Yang, Y., Yin, X. & Wang, Y. Dark-ControlNet: an enhanced dehazing universal plug-in based on the dark channel prior. Appl Intell 55, 564 (2025). https://doi.org/10.1007/s10489-025-06439-9

DOI: https://doi.org/10.1007/s10489-025-06439-9