Abstract

Hierarchical Reinforcement Learning (HRL) is widely applied in complex task scenarios. Where plain model-free reinforcement learning struggles, a hierarchical design makes more efficient use of interaction data, which significantly reduces training costs and improves training success rates. This study applies HRL with a model-free policy layer to learn complex strategies for a robotic arm playing table tennis. A progressive pipeline of pre-training, self-play training, and self-play training with high-level winning strategies enhances the robustness of the low-level hitting strategies. In addition, a novel decay reward mechanism is used to train the high-level agent, improving its win rate in adversarial matches against other methods. After pre-training and adversarial training, the forehand strategy achieved an average of 52 rally cycles and the backhand strategy an average of 48 rally cycles in testing. The high-level strategy trained with the decay reward mechanism achieved favorable scores against competing strategies.

Data Availability

Data will be made available on request. Requests for the data from this study should be directed to the corresponding author.

References

Li Y (2017) Deep reinforcement learning: an overview. arXiv:1701.07274

Ibarz J, Tan J, Finn C, Kalakrishnan M, Pastor P, Levine S (2021) How to train your robot with deep reinforcement learning: lessons we have learned. Int J Robot Res 40(4–5):698–721

Lample G, Chaplot DS (2017) Playing fps games with deep reinforcement learning. In: Proceedings of the AAAI conference on artificial intelligence, vol. 31

Yang Y, Juntao L, Lingling P (2020) Multi-robot path planning based on a deep reinforcement learning dqn algorithm. CAAI Trans Intell Technol 5(3):177–183

Arulkumaran K, Deisenroth MP, Brundage M, Bharath AA (2017) Deep reinforcement learning: a brief survey. IEEE Signal Process Mag 34(6):26–38

François-Lavet V, Henderson P, Islam R, Bellemare MG, Pineau J et al (2018) An introduction to deep reinforcement learning. Found Trends Mach Learn 11(3–4):219–354

Lillicrap TP, Hunt JJ, Pritzel A, Heess N, Erez T, Tassa Y, Silver D, Wierstra D (2015) Continuous control with deep reinforcement learning. arXiv:1509.02971

Atkeson CG, Santamaria JC (1997) A comparison of direct and model-based reinforcement learning. In: Proceedings of international conference on robotics and automation, vol. 4. IEEE, pp 3557–3564

Barto AG, Mahadevan S (2003) Recent advances in hierarchical reinforcement learning. Discrete Event Dyn Syst 13(1–2):41–77

Mahjourian R, Miikkulainen R, Lazic N, Levine S, Jaitly N (2018) Hierarchical policy design for sample-efficient learning of robot table tennis through self-play. arXiv:1811.12927

Tebbe J, Krauch L, Gao Y, Zell A (2021) Sample-efficient reinforcement learning in robotic table tennis. In: 2021 IEEE International Conference on Robotics and Automation (ICRA). IEEE, pp 4171–4178

Gao W, Graesser L, Choromanski K, Song X, Lazic N, Sanketi P, Sindhwani V, Jaitly N (2020) Robotic table tennis with model-free reinforcement learning. In: 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, pp 5556–5563

Wang Y, Sun Z, Luo Y, Zhang H, Zhang W, Dong K, He Q, Zhang Q, Cheng E, Song B (2023) A novel trajectory-based ball spin estimation method for table tennis robot. IEEE Trans Ind Electron 1–11. https://doi.org/10.1109/TIE.2023.3319743

Wang Y, Luo Y, Zhang H, Zhang W, Dong K, He Q, Zhang Q, Cheng E, Sun Z, Song B (2023) A table-tennis robot control strategy for returning high-speed spinning ball. IEEE/ASME Trans Mechatron 1–10. https://doi.org/10.1109/TMECH.2023.3316165

Ding T, Graesser L, Abeyruwan S, D’Ambrosio DB, Shankar A, Sermanet P, Sanketi PR, Lynch C (2022) Learning high speed precision table tennis on a physical robot. In: 2022 IEEE/RSJ international conference on Intelligent Robots and Systems (IROS). pp 10780–10787. https://doi.org/10.1109/IROS47612.2022.9982205

Ma H, Büchler D, Schölkopf B, Muehlebach M (2023) Reinforcement learning with model-based feedforward inputs for robotic table tennis. Auton Robots 1–17

Ma H, Fan J, Wang Q (2022) A novel ping-pong task strategy based on model-free multi-dimensional q-function deep reinforcement learning. In: 2022 8th International Conference on Systems and Informatics (ICSAI). IEEE, pp 1–6

Al-Emran M (2015) Hierarchical reinforcement learning: a survey. Int J Comput Digital Syst 4(02)

Yuan J, Zhang J, Yan J (2022) Towards solving industrial sequential decision-making tasks under near-predictable dynamics via reinforcement learning: an implicit corrective value estimation approach

Cuayáhuitl H, Dethlefs N, Frommberger L, Richter K-F, Bateman JA (2010) Generating adaptive route instructions using hierarchical reinforcement learning. In: Spatial cognition, vol. 7. Springer, pp 319–334

Xu X, Huang T, Wei P, Narayan A, Leong T-Y (2020) Hierarchical reinforcement learning in starcraft ii with human expertise in subgoals selection. arXiv:2008.03444

Dethlefs N, Cuayáhuitl H (2015) Hierarchical reinforcement learning for situated natural language generation. Nat Lang Eng 21(3):391–435

Araki B, Li X, Vodrahalli K, DeCastro J, Fry M, Rus D (2021) The logical options framework. In: International conference on machine learning. PMLR, pp 307–317

Nachum O, Gu SS, Lee H, Levine S (2018) Data-efficient hierarchical reinforcement learning. Adv Neural Inf Process Syst 31

Bacon P-L, Harb J, Precup D (2017) The option-critic architecture. In: Proceedings of the AAAI conference on artificial intelligence, vol. 31

Kulkarni TD, Narasimhan K, Saeedi A, Tenenbaum J (2016) Hierarchical deep reinforcement learning: Integrating temporal abstraction and intrinsic motivation. Adv Neural Inf Process Syst 29

Ji Y, Li Z, Sun Y, Peng XB, Levine S, Berseth G, Sreenath K (2022) Hierarchical reinforcement learning for precise soccer shooting skills using a quadrupedal robot. In: 2022 IEEE/RSJ international conference on Intelligent Robots and Systems (IROS). pp 1479–1486. https://doi.org/10.1109/IROS47612.2022.9981984

Huang X, Li Z, Xiang Y, Ni Y, Chi Y, Li Y, Yang L, Peng XB, Sreenath K (2023) Creating a dynamic quadrupedal robotic goalkeeper with reinforcement learning. In: 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). pp 2715–2722 . https://doi.org/10.1109/IROS55552.2023.10341936

Hu R, Zhang Y (2022) Fast path planning for long-range planetary roving based on a hierarchical framework and deep reinforcement learning. Aerospace 9(2):101

Bai Y, Jin C (2020) Provable self-play algorithms for competitive reinforcement learning. In: International conference on machine learning. PMLR, pp 551–560

Hernandez D, Denamganaï K, Gao Y, York P, Devlin S, Samothrakis S, Walker JA (2019) A generalized framework for self-play training. In: 2019 IEEE Conference on Games (CoG). IEEE, pp 1–8

Zhang H, Yu T (2020) Alphazero. Deep reinforcement learning: fundamentals, research and applications. pp 391–415

Brandão B, De Lima TW, Soares A, Melo L, Maximo MROA (2022) Multiagent reinforcement learning for strategic decision making and control in robotic soccer through self-play. IEEE Access 10:72628–72642. https://doi.org/10.1109/ACCESS.2022.3189021

Lin F, Huang S, Pearce T, Chen W, Tu W-W (2023) Tizero: Mastering multi-agent football with curriculum learning and self-play. arXiv:2302.07515

Wang X, Thomas JD, Piechocki RJ, Kapoor S, Santos-Rodríguez R, Parekh A (2022) Self-play learning strategies for resource assignment in open-ran networks. Comput Netw 206:108682

Andersson RL (1989) Aggressive trajectory generator for a robot ping-pong player. IEEE Control Syst Mag 9(2):15–21

Lin H-I, Yu Z, Huang Y-C (2020) Ball tracking and trajectory prediction for table-tennis robots. Sensors 20(2):333

Miyazaki F, Matsushima M, Takeuchi M (2006) Learning to dynamically manipulate: a table tennis robot controls a ball and rallies with a human being. Advances in robot control: from everyday physics to human-like movements. https://doi.org/10.1007/978-3-540-37347-6_15

Koç O, Maeda G, Peters J (2018) Online optimal trajectory generation for robot table tennis. Robot Auton Syst 105:121–137. https://doi.org/10.1016/j.robot.2018.03.012

Mülling K, Kober J, Peters J (2010) Simulating human table tennis with a biomimetic robot setup. pp 273–282. https://doi.org/10.1007/978-3-642-15193-4_26

Huang Y, Xu D, Tan M, Su H (2011) Trajectory prediction of spinning ball for ping-pong player robot. pp 3434–3439. https://doi.org/10.1109/IROS.2011.6095044

Kyohei A, Masamune N, Satoshi Y (2020) The ping pong robot to return a ball precisely. Omron TECHNICS 51:1–6

Zhao Y, Xiong R, Zhang Y (2017) Model based motion state estimation and trajectory prediction of spinning ball for ping-pong robots using expectation-maximization algorithm. J Intell Robot Syst 87(3):407–423

Lin H-I, Huang Y-C (2019) Ball trajectory tracking and prediction for a ping-pong robot. In: 2019 9th International Conference on Information Science and Technology (ICIST). IEEE, pp 222–227

Abeyruwan SW, Graesser L, D’Ambrosio DB, Singh A, Shankar A, Bewley A, Jain D, Choromanski KM, Sanketi PR (2023) i-sim2real: reinforcement learning of robotic policies in tight human-robot interaction loops. In: Conference on robot learning. PMLR, pp 212–224

Büchler D, Guist S, Calandra R, Berenz V, Schölkopf B, Peters J (2022) Learning to play table tennis from scratch using muscular robots. IEEE Trans Robotics

Zhu Y, Zhao Y, Jin L, Wu J, Xiong R (2018) Towards high level skill learning: Learn to return table tennis ball using monte-carlo based policy gradient method. In: 2018 IEEE international conference on Real-time Computing and Robotics (RCAR). pp 34–41. https://doi.org/10.1109/RCAR.2018.8621776

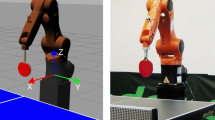

Tebbe J, Gao Y, Sastre-Rienietz M, Zell A (2019) A table tennis robot system using an industrial kuka robot arm. In: Brox T, Bruhn A, Fritz M (eds) Pattern recognition. Springer, Cham, pp 33–45

Tebbe J (2022) Adaptive robot systems in highly dynamic environments: a table tennis robot. PhD thesis, Universität Tübingen

Gao Y, Tebbe J, Zell A (2023) Optimal stroke learning with policy gradient approach for robotic table tennis. Appl Intell 53(11):13309–13322

Kaelbling LP, Littman ML, Moore AW (1996) Reinforcement learning: a survey. J Artif Intell Res 4:237–285

Puterman ML (1990) Markov decision processes. Handbooks Oper Res Management Sci 2:331–434

Watkins CJ, Dayan P (1992) Q-learning. Mach Learn 8:279–292

Fan J, Wang Z, Xie Y, Yang Z (2020) A theoretical analysis of deep q-learning. In: Learning for dynamics and control. PMLR, pp 486–489

Konda V, Tsitsiklis J (1999) Actor-critic algorithms. Adv Neural Inf Process Syst 12:100

Peters J, Vijayakumar S, Schaal S (2005) Natural actor-critic. In: Machine Learning: ECML 2005: 16th European conference on machine learning, Porto, Portugal, October 3-7, 2005. Proceedings 16. Springer, pp 280–291

Haarnoja T, Zhou A, Abbeel P, Levine S (2018) Soft actor-critic: off-policy maximum entropy deep reinforcement learning with a stochastic actor. In: International conference on machine learning. PMLR, pp 1861–1870

Senadeera M, Karimpanal TG, Gupta S, Rana S (2022) Sympathy-based reinforcement learning agents. In: Proceedings of the 21st international conference on autonomous agents and multiagent systems. pp 1164–1172

Acknowledgements

This work was supported by the National Natural Science Foundation of China (GA 61876054).

Ethics declarations

Conflict of interest

The authors have no competing interests to declare that are relevant to the content of this article.

Supplementary Information

Supplementary file 1 (mp4, 7294 KB) is provided as electronic supplementary material.

Appendices

Appendix A: Convergence of pre-training rewards

Appendix B: Convergence failure of reward components during pre-training using the DDPG method

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ma, H., Fan, J., Xu, H. et al. Mastering table tennis with hierarchy: a reinforcement learning approach with progressive self-play training. Appl Intell 55, 562 (2025). https://doi.org/10.1007/s10489-025-06450-0

Profiles

- Qiang Wang