Abstract

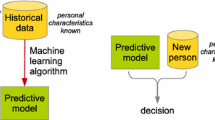

Increasing numbers of decisions about everyday life are made using algorithms. By algorithms we mean predictive models (decision rules) captured from historical data using data mining. Such models often decide prices we pay, select ads we see and news we read online, match job descriptions and candidate CVs, decide who gets a loan, who goes through an extra airport security check, or who gets released on parole. Yet growing evidence suggests that decision making by algorithms may discriminate people, even if the computing process is fair and well-intentioned. This happens due to biased or non-representative learning data in combination with inadvertent modeling procedures. From the regulatory perspective there are two tendencies in relation to this issue: (1) to ensure that data-driven decision making is not discriminatory, and (2) to restrict overall collecting and storing of private data to a necessary minimum. This paper shows that from the computing perspective these two goals are contradictory. We demonstrate empirically and theoretically with standard regression models that in order to make sure that decision models are non-discriminatory, for instance, with respect to race, the sensitive racial information needs to be used in the model building process. Of course, after the model is ready, race should not be required as an input variable for decision making. From the regulatory perspective this has an important implication: collecting sensitive personal data is necessary in order to guarantee fairness of algorithms, and law making needs to find sensible ways to allow using such data in the modeling process.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Notes

European directive 95/46/EG of the European Parliament and the Council of 24th October 1995, [1995] OJ L281/31. See also http://europa.eu.int/eur-lex/en/lif/dat/1995/en_395L0046.html.

This principle is sometimes referred to as the principle of minimality, see Bygrave (2002, p. 341).

Note that, in the European Data Protection Directive and the WBP, this principle applies only to incomplete or inaccurate data, or data that are irrelevant or processed illegitimately.

Proposal for a Regulation of the European Parliament and of the Council on the protection of individuals with regard to the processing of personal data and on the free movement of such data (General Data Protection Regulation), Brussels, 25.1.2012 COM(2012) 11 final 2012/0011 (COD). Available at http://eur-lex.europa.eu/LexUriServ/LexUriServ.do?uri=COM:2012:0011:FIN:EN:PDF.

Art. 15 of the EU directive on the protection of personal data.

ECJ, C-127/07, 16 December 2008.

Obtained from: http://data.princeton.edu/wws509/datasets/#salary.

References

Ajunwa I, Friedler S, Scheidegger C, Venkatasubramanian S (2016) Hiring by algorithm: predicting and preventing disparate impact. SSRN

Barocas S, Selbst AD (2016) Big data’s disparate impact. Calif Law Rev. http://ssrn.com/abstract=2477899

Bygrave L (2002). Data protection law; approaching its rationale, logic and limits, vol. 10 of information law series. Kluwer Law International, The Hague

Calders T, Karim A, Kamiran F, Ali, W, Zhang X (2013) Controlling attribute effect in linear regression. In: Proceedings of 13th IEEE ICDM, pp 71–80

Calders T, Zliobaite I (2013) Why unbiased computational processes can lead to discriminative decision procedures. In: Discrimination and Privacy in the Information Society, pp 43–57

Citron DK, Pasquale FA (2014) The scored society: due process for automated predictions. Wash Law Rev, Vol. 89, p. 1, U of Maryland Legal Studies Research Paper No. 2014-8

Custers BHM (2012) Predicting data that people refuse to disclose; how data mining predictions challenge informational self-determination. Priv Obs Mag 3. http://www.privacyobservatory.org/

Custers B, Calders T, Schermer B, Zarsky T (eds) (2013a) Discrimination and privacy in the information society: data mining and profiling in large databases. Springer, Heidelberg

Custers B, Van der Hof S, Schermer B, Appleby-Arnold S, Brockdorff N (2013b) Informed consent in social media use. the gap between user expectations and eu personal data protection law. SCRIPTed J Law Technol Soc 10:435–457

Edelman BG, Luca M (2014) Digital discrimination: the case of Airbnb.com. Working Paper 14-054, Harvard Business School

Feldman M, Friedler SA, Moeller J, Scheidegger C, Venkatasubramanian S (2015) Certifying and removing disparate impact. In: Proceedings of 21st ACM KDD, pp 259–268

Gellert R, De Vries K, De Hert P, Gutwirth S (2013) A comparative analysis of anti-discrimination and data protection legislations. In: Discrimination and privacy in the information society: data mining and profiling in large databases. Springer, Heidelberg

Hajian S, Domingo-Ferrer J (2013) A methodology for direct and indirect discrimination prevention in data mining. IEEE Trans Knowl Data Eng 25(7):1445–1459

Hillier A (2003) Spatial analysis of historical redlining: a methodological explanation. J Hous Res 14(1):137–168

Hornung G (2012) A general data protection regulation for europe? Light and shade. The Commissions Draft of 25 January 2012, 9 SCRIPTed, pp 64–81

House TW (2014) Big data: seizing opportunities, preserving values

Kamiran F, Calders T (2009) Classification without discrimination. In IEEE international conference on computer, control & communication, IEEE-IC4. IEEE press

Kamiran F, Calders T, Pechenizkiy M (2010) Discrimination aware decision tree learning. In: Proceedings of 10th IEEE ICDM, pp 869–874

Kamiran F, Zliobaite I, Calders T (2013) Quantifying explainable discrimination and removing illegal discrimination in automated decision making. Knowl Inf Syst 35(3):613–644

Kamishima T, Akaho S, Asoh H, Sakuma J (2012) Fairness-aware classifier with prejudice remover regularizer. In: Proceedings of ECMLPKDD, pp 35–50

Kay M, Matuszek C, Munson S (2015) Unequal representation and gender stereotypes in image search results for occupations. In: Proceedings of 33rd ACM CHI, pp 3819–3828

Kosinski M, Stillwell D, Graepel T (2013) Private traits and attributes are predictable from digital records of human behaviour. Proc Natl Acad Sci 110(15):5802–5805

Kuner C (2012) The european commission’s proposed data protection regulation: a copernican revolution in european data protection law. Privacy and Security Law Report

Luong BT, Ruggieri S, Turini F (2011) k-NN as an implementation of situation testing for discrimination discovery and prevention. In: Proceedings of 17th KDD, pp 502–510

Mancuhan K, Clifton C (2014) Combating discrimination using bayesian networks. Artif Intell Law 22(2):211–238

McCrudden C, Prechal S (2009) The concepts of equality and non-discrimination in europe. European commission. DG Employment, Social Affairs and Equal Opportunities

Ohm P (2010) Broken promises of privacy: responding to the surprising failure of anonymization. UCLA Law Rev 57:1701–1765

Pearl J (2009) Causality: models, reasoning and inference, 2nd edn. Cambridge University Press, Cambridge

Pedreschi D, Ruggieri S, Turini F (2008) Discrimination-aware data mining. In: Proceedings of 14th ACM KDD, pp 560–568

Pope DG, Sydnor JR (2011) Implementing anti-discrimination policies in statistical profiling models. Am Econ J Econ Policy 3(3):206–231

Romei A, Ruggieri S (2014) A multidisciplinary survey on discrimination analysis. Knowl Eng Rev 29(5):582–638

Schermer B, Custers B, Van der Hof S (2014) The crisis of consent: how stronger legal protection may lead to weaker consent in data protection. Ethics Inf Technol 16(2):171–182

Squires G (2003) Racial profiling, insurance style: insurance redlining and the uneven development of metropolitan areas. J Urban Aff 25(4):391–410

Sweeney L (2013) Discrimination in online ad delivery. Commun ACM 56(5):44–54

Weisberg S (1985) Applied linear regression, second edition

Zemel RS, Wu Y, Swersky K, Pitassi T, Dwork C (2013) Learning fair representations. In: Proceedings of 30th ICML, pp 325–333

Zliobaite I (2015) A survey on measuring indirect discrimination in machine learning. CoRR, abs/1511.00148

Author information

Authors and Affiliations

Corresponding author

Appendix: Omitted variable bias

Appendix: Omitted variable bias

We provide a theoretical expectation for the omitted variable bias in the ordinary least squares (OLS) estimation of linear regression coefficients. The theory is known in multiple statistical textbooks, we adapt the reasoning for discrimination prevention. For better interpretability we focus on a simple case with one legitimate variable, extension to more variables is straightforward.

Let the true underlying model behind data be

where x is a legitimate variable (such as education), s is a sensitive variable (such as ethnicity), y is the target variable (such as salary), e is random noise with the expected value of zero, and \(\beta\), \(b_1\), and \(b_0\) are non-zero coefficients.

Assume a data scientist decides to fit model \(y = \hat{b}_0 + \hat{b}_1x\).

Following the standard (OLS) procedure for estimating regression parameters the data scientist gets:

where bar denotes the mean, and hat denotes that it is estimated from data.

Next we plug-in the true underlying model from Eq. (10)

This demonstrates that unless \(Cov (x,s)\) is zero, or \(\beta\) is zero, the estimates \(\hat{b}_1\) and \(\hat{b}_0\) will be biased by a component that carries forward discrimination.

Rights and permissions

About this article

Cite this article

Žliobaitė, I., Custers, B. Using sensitive personal data may be necessary for avoiding discrimination in data-driven decision models. Artif Intell Law 24, 183–201 (2016). https://doi.org/10.1007/s10506-016-9182-5

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10506-016-9182-5