Abstract

We propose a new methodology for learning and adaptation of manipulation skills that involve physical contact with the environment. Pure position control is unsuitable for such tasks because even small errors in the desired trajectory can cause significant deviations from the desired forces and torques. The proposed algorithm takes a reference Cartesian trajectory and force/torque profile as input and adapts the movement so that the resulting forces and torques match the reference profiles. The learning algorithm is based on dynamic movement primitives and the quaternion representation of orientation, which provide the mathematical machinery for efficient and stable adaptation. We show experimentally that the robot's performance can be significantly improved within a few iteration steps, compensating for vision and other errors that might arise during the execution of the task. We also show that our methodology is suitable both for robots with admittance control and for robots with impedance control.
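A back-of-the-envelope calculation makes the claim about position control concrete. For illustration only, assume the contact behaves like a linear spring of stiffness k. A position error Δx then produces a force deviation F = kΔx. The stiffness values below are hypothetical and are not taken from the experiments.

```python
def contact_force_error(stiffness_n_per_m: float, position_error_m: float) -> float:
    """Force deviation (N) caused by a position error (m) against a linear-spring contact.

    The linear-spring contact model is an illustrative assumption.
    """
    return stiffness_n_per_m * position_error_m


if __name__ == "__main__":
    error = 1e-3  # a 1 mm error in the desired trajectory
    # Hypothetical contact stiffnesses: soft, medium and stiff environment
    for k in (1e3, 1e4, 1e5):
        print(f"k = {k:.0e} N/m -> force error = {contact_force_error(k, error):.1f} N")
```

In the stiff case (k = 10^5 N/m), a 1 mm trajectory error already corresponds to a 100 N force deviation.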


References

Bristow, D., Tharayil, M., & Alleyne, A. (2006). A survey of iterative learning control. IEEE Control Systems Magazine, 26(3), 96–114.

Broenink, J. F., & Tiernego, M. L. J. (1996). Peg-in-hole assembly using impedance control with a 6 DOF robot. Proceedings of the 8th European Simulation Symposium (pp. 504–508).

Bruyninckx, H., Dutre, S., & De Schutter, J. (1995). Peg-on-hole: a model based solution to peg and hole alignment. IEEE International Conference on Robotics and Automation (ICRA) (Vol. 2, pp. 1919–1924). Nagoya, Japan.

Buchli, J., Stulp, F., Theodorou, E., & Schaal, S. (2011). Learning variable impedance control. International Journal of Robotics Research, 30(7), 820–833.

Calinon, S., Evrard, P., Gribovskaya, E., Billard, A., & Kheddar, A. (2009). Learning collaborative manipulation tasks by demonstration using a haptic interface. IEEE International Conference on Advanced Robotics (ICAR), Munich, Germany.

Collins, K., Palmer, A. J., & Rathmill, K. (1985). The development of a European benchmark for the comparison of assembly robot programming systems. In K. Rathmill, P. MacConail, S. O’Leary, & J. Browne (Eds.), Robot technology and applications (pp. 187–199). New York: Springer.

Dillmann, R. (2004). Teaching and learning of robot tasks via observation of human performance. Robotics and Autonomous Systems, 47(2–3), 109–116.

Giordano, P. R., Stemmer, A., Arbter, K., & Albu-Schäffer, A. (2008). Robotic assembly of complex planar parts: An experimental evaluation. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (pp. 3775–3782). Nice, France.

Gullapalli, V., Grupen, R. A., & Barto, A. G. (1992). Learning reactive admittance control. IEEE International Conference on Robotics and Automation (ICRA) (pp. 1475–1480). Nice, France.

Hamner, B., Koterba, S., Shi, J., Simmons, R., & Singh, S. (2010). An autonomous mobile manipulator for assembly tasks. Autonomous Robots, 28, 131–149.

Hersch, M., Guenter, F., Calinon, S., & Billard, A. (2008). Dynamical system modulation for robot learning via kinesthetic demonstrations. IEEE Transactions on Robotics, 24(6), 1463–1467.

Hirana, K., Suzuki, T., & Okuma, S. (2002). Optimal motion planning for assembly skill based on mixed logical dynamical system. 7th International Workshop on Advanced Motion Control (pp. 359–364). Maribor, Slovenia.

Hogan, N. (1985). Impedance control: An approach to manipulation: Part I—theory. Journal of Dynamic Systems, Measurement, and Control, 107(1), 1–7.

Hsu, P., Hauser, J., & Sastry, S. (1989). Dynamic control of redundant manipulators. Journal of Robotic Systems, 6(2), 133–148.

Hutter, M., Hoepflinger, M. A., Gehring, C., Bloesch, M., Remy, C. D., & Siegwart, R. (2012). Hybrid operational space control for compliant legged systems. Robotics: Science and Systems (RSS). Sydney, Australia.

Hyon, S. H., Hale, J. G., & Cheng, G. (2007). Full-body compliant human-humanoid interaction: Balancing in the presence of unknown external forces. IEEE Transactions on Robotics, 23(5), 884–898.

Ijspeert, A. J., Nakanishi, J., & Schaal, S. (2001). Nonlinear dynamical systems for imitation with humanoid robots. IEEE-RAS International Conference on Humanoid Robots (Humanoids) (pp. 219–226). Tokyo, Japan.

Ijspeert, A. J., Nakanishi, J., Hoffmann, H., Pastor, P., & Schaal, S. (2013). Dynamical movement primitives: Learning attractor models for motor behaviors. Neural Computation, 25(2), 328–373.

Kaiser, M., & Dillmann, R. (1996). Building elementary robot skills from human demonstration. IEEE International Conference on Robotics and Automation (ICRA) (pp. 2700–2705). Minneapolis, MN.

Kalakrishnan, M., Righetti, L., Pastor, P., & Schaal, S. (2011). Learning force control policies for compliant manipulation. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (pp. 4639–4644). San Francisco, CA.

Kawato, M. (1990). Feedback-error-learning neural network for supervised motor learning. In E. Eckmiller (Ed.), Advanced neural computers (pp. 365–372). Amsterdam: Elsevier.

Kazemi, M., Valois, J. S., Bagnell, J. A., & Pollard, N. (2014). Human-inspired force compliant grasping primitives. Autonomous Robots, 37, 209–225.

Khatib, O. (1987). A unified approach for motion and force control of robot manipulators: The operational space formulation. IEEE Journal of Robotics and Automation, RA–3(1), 43–53.

Kormushev, P., Calinon, S., & Caldwell, D. G. (2011). Imitation learning of positional and force skills demonstrated via kinesthetic teaching and haptic input. Advanced Robotics, 25(5), 581–603.

Laurin-Kovitz, K. F., Colgate, J. E., & Carnes, S. D. R. (1991). Design of components for programmable passive impedance. IEEE International Conference on Robotics and Automation (ICRA) (pp. 1476–1481). Sacramento, CA.

Lee, D., & Ott, C. (2011). Incremental kinesthetic teaching of motion primitives using the motion refinement tube. Autonomous Robots, 31(2), 115–131.

Li, Y. (1997). Hybrid control approach to the peg-in-hole problem. IEEE Robotics and Automation Magazine, 4(2), 52–60.

Lopes, A., & Almeida, F. (2008). A force-impedance controlled industrial robot using an active robotic auxiliary device. Robotics and Computer-Integrated Manufacturing, 24, 299–309.

Moore, K., Chen, Y., & Ahn, H. S. (2006). Iterative learning control: A tutorial and big picture view. 45th IEEE Conference on Decision and Control (pp. 2352–2357). San Diego, CA.

Nakamura, Y. (1991). Advanced robotics: Redundancy and optimization. Boston, MA: Addison-Wesley.

Nakanishi, J., & Schaal, S. (2004). Feedback error learning and nonlinear adaptive control. Neural Networks, 17, 1453–1465.

Nakanishi, J., Cory, R., Mistry, M., Peters, J., & Schaal, S. (2008). Operational space control: A theoretical and empirical comparison. The International Journal of Robotics Research, 27, 737–757.

Nemec, B., & Ude, A. (2012). Action sequencing using dynamic movement primitives. Robotica, 30, 837–846.

Nemec, B., Žlajpah, L., & Omrčen, D. (2007). Comparison of null-space and minimal null-space control algorithms. Robotica, 25(5), 511–520.

Newman, W. S., Branicky, M. S., Podgurski, H. A., Chhatpar, S., Huang, L., Swaminathan, J., & Zhang, H. (1999). Force-responsive robotic assembly of transmission components. IEEE International Conference on Robotics and Automation (ICRA) (Vol. 3, pp. 2096–2102). Detroit, MI.

Pastor, P., Righetti, L., Kalakrishnan, M., & Schaal, S. (2011). Online movement adaptation based on previous sensor experiences. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (pp. 365–371). San Francisco, CA.

Quigley, M., Gerkey, B., Conley, K., Faust, J., Foote, T., Leibs, J., Berger, E., Wheeler, R., & Ng, A. (2009). ROS: An open-source robot operating system. ICRA Workshop on Open Source Software. Kobe, Japan.

Raibert, M. H., & Craig, J. J. (1981). Hybrid position/force control of manipulators. Journal of Dynamic Systems, Measurement, and Control, 103(2), 126–133.

Rozo, L., Jiménez, P., & Torras, C. (2013). A robot learning from demonstration framework to perform force-based manipulation tasks. Intelligent Service Robotics, 6, 33–51.

Rusu, R. B., & Cousins, S. (2011). 3D is here: Point Cloud Library (PCL). IEEE International Conference on Robotics and Automation (ICRA). Shanghai: ICRA Communications.

Savarimuthu, T. R., Liljekrans, D., Ellekilde, L. P., Ude, A., Nemec, B., & Krüger, N. (2013). Analysis of human peg-in-hole executions in a robotic embodiment using uncertain grasps. 9th International Workshop on Robot Motion and Control (pp. 233–239). Wasowo, Poland.

Schreiber, G., Stemmer, A., & Bischoff, R. (2010). The fast research interface for the KUKA lightweight robot. ICRA Workshop on Innovative Robot Control Architectures for Demanding (Research) Applications—How to Modify and Enhance Commercial Controllers. Anchorage, Alaska.

Skubic, M., & Volz, R. A. (1998). Learning force-based assembly skills from human demonstration for execution in unstructured environments. IEEE International Conference on Robotics and Automation (ICRA) (pp. 1281–1288). Leuven, Belgium.

Stemmer, A., Albu-Schäffer, A., & Hirzinger, G. (2007). An analytical method for the planning of robust assembly tasks of complex shaped planar parts. IEEE International Conference on Robotics and Automation (ICRA) (pp. 317–323). Rome, Italy.

Ude, A. (1999). Filtering in a unit quaternion space for model-based object tracking. Robotics and Autonomous Systems, 28(2–3), 163–172.

Ude, A., Gams, A., Asfour, T., & Morimoto, J. (2010). Task-specific generalization of discrete and periodic dynamic movement primitives. IEEE Transactions on Robotics, 26(5), 800–815.

Villani, L., & De Schutter, J. (2008). Force control. In B. Siciliano & O. Khatib (Eds.), Springer handbook of robotics (pp. 161–185). Berlin: Springer.

Whitney, D. E. (1969). Resolved motion rate control of manipulators and human prostheses. IEEE Transactions on Man-Machine Systems, MMS–10(2), 47–53.

Whitney, D. E., & Nevins, J. L. (1979). What is the Remote Center Compliance (RCC) and what can it do? International Symposium on Industrial Robots (ISIR). Washington, DC.

Xiao, J. (1997). Goal-contact relaxation graphs for contact-based fine motion planning. IEEE International Symposium on Assembly and Task Planning (ISATP) (pp. 25–30). Marina del Rey, CA.

Yamashita, T., Godler, I., Takahashi, Y., Wada, K., & Katoh, R. (1991). Peg-and-hole task by robot with force sensor: Simulation and experiment. International Conference on Industrial Electronics, Control and Instrumentation (IECON) (pp. 980–985). Kobe, Japan.

Yoshikawa, T. (2000). Force control of robot manipulators. IEEE International Conference on Robotics and Automation (ICRA) (pp. 220–226). San Francisco, CA.

Yun, S. K. (2008). Compliant manipulation for peg-in-hole: Is passive compliance a key to learn contact motion? IEEE International Conference on Robotics and Automation (ICRA) (pp. 1647–1652). Pasadena, CA.

Acknowledgments

The research leading to these results has received funding from the European Community Seventh Framework Programme FP7/2007-2013 (Specific Programme Cooperation, Theme 3, Information and Communication Technologies) under Grant Agreement No. 269959, IntellAct and No. 600578, ACAT.


Electronic supplementary material

The following electronic supplementary material is available.

Supplementary material 1 (mp4 2450 KB)

Supplementary material 2 (mp4 6842 KB)

Appendix: Impedance control


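In general, the dynamics of a robot arm interacting with the environment are described by

\[
\mathbf{H}\ddot{\pmb{\theta}} + \mathbf{h} = \varvec{\rho} + \mathbf{J}^\mathrm{T}\begin{bmatrix}\mathbf{F}\\ \mathbf{M}\end{bmatrix} \tag{48}
\]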

where \(\varvec{\rho }\) is a vector of joint torques, \(\mathbf{H}\in \mathbb {R}^{n\times n}\) is a symmetric, positive definite inertia matrix, \(\mathbf{h}\in \mathbb {R}^n\) contains nonlinear terms due to the centrifugal, Coriolis, friction and gravity forces, \(\mathbf{J}\in \mathbb {R}^{6\times n}\) is the robot Jacobian, and \(\mathbf{F},\, \mathbf{M}\in \mathbb {R}^3\) are the vectors of environment contact forces and torques acting on the robot’s end-effector. \(\pmb {\theta } \in \mathbb {R}^n \) denotes the joint angles. The following relationship holds between joint space and Cartesian space accelerations (Hsu et al. 1989; Nakanishi et al. 2008)

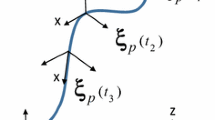

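where \(\mathbf{J}_\mathbf{H}^+=\mathbf{H}^{-1}\mathbf{J}^\mathrm {T}(\mathbf{J}\mathbf{H}^{-1}\mathbf{J}^\mathrm {T})^{+} = \mathbf{H}^{-1/2}(\mathbf{J}\mathbf{H}^{-1/2})^+\) denotes the inertia-weighted pseudo-inverse of \(\mathbf{J}\) (Nakamura 1991; Whitney 1969), and \(\pmb {\xi }\in \mathbb {R}^n\) is an arbitrary vector that defines the null-space motion. By inserting (49) into (48) we obtain the general form of the control law for a redundant robot (Nemec et al. 2007)

\[
\varvec{\rho} = \mathbf{H}\mathbf{J}_\mathbf{H}^+\left(\begin{bmatrix}\ddot{\mathbf{p}}\\ \dot{\pmb{\omega}}\end{bmatrix}-\dot{\mathbf{J}}\dot{\pmb{\theta}}\right) + \mathbf{H}\mathbf{N}\pmb{\xi} + \mathbf{h} - \mathbf{J}^\mathrm{T}\begin{bmatrix}\mathbf{F}\\ \mathbf{M}\end{bmatrix} \tag{50}
\]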

where \(\mathbf{N}=\mathbf{I}-\mathbf{J}_\mathbf{H}^+\mathbf{J}\) is the projection matrix onto the null space of the inertia-weighted Jacobian. The accelerations \(\ddot{\mathbf {p}}\), \(\dot{\pmb {\omega }}\) and the vector \(\pmb {\xi }\) serve as control inputs and should be set so that the task-space tracking errors are minimized. The first term in Eq. (50) is the task controller, the second term is the null-space controller, and the third and fourth terms compensate for the nonlinear robot dynamics and the external forces, respectively. We choose the task command inputs \(\ddot{\mathbf{p}}_c\) and \(\dot{\pmb {\omega }}_c\) as follows

where the subscript \({}_r\) denotes reference values, and variables without a subscript denote the current values received from the robot. The force and torque errors \(\mathbf{e}_p\) and \(\mathbf{e}_q\) are calculated using (28) and (29), respectively. \(\mathbf{K}^d_p\), \(\mathbf{K}^d_q\), \(\mathbf{K}^p_p\), \(\mathbf{K}^p_q\), \(\mathbf{K}_{s1}\) and \(\mathbf{K}_{s2}\) are positive definite diagonal matrices: the positional and rotational damping matrices, the positional and rotational stiffness matrices, and the force and torque feedback matrices, respectively. The reference position and orientation are calculated by integrating (20), (37), (8), (38), and (32), followed by the displacement (28) – (28). The reference linear velocities and accelerations are calculated as \(\dot{\mathbf{p}}_r=\Delta \mathbf{q}_{w}*\dot{\mathbf{p}}_{ DMP}* \overline{\Delta \mathbf{q}}_w\) and \(\ddot{\mathbf{p}}_r=\Delta \mathbf{q}_w*\ddot{\mathbf{p}}_{ DMP}* \overline{\Delta \mathbf{q}}_w\), respectively, while the reference angular velocities and accelerations are calculated as \(\pmb{\omega}_r=\Delta \mathbf{q}_w*\eta _{ DMP}/\tau * \overline{\Delta \mathbf{q}}_w\) and \(\dot{\pmb{\omega}}_r=\Delta \mathbf{q}_w*\dot{\eta }_{ DMP}/\tau *\overline{\Delta \mathbf{q}}_w\), respectively.
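The reference velocities above rotate DMP outputs by the unit quaternion \(\Delta\mathbf{q}_w\) using the sandwich product \(\mathbf{q}*\mathbf{v}*\bar{\mathbf{q}}\). Here the 3-vector \(\mathbf{v}\) is treated as the pure quaternion \((0,\mathbf{v})\), and the angular velocity is taken as \(\eta/\tau\). The following is a minimal sketch, assuming quaternions stored as (w, x, y, z) NumPy arrays. The function names and numerical values are illustrative assumptions, not the authors' code. The sandwich product is cross-checked against the equivalent rotation matrix.

```python
import numpy as np


def qmul(a, b):
    """Hamilton product a * b of quaternions stored as (w, x, y, z)."""
    aw, av = a[0], a[1:]
    bw, bv = b[0], b[1:]
    w = aw * bw - av @ bv
    v = aw * bv + bw * av + np.cross(av, bv)
    return np.concatenate(([w], v))


def qconj(q):
    """Quaternion conjugate (q-bar)."""
    return np.concatenate(([q[0]], -q[1:]))


def rotate(q, v):
    """Rotate 3-vector v by unit quaternion q via q * (0, v) * q-bar; return the vector part."""
    p = np.concatenate(([0.0], v))
    return qmul(qmul(q, p), qconj(q))[1:]


def quat_to_matrix(q):
    """Rotation matrix of the unit quaternion q = (w, x, y, z), used here as a cross-check."""
    w, x, y, z = q
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ])


if __name__ == "__main__":
    tau = 2.0                                    # hypothetical DMP time constant
    half = np.pi / 4                             # hypothetical offset: 90 deg about z
    dq_w = np.array([np.cos(half), 0.0, 0.0, np.sin(half)])
    pd_dmp = np.array([1.0, 0.0, 0.0])           # DMP linear velocity
    eta_dmp = np.array([0.0, 0.2, 0.0])          # DMP rotational variable eta
    pd_r = rotate(dq_w, pd_dmp)                  # approx. (0, 1, 0)
    omega_r = rotate(dq_w, eta_dmp / tau)        # approx. (-0.1, 0, 0)
    print(pd_r, omega_r)
    print(np.allclose(pd_r, quat_to_matrix(dq_w) @ pd_dmp))
```

A 90° offset about z maps the DMP velocity (1, 0, 0) to (0, 1, 0), and the sandwich product agrees with the rotation-matrix form.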

With the above choice of command accelerations we obtain the well-known impedance control law (Hogan 1985). By also choosing the null-space command input \(\pmb {\xi }_c\), one can control the null-space motion of the robot. Simply setting \(\pmb {\xi }_c\) to \(\mathbf{0}\) results in non-conservative motion, i.e., the robot keeps moving in the null space while minimizing its kinetic energy. An appropriate solution to this problem is to set the desired null-space velocities to zero, which results in an energy dissipation controller (Khatib 1987). The command null-space motion vector is thus calculated as

The commanded torque \(\varvec{\rho }_c\) is finally calculated by inserting \(\ddot{\pmb {p}}_c\), \(\dot{\pmb {\omega }}_c\), \(\pmb {\xi }_c\) into (50), whereas the current values are used for all other variables in (50).
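The numerical sketch below ties together the inertia-weighted pseudo-inverse, the null-space projector and the torque law. It assumes the model takes the form \(\mathbf{H}\ddot{\pmb\theta}+\mathbf{h}=\varvec\rho+\mathbf{J}^\mathrm{T}[\mathbf{F};\mathbf{M}]\). Under that assumption, substituting the acceleration relation gives \(\varvec\rho = \mathbf{H}\mathbf{J}_\mathbf{H}^+([\ddot{\mathbf p};\dot{\pmb\omega}]-\dot{\mathbf J}\dot{\pmb\theta}) + \mathbf{H}\mathbf{N}\pmb\xi + \mathbf{h} - \mathbf{J}^\mathrm{T}[\mathbf{F};\mathbf{M}]\). The sketch uses the following simplifications:

- All matrices are random placeholders, not a real robot model.
- \(\dot{\mathbf{J}}\) is supplied as a given matrix.
- The damping law \(\pmb\xi_c=-k_n\dot{\pmb\theta}\), with gain \(k_n\), is a hypothetical stand-in that only illustrates the qualitative null-space behaviour described above. It is not necessarily the command law used by the authors.

```python
import numpy as np


def weighted_pinv(J, H):
    """Inertia-weighted pseudo-inverse J_H^+ = H^{-1} J^T (J H^{-1} J^T)^+."""
    Hinv = np.linalg.inv(H)
    return Hinv @ J.T @ np.linalg.pinv(J @ Hinv @ J.T)


def null_space_projector(J, H):
    """N = I - J_H^+ J."""
    return np.eye(J.shape[1]) - weighted_pinv(J, H) @ J


def control_torque(H, h, J, Jdot, thd, xdd_c, xi_c, wrench):
    """Torque for a commanded task acceleration xdd_c = [pdd_c; omegadot_c] (6,),
    a null-space input xi_c (n,) and a measured contact wrench [F; M] (6,)."""
    JHp = weighted_pinv(J, H)
    N = np.eye(J.shape[1]) - JHp @ J
    task = H @ JHp @ (xdd_c - Jdot @ thd)   # task controller
    null = H @ N @ xi_c                      # null-space controller
    return task + null + h - J.T @ wrench    # dynamics and contact-force compensation


def forward_dynamics(H, h, J, rho, wrench):
    """Joint accelerations from the assumed model H thdd + h = rho + J^T wrench."""
    return np.linalg.solve(H, rho + J.T @ wrench - h)


def null_space_decay(H, J, thd0, k_n, dt=1e-3, steps=2000):
    """Frozen-configuration illustration (constant H and J, zero task acceleration,
    Jdot = 0): Euler-integrate thdd = N xi_c with the hypothetical law xi_c = -k_n * thd."""
    N = null_space_projector(J, H)
    thd = thd0.copy()
    for _ in range(steps):
        thd = thd + dt * (N @ (-k_n * thd))
    return thd


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n = 7                                        # placeholder: 7-DOF redundant arm
    A = rng.standard_normal((n, n))
    H = A @ A.T + n * np.eye(n)                  # random SPD inertia (placeholder)
    h, thd, xi_c = (rng.standard_normal(n) for _ in range(3))
    J, Jdot = rng.standard_normal((6, n)), rng.standard_normal((6, n))
    wrench, xdd_c = rng.standard_normal(6), rng.standard_normal(6)

    rho_c = control_torque(H, h, J, Jdot, thd, xdd_c, xi_c, wrench)
    thdd = forward_dynamics(H, h, J, rho_c, wrench)
    # The end-effector realizes the commanded acceleration for any choice of xi_c
    print(np.allclose(J @ thdd + Jdot @ thd, xdd_c))

    thd0 = null_space_projector(J, H) @ rng.standard_normal(n)  # pure self-motion
    print(np.linalg.norm(null_space_decay(H, J, thd0, k_n=0.0)),  # stays constant
          np.linalg.norm(null_space_decay(H, J, thd0, k_n=5.0)))  # decays towards 0
```

Because \(\mathbf{J}\mathbf{N}=\mathbf{0}\), the null-space input affects only the self-motion. The commanded Cartesian acceleration is therefore reproduced for any \(\pmb\xi_c\). In the frozen-configuration demo, undamped self-motion persists. With \(k_n=5\), the self-motion shrinks by a factor of \(0.995^{2000}\approx 4\times 10^{-5}\), and the end-effector is not disturbed.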


About this article

Cite this article

Abu-Dakka, F.J., Nemec, B., Jørgensen, J.A. et al. Adaptation of manipulation skills in physical contact with the environment to reference force profiles. Auton Robot 39, 199–217 (2015). https://doi.org/10.1007/s10514-015-9435-2
