Abstract

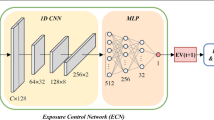

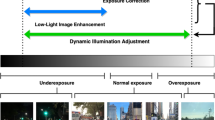

Vision-based localization of robots operating in complex environments is challenging because illumination varies dynamically. This study develops a novel automated camera-exposure control framework for illumination-robust localization. First, a lightweight photometric calibration method is designed to model the camera's optical imaging process. Based on the calibrated imaging model, a photometric-sensitive image quality metric is developed to robustly estimate image information. Then, an automated exposure control algorithm uses a coarse-to-fine strategy to adjust the camera exposure time to its optimum in real time, so that it responds rapidly to changes in environmental illumination and avoids visual degradation. Furthermore, a photometric compensation algorithm is introduced to correct the brightness inconsistency caused by changes in exposure time. Finally, experiments are performed on public datasets and on our field robot. The results demonstrate that the proposed framework effectively improves localization performance in environments with varying illumination.
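To make the coarse-to-fine idea and the exposure compensation step concrete, the following is a minimal sketch and not the authors' implementation. It assumes a linear camera response (intensity proportional to radiance times exposure time, clipped at 255) and uses a toy gradient-sum score in place of the paper's photometric-sensitive metric. All function names, the `gain` parameter, and the sample scene are hypothetical.

```python
def simulate_image(radiance, exposure_s, gain=1000.0):
    """Hypothetical linear camera: intensity = gain * radiance * exposure, clipped to [0, 255]."""
    return [min(255.0, max(0.0, gain * r * exposure_s)) for r in radiance]


def gradient_metric(image):
    """Toy quality score (an assumption): sum of absolute neighbour differences on a scanline."""
    return sum(abs(b - a) for a, b in zip(image, image[1:]))


def log_grid(lo, hi, n):
    """Return n log-spaced values from lo to hi."""
    return [lo * (hi / lo) ** (i / (n - 1)) for i in range(n)]


def coarse_to_fine_exposure(capture, t_min, t_max, coarse_steps=8, fine_steps=16):
    """Scan a coarse log-spaced grid of exposure times, then rescan finely around the best one."""
    coarse = log_grid(t_min, t_max, coarse_steps)
    scores = [gradient_metric(capture(t)) for t in coarse]
    k = max(range(coarse_steps), key=scores.__getitem__)
    # Refine between the neighbours of the best coarse sample.
    lo = coarse[max(k - 1, 0)]
    hi = coarse[min(k + 1, coarse_steps - 1)]
    # Keep the coarse winner as a candidate so refinement never scores worse.
    candidates = log_grid(lo, hi, fine_steps) + [coarse[k]]
    return max(candidates, key=lambda t: gradient_metric(capture(t)))


def compensate(image, exposure_s, reference_exposure_s):
    """Rescale intensities to a reference exposure; only valid for unsaturated
    pixels under the linear-response assumption."""
    scale = reference_exposure_s / exposure_s
    return [p * scale for p in image]


# Usage with a made-up scanline of scene radiances.
scene = [0.1, 0.5, 0.2, 0.9, 0.3, 1.0, 0.05]
best_t = coarse_to_fine_exposure(lambda t: simulate_image(scene, t), 0.001, 10.0)
print("selected exposure time (s):", best_t)
```

In this sketch the coarse scan keeps the number of captures small when illumination changes abruptly, and the fine scan narrows the result around the best coarse sample. A real camera with a calibrated nonlinear response would need the inverse response applied before the rescaling in `compensate`.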

Code Availability Statement

The code will be available at https://github.com/HITSZ-NRSL/ExposureControl.

Funding

Funding was provided in part by the National Natural Science Foundation of China (Grant No. U1713206) and in part by the Shenzhen Science and Innovation Committee (Grant Nos. JCYJ20200109113412326, JCYJ20210324120400003, JCYJ20180507183837726, and JCYJ20180507183456108).

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Supplementary file 1 (mp4 70693 KB)

About this article

Cite this article

Wang, Y., Chen, H., Zhang, S. et al. Automated camera-exposure control for robust localization in varying illumination environments. Auton Robot 46, 515–534 (2022). https://doi.org/10.1007/s10514-022-10036-x

DOI: https://doi.org/10.1007/s10514-022-10036-x