Abstract

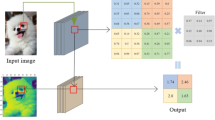

Traditional pooling methods in convolutional neural networks, such as max-pooling, average pooling, and stochastic pooling (Zeiler and Fergus in Stochastic pooling for regularization of deep convolutional neural networks, 2013), determine the pooling result from the distribution of the activations in the pooling region. However, it is difficult for the feature mapping process to select a single activation that perfectly represents the pooling region, and this can lead to over-fitting. In this paper, our theoretical basis comes from information theory (Shannon in Bell Syst. Tech. J. 27:379–423, 1948). First, we quantify the information entropy of each pooling region, and then propose an efficient pooling method that compares the mutual information between each activation and the pooling region in which it is located. We assign different weights to different activations based on this mutual information, and name the method weighted-pooling. The main features of the weighted-pooling method are as follows: (1) The information quantity of the pooling region is quantified by information theory for the first time. (2) Each activation’s contribution is also quantified for the first time, and these contributions eliminate the uncertainty of the pooling region in which the activation is located. (3) For choosing a representative of the pooling region, the weight of each activation is clearly superior to the value of the activation. In the experimental part, we use the MNIST and CIFAR-10 data sets (Krizhevsky in Learning multiple layers of features from tiny images, University of Toronto, 2009; LeCun in The MNIST database, 2012) to compare different pooling methods. The results show that the weighted-pooling method achieves higher recognition accuracy than the other pooling methods and reaches a new state of the art.

References

Zeiler, M. D., Fergus, R.: Stochastic pooling for regularization of deep convolutional neural networks. Eprint Arxiv (2013)

Shannon, C.E.: A mathematical theory of communication. Bell Syst. Tech. J. 27(4), 379–423 (1948)

Krizhevsky, A.: Learning multiple layers of features from tiny images. Technical Report TR-2009, University of Toronto (2009)

LeCun, Y.: The MNIST database. http://yann.lecun.com/exdb/mnist/ (2012)

Ba, J. L., Kiros, J. R., Hinton, G. E.: Layer normalization (2016)

LeCun, Y., Boser, B., Denker, J.S., Henderson, D., Howard, R.E., Hubbard, W., Jackel, L.D.: Backpropagation applied to handwritten zip code recognition. Neural Comput. 1(4), 541–551 (1989)

LeCun, Y., Boser, B., Denker, J. S., Howard, R. E., Hubbard, W., Jackel, L. D., Henderson, D.: Handwritten digit recognition with a back-propagation network. In: Proceedings of Advances in Neural Information Processing Systems 2, pp. 396–404. Morgan Kaufmann Publishers Inc., San Francisco (1990)

Krizhevsky, A., Sutskever, I., Hinton, G. E.: ImageNet classification with deep convolutional neural networks. In: NIPS, pp. 1106–1114 (2012)

Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. Comput. Sci. (2014)

Simonyan, K., Zisserman, A.: Two-stream convolutional networks for action recognition in videos. CoRR, abs/1406.2199. In: Proceedings of NIPS (2014)

Szegedy, C., Liu, W., Jia, Y. et al.: Going deeper with convolutions. pp. 1–9 (2014)

He, K., Zhang, X., Ren, S. et al.: Deep residual learning for image recognition. In: Computer Vision and Pattern Recognition. pp. 770–778, IEEE (2016)

Badrinarayanan, V., Kendall, A., Cipolla, R.: SegNet: a deep convolutional encoder-decoder architecture for scene segmentation. IEEE Trans. Pattern Anal. 99, 1 (2017)

Zhang, B., Li, Z., Cao, X., Ye, Q., Chen, C., Shen, L., Perina, A., Ji, R.: Output constraint transfer for kernelized correlation filter in tracking. IEEE Trans. Syst. Man Cybernet. 47(4), 693–703 (2017)

Wang, L., Zhang, B., Yang, W.: Boosting-like deep convolutional network for pedestrian detection. In: Biometric Recognition. Springer International Publishing (2015)

Zhang, B., Gu, J., Chen, C., Han, J., Su, X., Cao, X., Liu, J.: One-two-one network for compression artifacts reduction in remote sensing. ISPRS Journal of Photogrammetry and Remote Sensing (2018)

Zhang, B., Liu, W., Mao, Z., et al.: Cooperative and geometric learning algorithm (CGLA) for path planning of UAVs with limited information. Automatica 50(3), 809–820 (2014)

Russakovsky, O., Deng, J., Su, H., et al.: ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. 115(3), 211–252 (2014)

Abadi, M., Agarwal, A., Barham, P. et al.: TensorFlow: large-scale machine learning on heterogeneous distributed systems (2016)

Kingma, D. P., Ba, J.: Adam: a method for stochastic optimization. Comput. Sci. (2014)

Zeiler, M. D., Fergus, R.: Visualizing and understanding convolutional networks. 8689, pp. 818–833 (2014)

Bengio, Y., Simard, P., Frasconi, P.: Learning long-term dependencies with gradient descent is difficult. IEEE Trans. Neural Netw. 5(2), 157–166 (1994)

Glorot, X., Bengio, Y.: Understanding the difficulty of training deep feedforward neural networks. J. Mach. Learn. Res. 9, 249–256 (2010)

He, K., Zhang, X., Ren, S. et al.: Delving deep into rectifiers: surpassing human-level performance on ImageNet classification, pp. 1026–1034 (2015)

Ioffe, S., Szegedy, C.: Batch normalization: accelerating deep network training by reducing internal covariate shift. In: International Conference on Machine Learning. JMLR.org, pp. 448–456 (2015)

Duchi, J., Hazan, E., Singer, Y.: Adaptive subgradient methods for online learning and stochastic optimization. J. Mach. Learn. Res. 12(7), 257–269 (2011)

Zeiler, M. D.: ADADELTA: an adaptive learning rate method. In: Computer Science (2012)

Boureau, Y. L., Ponce, J., Lecun, Y.: A theoretical analysis of feature pooling in visual recognition. In: International Conference on Machine Learning. DBLP, pp. 111–118 (2010)

Hubel, D.H., Wiesel, T.N.: Receptive fields, binocular interaction and functional architecture in the cat’s visual cortex. J. Physiol. 160(1), 106 (1962)

Koenderink, J.J., Van Doorn, A.J.: The structure of locally orderless images. Int. J. Comput. Vis. 31(2–3), 159–168 (1999)

Graham, B.: Fractional max-pooling. Eprint Arxiv (2014)

Harada, T., Ushiku, Y., Yamashita, Y. et al.: Discriminative spatial pyramid. In: Computer Vision and Pattern Recognition. IEEE, pp. 1617–1624 (2011)

He, K., Zhang, X., Ren, S., et al.: Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 37(9), 1904–1916 (2015)

Fan, E.G.: Extended tanh-function method and its applications to nonlinear equations. Phys. Lett. A 277(4), 212–218 (2000)

Hinton, G.E., Srivastava, N., Krizhevsky, A., et al.: Improving neural networks by preventing co-adaptation of feature detectors. Comput. Sci. 3(4), 212–223 (2012)

Everingham, M., Van Gool, L., Williams, C. K. I., Winn, J., Zisserman, A.: The PASCAL visual object classes challenge, VOC 2007 Results (2007)

Ren, S., He, K., Girshick, R., et al.: Faster R-CNN: towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 39(6), 1137 (2017)

Acknowledgements

The authors would like to thank the reviewers for their helpful advice. The National Science and Technology Major Project (Grant No. 2017YFB0803001), the National Natural Science Foundation of China (Grant No. 61502048), the Beijing Science and Technology Planning Project (Grant No. Z161100000216145) and the National “242” Information Security Program (2015A136) are gratefully acknowledged.

Appendix

1.1 Joint entropy

The role of conditional entropy is shown by the fact that the entropy of a pair of random variables equals the entropy of one of them plus the conditional entropy of the other: \(H(X,Y)=H(X)+H(Y|X)\).

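Proof

\[
\begin{aligned}
H(X,Y) &= -\sum_{x}\sum_{y} p(x,y)\log p(x,y) \\
&= -\sum_{x}\sum_{y} p(x,y)\log \bigl(p(x)\,p(y|x)\bigr) \\
&= -\sum_{x}\sum_{y} p(x,y)\log p(x) - \sum_{x}\sum_{y} p(x,y)\log p(y|x) \\
&= -\sum_{x} p(x)\log p(x) - \sum_{x}\sum_{y} p(x,y)\log p(y|x) \\
&= H(X)+H(Y|X).
\end{aligned}
\]

Equivalently, we can write:

\[
\log p(X,Y)=\log p(X)+\log p(Y|X).
\]

Taking the mathematical expectation of both sides of this equation gives the theorem. \(\square\)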
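The identity \(H(X,Y)=H(X)+H(Y|X)\) can be checked numerically on a small example. The sketch below is only an illustration: the joint distribution `p_xy` and the helper names are assumptions made up for this check, not values or code from the paper, and entropies are measured in bits.

```python
import math

# Hypothetical joint pmf p(x, y) over X in {0, 1} and Y in {0, 1}.
# The probabilities are illustrative only and do not come from the paper.
p_xy = {(0, 0): 0.5, (0, 1): 0.25, (1, 0): 0.125, (1, 1): 0.125}

def marginal_x(joint):
    """Marginal p(x), obtained by summing the joint pmf over y."""
    p_x = {}
    for (x, _), p in joint.items():
        p_x[x] = p_x.get(x, 0.0) + p
    return p_x

def entropy(pmf):
    """Shannon entropy in bits of a pmf stored as a dict of probabilities."""
    return -sum(p * math.log2(p) for p in pmf.values() if p > 0)

def conditional_entropy_y_given_x(joint):
    """H(Y|X) = -sum_{x,y} p(x,y) log2 p(y|x), where p(y|x) = p(x,y) / p(x)."""
    p_x = marginal_x(joint)
    return -sum(p * math.log2(p / p_x[x]) for (x, _), p in joint.items() if p > 0)

h_joint = entropy(p_xy)                            # H(X,Y)
h_x = entropy(marginal_x(p_xy))                    # H(X)
h_y_given_x = conditional_entropy_y_given_x(p_xy)  # H(Y|X)

print(f"H(X,Y) = {h_joint:.6f}")
print(f"H(X) + H(Y|X) = {h_x + h_y_given_x:.6f}")
```

Both printed values agree up to floating-point rounding, as the chain rule requires.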

1.2 Mutual information

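The mutual information \(I(X;Y)\) can be rewritten in the following form:

\[
I(X;Y)=H(X)-H(X|Y).
\]

Proof

\[
\begin{aligned}
I(X;Y) &= \sum_{x}\sum_{y} p(x,y)\log \frac{p(x,y)}{p(x)\,p(y)} \\
&= \sum_{x}\sum_{y} p(x,y)\log \frac{p(x|y)}{p(x)} \\
&= -\sum_{x}\sum_{y} p(x,y)\log p(x) + \sum_{x}\sum_{y} p(x,y)\log p(x|y) \\
&= -\sum_{x} p(x)\log p(x) - \Bigl(-\sum_{x}\sum_{y} p(x,y)\log p(x|y)\Bigr) \\
&= H(X)-H(X|Y).
\end{aligned}
\]

\(\square\)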
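A standard rewriting of mutual information, \(I(X;Y)=H(X)-H(X|Y)\), can be checked numerically against the definition \(I(X;Y)=\sum_{x,y}p(x,y)\log\frac{p(x,y)}{p(x)p(y)}\). The sketch below is a minimal illustration under stated assumptions: the joint distribution `p_xy` and the helper `marginal` are hypothetical and not taken from the paper, and logarithms are base 2.

```python
import math

# Hypothetical joint pmf p(x, y); the numbers are illustrative, not from the paper.
p_xy = {(0, 0): 0.5, (0, 1): 0.25, (1, 0): 0.125, (1, 1): 0.125}

def marginal(joint, axis):
    """Marginal pmf over the coordinate at index `axis` (0 for X, 1 for Y)."""
    out = {}
    for key, p in joint.items():
        out[key[axis]] = out.get(key[axis], 0.0) + p
    return out

p_x = marginal(p_xy, 0)
p_y = marginal(p_xy, 1)

# Definition: I(X;Y) = sum_{x,y} p(x,y) log2[ p(x,y) / (p(x) p(y)) ].
mi_definition = sum(
    p * math.log2(p / (p_x[x] * p_y[y])) for (x, y), p in p_xy.items() if p > 0
)

# Rewritten form: H(X) - H(X|Y), where p(x|y) = p(x,y) / p(y).
h_x = -sum(p * math.log2(p) for p in p_x.values() if p > 0)
h_x_given_y = -sum(
    p * math.log2(p / p_y[y]) for (x, y), p in p_xy.items() if p > 0
)
mi_rewritten = h_x - h_x_given_y

print(f"I(X;Y) from the definition: {mi_definition:.6f}")
print(f"H(X) - H(X|Y):              {mi_rewritten:.6f}")
```

The two quantities coincide up to rounding, and the result is non-negative, which matches the reading of mutual information as the reduction in uncertainty about \(X\) once \(Y\) is known.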

About this article

Cite this article

Zhu, X., Meng, Q., Ding, B. et al. Weighted pooling for image recognition of deep convolutional neural networks. Cluster Comput 22 (Suppl 4), 9371–9383 (2019). https://doi.org/10.1007/s10586-018-2165-4

DOI: https://doi.org/10.1007/s10586-018-2165-4