Abstract

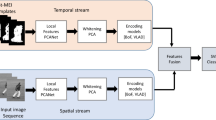

Human action recognition (HAR) is one of the most challenging tasks in computer vision because of complex backgrounds and ambiguous actions, among other factors. To tackle these issues, we propose a novel action recognition framework called Semantic Feature and High-order Physical Feature Fusion (SF-HPFF). Concretely, we first apply an attention pooling module with a low-rank approximation to suppress information from irrelevant, complex backgrounds and thus capture the target motion region of interest. On this basis, motion features based on the physical characteristics of the flow field and semantic features based on word embeddings are developed to distinguish ambiguous behaviors. These features are low-dimensional and highly discriminative, which significantly reduces the computational burden while maintaining excellent recognition performance. Finally, a cascaded convolutional fusion network is adopted to fuse the features and perform classification. Results from multiple experiments validate that the proposed SF-HPFF outperforms state-of-the-art action recognition methods.
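To make the "attention pooling with a low-rank approximation" step concrete, the sketch below assumes the rank-1 attentional pooling formulation of Girdhar and Ramanan, which may differ from the exact SF-HPFF module. A second-order class score tr(XᵀXW_k) over an n × f feature map X is made cheap by approximating W_k with a rank-1 product a_k bᵀ. The score then reduces to (X a_k)ᵀ(X b), where X b is a class-agnostic saliency map over spatial locations and X a_k is a class-specific map. All function names, shapes, and toy values here are hypothetical, and plain Python lists are used to keep the example dependency-free.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(x: Matrix, w: Vector) -> Vector:
    """Project each spatial feature vector (row of x) onto w."""
    return [sum(xi * wi for xi, wi in zip(row, w)) for row in x]


def outer(u: Vector, v: Vector) -> Matrix:
    """Rank-1 matrix u v^T (hypothetical helper for the check below)."""
    return [[ui * vj for vj in v] for ui in u]


def attention_pool_scores(x: Matrix, a: List[Vector], b: Vector) -> List[float]:
    """Rank-1 attentional pooling: one score per class (assumed formulation).

    x: n x f feature map (n spatial locations, f channels).
    a: K class-specific (top-down) vectors a_k, each of length f.
    b: one class-agnostic (bottom-up) vector of length f.
    Score_k = (X a_k)^T (X b), i.e. tr(X^T X a_k b^T).
    """
    saliency = matvec(x, b)  # bottom-up attention over the n locations
    scores = []
    for a_k in a:
        top_down = matvec(x, a_k)  # class-specific map over the locations
        scores.append(sum(t * s for t, s in zip(top_down, saliency)))
    return scores


def full_bilinear_score(x: Matrix, w: Matrix) -> float:
    """Reference score tr(X^T X W) for a full f x f weight matrix W."""
    f = len(w)
    # G = X^T X is the f x f second-order (Gram) matrix of the features.
    g = [[sum(row[i] * row[j] for row in x) for j in range(f)] for i in range(f)]
    # tr(G W) = sum over i, j of G[i][j] * W[j][i]
    return sum(g[i][j] * w[j][i] for i in range(f) for j in range(f))


if __name__ == "__main__":
    x = [[1.0, 0.0], [0.0, 2.0], [1.0, 1.0]]  # toy map: 3 locations, 2 channels
    a = [[1.0, 0.0], [0.0, 1.0]]              # two hypothetical classes
    b = [1.0, 1.0]
    print(attention_pool_scores(x, a, b))     # [3.0, 6.0]
```

The rank-1 form needs only two length-n projections per class instead of building the f × f matrix XᵀX, which is the kind of saving the abstract attributes to the low-rank approximation; `full_bilinear_score` is included only to check that both routes give the same number.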

Data availability

The datasets analyzed during the current study are publicly available online. They were derived from the following public-domain resources: https://www.di.ens.fr/~laptev/actions/hollywood2/; https://serre-lab.clps.brown.edu/resource/hmdb-a-large-human-motion-database; https://www.crcv.ucf.edu/data/UCF101/UCF101.rar.

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant No. 51678075) and the Science and Technology Project of Hunan (Grant No. 2017GK2271).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Xia, L., Ma, W. & Feng, L. Semantic features and high-order physical features fusion for action recognition. Cluster Comput 24, 3515–3529 (2021). https://doi.org/10.1007/s10586-021-03346-9

DOI: https://doi.org/10.1007/s10586-021-03346-9