Abstract

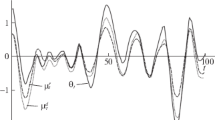

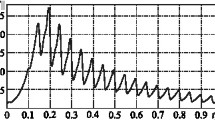

The solution of nonlinear least-squares problems is investigated. The asymptotic behavior is studied and conditions for convergence are derived. To deal with such problems in a recursive and efficient way, an algorithm based on a modified extended Kalman filter (MEKF) is proposed. The error of the MEKF algorithm is proved to be exponentially bounded. Batch and iterated versions of the algorithm are also given. As an application, the algorithm is used to optimize the parameters in certain nonlinear input–output mappings. Simulation results on the interpolation of real data and the prediction of chaotic time series are shown.
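To make the idea of a recursive, Kalman-filter-style solution of a nonlinear least-squares problem concrete, the following minimal sketch applies the textbook extended Kalman filter update to parameter estimation with scalar observations. It is not the MEKF of the paper, whose modification is not described in the abstract. The function names (`ekf_step`, `ekf_fit`), the exponential-decay model, the initial covariance `p0`, the noise variance `r`, and the use of repeated sweeps over the data are all assumptions chosen for illustration.

```python
import math


def ekf_step(theta, P, x, y, h, grad_h, r=1.0):
    """One standard EKF update for a scalar observation y = h(theta, x) + noise.

    theta is a list of n parameters and P an n-by-n symmetric covariance
    (list of lists). r is the assumed measurement-noise variance.
    """
    n = len(theta)
    H = grad_h(theta, x)  # gradient of h with respect to theta, length n
    # P H^T (a column vector); P is symmetric, so H P is its transpose.
    PH = [sum(P[i][j] * H[j] for j in range(n)) for i in range(n)]
    S = sum(H[i] * PH[i] for i in range(n)) + r  # innovation variance
    K = [PH[i] / S for i in range(n)]            # Kalman gain
    e = y - h(theta, x)                          # innovation (residual)
    theta_new = [theta[i] + K[i] * e for i in range(n)]
    # Covariance update P <- P - K H P.
    P_new = [[P[i][j] - K[i] * PH[j] for j in range(n)] for i in range(n)]
    return theta_new, P_new


def ekf_fit(data, theta0, h, grad_h, p0=1.0, r=1.0, passes=1):
    """Process (x, y) pairs one at a time, optionally sweeping several times."""
    n = len(theta0)
    theta = list(theta0)
    P = [[p0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for _ in range(passes):
        for x, y in data:
            theta, P = ekf_step(theta, P, x, y, h, grad_h, r)
    return theta, P


# Hypothetical nonlinear input-output mapping: y = a * exp(-b * x).
def model(theta, x):
    a, b = theta
    return a * math.exp(-b * x)


def model_grad(theta, x):
    a, b = theta
    e = math.exp(-b * x)
    return [e, -a * x * e]


if __name__ == "__main__":
    true_theta = [2.0, 1.5]
    # Noise-free synthetic data on x in [0, 2].
    data = [(0.1 * k, model(true_theta, 0.1 * k)) for k in range(21)]
    theta, _ = ekf_fit(data, [1.0, 1.0], model, model_grad,
                       p0=1.0, r=0.01, passes=5)
    print("estimate:", theta)
```

Each sample is processed once per sweep at a cost that does not grow with the number of samples, which is what makes the recursion attractive compared with re-solving the full least-squares problem. For a model that is linear in the parameters, this update reduces to ordinary recursive least squares.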

Additional information

A. Alessandri and M. Cuneo were partially supported by the EU and the Regione Liguria through the Regional Programmes of Innovative Action (PRAI) of the European Regional Development Fund (ERDF). M. Sanguineti was partially supported by a grant from the PRIN project ‘New Techniques for the Identification and Adaptive Control of Industrial Systems’ of the Italian Ministry of University and Research.

Cite this article

Alessandri, A., Cuneo, M., Pagnan, S. et al. A recursive algorithm for nonlinear least-squares problems. Comput Optim Appl 38, 195–216 (2007). https://doi.org/10.1007/s10589-007-9047-7

DOI: https://doi.org/10.1007/s10589-007-9047-7