Abstract

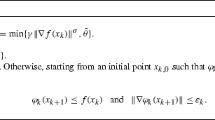

We propose a class of self-adaptive proximal point methods suitable for degenerate optimization problems where multiple minimizers may exist, or where the Hessian may be singular at a local minimizer. If the proximal regularization parameter has the form \(\mu(\mathbf{x})=\beta\|\nabla f(\mathbf{x})\|^{\eta}\), where \(\eta\in[0,2)\) and \(\beta>0\) is a constant, we obtain convergence to the set of minimizers that is linear for \(\eta=0\) and \(\beta\) sufficiently small, superlinear for \(\eta\in(0,1)\), and at least quadratic for \(\eta\in[1,2)\). Two different acceptance criteria for an approximate solution to the proximal problem are analyzed. These criteria are expressed in terms of the gradient of the proximal function, the gradient of the original function, and the iteration difference. With either acceptance criterion, the convergence results are analogous to those of the exact iterates. Preliminary numerical results are presented using some ill-conditioned CUTE test problems.
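As a rough illustration of the regularization rule in the abstract, the sketch below runs a proximal point iteration with \(\mu(\mathbf{x})=\beta\|\nabla f(\mathbf{x})\|^{\eta}\) on a small degenerate test function chosen here for convenience, \(f(\mathbf{x})=\tfrac12(\mathbf{a}^{\top}\mathbf{x}-c)^2\), whose minimizers form a whole hyperplane and whose Hessian \(\mathbf{a}\mathbf{a}^{\top}\) is singular. The test function, the function names (`grad`, `prox_step`, `self_adaptive_prox`), the tolerances, and the exact closed-form proximal solve are all assumptions made for this example; the acceptance criteria for inexact solves analyzed in the paper are not reproduced.

```python
import math

def grad(a, c, x):
    """Gradient of the illustrative f(x) = 0.5 * (a.x - c)^2 (singular Hessian a a^T)."""
    r = sum(ai * xi for ai, xi in zip(a, x)) - c
    return [r * ai for ai in a]

def prox_step(a, c, x, mu):
    """Exact minimizer of f(y) + 0.5 * mu * ||y - x||^2.

    For this particular f the optimality condition
    (a.y - c) a + mu (y - x) = 0 has the closed-form solution below.
    """
    r = sum(ai * xi for ai, xi in zip(a, x)) - c
    t = r / (mu + sum(ai * ai for ai in a))
    return [xi - t * ai for ai, xi in zip(a, x)]

def self_adaptive_prox(a, c, x0, beta, eta, tol=1e-12, max_iter=100):
    """Proximal point iteration with mu_k = beta * ||grad f(x_k)||^eta.

    Returns the final iterate and the gradient norm recorded at each iterate.
    """
    x = list(x0)
    history = []
    for _ in range(max_iter):
        gnorm = math.sqrt(sum(g * g for g in grad(a, c, x)))
        history.append(gnorm)
        if gnorm <= tol:
            break
        mu = beta * gnorm ** eta  # self-adaptive regularization parameter
        x = prox_step(a, c, x, mu)
    return x, history

if __name__ == "__main__":
    a, c, x0 = [1.0, 1.0], 1.0, [3.0, -1.0]
    for eta in (0.0, 1.0):
        x, hist = self_adaptive_prox(a, c, x0, beta=0.5, eta=eta)
        print(f"eta={eta}: {len(hist) - 1} proximal steps, final x = {x}")
```

On this toy problem the residual \(r_k=\mathbf{a}^{\top}\mathbf{x}_k-c\) satisfies \(r_{k+1}=r_k\,\mu_k/(\mu_k+\|\mathbf{a}\|^2)\), so a constant \(\mu_k\) (\(\eta=0\)) contracts the residual by a fixed factor, while \(\eta=1\) makes \(\mu_k\) shrink with the gradient and the residual decreases roughly quadratically. This is consistent with the rates stated in the abstract, but it is only a special case and not a substitute for the general analysis.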

Additional information

This material is based upon work supported by the National Science Foundation under Grant Nos. 0203270, 0619080, and 0620286.

About this article

Cite this article

Hager, W.W., Zhang, H. Self-adaptive inexact proximal point methods. Comput Optim Appl 39, 161–181 (2008). https://doi.org/10.1007/s10589-007-9067-3

DOI: https://doi.org/10.1007/s10589-007-9067-3