Abstract

In recent years, convex optimization methods were successfully applied for various image processing tasks and a large number of first-order methods were designed to minimize the corresponding functionals. Interestingly, it was shown recently in Grewenig et al. (2010) that the simple idea of so-called “superstep cycles” leads to very efficient schemes for time-dependent (parabolic) image enhancement problems as well as for steady state (elliptic) image compression tasks. The “superstep cycles” approach is similar to the nonstationary (cyclic) Richardson method which has been around for over sixty years.

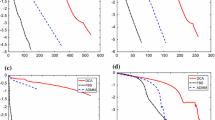

In this paper, we investigate the incorporation of superstep cycles into the projected gradient method. We show for two problems in compressive sensing and image processing, namely the LASSO approach and the Rudin-Osher-Fatemi model that the resulting simple cyclic projected gradient algorithm can numerically compare with various state-of-the-art first-order algorithms. However, due to the nonlinear projection within the algorithm convergence proofs even under restrictive assumptions on the linear operators appear to be hard. We demonstrate the difficulties by studying the simplest case of a two-cycle algorithm in ℝ2 with projections onto the Euclidean ball.

Similar content being viewed by others

References

Alexiades, V., Amiez, G., Gremaud, P.A.: Super-time-stepping acceleration of explicit schemes for parabolic problems. Commun. Numer. Methods Eng. 12, 31–42 (1996)

Anderssen, R.S., Golub, G.H.: Richardson’s non-stationary matrix iterative procedure. Tech. rep., Stanford University, Stanford, CA, USA (1972)

Barzilai, J., Borwein, J.M.: Two-point step size gradient methods. IMA J. Numer. Anal. 8(1), 141–148 (1988)

Bauschke, H.H., Combettes, P.L.: Convex Analysis and Monotone Operator Theory in Hilbert Spaces. Springer, New York (2011)

Beck, A., Teboulle, M.: Fast gradient-based algorithms for constrained total variation image denoising and deblurring. SIAM J. Imaging Sci. 2, 183–202 (2009)

Bertsekas, D.P.: On the Goldstein-Levitin-Polyak gradient projection method. IEEE Trans. Autom. Control 21, 174–183 (1976)

Bertsekas, D.P.: Nonlinear Programming. Athena Scientific, Belmont (1999)

Birgin, E.G., Martínez, J.M., Raydan, M.: Nonmonotone spectral projected gradient methods on convex sets. SIAM J. Optim. 10, 1196–1211 (2000)

Birgin, E.G., Martínez, J.M., Raydan, M.: Algorithm 813—Software for convex-constrained optimization. ACM Trans. Math. Softw. 27, 340–349 (2001)

Birgin, E.G., Martínez, J.M., Raydan, M.: Inexact spectral projected gradient methods on convex sets. IMA J. Numer. Anal. 23, 539–559 (2003)

Birman, M.S.: On a variant of the method of successive approximations. Vestn. Leningr. Univ. 9, 69–76 (1952)

Bonettini, S., Ruggiero, V.: On the convergence of primal-dual hybrid gradient algorithms for total variation image restoration. J. Math. Imaging Vis. 44(3), 236–253 (2012)

Candès, E.J., Romberg, J., Tao, T.: Robust uncertainty principles: Exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 52, 489–509 (2006)

Chambolle, A.: Total variation minimization and a class of binary MRF models. In: Rangarajan, A., Vemuri, B.C., Yuille, A.L. (eds.) Energy Minimization Methods in Computer Vision and Pattern Recognition, EMMCVPR. LNCS, vol. 3757, pp. 136–152. Springer, Berlin (2005)

Chambolle, A., Pock, T.: A first-order primal-dual algorithm for convex problems with applications to imaging. J. Math. Imaging Vis. 40, 120–145 (2011)

Dai, Y.H., Fletcher, R.: Projected Barzilai-Borwein methods for large-scale box-constrained quadratic programming. Numer. Math. 100, 21–47 (2005)

Dai, Y.H., Hager, W.W., Schittkowski, K., Zhang, H.: The cyclic Barzilai-Borwein method for unconstrained optimization. IMA J. Numer. Anal. 26, 604–627 (2006)

Donoho, D.: Compressed sensing. IEEE Trans. Inf. Theory 52, 1289–1306 (2006)

Frassoldati, G., Zanni, L., Zanghirati, G.: New adaptive stepsize selections in gradient methods. J. Ind. Manag. Optim. 4(2), 299–312 (2008)

Gavurin, M.K.: The use of polynomials of best approximation for the improvement of the convergence of iteration processes. Usp. Mat. Nauk 5, 156–160 (1950)

Gentzsch, W.: Numerical solution of linear and non-linear parabolic differential equations by a time discretisation of third order accuracy. In: Hirschel, E.H. (ed.) Proceedings of the Third GAMM Conference on Numerical Methods in Fluid Dynamics, pp. 109–117. Vieweg, Wiesbaden (1979)

Gentzsch, W., Schlüter, A.: Über ein Einschrittverfahren mit zyklischer Schrittweitenänderung zur Lösung parabolischer Differentialgleichungen. Z. Angew. Math. Mech. 58, 415–416 (1978)

Goldstein, A.A.: Convex programming in Hilbert space. Bull. Am. Math. Soc. 70, 709–710 (1964)

Grewenig, S., Weickert, J., Bruhn, A.: From Box filtering to fast explicit diffusion. In: Goesele, M., Roth, S., Kuijper, A., Schiele, B., Schindler, K. (eds.) Pattern Recognition. Lecture Notes in Computer Science, vol. 6376, pp. 533–542. Springer, Berlin (2010)

Hager, W.W., Zhang, H.: A new active set algorithm for box constrained optimization. SIAM J. Optim. 17, 526–557 (2006)

Lebedev, V., Finogenov, S.: Ordering of the iterative parameters in the cyclical Chebyshev iterative method. USSR Comput. Math. Math. Phys. 11(2), 155–170 (1971)

Levitin, E.S., Polyak, B.T.: Constrained minimization problems. USSR Comput. Math. Math. Phys. 6, 1–50 (1966)

Lorenz, D.A.: Constructing test instances for basis pursuit denoising. Tech. rep., TU Braunschweig (2011). arXiv:1103.2897

Loris, I., Bertero, M., Mol, C.D., Zanella, R., Zanni, L.: Accelerating gradient projection methods for l1-constrained signal recovery by steplength selection rules. Appl. Comput. Harmon. Anal. 27(2), 247–254 (2009)

Nesterov, Y.E.: A method of solving a convex programming problem with convergence rate O(1/k 2). Sov. Math. Dokl. 27(2), 372–376 (1983)

Nesterov, Y.E.: Smooth minimization of non-smooth functions. Math. Program. 103, 127–152 (2005)

Rudin, L.I., Osher, S., Fatemi, E.: Nonlinear total variation based noise removal algorithms. Physica D 60, 259–268 (1992)

Steidl, G.: A note on the dual treatment of higher order regularization functionals. Computing 76, 135–148 (2006)

Tibshirani, R.: Regression shrinkage and selection via the lasso. J. R. Stat. Soc. B 58, 267–288 (1994)

van den Berg, E., Friedlander, M.P.: Probing the Pareto frontier for basis pursuit solutions. SIAM J. Sci. Comput. 31, 890–912 (2008)

Wen, Z., Yin, W., Goldfarb, D., Zhang, Y.: A fast algorithm for sparse reconstruction based on shrinkage, subspace optimization, and continuation. SIAM J. Sci. Comput. 32, 1832–1857 (2009)

Young, D.: On Richardson’s method for solving linear systems with positive definite matrices. J. Math. Phys. 32, 243–255 (1954)

Zawilski, A.: Numerical stability of the cyclic Richardson iteration. Numer. Math. 60, 251–290 (1991)

Zhou, B., Gao, L., Dai, Y.H.: Gradient methods with adaptive step-sizes. Comput. Optim. Appl. 35, 69–86 (2005)

Zhu, M.: Fast numerical algorithms for total variation based image restoration. Ph.D. thesis, University of California, Los Angeles, USA (2008)

Zhu, M., Chan, T.: An efficient primal-dual hybrid gradient algorithm for total variation image restoration. Tech. rep., UCLA, Center for Applied Math (2008)

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Setzer, S., Steidl, G. & Morgenthaler, J. A cyclic projected gradient method. Comput Optim Appl 54, 417–440 (2013). https://doi.org/10.1007/s10589-012-9525-4

Received:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10589-012-9525-4