Abstract

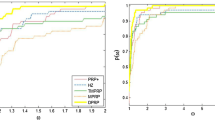

We propose a new gradient method for quadratic programming, named SDC, which alternates some steepest descent (SD) iterates with some gradient iterates that use a constant steplength computed through the Yuan formula. The SDC method exploits the asymptotic spectral behaviour of the Yuan steplength to foster a selective elimination of the components of the gradient along the eigenvectors of the Hessian matrix, i.e., to push the search in subspaces of smaller and smaller dimensions. The new method has global and \(R\)-linear convergence. Furthermore, numerical experiments show that it tends to outperform the Dai–Yuan method, which is one of the fastest methods among the gradient ones. In particular, SDC appears superior as the Hessian condition number and the accuracy requirement increase. Finally, if the number of consecutive SD iterates is not too small, the SDC method shows a monotonic behaviour.
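The alternation described above can be illustrated with a minimal sketch. The abstract gives no formulas, so everything concrete here is an assumption: the Yuan steplength is written in the form commonly stated in terms of two consecutive SD steplengths and gradient norms, the cycle consists of `h` SD iterates followed by `m` iterates with a constant Yuan steplength, and the names `yuan_steplength`, `sdc_diagonal`, `h`, `m`, `tol` and `max_iter` are hypothetical. The Hessian is taken to be diagonal, so its entries are its eigenvalues; this keeps the example dependency-free.

```python
import math


def yuan_steplength(alpha_prev, alpha_curr, gnorm_prev, gnorm_curr):
    """Yuan steplength from two consecutive SD steplengths and gradient norms.

    Assumed form (not stated in the abstract):
    2 / (sqrt((1/a_prev - 1/a_curr)^2 + 4*|g_curr|^2 / (a_prev*|g_prev|)^2)
         + 1/a_prev + 1/a_curr)
    """
    inv_prev = 1.0 / alpha_prev
    inv_curr = 1.0 / alpha_curr
    coupling = 4.0 * gnorm_curr ** 2 / (alpha_prev * gnorm_prev) ** 2
    root = math.sqrt((inv_prev - inv_curr) ** 2 + coupling)
    return 2.0 / (root + inv_prev + inv_curr)


def sdc_diagonal(eigs, b, x0, h=4, m=2, tol=1e-8, max_iter=10000):
    """Minimize 0.5*x'Ax - b'x with A = diag(eigs), all eigs > 0.

    Each cycle takes h SD (Cauchy) steps, then m steps with one constant
    Yuan steplength. Returns (x, number of iterations performed).
    """
    if h < 1 or m < 1:
        raise ValueError("h and m must be positive")
    x = list(x0)
    g = [lam * xi - bi for lam, xi, bi in zip(eigs, x, b)]
    g0_norm = math.sqrt(sum(gi * gi for gi in g))
    alpha_prev = gnorm_prev = alpha_yuan = None
    for k in range(max_iter):
        gnorm = math.sqrt(sum(gi * gi for gi in g))
        if gnorm <= tol * g0_norm:  # relative gradient-norm stopping test
            return x, k
        # Cauchy (exact line search) steplength at the current iterate.
        alpha_sd = gnorm ** 2 / sum(lam * gi * gi for lam, gi in zip(eigs, g))
        phase = k % (h + m)
        if phase < h:
            alpha = alpha_sd
        else:
            if phase == h:
                # Computed once per cycle, then held constant for m steps.
                alpha_yuan = yuan_steplength(alpha_prev, alpha_sd,
                                             gnorm_prev, gnorm)
            alpha = alpha_yuan
        x = [xi - alpha * gi for xi, gi in zip(x, g)]
        # For a diagonal Hessian, g_new = g - alpha*A*g componentwise.
        g = [(1.0 - alpha * lam) * gi for lam, gi in zip(eigs, g)]
        alpha_prev, gnorm_prev = alpha_sd, gnorm
    return x, max_iter


# Small illustrative problem with condition number 100.
eigs = [1.0, 10.0, 50.0, 100.0]
x, iters = sdc_diagonal(eigs, [1.0] * 4, [0.0] * 4)
print(iters, x)
```

The minimizer of this test problem is `b[i] / eigs[i]` in each component, which gives a simple check on the returned `x`. Restricting to a diagonal Hessian loses no generality for studying steplength rules, since gradient iterations of this kind are invariant under orthogonal changes of variables; a dense Hessian would only change how the products with `A` are formed.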

References

Akaike, H.: On a successive transformation of probability distribution and its application to the analysis of the optimum gradient method. Ann. Inst. Stat. Math. Tokyo 11, 1–16 (1959)

Barzilai, J., Borwein, J.: Two-point step size gradient methods. IMA J. Numer. Anal. 8, 141–148 (1988)

Birgin, E., Martínez, J., Raydan, M.: Spectral projected gradient methods: review and perspectives. J. Stat. Softw. (2012, to appear)

Bonettini, S., Landi, G., Loli Piccolomini, E., Zanni, L.: Scaling techniques for gradient projection-type methods in astronomical image deblurring. Int. J. Comput. Math. 90(1), 9–29 (2013)

Cauchy, A.: Méthodes générales pour la résolution des systèmes d’équations simultanées. C. R. Acad. Sci. Paris 25, 536–538 (1847)

Dai, Y.H.: Alternate step gradient method. Optimization 52(4–5), 395–415 (2003)

Dai, Y.H., Fletcher, R.: Projected Barzilai–Borwein methods for large-scale box-constrained quadratic programming. Numer. Math. 100(1), 21–47 (2005)

Dai, Y.H., Hager, W., Schittkowski, K., Zhang, H.: The cyclic Barzilai–Borwein method for unconstrained optimization. IMA J. Numer. Anal. 26(3), 604–627 (2006)

Dai, Y.H., Liao, L.Z.: \(R\)-linear convergence of the Barzilai and Borwein gradient method. IMA J. Numer. Anal. 22(1), 1–10 (2002)

Dai, Y.H., Yuan, Y.: Alternate minimization gradient method. IMA J. Numer. Anal. 23, 377–393 (2003)

Dai, Y.H., Yuan, Y.: Analysis of monotone gradient methods. J. Ind. Manag. Optim. 1, 181–192 (2005)

De Angelis, P., Toraldo, G.: On the identification property of a projected gradient method. SIAM J. Numer. Anal. 30(5), 1483–1497 (1993)

De Asmundis, R., di Serafino, D., Landi, G.: On the regularizing behavior of recent gradient methods in the solution of linear ill-posed problems (2014). http://www.optimization-online.org/DB_HTML/2014/06/4393.html

De Asmundis, R., di Serafino, D., Riccio, F., Toraldo, G.: On spectral properties of steepest descent methods. IMA J. Numer. Anal. 33, 1416–1435 (2013)

van den Doel, K., Ascher, U.: The chaotic nature of faster gradient descent methods. J. Sci. Comput. 51(3), 560–581 (2012)

Figueiredo, M., Nowak, R., Wright, S.: Gradient projection for sparse reconstruction: application to compressed sensing and other inverse problems. IEEE J. Sel. Top. Signal Process. 1, 586–598 (2007)

Fletcher, R.: Low storage method for unconstrained optimization. In: Allgower, E.L., Georg, K. (eds.) Computational Solution of Nonlinear Systems of Equations. Lectures in Applied Mathematics, vol. 26, pp. 165–179. AMS Publications, Providence, RI (1990)

Fletcher, R.: On the Barzilai–Borwein method. In: Qi, L., Teo, K., Yang, X. (eds.) Optimization and Control with Applications. Applied Optimization Series, vol. 96, pp. 235–256. Springer, New York, NY (2005)

Fletcher, R.: A limited memory steepest descent method. Math. Program. Ser. A 135, 413–436 (2012)

Frassoldati, G., Zanni, L., Zanghirati, G.: New adaptive stepsize selections in gradient methods. J. Ind. Manag. Optim. 4(2), 299–312 (2008)

Friedlander, A., Martínez, J., Molina, B., Raydan, M.: Gradient method with retards and generalizations. SIAM J. Numer. Anal. 36, 275–289 (1999)

Grippo, L., Lampariello, F., Lucidi, S.: A nonmonotone line search technique for Newton’s method. SIAM J. Numer. Anal. 23, 707–716 (1986)

Huang, H.: Efficient reconstruction of 2D images and 3D surfaces. Ph.D. Thesis, University of British Columbia, Vancouver (2008)

Lamotte, J.L., Molina, B., Raydan, M.: Smooth and adaptive gradient method with retards. Math. Comput. Model. 36(9–10), 1161–1168 (2002)

Loosli, G., Canu, S.: Quadratic programming and machine learning large scale problems and sparsity. In: Siarry, P. (ed.) Optimization in Signal and Image Processing, pp. 111–135. Wiley ISTE, Hoboken, NJ (2009)

Moré, J., Toraldo, G.: Algorithms for bound constrained quadratic programming problems. Numer. Math. 55, 377–400 (1989)

Nocedal, J., Sartenaer, A., Zhu, C.: On the behavior of the gradient norm in the steepest descent method. Comput. Optim. Appl. 22, 5–35 (2002)

Raydan, M.: On the Barzilai and Borwein choice of steplength for the gradient method. IMA J. Numer. Anal. 13, 321–326 (1993)

Raydan, M.: The Barzilai and Borwein gradient method for the large scale unconstrained minimization problem. SIAM J. Optim. 7, 26–33 (1997)

Raydan, M., Svaiter, B.: Relaxed steepest descent and Cauchy–Barzilai–Borwein method. Comput. Optim. Appl. 21, 155–167 (2002)

Yuan, Y.: A new stepsize for the steepest descent method. J. Comput. Math. 24, 149–156 (2006)

Yuan, Y.: Step-sizes for the gradient method. AMS/IP Stud. Adv. Math. 42(2), 785–796 (2008)

Zhou, B., Gao, L., Dai, Y.H.: Gradient methods with adaptive step-sizes. Comput. Optim. Appl. 35(1), 69–86 (2006)

Acknowledgments

We wish to thank the anonymous referees for their constructive and detailed comments, which helped to improve the quality of this paper. This work was partially supported by INdAM-GNCS (2013 Project "Numerical methods and software for large-scale optimization with applications to image processing" and 2014 Project "First-order optimization methods for image restoration and analysis"), by the National Science Foundation (Grants 1016204 and 1115568), and by the Office of Naval Research (Grant N00014-11-1-0068).

Cite this article

De Asmundis, R., di Serafino, D., Hager, W.W. et al. An efficient gradient method using the Yuan steplength. Comput Optim Appl 59, 541–563 (2014). https://doi.org/10.1007/s10589-014-9669-5

DOI: https://doi.org/10.1007/s10589-014-9669-5