Abstract

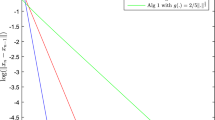

The generalized Nash equilibrium problem (GNEP) is often difficult to solve by Newton-type methods because its solutions tend to be locally nonunique. Here we take an existing trust-region method that is known to converge locally fast under a relatively mild error bound condition, and modify it by a nonmonotone strategy in order to obtain a more reliable and efficient solver. The nonmonotone trust-region method inherits the favorable local convergence properties of its monotone counterpart and is also shown to have the same global convergence properties. Numerical results indicate that the nonmonotone trust-region method performs significantly better than the monotone version and is at least competitive with an existing software package applied to the same reformulation used within our trust-region framework. Additional tests on quasi-variational inequalities (QVIs) are also presented to validate the efficiency of the proposed extension.

References

Bellavia, S., Macconi, M., Morini, B.: An affine scaling trust-region approach to bound-constrained nonlinear systems. Appl. Numer. Math. 44, 257–280 (2003)

Bellavia, S., Macconi, M., Morini, B.: STRSCNE: a scaled trust region solver for constrained nonlinear equations. Comput. Optim. Appl. 28, 31–50 (2004)

Bertsekas, D.P.: Nonlinear Programming, 2nd edn. Athena Scientific, Belmont (1999)

Calamai, P.H., Moré, J.J.: Projected gradient methods for linearly constrained problems. Math. Program. 39, 93–116 (1987)

Dan, H., Yamashita, N., Fukushima, M.: Convergence properties of the inexact Levenberg–Marquardt method under local error bound conditions. Optim. Methods Softw. 17, 605–626 (2002)

Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91, 201–213 (2002)

Dreves, A., Facchinei, F., Fischer, A., Herrich, M.: A new error bound result for generalized Nash equilibrium problems and its algorithmic application. Comput. Optim. Appl. 59, 63–84 (2014)

Dreves, A., Facchinei, F., Kanzow, C., Sagratella, S.: On the solution of the KKT conditions of generalized Nash equilibrium problems. SIAM J. Optim. 21, 1082–1108 (2011)

Facchinei, F., Kanzow, C., Sagratella, S.: QVILIB: a library of quasi-variational inequality test problems. Pac. J. Optim. 9, 225–250 (2013)

Facchinei, F., Kanzow, C., Sagratella, S.: Solving quasi-variational inequalities via their KKT conditions. Math. Program. 144, 369–412 (2014)

Facchinei, F., Kanzow, C.: Generalized Nash equilibrium problems. Ann. Oper. Res. 175, 177–211 (2010)

Facchinei, F., Kanzow, C., Karl, S., Sagratella, S.: The semismooth Newton method for the solution of quasi-variational inequalities. Comput. Optim. Appl. 62, 85–109 (2015)

Fan, J., Yuan, Y.: On the quadratic convergence of the Levenberg–Marquardt method without nonsingularity assumption. Computing 74, 23–39 (2005)

Fischer, A., Herrich, M., Schönefeld, K.: Generalized Nash equilibrium problems—recent advances and challenges. Pesquisa Operacional 34, 521–558 (2014)

Fischer, A., Shukla, P.K., Wang, M.: On the inexactness level of robust Levenberg–Marquardt methods. Optimization 59, 273–287 (2010)

Herrich, M.: Local convergence of Newton-type methods for nonsmooth constrained equations and applications. Ph.D. Thesis, Institute of Mathematics, Technical University of Dresden, Germany (2014)

Izmailov, A.F., Solodov, M.V.: On error bounds and Newton-type methods for generalized Nash equilibrium problems. Comput. Optim. Appl. 59, 201–218 (2014)

Kanzow, C., Steck, D.: Augmented Lagrangian methods for the solution of generalized Nash equilibrium problems. SIAM J. Optim. 26, 2034–2058 (2016)

Kanzow, C., Yamashita, N., Fukushima, M.: Levenberg–Marquardt methods with strong local convergence properties for solving nonlinear equations with convex constraints. J. Comput. Appl. Math. 172, 375–397 (2004)

Qi, L., Tong, X.J., Li, D.H.: Active-set projected trust-region algorithm for box-constrained nonsmooth equations. J. Optim. Theory Appl. 120, 601–625 (2004)

Toint, P.L.: Non-monotone trust-region algorithms for nonlinear optimization subject to convex constraints. Math. Program. 77, 69–94 (1997)

Tong, X.J., Qi, L.: On the convergence of a trust-region method for solving constrained nonlinear equations with degenerate solutions. J. Optim. Theory Appl. 123, 187–211 (2004)

Yamashita, N., Fukushima, M.: On the rate of convergence of the Levenberg–Marquardt method. Computing 15, 239–249 (2001)

A Proofs of Lemma 1 and Proposition 1

We first recall some elementary properties of the projection operator.

Lemma 3

The following statements hold:

- (a) \( \big ( P_{\varOmega } (z) - z \big )^T \big ( P_{\varOmega } (z) - x \big ) \le 0 \quad \forall x \in \varOmega , \ \forall z \in \mathbb {R}^n \);

- (b) \(\Vert P_{\varOmega }(x_2) - P_{\varOmega }(x_1) \Vert \le \Vert x_2 - x_1 \Vert \quad \forall x_1,x_2 \in \mathbb {R}^n\);

- (c) Given \( x, d \in \mathbb {R}^n \), the function

  $$\begin{aligned} \theta (t) := \frac{\big \Vert P_{\varOmega } (x+ t d) - x \big \Vert }{t}, \quad t > 0, \end{aligned}$$

  is nonincreasing.

The first two properties in Lemma 3 are well-known properties of the projection (see [3]), whereas the third property was shown, e.g., in [4] in the context of a suitable globalization of a projected gradient method.
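The three statements of Lemma 3 can be checked numerically on a simple feasible set. The following is a minimal sketch, not part of the original paper: it assumes \( \varOmega \) is the box \( [-1,1]^n \), for which the projection is a componentwise clip, and it samples \( x \) inside the box, as in the way the lemma is applied to feasible iterates. The names `project_box` and `check_lemma3` are hypothetical helpers introduced only for this illustration.

```python
import numpy as np


def project_box(z, lo, hi):
    """Euclidean projection of z onto the box {x : lo <= x <= hi}."""
    return np.clip(z, lo, hi)


def check_lemma3(n=5, trials=200, seed=0, tol=1e-12):
    """Sample random points and test Lemma 3(a)-(c) for a box (assumed Omega)."""
    rng = np.random.default_rng(seed)
    lo, hi = -np.ones(n), np.ones(n)
    ok_a = ok_b = ok_c = True
    for _ in range(trials):
        z = 3.0 * rng.standard_normal(n)   # arbitrary point in R^n
        x = rng.uniform(lo, hi)            # feasible point in Omega
        pz = project_box(z, lo, hi)

        # (a) (P(z) - z)^T (P(z) - x) <= 0 for all x in Omega
        ok_a = ok_a and bool((pz - z) @ (pz - x) <= tol)

        # (b) nonexpansiveness of the projection
        x1 = 3.0 * rng.standard_normal(n)
        x2 = 3.0 * rng.standard_normal(n)
        lhs = np.linalg.norm(project_box(x2, lo, hi) - project_box(x1, lo, hi))
        ok_b = ok_b and bool(lhs <= np.linalg.norm(x2 - x1) + tol)

        # (c) theta(t) = ||P(x + t d) - x|| / t is nonincreasing on a grid of t > 0
        d = rng.standard_normal(n)
        ts = np.linspace(0.1, 5.0, 50)
        theta = np.array(
            [np.linalg.norm(project_box(x + t * d, lo, hi) - x) / t for t in ts]
        )
        ok_c = ok_c and bool(np.all(np.diff(theta) <= tol))
    return ok_a, ok_b, ok_c
```

Calling `check_lemma3()` returns one boolean per statement; an entry is `True` only if the corresponding inequality held on every sample. Such a test cannot prove the lemma, but it is a cheap sanity check when implementing a projection for a different set.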

Proof of Lemma 1

Let \( k \in \mathbb {N} \) be fixed, choose \( \Delta > 0 \), and define

for the sake of notational convenience. Then an elementary calculation yields

where the inequality follows from Lemma 3(a), the definition of \( \bar{d}_k^G (\Delta ) \), and the feasibility of \( x_k \). On the other hand, Lemma 3(c) with \( d := -\, \nabla \varPsi (x_k) \) implies that

holds for all \( 0 < \Delta \le \Delta _{\max } \). Combining the last two inequalities yields the assertion. \(\square \)
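The way Lemma 3(a) enters this argument can be illustrated in a generic form. The following sketch assumes only that \( x \in \varOmega \) is feasible and writes \( \bar{d} := P_{\varOmega }(x + d) - x \) for an arbitrary step \( d \in \mathbb {R}^n \); the symbols \( d \) and \( \bar{d} \) are placeholders chosen for illustration and are not the paper's exact definitions. Applying Lemma 3(a) with \( z := x + d \) gives

$$\begin{aligned} 0 \ge \big ( P_{\varOmega }(x+d) - (x+d) \big )^T \big ( P_{\varOmega }(x+d) - x \big ) = ( \bar{d} - d )^T \bar{d} = \Vert \bar{d} \Vert ^2 - d^T \bar{d}, \end{aligned}$$

so that \( d^T \bar{d} \ge \Vert \bar{d} \Vert ^2 \). In particular, if \( d = -\, \alpha \nabla \varPsi (x) \) for some \( \alpha > 0 \), then \( \nabla \varPsi (x)^T \bar{d} \le -\, \Vert \bar{d} \Vert ^2 / \alpha \), which means that the projected step is a descent direction whenever it is nonzero.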

Proof of Proposition 1

Since \(x_k\rightarrow x^*\) for \(k\in K\) and \(k\rightarrow \infty \), the continuity of \(F'\) implies that there is a constant \( b_1 \) such that \( \Vert F'(x_k)\Vert \le b_1 \) for all \( k \in K \). Using (4), (5), and Lemma 3(b), we therefore obtain for all \(k\in K\)

where the last inequality follows from the definition of \(\gamma _k\) in (S.2).

Since \(x^*\) is not a stationary point by assumption, we can follow the argument from the first part of the proof of Theorem 1 in order to see that there is a constant \( b > 0 \) such that

Let

We first prove that (6) holds for all \( k\in K, k \ge \hat{k}\) and all \( \Delta \in (0, \tilde{\Delta }]\). From the definition of \(\bar{d}_k(\Delta )\), we get that

where the second inequality follows directly from Lemma 1 and (27), the third inequality follows from (28), (29), and the fact that \(0 < \gamma _k\le 1\), while the last inequality holds since \(\Delta \le \tilde{\Delta }\).

In order to prove that (7) holds for all sufficiently large \(k\in K\) and for all \(\Delta \) in an interval \((0,\bar{\Delta }]\), we will first show that

and

hold for suitable constants \( \beta > 0 \) and \( c_1 > 0 \).

First we show that (30) holds. To this end, taking \(\Delta \in (0,\tilde{\Delta }]\) and using Lemma 1 and (27), we can write

where the second inequality follows from (29), and the third holds recalling that \( (1- \sigma )< 1, \gamma _k \le 1 \), and (28). Consequently, we have

where the first inequality follows from the definitions of \(\bar{d}_k\) and \(t^*(\Delta )\) in (S.4). Thus, using (28) and recalling again that \(\gamma _k \le 1\), we obtain that there exists \(\beta > 0\) such that (30) is satisfied for all \( k\in K, k \ge \hat{k}\) and all \( \Delta \in (0, \tilde{\Delta }]\).

Now we prove (31). From Lemma 3(b), recalling the definitions of \( d_k^G(\Delta ) \), \( \bar{d}_k^G(\Delta ) \) and \(\gamma _k\), we have

From the definition of \( \bar{d}_k^{tr}(\Delta )\), using Lemma 3(b) again, and recalling that \(d_k^{tr}(\Delta )\) is the trust-region solution, we have

Consequently, from the last two inequalities we get \(\Vert \bar{d}_k(\Delta ) \Vert \le \Delta \). Since \(F'\) is locally Lipschitzian, it is globally Lipschitz on compact sets. Consequently, \( \nabla \varPsi \) is also globally Lipschitz on compact sets. Since \( x_k \rightarrow x^* \) for \( k \in K \) and \( \bar{d}_k(\Delta ) \) is bounded for all \( \Delta \in (0, \Delta _{\max } ] \), we can apply the Mean Value Theorem and obtain the existence of suitable numbers \( \theta _k \in (0,1) \) and a Lipschitz constant \( L > 0 \) such that

for all \( k \in K \) and all \( \Delta \in (0, \Delta _{\max }] \), where the last inequality takes into account the Cauchy–Schwarz inequality. Hence, we can write

for suitable constants \( c_1, c_2 > 0 \).
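The mean value step used above can be sketched generically. Assume that \( \nabla \varPsi \) is Lipschitz continuous with constant \( L > 0 \) on a convex set containing both \( x \) and \( x + d \); here \( x \), \( d \), and \( \theta \) are placeholder symbols for illustration rather than the paper's indexed quantities. The Mean Value Theorem yields some \( \theta \in (0,1) \) with \( \varPsi (x+d) - \varPsi (x) = \nabla \varPsi (x + \theta d)^T d \), and therefore

$$\begin{aligned} \big | \varPsi (x+d) - \varPsi (x) - \nabla \varPsi (x)^T d \big | = \big | \big ( \nabla \varPsi (x + \theta d) - \nabla \varPsi (x) \big )^T d \big | \le \Vert \nabla \varPsi (x + \theta d) - \nabla \varPsi (x) \Vert \, \Vert d \Vert \le L \theta \Vert d \Vert ^2 \le L \Vert d \Vert ^2 , \end{aligned}$$

where the first inequality is the Cauchy–Schwarz inequality and the second uses the Lipschitz property. For a step with \( \Vert d \Vert \le \Delta \), the deviation of \( \varPsi \) from its linearization is thus at most \( L \Delta ^2 \).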

Finally, exploiting (30) and (31), it follows that there exists \( \bar{\Delta } > 0\) such that

This concludes the proof. \(\square \)

Cite this article

Galli, L., Kanzow, C. & Sciandrone, M. A nonmonotone trust-region method for generalized Nash equilibrium and related problems with strong convergence properties. Comput Optim Appl 69, 629–652 (2018). https://doi.org/10.1007/s10589-017-9960-3
