Abstract

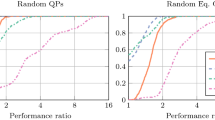

Interior point methods have attracted most of the attention in recent decades for solving large-scale convex quadratic programming problems. In this paper we take a different route and present an augmented Lagrangian method for convex quadratic programming based on recent developments for nonlinear programming. In our approach, box constraints are penalized while equality constraints are kept within the subproblems. The motivation for this approach is that Newton’s method can be efficient for minimizing a piecewise quadratic function. Moreover, since augmented Lagrangian methods do not rely on proximity to the central path, some of the inherent difficulties of interior point methods can be avoided. In addition, a good starting point can be easily exploited, which can be relevant when solving subproblems arising in sequential quadratic programming, in sensitivity analysis and in branch-and-bound techniques. We prove well-definedness and finite convergence of the proposed method. Numerical experiments on separable strictly convex quadratic problems formulated from the Netlib collection show that our method can be competitive with interior point methods, in particular when a good initial point is available and a second-order Lagrange multiplier update is used.
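The splitting described above (box constraints penalized, equality constraints kept in the subproblems, Newton's method on a piecewise quadratic) can be illustrated with a minimal Python/NumPy sketch. This is not the authors' implementation. It assumes the problem min ½xᵀQx + cᵀx subject to Ax = b, l ≤ x ≤ u, with Q symmetric positive definite, finite bounds and A of full row rank. It also assumes the standard Powell-Hestenes-Rockafellar (PHR) form for the penalized box, L_ρ(x) = ½xᵀQx + cᵀx + (ρ/2)(‖max(0, l − x + μ_l/ρ)‖² + ‖max(0, x − u + μ_u/ρ)‖²), which is minimized over {x : Ax = b} by Newton steps with the generalized Hessian Q + ρD (D marks the penalized bounds) and Armijo backtracking. The function names, parameter values (ρ = 10, the factor-10 penalty increase, the tolerances) and the first-order multiplier update are hypothetical illustrative choices; the second-order multiplier update mentioned above is not implemented.

```python
import numpy as np


def phr_value_grad(x, Q, c, l, u, mu_l, mu_u, rho):
    """PHR augmented Lagrangian in which only the box l <= x <= u is penalized.

    The constant -(||mu_l||^2 + ||mu_u||^2) / (2 rho) is dropped; it does not
    change the minimizer in x.
    """
    shift_l = np.maximum(0.0, l - x + mu_l / rho)  # penalized part of l - x <= 0
    shift_u = np.maximum(0.0, x - u + mu_u / rho)  # penalized part of x - u <= 0
    value = 0.5 * x @ Q @ x + c @ x + 0.5 * rho * (shift_l @ shift_l + shift_u @ shift_u)
    grad = Q @ x + c - rho * shift_l + rho * shift_u
    return value, grad, shift_l > 0, shift_u > 0


def solve_subproblem(x, Q, c, A, b, l, u, mu_l, mu_u, rho, tol=1e-10, max_iter=100):
    """Minimize the piecewise quadratic PHR function over {x : A x = b}
    with a semismooth Newton method and Armijo backtracking."""
    m, n = A.shape
    # Euclidean projection onto A x = b (assumes A has full row rank);
    # afterwards every Newton direction satisfies A d = 0.
    x = x + A.T @ np.linalg.solve(A @ A.T, b - A @ x)
    for _ in range(max_iter):
        value, grad, act_l, act_u = phr_value_grad(x, Q, c, l, u, mu_l, mu_u, rho)
        # Generalized Hessian: Q plus rho for each currently penalized bound.
        H = Q + rho * np.diag(act_l.astype(float) + act_u.astype(float))
        K = np.block([[H, A.T], [A, np.zeros((m, m))]])
        d = np.linalg.solve(K, np.concatenate([-grad, np.zeros(m)]))[:n]
        if np.linalg.norm(d, np.inf) < tol:
            break
        t, slope = 1.0, grad @ d  # slope = -d^T H d < 0 because A d = 0
        while t > 1e-12 and phr_value_grad(x + t * d, Q, c, l, u, mu_l, mu_u, rho)[0] > value + 1e-4 * t * slope:
            t *= 0.5
        x = x + t * d
    return x


def alm_box_qp(Q, c, A, b, l, u, x0=None, rho=10.0, tol=1e-8, max_outer=50):
    """Outer augmented Lagrangian loop with a first-order multiplier update."""
    n = Q.shape[0]
    x = np.zeros(n) if x0 is None else np.array(x0, dtype=float)  # warm start if given
    mu_l, mu_u = np.zeros(n), np.zeros(n)
    prev_infeas = np.inf
    for _ in range(max_outer):
        x = solve_subproblem(x, Q, c, A, b, l, u, mu_l, mu_u, rho)
        mu_l = np.maximum(0.0, mu_l + rho * (l - x))
        mu_u = np.maximum(0.0, mu_u + rho * (x - u))
        infeas = np.max(np.maximum(0.0, np.maximum(l - x, x - u)))
        compl = max(np.max(np.abs(np.minimum(x - l, mu_l))),
                    np.max(np.abs(np.minimum(u - x, mu_u))))
        if infeas < tol and compl < tol:
            break
        if infeas > 0.5 * prev_infeas:
            rho *= 10.0  # box violation did not shrink enough
        prev_infeas = infeas
    return x, mu_l, mu_u
```

On the toy problem min ½‖x‖² subject to x₁ + x₂ + x₃ = 3, 0 ≤ x ≤ (0.5, 2, 2), the sketch should approach x = (0.5, 1.25, 1.25) with multiplier 0.75 on the bound x₁ ≤ 0.5. A warm start enters only through `x0`. For the separable problems mentioned above Q is diagonal, so a practical code would use a sparse symmetric factorization instead of the dense `np.linalg.solve` used here.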

Notes

Freely available at: www.ime.usp.br/~egbirgin/tango.

Problems forplan, gfrd-pnc and pilot.we were removed from the Netlib collection, since they were not available in the presolved library [23].

References

Andreani, R., Birgin, E.G., Martínez, J.M., Schuverdt, M.L.: On augmented Lagrangian methods with general lower-level constraints. SIAM J. Optim. 18, 1286–1309 (2008)

Andreani, R., Haeser, G., Martínez, J.M.: On sequential optimality conditions for smooth constrained optimization. Optimization 60(5), 627–641 (2011)

Andreani, R., Haeser, G., Schuverdt, M.L., Silva, P.J.S.: A relaxed constant positive linear dependence constraint qualification and applications. Math. Program. 135, 255–273 (2012)

Andreani, R., Haeser, G., Schuverdt, M.L., Silva, P.J.S.: Two new weak constraint qualifications and applications. SIAM J. Optim. 22, 1109–1135 (2012)

Andreani, R., Martínez, J.M., Ramos, A., Silva, P.J.S.: A cone-continuity constraint qualification and algorithmic consequences. SIAM J. Optim. 26(1), 96–110 (2016)

Barrios, J.G., Cruz, J.Y.B., Ferreira, O.P., Németh, S.Z.: A semi-smooth Newton method for a special piecewise linear system with application to positively constrained convex quadratic programming. J. Comput. Appl. Math. 301, 91–100 (2016)

Bertsekas, D.P.: Constrained Optimization and Lagrange Multiplier Methods. Athena Scientific, Belmont (1996)

Bertsekas, D.P.: Nonlinear Programming. Athena Scientific, Belmont (1999)

Bertsekas, D.P.: Convex Optimization Algorithms. Athena Scientific, Belmont (2015)

Bezanson, J., Edelman, A., Karpinski, S., Shah, V.B.: Julia: A Fresh Approach to Numerical Computing. SIAM Rev. 59(1), 65–98 (2017)

Birgin, E.G., Martínez, J.M.: Practical Augmented Lagrangian Methods for Constrained Optimization. SIAM Publications, Philadelphia (2014)

Birgin, E.G., Bueno, L.F., Martínez, J.M.: Sequential equality-constrained optimization for nonlinear programming. Comput. Optim. Appl. 65, 699–721 (2016)

Birgin, E.G., Haeser, G., Ramos, A.: Augmented Lagrangians with constrained subproblems and convergence to second-order stationary points. Comput. Optim. Appl. 69, 51–75 (2018)

Bixby, R.E., Saltzman, M.J.: Recovering an optimal LP basis from an interior point solution. Oper. Res. Lett. 15(4), 169–178 (1994)

Bueno, L.F., Haeser, G., Rojas, F.N.: Optimality conditions and constraint qualifications for generalized Nash equilibrium problems and their practical implications. SIAM J. Optim. 29(1), 31–54 (2019)

Buys, J.D.: Dual algorithms for constrained optimization problems. Ph.D. thesis, University of Leiden (1972)

Dongarra, J.J., Grosse, E.: Distribution of mathematical software via electronic mail. Commun. ACM 30(5), 403–407 (1987)

Dostál, Z., Kozubek, T., Sadowska, M., Vondrák, V.: Scalable Algorithms for Contact Problems. Springer, New York (2016)

Duff, I.S.: MA57: a code for the solution of sparse symmetric definite and indefinite systems. ACM Trans. Math. Softw. 30(2), 118–144 (2004)

Gondzio, J.: Interior point methods 25 years later. Eur. J. Oper. Res. 218(3), 587–601 (2012)

Güler, O.: Augmented Lagrangian algorithms for linear programming. J. Optim. Theory Appl. 75(3), 445–470 (1992)

Haeser, G., Hinder, O., Ye, Y.: On the behavior of Lagrange multipliers in convex and non-convex infeasible interior point methods (2017). arXiv:1707.07327

Hager, W.W.: COAP test problems: a collection of optimization problems (2018). http://users.clas.ufl.edu/hager/coap/format.html

HSL: A collection of Fortran codes for large-scale scientific computation (2018). www.hsl.rl.ac.uk

Janin, R.: Directional derivative of the marginal function in nonlinear programming. In: Fiacco, A.V. (ed.) Sensitivity, Stability and Parametric Analysis, Mathematical Programming Studies, vol. 21, pp. 110–126. Springer, Berlin (1984)

John, E., Yildirim, E.A.: Implementation of warm-start strategies in interior-point methods for linear programming in fixed dimension. Comput. Optim. Appl. 41(2), 151–183 (2007)

Kanzow, C., Steck, D.: An example comparing the standard and safeguarded augmented Lagrangian methods. Oper. Res. Lett. 45(6), 598–603 (2017)

Mehrotra, S.: On the Implementation of a Primal-Dual Interior Point Method. SIAM J. Optim. 2(4), 575–601 (1992)

Qi, L., Wei, Z.: On the constant positive linear dependence condition and its application to SQP methods. SIAM J. Optim. 10, 963–981 (2000)

Sridhar, S., Wright, S., Re, C., Liu, J., Bittorf, V., Zhang, C.: An approximate, efficient LP solver for LP rounding. In: Burges, C.J.C., Bottou, L., Welling, M., Ghahramani, Z., Weinberger, K.Q. (eds.) Advances in Neural Information Processing Systems 26, pp. 2895–2903. Curran Associates, Inc. (2013)

Vavasis, S.A., Ye, Y.: Identifying an optimal basis in linear programming. Ann. Oper. Res. 62(1), 565–572 (1996)

Ye, Y.: On the finite convergence of interior-point algorithms for linear programming. Math. Program. 57(1), 325–335 (1992)

Yen, I.E.H., Zhong, K., Hsieh, C.J., Ravikumar, P.K., Dhillon, I.S.: Sparse linear programming via primal and dual augmented coordinate descent. In: Cortes, C., Lawrence, N.D., Lee, D.D., Sugiyama, M., Garnett, R. (eds.) Advances in Neural Information Processing Systems 28, pp. 2368–2376. Curran Associates, Inc. (2015)

Yildirim, E.A., Wright, S.J.: Warm-start strategies in interior-point methods for linear programming. SIAM J. Optim. 12(3), 782–810 (2002)

Yuan, Y.X.: Analysis on a superlinearly convergent augmented Lagrangian method. Acta Math. Sin. (Engl. Ser.) 30(1), 1–10 (2014)

Acknowledgements

We dedicate this paper, in honor of his 70th birthday, to Professor J. M. Martínez, who greatly influenced our careers. Moreover, we thank him for important discussions during the design of the original ideas of this work. In addition, we express our gratitude to Professors Roger Behling, Ernesto Birgin and Thadeu Senne for discussions at different stages of the development of the results presented here. We also thank the anonymous referees, whose valuable suggestions improved this paper.

Additional information

This work was supported by Brazilian Agencies Fundação de Amparo à Pesquisa do Estado de São Paulo (FAPESP) (Grants 2015/02528-8, 2017/18308-2 and 2018/24293-0), Coordenação de Aperfeiçoamento de Pessoal de Nível Superior (CAPES) and Conselho Nacional de Desenvolvimento Científico e Tecnológico (CNPq).

About this article

Cite this article

Bueno, L.F., Haeser, G. & Santos, LR. Towards an efficient augmented Lagrangian method for convex quadratic programming. Comput Optim Appl 76, 767–800 (2020). https://doi.org/10.1007/s10589-019-00161-2

DOI: https://doi.org/10.1007/s10589-019-00161-2