Abstract

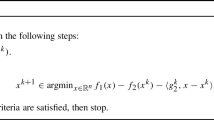

In this paper, we propose a new method for a class of difference-of-convex (DC) optimization problems, whose objective is the sum of a smooth function and a possibly non-prox-friendly DC function. The method sequentially solves subproblems constructed from a quadratic approximation of the smooth function and a linear majorization of the concave part of the DC function. We allow the subproblem to be solved inexactly, and propose a new inexact rule to characterize the inexactness of the approximate solution. For several classical algorithms applied to the subproblem, we derive practical termination criteria so as to obtain solutions satisfying the inexact rule. We also present some convergence results for our method, including the global subsequential convergence and a non-asymptotic complexity analysis. Finally, numerical experiments are conducted to illustrate the efficiency of our method.
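The iteration described above can be illustrated with a minimal sketch under simplifying assumptions that are not taken from the paper: the smooth part is \(f({\varvec{x}}) = \frac{1}{2}\Vert \varvec{A}{\varvec{x}} - \varvec{b}\Vert ^2\), the DC part is \(\lambda (\Vert {\varvec{x}}\Vert _1 - \Vert {\varvec{x}}\Vert _2)\), and the quadratic model uses a multiple of the identity, so each subproblem is solved exactly by soft-thresholding. The function names are hypothetical; the method in the paper allows more general matrices and inexact subproblem solves.

```python
import numpy as np

def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def objective(x, A, b, lam):
    """F(x) = 0.5*||Ax - b||^2 + lam*(||x||_1 - ||x||_2)."""
    r = A @ x - b
    return 0.5 * (r @ r) + lam * (np.abs(x).sum() - np.linalg.norm(x))

def dc_quadratic_steps(A, b, lam, x0, num_iters=50):
    """Illustrative outer loop with B_k = L * I (an assumption of this sketch)."""
    L = np.linalg.norm(A, 2) ** 2          # Lipschitz constant of grad f
    x = np.asarray(x0, dtype=float).copy()
    history = [objective(x, A, b, lam)]
    for _ in range(num_iters):
        grad = A.T @ (A @ x - b)           # gradient of the smooth part
        nx = np.linalg.norm(x)
        # Subgradient of the convex function lam*||.||_2 at x; this gives
        # the linear majorization of the concave part -lam*||.||_2.
        xi = lam * x / nx if nx > 0 else np.zeros_like(x)
        # Subproblem: min_u <grad - xi, u - x> + (L/2)||u - x||^2 + lam*||u||_1
        x = soft_threshold(x - (grad - xi) / L, lam / L)
        history.append(objective(x, A, b, lam))
    return x, history
```

With this choice of model the objective values in `history` are non-increasing, which makes the sketch easy to sanity-check on random data.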

Notes

This is applicable since our choice of \({\varvec{B}_{k}}\) in (5.2) is the sum of a diagonal matrix and a rank-one matrix.
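The routine this note refers to is not shown here. As a general illustration of why a diagonal-plus-rank-one structure is convenient, the sketch below solves a linear system with such a matrix in \(O(n)\) operations using the Sherman-Morrison formula. It assumes a matrix of the form \(\mathrm{diag}(\varvec{d}) + \varvec{u}\varvec{u}^\top \) with positive \(\varvec{d}\); the function name and this exact form are assumptions, not the paper's definition in (5.2).

```python
import numpy as np

def solve_diag_plus_rank_one(d, u, r):
    """Solve (diag(d) + u u^T) y = r in O(n) via the Sherman-Morrison formula.

    Assumes d > 0 elementwise, so the matrix is positive definite.
    """
    dinv_r = r / d                      # D^{-1} r
    dinv_u = u / d                      # D^{-1} u
    scale = (u @ dinv_r) / (1.0 + u @ dinv_u)
    return dinv_r - scale * dinv_u
```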

The code can be downloaded from https://github.com/stephenbeckr/zeroSR1/tree/master/paperExperiments/Lasso.

In [4], the restart frequency is 200. We use 2000 instead to improve the performance of pDCA\(_e\) in our numerical experiments.
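To make the role of the restart frequency concrete, here is a small sketch of FISTA-type extrapolation weights with a fixed restart. It assumes the common recursion \(\theta _{k+1} = (1 + \sqrt{1 + 4\theta _k^2})/2\) and \(\beta _k = (\theta _{k-1} - 1)/\theta _k\), with \(\theta \) reset to 1 every `restart_every` iterations. The function and parameter names are hypothetical, and any adaptive restart rule is omitted.

```python
import math

def extrapolation_weights(num_iters, restart_every):
    """FISTA-type weights beta_k with a fixed restart (illustrative only)."""
    betas = []
    theta_prev, theta = 1.0, 1.0
    for k in range(num_iters):
        if k > 0 and k % restart_every == 0:
            theta_prev, theta = 1.0, 1.0       # restart: momentum drops to zero
        betas.append((theta_prev - 1.0) / theta)
        theta_prev, theta = theta, (1.0 + math.sqrt(1.0 + 4.0 * theta * theta)) / 2.0
    return betas
```

A larger `restart_every` lets the weights grow closer to 1 before they are reset, so the choice between 200 and 2000 changes how much momentum the scheme accumulates.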

Indeed, for any \({\varvec{x}}\in \mathrm{I\!R}^n\), a subgradient in \(\partial g({\varvec{x}})\) is \(\varvec{\xi }= \tau {\varvec{x}} + \lambda \mu \,\varvec{u} + \varvec{A}^\top (\varvec{v} + \varvec{b})\), where \(\varvec{u}\) and \(\varvec{v}\) are given by

$$ \varvec{u}_i = {\left\{ \begin{array}{ll} {\text{sign}}({\varvec{x}}_i), &{} \ \ {\text{if}}\ i\in C_u, \\ 0, &{} \ \ {\text{otherwise,}} \end{array}\right. } \ \ \ \ \varvec{v}_i = {\left\{ \begin{array}{ll} (\varvec{A}{\varvec{x}} - \varvec{b})_i, &{} \ \ {\text{if}}\ i\in C_v, \\ 0, &{} \ \ {\text{otherwise.}} \end{array}\right. } $$

Here, \(C_u\) is an arbitrary index set corresponding to the largest k elements of \({\varvec{x}}\) in magnitude, and \(C_v\) is an arbitrary index set corresponding to the largest r elements of \(\varvec{A}{\varvec{x}} - \varvec{b}\) in magnitude.
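The formula for \(\varvec{\xi }\) can be evaluated directly. The sketch below is a literal transcription of the expression in this note, with ties in the index sets broken by a stable sort (one arbitrary but deterministic choice). The function name and argument names are hypothetical.

```python
import numpy as np

def subgradient_g(x, A, b, tau, lam, mu, k, r):
    """Evaluate xi = tau*x + lam*mu*u + A^T (v + b) as stated in the note."""
    # C_u: indices of the k largest entries of x in magnitude.
    C_u = np.argsort(-np.abs(x), kind="stable")[:k]
    u = np.zeros_like(x, dtype=float)
    u[C_u] = np.sign(x[C_u])

    # C_v: indices of the r largest entries of Ax - b in magnitude.
    residual = A @ x - b
    C_v = np.argsort(-np.abs(residual), kind="stable")[:r]
    v = np.zeros_like(residual, dtype=float)
    v[C_v] = residual[C_v]

    return tau * x + lam * mu * u + A.T @ (v + b)
```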

It has been shown in [24] that the sequence generated by pDCA\(_e\) converges locally linearly to a stationary point of (6.5). Moreover, it has been shown in [24] that pDCA\(_e\) outperforms NPG\(_{\text{major}}\) proposed in [25, Algorithm 2]. Consequently, we do not compare our method with NPG\(_{\text{major}}\) here.

References

Ahn, M., Pang, J.S., Xin, J.: Difference-of-convex learning: directional stationarity, optimality, and sparsity. SIAM J. Optim. 27, 1637–1665 (2017)

Beck, A.: First-Order Methods in Optimization. SIAM (2017)

Becker, S., Fadili, J., Ochs, P.: On quasi-Newton forward-backward splitting: proximal calculus and convergence. SIAM J. Optim. 29, 2445–2482 (2019)

Becker, S., Candès, E.J., Grant, M.C.: Templates for convex cone problems with applications to sparse signal recovery. Math. Program. Comput. 3, 165–218 (2011)

Bonettini, S., Loris, I., Porta, F., Prato, M.: Variable metric inexact line-search-based methods for nonsmooth optimization. SIAM J. Optim. 26, 891–921 (2016)

Byrd, R.H., Nocedal, J., Oztoprak, F.: An inexact successive quadratic approximation method for \(L\)-1 regularized optimization. Math. Program. 157, 375–396 (2016)

Bonettini, S., Porta, F., Ruggiero, V.: A variable metric forward-backward method with extrapolation. SIAM J. Sci. Comput. 38, A2558–A2584 (2016)

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2, 183–202 (2009)

Chouzenoux, E., Pesquet, J.C., Repetti, A.: Variable metric forward-backward algorithm for minimizing the sum of a differentiable function and a convex function. J. Optim. Theory Appl. 162, 107–132 (2014)

Fan, J., Li, R.: Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 96, 1348–1360 (2001)

Ghanbari, H., Scheinberg, K.: Proximal quasi-Newton methods for regularized convex optimization with linear and accelerated sublinear convergence rates. Comput. Optim. Appl. 69, 597–627 (2018)

Gotoh, J.Y., Takeda, A., Tono, K.: DC formulations and algorithms for sparse optimization problems. Math. Program. 169, 141–176 (2018)

Gong, P., Zhang, C., Lu, Z., Huang, J., Ye, J.: A general iterative shrinkage and thresholding algorithm for non-convex regularized optimization problems. In: International Conference on Machine Learning, pp. 37–45 (2013)

Kanzow, C., Lechner, T.: Globalized inexact proximal Newton-type methods for nonconvex composite functions. https://www.mathematik.uni-wuerzburg.de/fileadmin/10040700/paper/ProxNewton.pdf (2020)

Karimi, S., Vavasis, S.: IMRO: a proximal quasi-Newton method for solving \(\ell _1\)-regularized least squares problems. SIAM J. Optim. 27, 583–615 (2017)

Lee, C.P., Wright, S.J.: Inexact successive quadratic approximation for regularized optimization. Comput. Optim. Appl. 72, 641–674 (2019)

Li, G., Liu, T., Pong, T.K.: Peaceman-Rachford splitting for a class of nonconvex optimization problems. Comput. Optim. Appl. 68, 407–436 (2017)

Li, J., Andersen, M.S., Vandenberghe, L.: Inexact proximal Newton methods for self-concordant functions. Math. Methods Oper. Res. 85, 19–41 (2017)

Li, H., Lin, Z.: Accelerated proximal gradient methods for nonconvex programming. In: Advances in Neural Information Processing Systems, pp. 379–387 (2015)

Lin, H., Mairal, J., Harchaoui, Z.: An inexact variable metric proximal point algorithm for generic quasi-Newton acceleration. SIAM J. Optim. 29, 1408–1443 (2019)

Lee, J.D., Sun, Y., Saunders, M.A.: Proximal Newton-type methods for minimizing composite functions. SIAM J. Optim. 24, 1420–1443 (2014)

Li, X., Sun, D., Toh, K.C.: A highly efficient semismooth Newton augmented Lagrangian method for solving Lasso problems. SIAM J. Optim. 28, 433–458 (2018)

Liu, T., Pong, T.K.: Further properties of the forward-backward envelope with applications to difference-of-convex programming. Comput. Optim. Appl. 67, 489–520 (2017)

Liu, T., Pong, T.K., Takeda, A.: A refined convergence analysis of pDCA\(_e\) with applications to simultaneous sparse recovery and outlier detection. Comput. Optim. Appl. 73, 69–100 (2019)

Liu, T., Pong, T.K., Takeda, A.: A successive difference-of-convex approximation method for a class of nonconvex nonsmooth optimization problems. Math. Program. 176, 339–367 (2019)

Luo, Z.Q., Tseng, P.: Error bound and convergence analysis of matrix splitting algorithms for the affine variational inequality problem. SIAM J. Optim. 2, 43–54 (1992)

Lou, Y., Yan, M.: Fast L\(_1\)-L\(_2\) minimization via a proximal operator. J. Sci. Comput. 74, 767–785 (2018)

Ma, T.H., Lou, Y., Huang, T.Z.: Truncated \(\ell _{1-2}\) models for sparse recovery and rank minimization. SIAM J. Imaging Sci. 10, 1346–1380 (2017)

Nakayama, S., Narushima, Y., Yabe, H.: Inexact proximal memoryless quasi-Newton methods based on the Broyden family for minimizing composite functions. Comput. Optim. Appl. 79, 127–154 (2021)

O’Donoghue, B., Candès, E.J.: Adaptive restart for accelerated gradient schemes. Found. Comput. Math. 15, 715–732 (2015)

Peng, W., Zhang, H., Zhang, X., Cheng, L.: Global complexity analysis of inexact successive quadratic approximation methods for regularized optimization under mild assumptions. J. Glob. Optim. 78, 69–89 (2020)

Rockafellar, R.T., Wets, R.J.-B.: Variational Analysis. Springer, Berlin (1998)

Salzo, S.: The variable metric forward-backward splitting algorithm under mild differentiability assumptions. SIAM J. Optim. 27, 2153–2181 (2017)

Schmidt, M., Roux, N.L., Bach, F.: Convergence rates of inexact proximal-gradient methods for convex optimization. In: Advances in Neural Information Processing Systems, pp. 1458–1466 (2011)

Scheinberg, K., Tang, X.: Practical inexact proximal quasi-Newton method with global complexity analysis. Math. Program. 160, 495–529 (2016)

Stella, L., Themelis, A., Patrinos, P.: Forward-backward quasi-Newton methods for nonsmooth optimization problems. Comput. Optim. Appl. 67, 443–487 (2017)

Tao, P.D., An, L.T.H.: Convex analysis approach to DC programming: theory, algorithms and applications. Acta Mathematica Vietnamica 22, 289–355 (1997)

Tseng, P., Yun, S.: A coordinate gradient descent method for nonsmooth separable minimization. Math. Program. 117, 387–423 (2009)

Wen, B., Chen, X., Pong, T.K.: A proximal difference-of-convex algorithm with extrapolation. Comput. Optim. Appl. 69, 297–324 (2018)

Wang, Y., Luo, Z., Zhang, X.: New improved penalty methods for sparse reconstruction based on difference of two norms. Available at researchgate. https://doi.org/10.13140/RG.2.1.3256.3369.

Wright, S.J., Nowak, R.D., Figueiredo, M.A.T.: Sparse reconstruction by separable approximation. IEEE Trans. Signal Process. 57, 2479–2493 (2009)

Yang, L.: Proximal gradient method with extrapolation and line search for a class of nonconvex and nonsmooth problems. https://arxiv.org/abs/1711.06831

Yin, P., Lou, Y., He, Q., Xin, J.: Minimization of \(\ell _{1-2}\) for compressed sensing. SIAM J. Sci. Comput. 37, A536–A563 (2015)

Yue, M.C., Zhou, Z., So, A.M.C.: A family of inexact SQA methods for non-smooth convex minimization with provable convergence guarantees based on the Luo-Tseng error bound property. Math. Program. 174, 327–358 (2019)

Zhang, C.H.: Nearly unbiased variable selection under minimax concave penalty. Ann. Stat. 38, 894–942 (2010)

Acknowledgements

The authors would like to thank Lei Yang for his constructive suggestions during the writing of this paper. The research of the first author is supported in part by JSPS KAKENHI Grant No. 19H04069. The research of the second author is supported in part by JSPS KAKENHI Grant Nos. 17H01699 and 19H04069.

Appendices

A Proof of Lemma 3.2

Proof

First, for any \(\alpha \in (0,\,1]\), we see from the L-smoothness of f and the convexity of g with \(\varvec{\xi }^{k+1}\in \partial g({\varvec{x}}^k)\) that

On the other hand, the convexity of h and \(\alpha \in (0,\, 1]\) yield

To show the well-definedness of the three termination criteria \((\text {LS}_1)\), \((\text {LS}_2)\) and \((\text {LS}_3)\), we see from (A.1) and (A.2) that it suffices to show that \(\triangle _{k,1}\) can be bounded by a suitable multiple of \(\Vert \varvec{d}_k\Vert ^2\). To proceed, we first see from the convexity of h and \({\varvec{B}_{k}}\succ {\varvec{0}}\) that \(G_k\) is strongly convex with modulus \(\lambda _{\min }({\varvec{B}_{k}})\). On the other hand, we know from (3.3) and \(\varvec{d}_k = \varvec{u}^k - {\varvec{x}}^k\) that there exists some \(\varvec{w}_k \in \partial G_k(\varvec{u}^k)\) such that \(\Vert \varvec{w}_k\Vert \le \epsilon _k\,\Vert \varvec{d}_k\Vert \). This together with the strong convexity of \(G_k\) with modulus \(\lambda _{\min }({\varvec{B}_{k}})\) implies that

Moreover, we know from (A.3) and \(\varvec{d}_k = \varvec{u}^k - {\varvec{x}}^k\) that

which implies that

Now we are ready to prove the well-definedness of the three termination criteria. We first consider the termination criteria \((\text {LS}_1)\) and \((\text {LS}_2)\) when \({\lambda _{\min }({\varvec{B}_{k}}) - \epsilon _k > 0}\). For any \(\alpha \in (0,\, 1]\), we have

where the second inequality follows from (A.1) and (A.2), and the last inequality follows from (A.4). Notice that \(\sigma \in (0,\, 1)\) and \({\lambda _{\min }({\varvec{B}_{k}}) - \epsilon _k > 0}\). We see from (A.5) that its left-hand side is nonpositive when \(\alpha \in (0,\, 2(1 - \sigma )\left( {\lambda _{\min }({\varvec{B}_{k}}) - \epsilon _k}\right) /L]\). This proves the well-definedness of \((\text {LS}_1)\). Similarly, we consider termination criterion \((\text {LS}_2)\). By (A.1), (A.2) and (A.4), we have

This proves the well-definedness of \((\text {LS}_2)\). Finally, we see from (A.1), (A.2) and (A.4) that

This proves the well-definedness of \((\text {LS}_3)\) when \({\lambda _{\min }({\varvec{B}_{k}}) - \epsilon _k} > \sigma \). Furthermore, we have (3.4) by noticing (A.5), (A.6), (A.7) and the line-search rule \(\alpha _k\in \left\{ \beta ^i: i = 0, 1, \ldots \right\} \). This completes the proof. \(\square \)
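The thresholds on \(\alpha \) in this proof can be traced with a short calculation. The displays (A.1), (A.2) and (A.4) are not reproduced above, so the forms used here are assumptions: that (A.1) and (A.2) combine to \(F({\varvec{x}}^k + \alpha \varvec{d}_k) - F({\varvec{x}}^k) \le \alpha \triangle _{k,1} + \frac{L\alpha ^2}{2}\Vert \varvec{d}_k\Vert ^2\), that (A.4) reads \(\triangle _{k,1} \le -\left( \lambda _{\min }({\varvec{B}_{k}}) - \epsilon _k\right) \Vert \varvec{d}_k\Vert ^2\), and that \((\text {LS}_1)\) requires \(F({\varvec{x}}^k + \alpha \varvec{d}_k) \le F({\varvec{x}}^k) + \sigma \alpha \triangle _{k,1}\). Under these assumptions,

$$ F({\varvec{x}}^k + \alpha \varvec{d}_k) - F({\varvec{x}}^k) - \sigma \alpha \triangle _{k,1} \le (1-\sigma )\alpha \triangle _{k,1} + \frac{L\alpha ^2}{2}\Vert \varvec{d}_k\Vert ^2 \le \alpha \left( \frac{L\alpha }{2} - (1-\sigma )\left( \lambda _{\min }({\varvec{B}_{k}}) - \epsilon _k\right) \right) \Vert \varvec{d}_k\Vert ^2, $$

and the right-hand side is nonpositive whenever \(\alpha \le 2(1-\sigma )\left( \lambda _{\min }({\varvec{B}_{k}}) - \epsilon _k\right) /L\), which matches the interval stated in the proof. If \((\text {LS}_3)\) instead requires a decrease of \(\sigma \alpha \Vert \varvec{d}_k\Vert ^2\) (again an assumption), the same two bounds give

$$ F({\varvec{x}}^k + \alpha \varvec{d}_k) - F({\varvec{x}}^k) + \sigma \alpha \Vert \varvec{d}_k\Vert ^2 \le \alpha \left( \frac{L\alpha }{2} - \left( \lambda _{\min }({\varvec{B}_{k}}) - \epsilon _k - \sigma \right) \right) \Vert \varvec{d}_k\Vert ^2, $$

which is nonpositive for \(\alpha \le 2\left( \lambda _{\min }({\varvec{B}_{k}}) - \epsilon _k - \sigma \right) /L\) and explains the requirement \(\lambda _{\min }({\varvec{B}_{k}}) - \epsilon _k > \sigma \).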

B Proof of Theorem 3.3

Proof

For simplicity of notation, we define

We know from (3.4) that in case of \((\text {LS}_1)\) or \((\text {LS}_2)\) with \(\delta > 0\) we have \(\alpha _k \ge c_1 > 0\) with \(c_1: = \min \left\{ 1,\, 2\beta (1 - \sigma )\delta /L\right\} \), and in case of \((\text {LS}_3)\) with \(\delta > \sigma \), we have \(\alpha _k \ge c_2 > 0\) with \(c_2: = \min \left\{ 1,\, 2\beta (\delta - \sigma )/L\right\} \). Furthermore, for the three line-search criteria we have

where (a) follows from (A.4), \({\delta =\inf _k\left( \lambda _{\min }({\varvec{B}_{k}}) - \epsilon _k\right) }\) and \(\alpha _k \ge c_1\) in case of \((\text {LS}_1)\) with \(\delta > 0\), (b) follows from (A.2) and (c) follows from \(\alpha _k\ge c_2\) in case of \((\text {LS}_3)\) with \(\delta > \sigma \). Consequently, for each line search there exists some \(c > 0\) such that

Next, we prove the three statements based on (B.2). First, we know from (B.2) that

which together with the level-boundedness of F gives the boundedness of \(\{{\varvec{x}}^k\}\). This proves (i).

Next, we prove (ii). We see from (B.2) that the sequence \(\{F({\varvec{x}}^{t(k)})\}\) is non-increasing:

This together with the level-boundedness of F implies that there exists some \(\bar{F}\) such that

Next, we prove that the following relationships hold for all \(j\ge 1\) by induction:

We first prove that (B.4a) and (B.4b) hold for \(j = 1\). Replacing k in (B.2) by \(t(k) - 1\), we obtain

Rearranging (B.5) and letting \(k\rightarrow \infty \), using (B.3) and \(t(k)\rightarrow \infty \) while \(k\rightarrow \infty \) (when \(k\ge M\) we have \(t(k)\in [k - M,\, k]\)), we see that \(\lim _{k\rightarrow \infty }\varvec{d}_{t(k)-1} = {\varvec{0}}\). This proves that (B.4a) holds for \(j=1\). Furthermore, we see from (B.3) that

where the last equality follows from \(\alpha _k\le 1\), \(\lim _{k\rightarrow \infty }\varvec{d}_{t(k)-1} = {\varvec{0}}\) and the uniform continuity of F on the closure of the sequence \(\{{\varvec{x}}^k\}\) (This is because h is continuous on its domain, \({\varvec{x}}^k\in {\text{dom}}\,h\) and sequence \(\{{\varvec{x}}^k\}\) is bounded). This proves that (B.4b) holds for \(j = 1\).

Now we assume that (B.4a) and (B.4b) hold for some \(J\ge 1\), i.e., \(\lim _{k\rightarrow \infty }\varvec{d}_{t(k) - J} = {\varvec{0}}\) and \(\lim _{k\rightarrow \infty }F\big ({\varvec{x}}^{t(k)- J}\big ) = \bar{F}\). Replacing k in (B.2) by \(t(k) - J -1\), we obtain

Rearranging (B.6) and letting \(k\rightarrow \infty \), using assumption \(\lim _{k\rightarrow \infty }F\big ({\varvec{x}}^{t(k)- J}\big ) = \bar{F}\) and (B.3) with \(t(k) - J -1\rightarrow \infty \) while \(k\rightarrow \infty \) (when \(k\ge M\) we have \(t(k)\in [k - M,\, k]\)), we see that \(\lim _{k\rightarrow \infty }\varvec{d}_{t(k) - J-1} = {\varvec{0}}\). This proves that (B.4a) holds for \(j=J+1\). Similarly, we have

which proves that (B.4b) holds for \(J+1\). This completes the induction.

Now we are ready to prove (ii). Note from (B.1) that when \(k\ge M\), we have \(k-M\le t(k) \le k\). Thus, for any k, we have \(k - M - 1 = t(k) - j_k\) for some \(j_k\in [1,\, M+1]\). Therefore, it follows from (B.4a) that

which together with \({\varvec{x}}^{k+1} - {\varvec{x}}^k = \alpha _k\varvec{d}_k\) and \(\alpha _k\le 1\) proves (ii).
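The key structural fact behind this argument, that the reference values \(F({\varvec{x}}^{t(k)})\) never increase even though \(F({\varvec{x}}^k)\) itself may, can be checked numerically. The sketch below assumes that \(t(k)\) is an index attaining the maximum of \(F\) over the last \(M+1\) iterates (the definition in (B.1) is not reproduced above, so this form and the tie-breaking rule are assumptions), and that each new value satisfies \(F({\varvec{x}}^{k+1}) \le F({\varvec{x}}^{t(k)}) - \text{decrease}\). The function names are hypothetical.

```python
def reference_index(F_values, k, M):
    """Index t(k) in [max(k - M, 0), k] with the largest objective value.

    Ties are broken toward the largest index (an arbitrary choice here).
    """
    lo = max(k - M, 0)
    return max(range(lo, k + 1), key=lambda j: (F_values[j], j))

def simulate_nonmonotone(F0, decreases, M):
    """Build F_{k+1} = F_{t(k)} - decreases[k] and record the reference values."""
    F_values = [F0]
    refs = []
    for k, dec in enumerate(decreases):
        t = reference_index(F_values, k, M)
        refs.append(F_values[t])
        F_values.append(F_values[t] - dec)   # decrease relative to the reference
    return F_values, refs
```

For example, `simulate_nonmonotone(10.0, [0.5, 3.0, 0.1, 2.0, 0.2, 1.0], M=2)` produces objective values that go up between some iterations, while the recorded reference values stay non-increasing.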

Finally, we prove (iii). Since \(\{{\varvec{x}}^k\}\) is bounded, there exists a convergent subsequence, say \(\{{\varvec{x}}^{k_j}\}\), which satisfies \(\lim _{j\rightarrow \infty }{\varvec{x}}^{k_j} = {\varvec{x}}^*\). On the other hand, since the set \(\partial G_k(\varvec{u}^k)\) is closed, we see from (3.3) that there exists some \(\varvec{w}_k\in {\text{dom}}\, h\) satisfying \(\Vert \varvec{w}_k\Vert \le \epsilon _k\Vert \varvec{u}^k - {\varvec{x}}^k\Vert \) and

This combined with \(\varvec{d}_k = \varvec{u}^k - {\varvec{x}}^k\) further implies that

Due to \(\varvec{\xi }^{k+1}\in \partial g({\varvec{x}}^k)\), the boundedness of \(\{{\varvec{x}}^k\}\) and the convexity and continuity of g, we see that \(\{\varvec{\xi }^k\}\) is bounded. Thus, by passing to a further subsequence if necessary, without loss of generality, we assume that \(\varvec{\xi }^*:= \lim _{j\rightarrow \infty }\varvec{\xi }^{k_j+1}\) exists and thus \(\varvec{\xi }^*\in \partial g({\varvec{x}}^*)\) due to \(\varvec{\xi }^{k_j+1}\in \partial g({\varvec{x}}^{k_j})\) and the closedness of \(\partial g\). On the other hand, we see from the boundedness of \(\{{\varvec{B}_{k}}\}\) and the assumption \(\delta > 0\) that \(\{\epsilon _k\}\) is bounded, which further gives \(\Vert \varvec{w}_k\Vert \le \epsilon _k\Vert \varvec{u}^k - {\varvec{x}}^k\Vert = \epsilon _k\Vert \varvec{d}_k\Vert \rightarrow 0\). Now passing to the limit in (B.7) and using \(\Vert \varvec{w}_k\Vert \rightarrow 0\), \(\left\| \varvec{d}_k\right\| \rightarrow 0\), the boundedness of \(\{{\varvec{B}_{k}}\}\), the L-smoothness of f and the closedness of \(\partial h\), we see that

This proves (iii) and completes the proof. \(\square \)

C Proof of Lemma 3.6

Proof

Since \({\varvec{x}}_{\varvec{I}}^k\) is a global minimizer of the optimization problem in (3.6), we have

If \({\varvec{x}}_{\varvec{I}}^k = {\varvec{x}}^k\), we see from (C.1) that \({\varvec{0}} \in \nabla f({\varvec{x}}^k) - \varvec{\xi }^{k +1} + \partial h({\varvec{x}}^k)\), which together with \(\varvec{\xi }^{k+1}\in \partial g({\varvec{x}}^k)\) proves that \({\varvec{x}}^k\) is a stationary point of (1.1). On the other hand, if \({\varvec{x}}^k\) is a stationary point of (1.1) and \(\partial g({\varvec{x}}^k)\) is a singleton, these together with \(\varvec{\xi }^{k+1}\in \partial g({\varvec{x}}^k)\) give

Now, using the monotonicity of operator \(\partial h\) with (C.1) and (C.2), we further have

which implies that \({\varvec{x}}_{\varvec{I}}^k = {\varvec{x}}^k\). This completes the proof. \(\square \)
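The lemma suggests using \(\Vert {\varvec{x}}_{\varvec{I}}^k - {\varvec{x}}^k\Vert \) as a stationarity measure. A minimal sketch follows, assuming that problem (3.6) is the subproblem with the identity matrix in place of \({\varvec{B}_{k}}\) and that \(h = \lambda \Vert \cdot \Vert _1\), so that \({\varvec{x}}_{\varvec{I}}^k\) is given by soft-thresholding. Both assumptions and the function names are illustrative and not taken from the paper.

```python
import numpy as np

def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def stationarity_residual(x, grad_f, xi, lam):
    """Return ||x_I - x||, where
    x_I = argmin_u <grad_f - xi, u - x> + 0.5*||u - x||^2 + lam*||u||_1.
    """
    x_I = soft_threshold(x - (grad_f - xi), lam)
    return np.linalg.norm(x_I - x)
```

As a usage example, take \(f({\varvec{x}}) = \frac{1}{2}\Vert {\varvec{x}} - \varvec{c}\Vert ^2\) with \(\varvec{c} = (3, -0.5)\), \(g \equiv 0\) and \(\lambda = 1\). The minimizer is \((2, 0)\), where the residual is zero, while at the origin the residual equals 2.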

D Proof of Theorem 4.2

Proof

First, we consider (FISTA). Since \({\varvec{B}_{k}}\succ {\varvec{0}}\), we know from the convexity of h that \(G_k(\cdot )\) is strongly convex with modulus \(\lambda _{\min }({\varvec{B}_{k}})\). We then further have

where the last inequality follows from [8, Theorem 4.4]. Furthermore, inequality (D.1) together with the definition of \(c_1\) in (4.5) implies that

Furthermore, we have that for \(\ell \ge 2\),

where the first equality follows from the \({\varvec{y}}\)-update in (FISTA) and the last inequality follows from (D.2). Notice that \({\varvec{z}}^0 = {\varvec{x}}^k\ne {\varvec{z}}^*\). Using (D.2) and (D.3), we further have for \(\ell \ge \max \{2,\, c_1\}\) that

Then the termination criterion (4.2) is satisfied whenever the right-hand side of (D.4) is upper bounded by \(\frac{\epsilon _k}{2L_{\phi }}\), which by calculus further gives (4.6).

Now we consider (V-FISTA). Similarly, the strong convexity of \(G_k(\cdot )\) implies that

where the second inequality follows from [2, Theorem 10.42] and the last equality follows from the definition of \(\tau \) and \(c_2\) in (4.5). We then see from (D.5) that

This together with the \({\varvec{y}}\)-update in (V-FISTA) implies that for \(\ell \ge 2\),

Since \({\varvec{z}}^0 \ne {\varvec{z}}^*\), we see from (D.6) that for \(\ell \ge 1+ {\log}_{\tau }\frac{c_2}{\Vert {\varvec{z}}^0 - {\varvec{z}}^*\Vert }\),

Using this and (D.7), we have for any \(\ell \ge \max \{2,\, 1+ {\log}_{\tau }\frac{c_2}{\Vert {\varvec{z}}^0 - {\varvec{z}}^*\Vert }\}\) that

Then the termination criterion (4.2) is satisfied whenever the right-hand side of (D.8) is upper bounded by \(\frac{\epsilon _k}{2L_{\phi }}\), which by calculus further gives (4.7). This completes the proof. \(\square \)
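The following sketch shows how a V-FISTA inner loop with a ratio-type stopping test can deliver an approximate solution satisfying a relative inexactness condition. Several ingredients are assumptions rather than the paper's definitions: the subproblem is taken as \(G(\varvec{z}) = \langle \varvec{c}, \varvec{z} - {\varvec{x}}\rangle + \frac{1}{2}(\varvec{z} - {\varvec{x}})^\top \varvec{B}(\varvec{z} - {\varvec{x}}) + \lambda \Vert \varvec{z}\Vert _1\), the stopping test is \(2L_{\phi }\Vert \varvec{y}^{\ell } - \varvec{z}^{\ell +1}\Vert \le \epsilon \Vert \varvec{z}^{\ell +1} - {\varvec{x}}\Vert \), and the inexactness condition is \(\Vert \varvec{w}\Vert \le \epsilon \Vert \varvec{u} - {\varvec{x}}\Vert \) for some \(\varvec{w}\in \partial G(\varvec{u})\). The function names are hypothetical.

```python
import numpy as np

def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def vfista_subproblem(x, c, B, lam, eps, max_iter=10_000):
    """Approximately minimize <c, z-x> + 0.5 (z-x)^T B (z-x) + lam*||z||_1.

    Returns (u, w, iters), where w is an element of the subdifferential of
    the subproblem objective at u.
    """
    eigs = np.linalg.eigvalsh(B)
    mu, L = eigs[0], eigs[-1]                  # strong convexity / smoothness
    kappa = L / mu
    momentum = (np.sqrt(kappa) - 1.0) / (np.sqrt(kappa) + 1.0)

    def grad(z):
        return c + B @ (z - x)                 # gradient of the smooth part

    z = x.astype(float).copy()
    y = z.copy()
    for it in range(1, max_iter + 1):
        g_y = grad(y)
        z_new = soft_threshold(y - g_y / L, lam / L)
        # Prox optimality gives L*(y - z_new) - grad(y) in lam * d||z_new||_1,
        # so w below is a subgradient of the full objective at z_new.
        w = L * (y - z_new) + grad(z_new) - g_y
        # Since ||w|| <= 2L*||y - z_new||, this test implies ||w|| <= eps*||z_new - x||.
        if 2.0 * L * np.linalg.norm(y - z_new) <= eps * np.linalg.norm(z_new - x):
            return z_new, w, it
        y = z_new + momentum * (z_new - z)     # constant-momentum extrapolation
        z = z_new
    return z, w, max_iter
```

The design choice here is that the stopping test only uses quantities already available in the iteration, so checking it costs no extra gradient evaluations beyond the one needed to form `w`.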

Cite this article

Liu, T., Takeda, A. An inexact successive quadratic approximation method for a class of difference-of-convex optimization problems. Comput Optim Appl 82, 141–173 (2022). https://doi.org/10.1007/s10589-022-00357-z

DOI: https://doi.org/10.1007/s10589-022-00357-z