Abstract

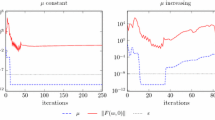

In this paper we study a class of nonconvex optimization problems with uncertainty in the objective and a large number of nonconvex functional constraints. Solving such problems is challenging because the feasible set is nonconvex and because evaluating the function values and gradients of all constraints simultaneously is expensive. To address these issues, we propose a stochastic primal-dual method. At each iteration, the primal variable is updated by solving a proximal subproblem based on a stochastic approximation of an augmented Lagrangian function, and the new primal iterate is then used to update the dual variables. We analyze the theoretical properties of the proposed algorithm and establish its iteration and sample complexities for finding an \(\epsilon\)-stationary point of the original problem. Numerical tests on a weighted maximin dispersion problem and a nonconvex quadratically constrained optimization problem demonstrate the promising performance of the proposed algorithm.
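
The update order described in the abstract (a primal proximal step on a stochastic approximation of the augmented Lagrangian, followed by a dual step at the new primal point) can be illustrated with a short Python sketch. This is a minimal illustration under stated assumptions, not the authors' algorithm: it assumes the augmented Lagrangian \(f_0(x)+\sum_{i=1}^{M}\psi_\beta(f_i(x),y_i)\) built from the function \(\psi_\beta\) of Appendix A.1, a box-shaped \(X\) (so the proximal step is a projection), one constraint sampled uniformly per iteration, and fixed step sizes `eta` and `rho`. All function names and the toy instance are hypothetical.

```python
import numpy as np


def stochastic_primal_dual(sample_grad_f0, cons_val, cons_grad, M, x0, lo, hi,
                           beta=1.0, eta=0.05, rho=0.05, iters=3000, seed=0):
    """Hypothetical single-sample primal-dual loop on
    L(x, y) = f0(x) + sum_i psi_beta(f_i(x), y_i), with X = [lo, hi]^n (assumed)."""
    assert 0 < rho <= beta  # guarantees every multiplier update stays nonnegative
    rng = np.random.default_rng(seed)
    x = np.asarray(x0, dtype=float).copy()
    y = np.zeros(M)
    for _ in range(iters):
        i = int(rng.integers(M))                     # one constraint sampled uniformly
        u = cons_val(i, x)
        w = max(0.0, y[i] + beta * u)                # d psi_beta / du at (f_i(x), y_i)
        # unbiased estimate of grad_x L: the sampled constraint term is scaled by M
        g = sample_grad_f0(x, rng) + M * w * cons_grad(i, x)
        x = np.clip(x - eta * g, lo, hi)             # prox step for the indicator of the box X
        u = cons_val(i, x)                           # re-evaluate at the new primal iterate
        dv = u if beta * u + y[i] >= 0 else -y[i] / beta   # d psi_beta / dv
        y[i] = y[i] + rho * dv                       # dual ascent on the sampled multiplier
    return x, y


# Toy instance (hypothetical): minimize E[0.5*||x - xi||^2], xi ~ N((2, 0), 0.1^2 I),
# subject to ||x||^2 - 1 <= 0. Its KKT point is x* = (1, 0) with multiplier y* = 0.5.
c = np.array([2.0, 0.0])


def sample_grad_f0(x, rng):
    xi = c + 0.1 * rng.standard_normal(2)            # one sample of the random data
    return x - xi


def cons_val(i, x):
    return float(x @ x - 1.0)


def cons_grad(i, x):
    return 2.0 * x


x, y = stochastic_primal_dual(sample_grad_f0, cons_val, cons_grad, M=1,
                              x0=np.zeros(2), lo=-5.0, hi=5.0)
print(x, y)
```

On this toy instance the iterates should settle near \((1,0)\) with a multiplier near \(0.5\), up to sampling noise. Only the sampled constraint and its multiplier are touched per iteration, which keeps the per-iteration cost independent of \(M\); the step-size rules and sampling scheme of the actual method may differ.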

Data availability

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

Notes

For any feasible point \(x\in X\) with \(f(x)\le {\mathbf {0}}\), we say that the basic constraint qualification (BCQ) holds at \(x\) if the only vector \(y:=\left( y_{1},\ldots ,y_{M} \right) ^{{\prime }}\) satisfying \(-J_f(x)^{{\prime }} y \in \mathcal {N}_{X}\left( x\right)\), \(y\ge {\mathbf {0}}\), and \(y_{i}= 0\) whenever \(f_{i}\left( x\right) <0\) \((i=1,\dots ,M)\) is \(y={\mathbf {0}}\).
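
A small illustration of the condition (our own example, not taken from the paper): when \(X=\mathbb{R}^n\), the normal cone is \(\mathcal{N}_X(x)=\{\mathbf{0}\}\), so BCQ asks that no nonzero \(y\ge\mathbf{0}\) supported on the active constraints satisfies \(\sum_i y_i\nabla f_i(x)=\mathbf{0}\). Two opposite linear constraints violate it:

```latex
X=\mathbb{R}^{2},\quad f_{1}(x)=x_{1},\quad f_{2}(x)=-x_{1},\quad x=\mathbf{0}:\qquad
J_{f}(\mathbf{0})=\begin{pmatrix}1&0\\-1&0\end{pmatrix},\qquad
-J_{f}(\mathbf{0})^{\prime}\begin{pmatrix}1\\1\end{pmatrix}
=\begin{pmatrix}0\\0\end{pmatrix}\in\mathcal{N}_{X}(\mathbf{0})=\{\mathbf{0}\}.
```

Both constraints are active at \(x=\mathbf{0}\) and \(y=(1,1)^{\prime}\ge\mathbf{0}\) is nonzero, so BCQ fails there. Keeping only \(f_1\), the condition reads \(-(y_1,0)^{\prime}=\mathbf{0}\), which forces \(y_1=0\), so BCQ holds.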

Funding

This research was partially supported by the National Natural Science Foundation of China (11871453, 11731013) and the Major Key Project of PCL (PCL2022A05).

Appendix

A.1 Lipschitz continuity of \(\psi _{\beta }\left( u,v\right)\) in \(v \in \mathbb {R}_{+}\)

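For any \(u \in \mathbb {R}\),
\[ \left| \psi _{\beta }\left( u,v_{1}\right) -\psi _{\beta }\left( u,v_{2}\right) \right| \le \left| u\right| \left| v_{1}-v_{2}\right| ,\quad \forall \, v_{1},v_{2}\in \mathbb {R}_{+}. \]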

Proof

Here \(\psi _{\beta }\left( u, v\right) =\left\{ \begin{array}{ll} u v+\frac{\beta }{2} u^{2}, &{} \text{ if } \beta u+v \ge 0 \\ -\frac{v^{2}}{2 \beta }, &{} \text{ if } \beta u+v<0\end{array}\right.\) with \(\beta >0\). If \(u \ge 0\), then \(\beta u+v\ge 0\) for every \(v\ge 0\), so \(\psi _{\beta }\left( u,\cdot \right)\) is affine with slope \(u\) on \(\mathbb {R}_{+}\) and the result holds trivially. It therefore suffices to consider \(u < 0\).

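If \(\beta u+v_{1} \ge 0\) and \(\beta u+v_{2} \ge 0\), then \(\left| \psi _{\beta }\left( u,v_{1}\right) -\psi _{\beta }\left( u,v_{2}\right) \right| =\left| u\right| \left| v_{1}-v_{2}\right|\) and the result holds. If \(\beta u+v_{1} \ge 0\) and \(\beta u+v_{2} < 0\) (the case with \(v_{1}\) and \(v_{2}\) interchanged is symmetric), then \(v_{1}>v_{2}\). Since \(\psi _{\beta }\left( u, v\right)\) is monotonically decreasing in \(v\ge 0\) when \(u < 0\), we have
\[ \left| \psi _{\beta }\left( u,v_{1}\right) -\psi _{\beta }\left( u,v_{2}\right) \right| =\psi _{\beta }\left( u,v_{2}\right) -\psi _{\beta }\left( u,v_{1}\right) =-\frac{v_{2}^{2}}{2\beta }-uv_{1}-\frac{\beta }{2}u^{2} =-u\left( v_{1}-v_{2}\right) -\frac{\left( v_{2}+\beta u\right) ^{2}}{2\beta } \le \left| u\right| \left| v_{1}-v_{2}\right| . \]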

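If \(\beta u+v_{1}< 0\) and \(\beta u+v_{2} < 0\), then \(0\le v_{1},v_{2}<-\beta u=\beta \left| u\right|\), and hence
\[ \left| \psi _{\beta }\left( u,v_{1}\right) -\psi _{\beta }\left( u,v_{2}\right) \right| =\frac{\left| v_{1}^{2}-v_{2}^{2}\right| }{2\beta }=\frac{v_{1}+v_{2}}{2\beta }\left| v_{1}-v_{2}\right| \le \left| u\right| \left| v_{1}-v_{2}\right| . \]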

\(\square\)
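
The bound can also be sanity-checked numerically. The Python sketch below implements \(\psi_\beta\) exactly as defined piecewise above and reports the largest sampled ratio \(|\psi_\beta(u,v_1)-\psi_\beta(u,v_2)|/(|u|\,|v_1-v_2|)\), assuming the Lipschitz constant in the claim is \(|u|\). The sampling ranges, \(\beta=2\), the seed, and the helper names `psi` and `max_lipschitz_ratio` are arbitrary, hypothetical choices.

```python
import numpy as np


def psi(u, v, beta):
    """psi_beta(u, v) from Appendix A.1 (beta > 0, v >= 0)."""
    if beta * u + v >= 0:
        return u * v + 0.5 * beta * u * u
    return -v * v / (2.0 * beta)


def max_lipschitz_ratio(beta=2.0, trials=20000, seed=0):
    """Largest sampled value of |psi(u,v1) - psi(u,v2)| / (|u| |v1 - v2|)."""
    rng = np.random.default_rng(seed)
    worst = 0.0
    for _ in range(trials):
        u = rng.uniform(-3.0, 3.0)
        v1, v2 = rng.uniform(0.0, 10.0, size=2)
        denom = abs(u) * abs(v1 - v2)
        if denom > 1e-6:                      # skip near-degenerate pairs dominated by rounding
            gap = abs(psi(u, v1, beta) - psi(u, v2, beta))
            worst = max(worst, gap / denom)
    return worst


print(f"largest sampled ratio: {max_lipschitz_ratio():.6f}")
```

For \(u>0\) the ratio equals 1 exactly, so a reported maximum close to 1, and not above it beyond rounding, is what the proof predicts. The two branches also agree at the breakpoint \(v=-\beta u\), where both give \(-\tfrac{\beta}{2}u^{2}\).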

A.2 Nonexpansiveness of the prox operator

For \(x^{k+1}\) defined in (15) and \({\hat{x}}^{k+1}\) defined in (30), it holds that

Proof

From the optimality condition for (15), there exists a subgradient \(u \in \partial \chi _{0}\left( x^{k+1}\right)\) such that

By the convexity of \(\chi _{0}\), we obtain

Similarly, the optimality condition for \({\hat{x}}^{k+1}\) yields, for any \(x\in X\),

Letting \(x={\hat{x}}^{k+1}\) in (54) and \(x=x^{k+1}\) in (55) and adding them up, we obtain

where the last inequality follows from the 1-strong convexity of \(v\left( x\right)\). Applying the Cauchy-Schwarz inequality then yields the result. \(\square\)
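
To see the mechanism in the simplest setting, the sketch below works in a special case that is an assumption, not the paper's setting (the subproblems (15) and (30) are not reproduced here): Euclidean \(v(x)=\tfrac12\|x\|^2\), \(X=\mathbb{R}^n\), \(\chi_0=\lambda\|\cdot\|_1\), and a step size \(\eta\). Both updates are then soft-thresholding steps from the same point \(x\) that differ only in the gradient estimate, \(g\) versus \(\hat g\), and the same three steps (optimality conditions, adding them, Cauchy-Schwarz) give \(\|x^{k+1}-{\hat{x}}^{k+1}\|\le \eta \|g-\hat g\|\). The hypothetical helpers `prox_step` and `worst_ratio` check that the sampled ratio never exceeds 1.

```python
import numpy as np


def prox_step(x, g, eta, lam):
    """argmin_z lam*||z||_1 + <g, z> + ||z - x||^2 / (2*eta), i.e. soft-thresholding of x - eta*g."""
    a = x - eta * g
    return np.sign(a) * np.maximum(np.abs(a) - eta * lam, 0.0)


def worst_ratio(n=5, eta=0.3, lam=0.7, trials=5000, seed=1):
    """Max over random draws of ||prox(x, g) - prox(x, g_hat)|| / (eta * ||g - g_hat||)."""
    rng = np.random.default_rng(seed)
    worst = 0.0
    for _ in range(trials):
        x, g, g_hat = rng.standard_normal((3, n))   # same base point, two gradient estimates
        diff = np.linalg.norm(prox_step(x, g, eta, lam) - prox_step(x, g_hat, eta, lam))
        worst = max(worst, diff / (eta * np.linalg.norm(g - g_hat)))
    return worst


print(f"largest sampled ratio: {worst_ratio():.6f}")
```

Soft-thresholding is used only because its proximal map has a closed form; any convex \(\chi_0\) with a computable prox would serve the same illustrative purpose.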

About this article

Cite this article

Jin, L., Wang, X. A stochastic primal-dual method for a class of nonconvex constrained optimization. Comput Optim Appl 83, 143–180 (2022). https://doi.org/10.1007/s10589-022-00384-w

DOI: https://doi.org/10.1007/s10589-022-00384-w

Keywords

- Nonconvex optimization

- Augmented Lagrangian function

- Stochastic gradient

- \(\epsilon\)-stationary point

- Complexity