Abstract

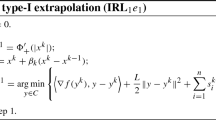

The iteratively reweighted \(\ell _1\) algorithm is a widely used method for solving various regularization problems, which generally minimize a differentiable loss function combined with a convex or nonconvex regularizer to induce sparsity in the solution. However, the convergence and complexity of iteratively reweighted \(\ell _1\) algorithms are generally difficult to analyze, especially for non-Lipschitz differentiable regularizers such as \(\ell _p\) norm regularization with \(0<p<1\). In this paper, we propose, analyze and test a reweighted \(\ell _1\) algorithm combined with the extrapolation technique under the assumption of the Kurdyka-Łojasiewicz (KL) property on the proximal function of the perturbed objective. Our method requires neither Lipschitz differentiability of the regularizers nor that the smoothing parameters in the weights be bounded away from 0. We show that the proposed algorithm converges uniquely to a stationary point of the regularization problem, with local linear convergence for KL exponent at most 1/2 and local sublinear convergence for KL exponent greater than 1/2. We also provide results on calculating the KL exponents and discuss the cases when the KL exponent is at most 1/2. Numerical experiments show the efficiency of our proposed method.
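To make the kind of iteration described in the abstract concrete, the following is a minimal sketch of a proximal iteratively reweighted \(\ell _1\) step with extrapolation, applied to a least-squares loss with the smoothed \(\ell _p\) regularizer \(\lambda \sum _i (|x_i|+\epsilon )^p\). It is an illustration under stated assumptions, not the authors' algorithm: the function names, the constant extrapolation weight `alpha`, the geometric decay `mu` of the smoothing parameter, and the step size `1/beta` are all hypothetical choices made for this example.

```python
import math

def soft_threshold(v, t):
    """Proximal map of t*|.|: shrink the scalar v toward zero by t."""
    return math.copysign(max(abs(v) - t, 0.0), v)

def grad_least_squares(A, b, x):
    """Gradient of f(x) = 0.5*||A x - b||^2, with A given as a list of rows."""
    r = [sum(a * xi for a, xi in zip(row, x)) - bi for row, bi in zip(A, b)]
    return [sum(A[i][j] * r[i] for i in range(len(A))) for j in range(len(x))]

def reweighted_l1_extrapolated(A, b, lam=0.1, p=0.5, alpha=0.5,
                               eps0=1.0, mu=0.9, iters=500):
    """Sketch: min 0.5*||Ax-b||^2 + lam*sum((|x_i|+eps)^p), with eps driven to 0.

    alpha (extrapolation weight), mu (decay of eps) and beta are assumed,
    illustrative parameters; they are not taken from the paper.
    """
    n = len(A[0])
    # The squared Frobenius norm upper-bounds the Lipschitz constant of grad f.
    beta = sum(a * a for row in A for a in row)
    x_prev, x, eps = [0.0] * n, [0.0] * n, eps0
    for _ in range(iters):
        # Weights: derivative of (t + eps)^p at t = |x_i| (the reweighting step).
        w = [p * (abs(xi) + eps) ** (p - 1) for xi in x]
        # Extrapolated point built from the last two iterates.
        y = [xi + alpha * (xi - xp) for xi, xp in zip(x, x_prev)]
        g = grad_least_squares(A, b, y)
        # Weighted l1 proximal step taken from the extrapolated point.
        x_new = [soft_threshold(yi - gi / beta, lam * wi / beta)
                 for yi, gi, wi in zip(y, g, w)]
        x_prev, x = x, x_new
        # Shrink the smoothing parameter; the floor only avoids 0**negative.
        eps = max(mu * eps, 1e-300)
    return x
```

Calling `reweighted_l1_extrapolated(A, b)` with `A` as a list of rows and `b` as a list returns the final iterate as a list. Because the weights grow as the smoothing parameter shrinks, small entries tend to be thresholded to exactly zero, which is the sparsity-inducing behavior the abstract refers to.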

Data Availability

The data that support the findings of this study are available from the corresponding author upon request.

Acknowledgements

Hao Wang was supported by the Natural Science Foundation of Shanghai under Grant 21ZR1442800 and the National Natural Science Foundation of China under Grant 12001367. The authors would like to thank Professor Ting Kei Pong and Professor Kaiwen Meng for their advice on calculating the KL exponent.

Ethics declarations

Conflict of interest

All authors disclosed no relevant relationships.

Appendix

The translation of the proof of Theorem 5.

Proof

It follows from \(\nabla h(x^*)=0\) and the mean-value theorem that

with \(t_x \in (0,1)\). Since h is twice continuously differentiable on \(\mathbb {B}(x^*, \varepsilon _0)\), there exists \(L>0\) such that

for any \(x\in \mathbb {B}(x^*,\varepsilon _0)\). On the other hand,

where \(t_{ix}\in (0,1), i=1,2, \ldots , n\).

Since \(\nabla ^2 h(x^*)\) is nonsingular, there exists \(0<\varepsilon _1 < \varepsilon _0\) such that for any \(x\in \mathbb {B}(x^*, \varepsilon _1)\), \(A_x\) is nonsingular. Therefore, \(A_x^TA_x\) is positive definite on \(\mathbb {B}(x^*, \varepsilon _1)\). Hence, there exist \(0<\varepsilon < \varepsilon _1\) and \(\sigma > 0\) satisfying \(\sigma = \min _{x\in \mathbb {B}(x^*, \varepsilon )} \sigma _{\min }(A_x^TA_x)\). It then follows that

This implies that there exist \(\theta = \tfrac{1}{2} \in (0,1]\), \(\varepsilon > 0\) and \(c= \big ( \tfrac{L}{\sigma } \big )^{1/2}\) such that, for any \(x\in \mathbb {B}(x^*, \varepsilon )\),

or, equivalently, h satisfies the KL inequality with \(\theta = \tfrac{1}{2}\). \(\square \)
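The displayed formulas of the proof are not present in this copy. The chain below is a reconstruction inferred from the surrounding sentences and from the stated constant \(c = (L/\sigma )^{1/2}\); it is a sketch of what the displays plausibly say, and the authors' exact expressions (in particular the normalization of \(L\) and the way \(A_x\) is written) may differ.

```latex
% Second-order expansion at x^*, using \nabla h(x^*) = 0, with t_x \in (0,1):
\[
  h(x) - h(x^*) = \tfrac{1}{2}\,(x - x^*)^T
  \nabla^2 h\big(x^* + t_x (x - x^*)\big)\,(x - x^*).
\]
% Boundedness of the Hessian on \mathbb{B}(x^*, \varepsilon_0) gives L > 0 with
\[
  |h(x) - h(x^*)| \le L\,\|x - x^*\|^2 .
\]
% Mean-value theorem applied to each partial derivative, t_{ix} \in (0,1):
\[
  \nabla h(x) = \nabla h(x) - \nabla h(x^*) = A_x (x - x^*),
  \qquad
  (A_x)_{i\cdot} = \Big[\nabla^2 h\big(x^* + t_{ix}(x - x^*)\big)\Big]_{i\cdot},
  \quad i = 1, \ldots, n .
\]
% Lower bound on the gradient on \mathbb{B}(x^*, \varepsilon):
\[
  \|\nabla h(x)\|^2 = (x - x^*)^T A_x^T A_x (x - x^*)
  \ge \sigma\,\|x - x^*\|^2
  \ge \frac{\sigma}{L}\,|h(x) - h(x^*)| .
\]
% KL inequality with \theta = 1/2 and c = (L/\sigma)^{1/2}:
\[
  |h(x) - h(x^*)|^{\theta} \le c\,\|\nabla h(x)\| .
\]
```

Read in order, the five displays correspond to the five places in the proof where a sentence ends with "that", "such that", "On the other hand,", "It then follows that", and "for any \(x\in \mathbb {B}(x^*, \varepsilon )\),". Here \((A_x)_{i\cdot }\) denotes the \(i\)-th row of \(A_x\), a notation assumed for this sketch.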

About this article

Cite this article

Wang, H., Zeng, H. & Wang, J. An extrapolated iteratively reweighted \(\ell _1\) method with complexity analysis. Comput Optim Appl 83, 967–997 (2022). https://doi.org/10.1007/s10589-022-00416-5

DOI: https://doi.org/10.1007/s10589-022-00416-5