Abstract

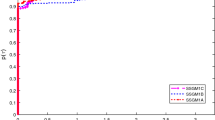

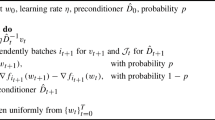

Recently, Rodomanov and Nesterov proposed a class of greedy quasi-Newton methods and established the first explicit local superlinear convergence result for quasi-Newton-type methods. In this paper, we study a variant of the Powell-Symmetric-Broyden (PSB) update based on the greedy strategy. First, we give explicit condition-number-free superlinear convergence rates for the proposed greedy PSB methods. Second, we prove global convergence of the greedy PSB methods within a trust-region framework; one advantage of this result is that the initial Hessian approximation can be chosen arbitrarily. Third, we analyze the behaviour of the randomized PSB method, which selects the direction randomly from any spherically symmetric distribution. Finally, preliminary numerical experiments illustrate the efficiency of the proposed PSB methods compared with the standard SR1 and PSB methods. Our results assume that the objective function is strongly convex and that its gradient and Hessian are Lipschitz continuous.
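
The abstract does not state the update formula, so the following is only a minimal sketch under stated assumptions. It uses the textbook PSB formula, a quadratic objective whose Hessian `A` is known, and a coordinate-direction greedy rule that picks the basis vector `e_i` with the largest `||(B - A) e_i||`. That rule is an assumption made for illustration and may differ from the one analyzed in the paper. All function names are hypothetical.

```python
import numpy as np

def psb_update(B, u, y):
    """Textbook PSB update: returns a symmetric B_new with B_new @ u == y."""
    r = y - B @ u                      # residual of the secant condition
    uu = u @ u
    return (B
            + (np.outer(r, u) + np.outer(u, r)) / uu
            - (r @ u) * np.outer(u, u) / uu**2)

def greedy_direction(B, A):
    """Assumed greedy rule: coordinate vector e_i maximizing ||(B - A) e_i||."""
    R = B - A
    i = int(np.argmax(np.sum(R * R, axis=0)))   # column with largest norm
    u = np.zeros(A.shape[0])
    u[i] = 1.0
    return u

def greedy_psb_errors(A, B0, steps):
    """Run `steps` greedy PSB updates; return final B and Frobenius errors."""
    B = B0.copy()
    errs = [np.linalg.norm(B - A, "fro")]
    for _ in range(steps):
        u = greedy_direction(B, A)
        B = psb_update(B, u, A @ u)    # for a quadratic, y = A u
        errs.append(np.linalg.norm(B - A, "fro"))
    return B, errs

# Hypothetical test problem: a random symmetric positive definite Hessian.
rng = np.random.default_rng(0)
M = rng.standard_normal((5, 5))
A = M @ M.T + 5.0 * np.eye(5)
B, errs = greedy_psb_errors(A, np.eye(5), steps=5)
print(errs)
```

In this sketch an update along `e_i` overwrites row and column `i` of `B` with those of `A`, so the Frobenius error never increases and `B` matches `A` after at most `n` updates. The starting matrix `np.eye(5)` is arbitrary.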

Data availability

The authors confirm that all data generated or analyzed during this study are included in this article.

References

Broyden, C.G.: The convergence of a class of double-rank minimization algorithms: 1. General considerations. IMA J. Appl. Math. 6, 76–90 (1970)

Broyden, C.G., Dennis, J.E., Moré, J.J.: On the local and superlinear convergence of quasi-Newton methods. IMA J. Appl. Math. 12(3), 223–245 (1973)

Byrd, R.H., Nocedal, J., Yuan, Y.Y.: Global convergence of a class of quasi-Newton methods on convex problems. SIAM J. Numer. Anal. 24, 1171–1190 (1987)

Byrd, R.H., Liu, D.C., Nocedal, J.: On the behavior of Broyden’s class of quasi-Newton methods. SIAM J. Optim. 2(4), 533–557 (1992)

Byrd, R.H., Khalfan, H.F., Schnabel, R.B.: Analysis of a symmetric rank-one trust region method. SIAM J. Optim. 6, 1025–1039 (1996)

Conn, A.R., Gould, N.I.M., Toint, P.L.: Convergence of quasi-Newton matrices generated by the symmetric rank one update. Math. Program. 50(1–3), 177–195 (1991)

Dai, Y.H.: Convergence properties of the BFGS algorithm. SIAM J. Optim. 13, 693–701 (2003)

Dai, Y.H.: A perfect example for the BFGS method. Math. Program. 138, 501–530 (2013)

Davidon, W.C.: Variable metric method for minimization. SIAM J. Optim. 1(1), 1–17 (1991)

Dennis, J.E., Moré, J.J.: A characterization of superlinear convergence and its application to quasi-Newton methods. Math. Comp. 28(126), 549–560 (1974)

Dixon, L.C.W.: Quasi-Newton algorithms generate identical points. Math. Program. 2(1), 383–387 (1972)

Goldfarb, D.: A family of variable-metric methods derived by variational means. Math. Comp. 24(109), 23–26 (1970)

Engels, J., Martínez, H.: Local and superlinear convergence for partially known quasi-Newton methods. SIAM J. Optim. 1(1), 42–56 (1991)

Fletcher, R., Powell, M.J.D.: A rapidly convergent descent method for minimization. Comput. J. 6(2), 163–168 (1963)

Fletcher, R.: A new approach to variable metric algorithms. Comput. J. 13(3), 317–322 (1970)

Gao, W., Goldfarb, D.: Quasi-Newton methods: superlinear convergence without line searches for self-concordant functions. Optim. Methods Softw. 34(1), 194–217 (2019)

Jin, Q., Mokhtari, A.: Non-asymptotic superlinear convergence of standard Quasi-Newton methods. Proc. IEEE 108(11), 1906–1922 (2020)

Li, D.H., Fukushima, M.: On the global convergence of the BFGS method for nonconvex unconstrained optimization problems. SIAM J. Optim. 11, 1054–1064 (2001)

Lin, D.C., Ye, H.S., Zhang, Z.H.: Faster Explicit Superlinear Convergence for Greedy and Random Quasi-Newton Methods (2021). arXiv:2003.13607

Mascarenhas, W.F.: The BFGS method with exact line searches fails for non-convex objective functions. Math. Program. 99, 49–61 (2004)

Mokhtari, A., Eisen, M., Ribeiro, A.: IQN: an incremental quasi-Newton method with local superlinear convergence rate. SIAM J. Optim. 28(2), 1670–1698 (2018)

Moré, J.J., Trangenstein, J.A.: On the global convergence of Broyden’s method. Math. Comp. 30, 523–540 (1976)

Nocedal, J., Wright, S.J., Mikosch, T.V., et al.: Numerical Optimization. Springer, New York (1999)

Powell, M.J.D.: A new algorithm for unconstrained optimization. In: Rosen, J.B., Mangasarian, O.L., Ritter, K. (eds.) Nonlinear Programming, pp. 31–66. Academic Press, New York (1970)

Powell, M.J.D.: On the convergence of the variable metric algorithm. IMA J. Appl. Math. 7(1), 21–36 (1971)

Powell, M.J.D.: Convergence properties of a class of minimization algorithms. In: Mangasarian, O.L., Meyer, R.R., Robinson, S.M. (eds.) Nonlinear Programming 2, pp. 1–27. Academic Press, New York (1975)

Powell, M.J.D.: On the convergence of a wide range of trust region methods for unconstrained optimization. IMA J. Numer. Anal. 30(1), 289–301 (2010)

Rodomanov, A., Nesterov, Y.: Greedy quasi-Newton methods with explicit superlinear convergence. SIAM J. Optim. 31(1), 785–811 (2021)

Rodomanov, A., Nesterov, Y.: Rates of superlinear convergence for classical quasi-Newton methods. Math. Program. (2021). https://doi.org/10.1007/s10107-021-01622-5

Rodomanov, A., Nesterov, Y.: New results on superlinear convergence of classical Quasi-Newton methods. J. Optim. Theory Appl. 188, 744–769 (2021)

Shanno, D.F.: Conditioning of quasi-Newton methods for function minimization. Math. Comp. 24, 647–656 (1970)

Sun, W.Y., Yuan, Y.X.: Optimization Theory and Methods: Nonlinear Programming. Springer, New York (2006)

Yabe, H., Yamaki, N.: Local and superlinear convergence of structured Quasi-Newton methods for nonlinear optimization. J. Oper. Res. Soc. Japan. 39(4), 541–557 (1996)

Acknowledgements

The authors would like to thank anonymous referees for their comments, which enabled us to improve the quality of the paper.

Funding

This research is supported by the National Key R&D Program of China (nos. 2021YFA1000300 and 2021YFA1000301), the Chinese NSF grants (nos. 12021001, 11991021, 11991020, 11971372 and 11631013) and the Strategic Priority Research Program of Chinese Academy of Sciences (no. XDA27000000).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Ji, ZY., Dai, YH. Greedy PSB methods with explicit superlinear convergence. Comput Optim Appl 85, 753–786 (2023). https://doi.org/10.1007/s10589-023-00495-y

DOI: https://doi.org/10.1007/s10589-023-00495-y