Abstract

We present a branch-and-bound algorithm for globally solving parabolic optimal control problems with binary switches that have bounded variation and possibly need to satisfy further combinatorial constraints. More precisely, for a given tolerance \(\varepsilon >0\), we show how to compute in finite time an \(\varepsilon \)-optimal solution in function space, independently of any prior discretization. The main ingredients in our approach are an appropriate branching strategy in infinite dimension, an a posteriori error estimation in order to obtain safe dual bounds, and an adaptive refinement strategy in order to allow arbitrary switching points in the limit. The performance of our approach is demonstrated by extensive experimental results.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Optimal control problems with discrete switches have recently become an increasing focus of research. Most approaches presented in the literature, however, produce only heuristic solutions without any quality guarantee. The well-known Sum-Up Rounding approach [28, 33] computes binary switching patterns by first solving a convex relaxation of the problem and then approximating the resulting continuous switching by a binary one. This approach often requires a large number of switchings when trying to come close to the optimal continuous solution. In particular, it cannot deal with an explicit bound on the number of switchings, let alone with more complex combinatorial constraints. If such constraints need to be satisfied, the Combinatorial Integral Approximation approach [32] can be applied. Since the latter again tries to approximate a given continuous control by a feasible binary one, this approach does not lead to optimal solutions to the original problem in general, even when a best-possible approximation can be computed. Other approaches aim at optimizing the switching times of the discrete switches directly [13, 14, 18, 25, 30, 31, 37], or use non-smooth penalty techniques, partly in combination with convexification, to impose the switching structure, see, e.g., [9,10,11,12, 40] and the references therein. However, both strategies in general lead to non-convex problems with potentially multiple local minima and a convexifcation of the arising problems may destroy the switching structure of the optimal solution.

In this paper, we present a branch-and-bound approach for solving parabolic optimal control problems with combinatorial switching constraints to global optimality. More precisely, we consider problems of the form

Here \(T > 0\) is a given final time and \(\Omega \subset {\mathbb {R}}^d\), \(d\in {\mathbb {N}}\), is a bounded domain, i.e., a bounded, open, and connected set, with Lipschitz boundary \(\partial \Omega \) in the sense of [24, Def. 1.2.2.1]. The form function \(\psi \in H^{-1}(\Omega )\) and the initial state \(y_0 \in L^2(\Omega )\) are given. Moreover, \(y_{d } \in L^2(Q)\) is a given desired state and \(\alpha > 0\) is a Tikhonov parameter weighting the deviation from \(\tfrac{1}{2}\). Finally,

denotes the set of feasible switching controls and is supposed to satisfy the following assumptions:

Here BV(0, T) denotes the set of all functions in \(L^1(0,T)\) with bounded variation, equipped with the norm \(\Vert u\Vert _{BV(0,T)}:= \Vert u\Vert _{L^1(0,T)} + |u|_{BV(0,T)}\); see e.g., [1] for details on the space of functions with bounded variation. For simplicity, we restrict ourselves to the case of one binary switch in (P), but our main results are easily extended to the case of multiple switches, i.e., to \(D\subset BV(0,T;\{0,1\}^k)\) for some \(k\in {\mathbb {N}}\).

Assumption (D1) is crucial in our context. Without this condition, it would be possible to approximate any control u with \(u(t)\in [0,1]\text { f.a.a.\ } t \in (0,T)\) arbitrarily well by binary switch es using an increasing number of switchings, e.g., by applying the Sum-Up Rounding approach mentioned above. Indeed, for a given control \(u\in L^2(0,T;[0,1])\), there exists a sequence \(\{u^n\}_{n\in {\mathbb {N}}}\) of binary controls in \(BV(0,T;\{0,1\})\) such that \(u^n\) converges weakly in \(L^2(0,T)\) to u, and this weak convergence is sufficient to pass to the limit in the state equation. Moreover, in our branch-and-bound algorithm, the existence of a uniform bound on the variation is essential in order to ensure the effectiveness of fixings.

The Tikhonov term \(\Vert u-\tfrac{1}{2}\Vert ^2_{L^2(0,T)}\) in the objective function of (P) is constant on D by construction, but not on the relaxations of D considered in the course of the algorithm. In [6, 7], we present tailored convexifications of (P) and an outer approximation approach to solve the resulting relaxations. The core of the approach is the generation of linear cutting planes describing the closed convex hull of D in \(L^p(0,T)\). While the overall approach is very general, the specific shape of the cutting planes is problem-dependent. In particular, we devise results for the case of bounded total variation (without further constraints) and for the case where the switching points of the control u must satisfy given linear constraints. The latter case comprises the so-called mininum dwell time contraints [42].

Building on the results of [6, 7], our aim is thus to determine globally optimal solutions for problems of type (P) that are independent of any prior discretization, using a branch-and-bound approach. For this, we start from the convex relaxations of (P) studied in [6]. These convex relaxations correspond to the root nodes in our branch-and-bound algorithm. In order to extend this to a full branch-and-bound algorithm for computing globally optimal solutions (at least in the limit), we have to overcome several obstacles:

-

Since we optimize in function space, fixing the value of the switch in finitely many points (as is common in finite-dimensional branch-and-bound algorithms) has no direct effect, or is not even well-defined. We thus have to take the bounded variation into account in order to obtain implicit restrictions on the set of admissible controls in the nodes of the branch-and-bound tree; see Sect. 3.

-

The fixing of the switch at certain points in time leads to a non-closed set of admissible controls in the nodes. Moreover, the closed convex hulls of these sets are structurally different from the admissible controls arising in the root node. We study the most important classes of these sets in Sect. 4.

-

In [7], we devised a semi-smooth Newton method to solve the root relaxation. However, with an increasing number of fixings, this method became less stable, so that we now propose to solve all subproblems by the alternating direction method of multipliers; see Sect. 5.

-

In order to obtain globally optimal solutions, all dual bounds computed in the nodes of the branch-and-bound tree must be safe. In particular, they need to take discretization errors into account. In case the time-mesh independent dual bound is too weak to cut off a node, we may either have to branch or to refine the temporal grid, depending on the relation between the current primal bound and the time-mesh dependent dual bound. The sophisticated interplay between branching, error analysis, and adaptive refinement is at the core of our proposed approach, it is discussed in Sect. 6.

The main contribution of this paper is to present solutions for the challenges listed above. An extensive experimental evaluation presented in Sect. 7 shows that an effective and stable implementation of the resulting branch-and-bound approach is possible.

2 Preliminaries

We first collect some definitions and observations that are needed in the following sections, concerning the solution mapping for the PDE in (P) as well as functions of bounded variation and special switching constraints.

2.1 Solution mapping

The assumptions on the optimal control problem (P) listed above guarantee that, for every control \(u\in D\subset L^2(0,T)\), the PDE in (P) admits a unique weak solution

see [38, Chapter 3]. To specify the associated solution operator

we introduce the linear and continuous (and thus Fréchet differentiable) operator

as well as the solution operator \(\Sigma : L^2(0,T;H^{-1}(\Omega )) \rightarrow W(0,T)\) of the heat equation with homogeneous initial condition, i.e., given \(w\in L^2(0,T;H^{-1}(\Omega ))\), \(y = \Sigma (w)\) solves

Moreover, we introduce the function \(\zeta \in W(0,T)\) as the solution for

Then the solution operator S is given by \( S = \Sigma \circ \Psi + \zeta \). In particular, it is affine and continuous. Using this solution operator, the problem (P) can be written as

2.2 Functions of bounded variation

Functions of bounded variation are of central importance in the following. In order to deal with such functions, first recall that each function \(u \in BV(0,T)\) admits a right-continuous representative given by \({{\hat{u}}}(t) = c+\mu ((0,t])\), \(t\in (0,T)\), where \(\mu \) is the regular Borel measure on [0, T] associated with the distributional derivative of u and \(c\in {\mathbb {R}}\) is a constant. Note that \({{\hat{u}}}\) is unique on (0, T). Here and in the following, with a slight abuse of notation, we denote this function by the same symbol as the equivalence class in BV(0, T) and, when it comes to pointwise evaluations, we always refer to this representative function. In particular, we will often write constraints in the form \(u(t)=b\) for \(b\in {\mathbb {R}}\). For \(t\in (0,T)\), this is well-defined by the above reasoning, it then means \({{\hat{u}}}(t)=b\), while for \(t=0\), we use the same notation as shorthand for \(\lim _{t\searrow 0}{{\hat{u}}}(t)=b\).

In this paper, we will mostly deal with binary controls \(u\in BV(0,T;\{0,1\})\). In this case, the representative \({{\hat{u}}}\) can be parameterized through its switching points \(0\le t_1\le \cdots \le t_\sigma \), where \(\sigma \le {|u|}_{BV(0,T)}\). More formally, if one already counts \({{\hat{u}}}(0)=\lim _{t\searrow 0} {\hat{u}}(t)=1\) as one switching from 0 to 1, then the representative can be written in the form

see [6] for more details. In the following, we will always regard \(u_{t_1,\dots ,t_\sigma }(t)\) as a function in BV(0, T).

2.3 Examples of switching constraints

In [6, 7], we present tailored convexifications of (P’) and an outer approximation algorithm to solve the resulting relaxations. In particular, we elaborate the details of this approach for the following two relevant classes of constraints D. By Assumption (D1), the total number of switchings is bounded, and the first type of constraint arises when this is the only restriction. More specifically, we restrict the total variation of the single switch from above by \(\sigma >0\), so that the set of feasible controls is

The second type of constraint imposes affine linear relations between the positions of the switching points of u. More precisely, for a given polytope \(P\subseteq {\mathbb {R}}_+^\sigma \), we define

An important special case are the so-called minimum dwell time constraints, defined as

for some given \(s>0\). In words, a minimum time span s is required between two consecutive switchings of u.

All sets \(D(\sigma )\) and D(P) defined here, and hence also D(s), satisfy the general assumptions (D1) and (D2); see [6, Lemma 3.7 and Lemma 3.10]. We will investigate these sets under fixings in Sect. 4 and use \(D(\sigma )\) for our experiments presented in Sect. 7.

3 Branch-and-bound algorithm

In finite-dimensional optimization, branch-and-bound is the standard approach for solving non-convex optimization problems to global optimality. First, a dual bound is computed for the original problem, corresponding to the root node of the branch-and-bound tree. Often, this is done by solving a convex relaxation of the problem. In case the optimal solution for the latter is infeasible for the original problem, a branching is applied. In the most abstract form, this means that the set of feasible solutions is subdivided into two (or more) subsets, corresponding to the child nodes of the root node. Recursive application of the branching leads to the so-called branch-and-bound tree. The bounding is now applied in order to reduce the number of nodes in this tree, which leads to a finite algorithm in many cases: one first needs to obtain so-called primal solutions, i.e., feasible solutions of the original problem. Each such solution yields (in case of a minimization problem) a global upper bound on the optimal value of the original problem. Now, if the dual bound obtained in some branch-and-bound node is larger than the best known upper bound, it follows that this node cannot contain any optimal solution, so that it can be pruned, i.e., the entire subtree rooted at this node can be ignored in the enumeration.

The most natural branching strategy for finite-dimensional binary optimization problems consists of picking a binary variable having a fractional value in the optimal solution for the convex relaxation used for computing the dual bound, and then fixing this variable to zero in the first child node and to one in the other. However, in the infinite-dimensional setting considered here, the situation is more complicated: we need to deal with infinitely many binary variables, suggesting that an infinite number of function values has to be fixed in order to uniquely determine a solution for (P). In fact, fixing a pointwise value of u has no direct effect (or is not even well-defined) in the function space \(L^p(0,T)\). At this point, we can exploit Assumption (D1), which yields a finite bound on the total number of switching points. The relevant restrictions in a given node of the branch-and-bound tree are now a joint consequence of the finitely many fixing decisions taken so far and of the constraint \(u\in D\).

The main challenge is now to describe these resulting restrictions. Assume that our branching strategy always picks appropriate time points \(\tau \in (0,T)\) and fixes \(u(\tau )=0\) in the first subproblem and \(u(\tau )=1\) in the second. Then all our subproblems, corresponding to the nodes in the branch-and-bound tree, are problems in BV(0, T) of the form

with \((\tau _j,c_j) \in [0,T)\times \{0,1\}\) for \(1\le j \le N\); see Sect. 2.2 for the precise meaning of the fixing constraints. In the following, we denote the feasible set of (SP) by

where we always assume \(\tau _1<\cdots <\tau _N\). Note that the set \(D_ SP \) is not closed in \(L^p(0,T)\) in general, and hence the subproblem (SP) does not necessarily admit a global minimizer. However, this is no problem since we are only interested in the optimal value of (SP) in our branch-and-bound framework. In fact, our approach will produce a series of dual bounds by convexifying (SP) and these co nvexifications will provide the same (primal) optimal value of (SP) in the limit; see Theorem 3.1 below. We consider the convexification

of the suproblem (SP) in \(L^p(0,T)\). Here and in the following, \({\overline{\operatorname {conv}}}\) always denotes the closed convex hull in \(L^p(0,T)\).

In a reasonable branching strategy, one may expect that an increasing number of fixing decisions, taken along a path in the branch-and-bound tree starting at the root node, leads to a unique solution in the limit. In particular, the dual bounds obtained in the nodes and the optimal values subject to the corresponding fixings should converge to each other. The next result shows that this is guaranteed in our infinite-dimensional setting if the fixing positions are sufficiently well-distributed.

Theorem 3.1

For \(N\in {\mathbb {N}}\), let \(0\le \tau _1^{N}<\dots<\tau _N^N< T\) and define

where \(\tau _0^N:=0\) and \(\tau _{N+1}^N:=T\). If \(\Delta \tau ^{N} \rightarrow 0\) for \(N\rightarrow \infty \), then

-

(i)

the diameters of the feasible sets of (SPC) and (SP) in \(L^2(0,T)\) vanish and

-

(ii)

the optimal values of (SPC) and (SP) converge to each other.

Proof

Let \(D_ SP ^{N}:=\{u\in D: u(\tau _j^{N}) = c_j^{N} \ \forall j=1,\ldots ,N\}\) denote the feasible set of (SP) for \(N\in {\mathbb {N}}\). Without loss of generality, we may assume \(D_ SP ^N\ne \emptyset \) for \(N\in {\mathbb {N}}\), since otherwise the feasible set of (SPC) is also empty and thus both optimal values agree. We first claim that two controls \(u_1,u_2\in D_ SP ^{N}\) can only differ in at most \(\sigma \) of the intervals \((\tau _{j-1}^{N},\tau _j^{N})\) for \(2\le j\le N\), where \(\sigma \) denotes the upper bound on the total number of switchings guaranteed by Assumption (D1). Indeed, assume that \(u_1\) and \(u_2\) differ between \(\tau _{j-1}^{N}\) and \(\tau _j^{N}\). Since the values of \(u_1\) and \(u_2\) agree at \(\tau _{j-1}^{N}\) and \(\tau _j^{N}\), either one of the two functions has to switch at least twice in \((\tau _{j-1}^{N},\tau _j^{N})\), if \(c_{j-1}^{N}=c_j^{N}\), or both functions have to switch at least once, if \(c_{j-1}^{N}\ne c_j^{N}\). Hence, for each interval where \(u_1\) and \(u_2\) differ, both functions together have at least two switchings, but the total number of their switchings is bounded by \(2\sigma \).

Taking into account also the intervals \((0,\tau _1^{N})\) and \((\tau _N^{N},T)\) and using that \(u_1,u_2\in [0,1]\) a.e. in (0, T), we thus obtain

and consequently, for \(N\rightarrow \infty \), we get

which shows assertion (i).

Since the solution operator S in (SP) is linear and bounded, the objective \(J:u \mapsto J(Su,u)\) is continuous from \(L^2(0,T)\) to \({\mathbb {R}}\). Together with (4), this implies that the maximal difference of all objective values of feasible controls in (SPC) vanishes for \(N\rightarrow \infty \). Since (SPC) is a relaxation of (SP), we obtain (ii). \(\square \)

As a consequence of Theorem 3.1 and its proof, we immediately obtain the following.

Corollary 3.2

For each \(\varepsilon >0\) there exist \(N\in {\mathbb {N}}\) and fixings \((\tau _j,c_j) \in (0,T)\times \{0,1\}\), \(j=1,\ldots ,N\), such that the optimal value of (SPC) differs by at most \(\varepsilon \) from the optimal value of the original problem (P).

In other words, up to an arbitrary desired precision \(\varepsilon >0\), the optimal solution of (P) can be approximated by (SPC) using a finite number of fixings. This is crucial for the branch-and-bound algorithm we are going to present in the following. Clearly, the number of necessary fixings depends on \(\varepsilon \).

To solve the subproblems in the branch-and-bound algorithm, we will use the outer approximation approach presented in [6, 7]. For this purpose, we need to discuss how to deal with the resulting projections under fixings (see Sect. 4) and how to adapt the outer approximation algorithm (see Sect. 5). For both tasks, first note that

again with all closures taken in \(L^p(0,T)\). While the inclusion “\(\subseteq \)” is obvious, it suffices for the other direction to show that \(\overline{D_\text {SP}}\) is a subset of \(\overline{\text {conv}}(D_\text {SP})\), as the latter is closed and convex by definition. Now if u is the limit of a sequence \(\{u^m\}_{m\in {\mathbb {N}}}\) in \(D_\text {SP}\), then \(u^m\in {\text {conv}}(D_\text {SP})\) for all \(m\in {\mathbb {N}}\) and hence \(u\in \overline{\text {conv}}(D_\text {SP})\). Problem (SPC) is thus very similar to the problem without fixings addressed in [6, 7], except that D is now replaced by the more complex set \(\overline{D_ SP }\). In an outer approximation approach, the impact of the fixings is then implicitly modeled by the cutting planes describing \({\overline{\operatorname {conv}}}(D_ SP )\).

However, the fixings may also directly determine significant parts of the switching pattern in such a way that u must be constant on some intervals \([\tau _{j-1},\tau _j)\), i.e., \(u|_{[\tau _{j-1},\tau _j)}\equiv c_{j-1}\) for all controls \(u\in D_ SP \). Indeed, as shown by the proof of Theorem 3.1, the non-fixed part of the time horizon vanishes under the assumptions of Theorem 3.1 when \(N\rightarrow \infty \). In other words, the share of the time horizon (0, T) on which u is completely determined by the branching decisions converges to one. In our branch-and-bound algorithm, it is much more efficient to deal with these constraints explicity, instead of modeling them by cutting planes describing \({\overline{\operatorname {conv}}}(D_ SP )\).

Finally, note that it is also possible that the given fixings are inconsistent with the constraint D, i.e., that the feasible set of (SP) is empty, which is easy to detect for most choices of D. In this case, the subproblem is infeasible and the corresponding node in the branch-and-bound tree can be pruned.

Example 3.3

Consider the set \(D(\sigma )\) defined in (1). Let \(N'=|\{j\in \{2,\dots ,N\}:c_{j-1}\ne c_{j}\}|\). If \(N'>\sigma \), we have \(D(\sigma )_ SP =\emptyset \), since even the number of switchings enforced by the fixing is too large for a feasible solution. The subproblem can thus be pruned. If \(\sigma -1\le N'\le \sigma \), we can fix all intervals \([\tau _{j-1},\tau _j)\) with \(c_{j-1}=c_j\) to the value \(c_{j-1}\), since any other value in this interval would increase the number of switchings by two. If \(\sigma -1\le N'\le \sigma \) and \(c_{j-1}\ne c_j\), then no value of u in \((\tau _{j-1},\tau _j)\) is fixed, but u has to be monotone in \([\tau _{j-1},\tau _j]\), which is modeled implicitly by cutting planes. The same is true for all further restrictions resulting from the fixings. \(\square \)

Example 3.4

For the minimum dwell time constraints D(s) defined in (3), we can fix an interval \([\tau _{j-1},\tau _j)\) with \(c_{j-1}=c_j\) to the value \(c_{j-1}\) if and only if \(\tau _j-\tau _{j-1}\le s\). Otherwise, no direct fixing is possible, but the number of allowed switchings within the interval \((\tau _{j-1},\tau _j)\) reduces to \(\lceil \nicefrac {\tau _j-\tau _{j-1}}{s}\rceil \). An infeasible subproblem arises whenever u is fixed to the same value at two time points having a distance of at most s, but fixed to the other value at some point in between. \(\square \)

4 Convex hull under fixings

As already indicated, our aim is to fully describe the convex hull of feasible switching patterns, i.e., the feasible set of (SPC), by cutting planes derived from finite-dimensional projections, extending the approach proposed in [6] for the case without fixings. For this, we project the set \(\overline{D_ SP }\) to the finite-dimensional space \({\mathbb {R}}^M\), by means of local averaging

where \(I_i\subseteq (0,T)\) for \(i=1,\ldots ,M\) are suitably chosen subintervals. Each projection \(\Pi \) then gives rise to a relaxation

of the feasible region [6, Lemma 3.4], where \(C_{\overline{D_ SP },\Pi }:= \operatorname {conv}\{\Pi (u):u \in \overline{D_ SP }\}\). By a suitable construction of projections \(\Pi _k\), with increasing dimension \(M_k\), a complete outer description of the finite-dimensional convex hulls \(C_{\overline{D_ SP },\Pi }\) also yields a complete outer description of the convex hull of \(\overline{D_ SP }\) in function space [6, Thm. 3.5], i.e.,

In order to solve the convexified subproblem (SPC) by means of the outer approximation algorithm presented in [7, Alg. 1], it is particularly desirable that the sets \(C_{\overline{D_ SP },\Pi }\) are polyhedra for which the separation problem is tractable, in order to efficiently generate cuts of the form \(a^\top \Pi (u)\le b\) for \(u\in L^p(0,T)\), where \(a^\top w\le b\), \(a\in {\mathbb {R}}^M\), \(b\in {\mathbb {R}}\) represents a valid inequality for \(C_{\overline{D_ SP },\Pi }\). For prominent examples of D, it is shown in [6] that this is the case for the sets \(C_{D,\Pi }\), i.e., when no fixings are considered. However, it can be shown that the fixings may destroy this property in general.

For the two classes of constraints D already discussed in [6] and defined in Sect. 2.3, one can show with a similar reasoning as in [6] that the corresponding projection sets \(C_{\overline{D(\sigma )_ SP },\Pi }\) and \(C_{\overline{D(P)_ SP },\Pi }\) are still polyhedra for a fixed projection \(\Pi \). For convenience of the reader, we include full proofs of both results in Appendix A. In the remainder of this section, we focus on the tractability of the projection sets, since the latter is crucial for the efficient computation of dual bounds by means of outer approximation within our branch-and-bound algorithm. For this, we consider a fixed projection \(\Pi \) and assume that the intervals \(I_i\), \(i=1,\dots ,M\), are pairwise disjoint. Moreover, without loss of generality, we may assume that the fixing points \(0\le \tau _1< \cdots< \tau _N < T\) satisfy \(\tau _j\notin I_i\) for all \(j=1,\ldots ,N\) and \(i=1,\ldots ,M\), since otherwise one can refine the projection intervals and thus generate stronger cutting planes [7, Thm. 2.2].

4.1 Restricted total variation

In this section, we show that the separation problem for \(C_{\overline{D(\sigma )_ SP },\Pi }\) can be solved in polynomial time. Without any fixings, i.e., when \(D(\sigma )_ SP =D(\sigma )\), it is shown in [6, Thm. 3.8] that the separation problem for \(C_{D(\sigma ),\Pi }\) reduces to the separation problem of

For the slightly different setting where \(v_1\) is fixed to zero, it is shown in [8] that the separation problem for the above set can be solved in polynomial time. It is easy to see that the separation problem remains tractable also without this fixing, i.e., for \(C_{D(\sigma ),\Pi }\). Our aim is now to efficiently reduce the separation problem for \(C_{\overline{D(\sigma )_ SP },\Pi }\) to the separation problem for \(C_{D(\sigma ),\Pi }\). To this end, we extend the vector v by the fixings \(c_1,\ldots ,c_N\). More precisely, for all \(j\in \{1,\dots ,N\}\), let \(i_j\in \{0,\ldots ,M\}\) be the index such that \(b_{i_{j-1}}\le \tau _j\le a_{i_j}\) holds, where \(b_0:=0\) and \(I_i=(a_i,b_i)\) for \(i=1,\ldots ,M\). In addition, define \(E:{\mathbb {R}}^M \rightarrow {\mathbb {R}}^{M+N}\) by

The desired reduction is now based on the fact that, analogously to [6, Thm. 3.8], one can again show that \(C_{\overline{D(\sigma )_ SP },\Pi }\) is the convex hull of all projection vectors resulting from feasible controls that are constant almost everywhere on each of the intervals \(I_1,\ldots ,I_N\), i.e., \(C_{\overline{D(\sigma )_ SP },\Pi }=\operatorname {conv}(K)\) with

see Theorem A.1, and on the following:

Lemma 4.1

A vector \(v\in {\mathbb {R}}^M\) belongs to K if and only if Ev belongs to

Proof

For the first direction, let \(v=\Pi (u)\in K\) for some \(u\in \overline{D(\sigma )_ SP }\) being constant almost everywhere on each projection interval. Then there exists a sequence \(\{u^m\}_{m\in {\mathbb {N}}}\subseteq D(\sigma )_ SP \) with \(u^m\rightarrow u\) in \(L^p(0,T)\) for \(m\rightarrow \infty \). For every \(m\in {\mathbb {N}}\), the control \(u^m\) has at most \(\sigma \) switchings and satisfies \(u^m(\tau _j)=c_j\) for \(j=1,\ldots ,N\), so that we have

The continuity of \(\Pi \) in \(L^p(0,T)\) yields \(v=\Pi (u)=\lim _{m\rightarrow \infty } \Pi (u^m)\) and hence

i.e., we have \(Ev\in {{{\mathcal {C}}}}\) as desired.

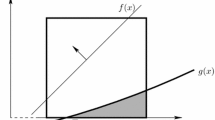

We next show the opposite direction. So, let \(Ev\in {{{\mathcal {C}}}}\) for some vector \(v\in {\mathbb {R}}^M\). In addition, let \(0=z_0<z_1<\cdots <z_r=T\) include all endpoints of the intervals \(I_1\ldots ,I_M\) and the fixed positions \(\tau _1,\ldots ,\tau _N\). Construct functions \(u^m\) for \(m\in {\mathbb {N}}\) such that

where \(\varepsilon _j=\min \{|z_i-\tau _j|: i\in \{1,\ldots ,r\}, z_i\ne \tau _j\}>0\). For points in (0, T) not covered by the above intervals, we copy the value of the left neighboring interval. The construction is illustrated in Fig. 1a. We have \(u^m(\tau _j)=c_j\) for every \(m\in {\mathbb {N}}\) and \(j=1,\ldots ,N\), hence all fixings are respected. Moreover, \(Ev\in {{{\mathcal {C}}}}\) guarantees that \(u^m\) switches at most \(\sigma \) times, i.e., we get \(u^m\in D(\sigma )_ SP \). By copying always the value of the left neighboring interval, we guarantee that the control functions \(u^m\) converge in \(L^p(0,T)\) to some function u; see Fig. 1b.

Illustration of the second part of the proof of Lemma 4.1

Moreover, by construction, the limit u is \(v_1,\ldots ,v_M\) almost everywhere on the projection intervals \(I_1,\ldots ,I_M\), respectively, and due to \(\{u^m\}\subseteq D(\sigma )_ SP \), we have \(u\in \overline{D(\sigma )_ SP }\). Therefore \(v=\Pi (u)\in K\). \(\square \)

Theorem 4.2

The separation problem for \(C_{\overline{D(\sigma )_ SP },\Pi }\) can be solved in polynomial time.

Proof

Using Lemma 4.1 and \(C_{\overline{D(\sigma )_ SP },\Pi }=\operatorname {conv}(K)\), we obtain that \(v\in C_{\overline{D(\sigma )_ SP },\Pi } \) if and only if \(Ev\in \operatorname {conv}(\mathcal{C})\). The separation problem for \(C_{\overline{D(\sigma )_ SP },\Pi }\) thus reduces to the separation problem for \(\operatorname {conv}({{{\mathcal {C}}}})\). \(\square \)

The separation algorithm used in the outer approximation approach devised in [6] even computes the most violated cutting plane. The same can be done when considering fixings: our aim is thus to find the most violated cutting plane \(({\bar{a}},{\bar{b}})\in {\mathbb {R}}^{M+1}\) in the set

of all valid inequalities for \(C_{\overline{D(\sigma )_ SP },\Pi }\), where \(a\in [-1,1]^M\) can be assumed without loss of generality by scaling. It is easily verified that this aim can be achieved by first computing the most violated cutting plane \((a,b)\in {\mathbb {R}}^{M+N+1}\) for Ev in

and then replacing the \((i_j+j)\) th variable by the constant \(c_j\) for all \(j=1,\dots ,N\), i.e., \({\bar{a}}\) results from a by deleting the \((i_j+j)\) th variables and \({\bar{b}}=b-\sum _{j=1}^N a_{i_j+j}\,c_j\). The first task can again be reduced to the case without fixings.

4.2 Dwell time constraints

We now focus on the special case of dwell time constraints, as defined in (3). Here, a minimum time span \(s>0\) between two switchings is required. For the case without fixings, it is stated in [6, Thm. 3.13] that there exists a separation algorithm with polynomial running time in M and in the implicit bound \(\sigma =\lceil \tfrac{T}{s}\rceil \) on the number of allowed switchings. In the presence of fixings \((\tau _j,c_j)\), \(1\le j\le N\), we show in the following that the separation problem for \(C_{\overline{D(s)_ SP },\Pi }\) is still tractable. More precisely, we claim that there exists a separation algorithm with polynomial time in M, \(\sigma \), and the number N of fixings.

We thus consider the set

and first argue, similar to [6, Sect. 3.2], that it suffices to consider as switching points the finitely many points in the set

where \(I_i=(a_i,b_i)\) for \(i=1,\dots ,M\). The set S thus contains, as before, all end points of the intervals \(I_1,\dots ,I_M\) and [0, T] shifted by arbitrary integer multiples of s, as long as they are included in [0, T]. In addition, we now need to consider all fixing points \(\tau _1,\ldots ,\tau _N\) and their corresponding shiftings. Clearly, we can compute S in \(O((M+N)\sigma )\) time.

Lemma 4.3

Let v be a vertex of \(C_{\overline{D(s)_ SP },\Pi }\). Then there exists \(u\in \overline{D(s)_ SP }\) with \(\Pi (u)=v\) such that u switches only in S.

Proof

The proof is similar to that of [6, Lemma 3.12], but one needs to pay attention to the fixings when shifting switching points outside of S. The full proof can be found in Appendix A.2. \(\square \)

We next show our main result that there exists an efficient separation algorithm for \(C_{\overline{D(s)_ SP },\Pi }\) by specifying an efficient optimization algorithm over \(C_{\overline{D(s)_ SP },\Pi }\). Let \(\omega _1\dots ,\omega _{|S|}\) be the elements of S sorted in ascending order.

Theorem 4.4

One can optimize over \(C_{\overline{D(s)_ SP },\Pi }\) (and hence also separate from \(C_{\overline{D(s)_ SP },\Pi }\)) in time polynomial in M, \(\sigma \), and N.

Proof

By Lemma 4.3, it suffices to optimize over the projections of all \(u\in \overline{D(s)_ SP }\) with switchings only in S. This can be done by a dynamic programming approach similar to the one presented in [6, Thm. 3.13]; we mainly need to change the recursion formula for the fixing points \(\tau _1,\ldots ,\tau _N\). So assume that an arbitrary objective function \(c\in {\mathbb {R}}^M\) is given. Then we compute the optimal value

recursively for all \(t\in S\) as follows. Starting with \(c^*(\omega _1,b)=0\) if \(\tau _1\ne 0\) and

otherwise, we obtain for \(k\in \{2,\ldots ,|S|\}\) with \(\omega _k\in S{\setminus }\{\tau _1,\ldots ,\tau _N\}\) that

where for \(b\in \{0,1\}\) we define \(\tau (b):=\{\tau _j: c_j=b, j=1,\ldots ,N\}\). For \(k\in \{2,\ldots ,|S|\}\) with \(\tau _j=\omega _k\in S\), \(i\in \{1,\ldots ,N\}\), we get

and

The desired optimal value is \(\min \{c^*(T,0),c^*(T,1)\}\) then, and a corresponding optimal solution can be derived if this value is finite. Otherwise, the problem is infeasible due to the fixings, i.e., the polytope \(C_{\overline{D(s)_ SP },\Pi }\) is empty. \(\square \)

In the proof of Theorem 4.4, the recursion formula of the dynamic optimization approach over \(C_{\overline{D(s)_ SP },\Pi }\) is the same for the fixing points \(\tau _1,\ldots ,\tau _N\) as for the points in \(S{\setminus } \{\tau _1,\ldots ,\tau _N\}\), as long as the fixings are respected. This is not surprising, since in this case we do not know whether the control is already constantly \(c_j\) before or after \(\tau _j\). However, if the fixing is not respected, then it is clear that the control has to be constantly \(c_j\) on \([\tau _j-s,\tau _j)\) and one has to check whether this is possible taking the other fixings and the start value zero into account. In particular, if \(\tau _1=0\) and \(c_1=1\), all controls in \(\overline{D(s)_ SP }\) have to respect the fixing due to the start value zero, so that we have \(c^*(0,0)=\infty \) in this case.

5 Computation of primal and dual bounds

The main task in every branch-and-bound algorithm is the fast computation of primal and dual bounds. While primal bounds are often obtained by applying rather straightforward heuristics to the original problem (P), see Sect. 5.2, the computation of dual bounds is a more complex task, see Sect. 5.1.

5.1 Dual bounds

Our goal is to obtain strong dual bounds by solving the convexified subproblems (SPC); see Sect. 3. To this end, we can use the outer approximation algorithm developed in [7], since \({\overline{\operatorname {conv}}}(D_ SP )={\overline{\operatorname {conv}}}(\overline{D_ SP })\) as already noted above. This approach is applicable whenever we have a separation algorithm for \({\overline{\operatorname {conv}}}(\overline{D_ SP })\) at hand; see Sect. 4. Within the outer approximation algorithm, we thus need to repeatedly solve problems of the form

where \(G:L^2(0,T)\rightarrow {\mathbb {R}}^k\) with \((Gu)_\ell =a_\ell ^\top \Pi _\ell (u)\) for \(\ell =1,\ldots ,k\). The latter constraints represent the cutting planes for the sets \(C_{\overline{D_ SP },\Pi }\) that have been generated so far.

As discussed in Sect. 3, our branching strategy will implicitly fix the control u on certain subintervals of the time horizon [0, T]; see Example 3.3 and Example 3.4. Let \({\mathcal {A}}\) be the union of all such fixed intervals and set \({\mathcal {I}}:=[0,T]\setminus {\mathcal {A}}\). Denote the restrictions to \({\mathcal {A}}\) and \({\mathcal {I}}\) by \(\chi _{{\mathcal {A}}}:L^2(0,T) \rightarrow L^2({\mathcal {A}})\) and \(\chi _{{\mathcal {I}}}:L^2(0,T) \rightarrow L^2({\mathcal {I}})\), respectively, and let \(\chi _{\mathcal {A}}^*\) and \(\chi _{{\mathcal {I}}}^*\) be the respective extension-by-zero operators mapping from \(L^2({\mathcal {A}})\) and \(L^2({\mathcal {I}})\) to \(L^2(0,T)\), respectively. Then we can restrict (\(\textrm{SPC }_k\)) to the unfixed control \(u|_{{\mathcal {I}}}\), which leads to

where \(u|_{ {\mathcal {A}}}\) is fixed and implicitly given through the fixings. As a first attempt to solve this problem, we applied the semi-smooth Newton method described in [7], but, as the branching implicitly fixed larger parts of the switching structure, i.e., \({\mathcal {A}}\) got larger, the semi-smooth Newton method matrix became singular. To overcome these numerical issues, we decided to replace the semi-smooth Newton method by the alternating direction method of multipliers (ADMM), which was first mentioned in [21] for nonlinear elliptic problems and is widely applied to elliptic control problems [2, 4, 27]. Its convergence for convex optimization problems is well-studied, see, e.g., [15, 16, 20, 23]. Recently, [22] also addressed linear parabolic problems with state constraints by the ADMM and proved its convergence without any assumptions on the existence and regularity of the Lagrange multiplier.

We first need to rewrite our problem in the form

where \({\bar{b}}:= b-G(\chi _{{\mathcal {A}}}^*u|_{ {\mathcal {A}}})\) and

Note that (\(\textrm{SPC}{'_k}\)) is still a convex optimization problem, but no longer strictly convex. The first-order algorithm ADMM is an alternating minimization scheme for computing a saddle point of the augmented Lagrangian

which differs from the Lagrangian by the penalty terms \(\tfrac{\rho }{2}\Vert G(\chi _{{\mathcal {I}}}^*u|_{{\mathcal {I}}})-v\Vert ^2\) for the cutting planes and \(\tfrac{\beta }{2}\Vert u|_{{\mathcal {I}}}-w\Vert ^2_{L^2({\mathcal {I}})}\) for the box constraints, but has the same saddle points as the Lagrangian [15]. First, the augmented Lagrangian is minimized with respect to the unfixed control variables

then with respect to v and w, i.e.,

and finally, the dual variables \(\lambda \) and \(\mu \) are updated by a gradient step as follows

For \(\gamma _\rho ,\gamma _\beta \in (0,\tfrac{1+\sqrt{5}}{2})\), the convergence of ADMM is guaranteed [19], but these parameters and the penalty parameters influence the convergence performance and numerical stability of the algorithm. For instance, the penalty parameter \(\beta \) should be chosen close to \(\alpha \) in order to balance the Tikhonov term \(\tfrac{\alpha }{2}\Vert \chi _{{\mathcal {I}}}^*u|_{{\mathcal {I}}}+\chi _{{\mathcal {A}}}^*u|_{{\mathcal {A}}}-\tfrac{1}{2}\Vert _{L^2(0,T)}\) and the penalty term of the box constraints in the augmented Lagrangian. Moreover, the best choice for \(\gamma _\rho \) and \(\gamma _\beta \) generally seems to be one [19]. We thus use \(\gamma _\rho =\gamma _\beta =1\) in the following.

With the solution mapping \(S=\Sigma \circ \Psi +\zeta \), as defined in Sect. 2, the reduced objective in (\(\textrm{SPC}{'_k}\)) reads

such that, by the chain rule, its Fréchet derivative at \(u|_{ {\mathcal {I}}} \in L^2({\mathcal {I}})\) is given by

where we identified \(L^2({\mathcal {I}})\) with its dual using the Riesz representation theorem. For the penalty term associated with the cutting planes, the Fréchet derivative at \(u|_{ {\mathcal {I}}} \in L^2({\mathcal {I}})\) is

With the above Fréchet derivatives at hand, we are able to write down the ADMM method for (\(\textrm{SPC}{'_k}\)). Algorithm 1 shows the procedure, where m is the iteration counter.

The primal and dual residuals

of the optimality conditions for (\(\textrm{SPC}{'_k}\)) can be used to bound the primal objective sub-optimality [5], i.e., \(f(u_{\ |{\mathcal {I}}}^{m})-f(u^\star )\). More precisely, [5] derived sub-optimality estimates for problems in \({\mathbb {R}}^n\) based on their primal and dual residuals, but the arguments readily carry over to our setting. We thus have

so that we can estimate

since \(u_{\ |{\mathcal {I}}}^{m},\ u_{\ |{\mathcal {I}}}^\star \in \{0,1\}\) a.e. in \({\mathcal {I}}\subset [0,T]\). As a reasonable stopping criterion, we choose that the primal and dual residual must be small, as well as the primal objective sub-optimality. As tolerances for the residuals, we may use an absolute and relative criterion, such as

where \(\varepsilon ^\text {abs}>0\) is an absolute tolerance, whose scale depends on the scale of the variable values, and \(\varepsilon ^\text {rel} > 0\) is a relative tolerance, which might be \(\varepsilon ^\text {rel}=10^{-3}\) or \(\varepsilon ^\text {rel}=10^{-4}\). The factor \(\sqrt{k}\) accounts for the fact that (\(\textrm{SPC}{'_k}\)) contains k cutting plane constraints. In addition, the absolute error \(e^{m}\) in the primal objective should be less than a chosen tolerance \(\varepsilon ^{\text {pr}}>0\).

When the algorithm stops, we obtain \(f(u_{\ |{\mathcal {I}}}^{m})-e^{m}\) as a dual bound for the subproblem (SP) of the branch-and-bound algorithm, and we can either proceed by calling the separation algorithm again, in order to generate another violated cutting plane, if possible, or by stopping the outer approximation algorithm. When proceeding with the cutting plane algorithm, one has to solve another parabolic optimal control problem of the form (\(\textrm{SPC}_{k}\)) with an additional cutting plane \(k+1\) by Algorithm 1. The performance of the algorithm can be improved by choosing the prior solution \((u,v,\lambda ,w,\mu )\) as initialization in Step 1, and setting the auxiliary variable to \(v_{k+1}=b-G(\chi _{{\mathcal {A}}}^*u|_{ {\mathcal {A}}})\) as well as the dual variable to \(\lambda _{k+1}=0\) for the new cutting plane, since the latter is violated by u for sure.

5.2 Primal bounds

Another crucial ingredient in the branch-and-bound framework are primal heuristics, i.e., algorithms for computing good feasible solutions of the original problem (P), which yield tight primal bounds. It is common to call such primal heuristics in each subproblem, where the heuristic is often guided by the optimal solution for the convexified problem being solved in this subproblem for obtaining a dual bound. In our case, we can apply problem-specific rounding strategies from the literature to the solution of (\(\textrm{SPC}{'_k}\)) found by the ADMM method, e.g., the Dwell Time Sum-up Rounding and Dwell Time Next Force Rounding algorithms [42] for the case of a minimum time span between two switchings, and the Adaptive Maximum Dwell Rounding strategy [35] for the case of an upper bound on the total number of switchings.

Moreover, it is often possible to efficiently optimize a linear objective function over the set \(C_{D,\Pi }\), as shown in [6]. We can benefit from this as follows. First, we define an appropriate objective function based on the solution u of (\(\textrm{SPC}{'_k}\)). Second, we can use the resulting minimizer \(v^\star \in C_{D,\Pi }\) and construct a control \(u'\in D\) with \(\Pi (u')=v^\star \). For the first task, one can consider the distance of u to \(\tfrac{1}{2}\) over the intervals \(I_i\) defining the local averaging operators of the projection \(\Pi \) and define the i-th objective coefficient as

The intuition in this definition is that a bigger objective coefficient, i.e., a smaller average value of u on \(I_i\), will promote a smaller entry \(v^\star _i\) in the minimizer \(v^\star \), and vice versa. The minimizer \(v^\star \) will thus agree with \(\Pi (u)\) as much as possible while guaranteeing \(v^\star \in C_{D,\Pi }\). In fact, if \(C_{D,\Pi }\) is a 0/1-polytope, then the minimization problem

can be reformulated as a linear optimization problem over \(C_{D,\Pi }\), which is equivalent to the one with the objective coefficients given in (7). Moreover, if the intervals \(I_1,\dots ,I_M\) agree with the given discretization, the minimization problem (8) is equivalent to the CIA problem addressed in [26, 34], which tracks the average of the relaxed solution over the given temporal grid of the discretization while respecting the considered switching constraints.

Example 5.1

For \(D(\sigma )\), the set \(C_{D(\sigma ),\Pi }\) is a 0/1-polytope by [6, Thm. 3.8], and any linear objective function can be optimized in linear time over \(C_{D(\sigma ),\Pi }\) [8]. The minimizer \(v^\star \) can thus be guaranteed to be binary and it can be computed very efficiently, which even allows to choose as intervals \(I_1,\ldots ,I_M\) for the projection exactly the ones given by the currently used discretization in time. In this case, the minimizer \(v^\star \) solves the CIA problem over \(D(\sigma )\) and it is trivial to find a control \(u'\) with \(\Pi (u')=v^\star \). Indeed, on each interval \(I_i\), we can set \(u'\) constantly to \(v^\star _i\). \(\square \)

Example 5.2

The set \(C_{D(s),\Pi }\) of the minimum dwell time constraints is not necessarily a 0/1-polytope, but one can optimize over \(C_{D(s),\Pi }\) in \(O(M\sigma )\) time, and, by backtracking, one can reconstruct the corresponding solution \(u'\in D(s)\) in \(O(M\sigma )\) time; see [6, Thm. 3.13]. \(\square \)

The implicit fixings of the control in a subproblem of the branch-and-bound algorithm can also be considered explicitly in the optimization over \(C_{D,\Pi }\) by setting the corresponding objective coefficients in (7) to \(\infty \) and \(-\infty \), respectively. More precisely, one may use sufficiently large/small objective coefficients in this case.

In the above examples, a feasible control \(u\in D\) can be computed quickly. Nevertheless, in order to obtain the corresponding primal bound, one needs to first calculate the resulting state \(y=S(u)\) and then to evaluate the objective function.

6 Discretization error and adaptive refinement

The dual bounds computed by the outer approximation algorithm described in the previous section are safe bounds for (\(\textrm{SPC}_{k}\)), as long as we do not take discretization errors into account. However, our objective is to solve (P) in function space. This implies that we need to (a) estimate the discretization error contained in these bounds and (b) devise a method to deal with situations where the discretization-dependent dual bound allows to prune a subproblem but the discretization-independent dual bound does not, i.e., where the current primal bound lies between the two dual bounds. In the latter case, the only way out is the refinement of the discretization.

In order to address the first task, we will estimate the a posteriori error of the discretization with respect to the cost functional. We use the dual weighted residual (DWR) method, which has already achieved good results in practice, and combine the results from [29] and [39] to obtain an error analysis for the suproblems (\(\textrm{SPC}_{k}\)) arising in our branch-and-bound tree. First, we describe the finite element discretization of the optimal control problems arising in the branch-and-bound algorithm (Sect. 6.1). Then we discuss how to compute safe dual bounds (Sect. 6.2) as well as safe primal bounds (Sect. 6.3). Finally, we describe our adaptive refinement strategy (Sect. 6.4).

6.1 Finite element discretization

To solve problems of the form (\(\textrm{SPC}_{k}\)) in practice, we need to discretize the PDE constraint given as

in its weak formulation, as well as the control function, so that we implicitly discretize the Lagrangian \(L:W(0,T)\times L^2(0,T)\times W(0,T) \times L^2(0,T)\times L^2(0,T)\times {\mathbb {R}}^k\) corresponding to (\(\textrm{SPC}_{k}\)) given as

By calculating the derivative of L w.r.t. y in arbitrary direction \(\varphi \in W(0,T)\), as well as applying interval-wise integration by parts to the equation, we get the adjoint equation

We use a discontinuous Galerkin element method for the time discretization of the PDE constraint with piecewise constant functions. Let

be a partition of [0, T] with half open subintervals \(J_l=(t_{l-1},t_l]\) of size \(s_l=t_l-t_{l-1}\) with time points \(0=t_0<t_1<\cdots<t_{L-1}<t_L=T\). Define \(s:=\max _{l=1,\ldots ,N} s_l\) as the maximal length of a subinterval. The spatial discretization of the state equation uses a standard Galerkin method with piecewise linear and continuous functions, where the domain \(\Omega \) is partitioned into disjoint subsets \(K_i\) of diameter \(h_i:=\max _{p,q\in K_i}\Vert p-q\Vert _2\) for \(i=1,\ldots , R\), i.e., \(\overline{\Omega }=\cup _{i=1}^R \overline{K_i}\). For the one-dimensional domain \(\Omega \) used in our experiments in Sect. 7, this means that we subdivide \(\Omega \) into R disjoint intervals of length \(h_i\). Set \(h:=\max _{i=1,\ldots ,R} h_i\) and \({\mathcal {K}}_h=K_1\cup \cdots \cup K_R\). We define the finite element space

and associate with each time point \(t_l\) a partition \({\mathcal {K}}_h^l\) of \(\Omega \) and a corresponding finite element space \(V_h^l\subset H_0^1(\Omega )\) which is used as spatial trial and test space in the time interval \(J_l\). Denote by \(P_0(J_l,V_h^l)\) the space of constant functions on \(J_l\) with values in \(V_h^l\). Then we use as a trial and test space for the state equation in (P) the space

By introducing the notation

for the discontinuities of functions \(y_{sh}\in X_{sh}\) in time, we obtain the following fully discretized state equation: Find for a given \(u_{sh}\in L^2(0,T)\) a state \(y_{sh} \in X_{s,h}\) such that

where \(\langle \cdot ,\cdot \rangle _{J_l}:=\langle \cdot ,\cdot \rangle _{L^2(J_l;H^{-1}(\Omega )),L^2(J_l;H_0^1(\Omega ))}\), \((\cdot ,\cdot )_{J_l}:=(\cdot ,\cdot )_{L^2(J_l;\Omega )}\), and \((\cdot ,\cdot ):=(\cdot ,\cdot )_{L^2(\Omega )}\). Note that, for piecewise constant states \(y_{sh}\in X_{s,h}\), the term \(\langle \partial _t y_{sh}, \varphi \rangle _{J_l}\) in (11) is zero for all \(l=1,\ldots ,L\). We denote the discrete solution operator by \(S_{sh}:L^2(0,T)\rightarrow X_{s,h}\), i.e., \(y_{sh}=S_{sh}(u_{sh})\) satisfies the discrete state equation (11) for \(u_{sh}\in L^2(0,T)\). Finally, we use piecewise constant functions for the temporal discretization of the control function on the same temporal grid as for the state equation, i.e., we use the space

Altogether, the discretization of (\(\textrm{SPC}_{k}\)) is given as

Moreover, the Lagrangian \({\tilde{L}}:X_{s,h}\times Q_\rho \times X_{s,h}\times Q_\rho \times Q_\rho \times {\mathbb {R}}^k \rightarrow {\mathbb {R}}\) associated with (\(\textrm{SPC}{_{k\rho }}\)) results as

Based on this, we will devise a posteriori error estimates for both primal and dual bounds in the next sections.

6.2 A posteriori discretization error of dual bounds

Following the ideas of [29, 39], we now derive an a posteriori estimate for the error term \(J(y,u)-J(y_\rho ,u_\rho )\), where \((y,u)\in W(0,T)\times L^2(0,T)\) denotes the optimizer of (\(\textrm{SPC}_{k}\)) and \((y_\rho ,u_\rho )\in X_{s,h}\times Q_\rho \) the one of (\(\textrm{SPC}{_{k\rho }}\)). For this, let us write down the first-order optimality conditions of (\(\textrm{SPC}_{k}\)) and (\(\textrm{SPC}{_{k\rho }}\)) by means of the Lagrangian L and \({\tilde{L}}\), respectively. If \((y,u)\in W(0,T)\times L^2(0,T)\) is optimal for (\(\textrm{SPC}_{k}\)), then there exist multipliers \(p\in W(0,T)\), \(\mu ^+\in L^2(0,T)\), \(\mu ^-\in L^2(0,T)\) and \(\lambda \in {\mathbb {R}}^k\) such that for \(\chi :=(y,u,p,\mu ^+,\mu ^-,\lambda )\), we have

Analogously, if \((y_\rho ,u_\rho )\in X_{s,h}\times Q_\rho \) is optimal for (\(\textrm{SPC}{_{k\rho }}\)), then there exist \(p_\rho \in X_{s,h}\), \(\mu _\rho ^+\in Q_\rho \), \(\mu _\rho ^-\in Q_\rho \) and \(\lambda _\rho \in {\mathbb {R}}^k\) such that for \(\chi _\rho :=(y_\rho ,u_\rho ,p_\rho ,\mu _\rho ^+,\mu _\rho ^-,\lambda _\rho )\) we have

Using the shorthand notation

we have everything at hand to combine the results from [29] and [39] to obtain the following a posteriori discretization error estimation.

Theorem 6.1

Let \(\chi =(y,u,p,\mu ^+,\mu ^-,\lambda )\in {\mathcal {Y}}\) fulfill the first-order necessary optimality conditions (12a)–(12d) for (\(\textrm{SPC}_{k}\)) and \(\chi _\rho =(y_\rho ,u_\rho ,p_\rho ,\mu _\rho ^+,\mu _\rho ^-,\lambda _\rho )\in ~{\mathcal {Y}}_\rho \) the first-order necessary optimality conditions (13a)–(13d) for the discretized problem (\(\textrm{SPC}{_{k\rho }}\)). Then

Proof

The main arguments of the following proof are taken from the proofs of [29, Thm. 4.1] and [39, Thm. 4.2]. From the first-order optimality system (12a)–(12d) of \(\chi \in {{\mathcal {Y}}}\) for (\(\textrm{SPC}_{k}\)) we obtain \(J(y,u)=L(\chi )\). Analogously, the first-order conditions (13a)–(13d) of \(\chi _\rho \in {\mathcal {Y}}_\rho \) for ( \(\textrm{SPC}{_{k\rho }}\)) lead to \(J(y_\rho ,u_\rho )={\tilde{L}}(\chi _\rho )\). Moreover, it holds \(L(\chi )={\tilde{L}}(\chi )\) since the continuous embedding \(W(0,T)\hookrightarrow C([0,T];L^2(\Omega ))\) [41, Prop. 23.23] guarantees \(y\in W(0,T)\) to be continuous in time such that the additional jump terms in \({\tilde{L}}\) compared to L vanish. We thus obtain

Evaluation of the integral by the trapezoidal rule leads to

with the residual

Since the PDE contained in (\(\textrm{SPC}_{k}\)) as well as the control constraints in u are linear, and the objective is quadratic in y and u, respectively, we have \(R=0\).

We now have a closer look at the different error terms arising in (14). First, we have

because the other terms are zero thanks to the condition (12a), which can be seen as follows: since \(y\in W(0,T)\) is continuous in time due to \(W(0,T)\hookrightarrow C([0,T];L^2(\Omega ))\) by [41, Prop. 23.23], the additional terms in \({\tilde{L}}_y^\prime \) compared to \({L}_y^\prime \) and \({\tilde{L}}_p^\prime \) compared to \({L}_p^\prime \), respectively, vanish, so that (12a) immediately yields \({\tilde{L}}_y^\prime (\chi )(y)=0\) and \({\tilde{L}}_p^\prime (\chi )(p)=0\). Moreover, the continuity of y in time implies that \({\tilde{L}}_p^\prime (\chi )(p_\rho )=0\) can equivalently be expressed as

For the continuous state y, the state equation (9) implies that \((\varphi ,y(0))=(\varphi ,y_0)\) holds for all \(\varphi \in L^2(\Omega )\), so that the term \((y_0^+,p_{\rho ,0}^+)\) containing \(y(0)=y_0^+ \) cancels out with \((y_0,p_{\rho ,0}^+)\), as \(p_{\rho ,0}^+\in L^2(\Omega ),\) and it remains to ensure

Again from the continuous state equation (9), the latter equation is satisfied by y such that we obtain \({\tilde{L}}_p^\prime (\chi )(p_\rho )=0\), as desired. It remains to prove \({\tilde{L}}_y^\prime (\chi )(y_\rho )=0\). To this end, note that \(p\in W(0,T)\) is continuous with respect to time by [41, Prop. 23.23], so that we can rewrite \({\tilde{L}}_y^\prime (\chi )(y_\rho )=0\) after interval-wise integration by parts in W(0, T) [17] as

Using \(p_L^-=p(T)=0\) for the adjoint \(p\in W(0,T)\), the above equation becomes

By the adjoint equation (10) and the density of W(0, T) in \(L^2(0,T;H_0^1(\Omega ))\), the equation is satisfied by \(y_\rho \in L^2(0,T;H_0^1(\Omega ))\). We thus get \({\tilde{L}}_y^\prime (\chi )(y_\rho )=0\). Finally, (12a) directly yields \({\tilde{L}}_u^\prime (\chi )(u-u_\rho )=0\) because of \((u-u_\rho )\in L^2(0,T)\). The second term in (14) is given as

which completes the proof. \(\square \)

We need to further specify the estimation of the a posteriori error given in Theorem 6.1, since it contains the unknown solution \(\chi \in {\mathcal {Y}}\). A common approach in the context of the DWR method is to use higher-order approximations, which work satisfactorily in practice; see, e.g., [3]. Since our control function can only vary over time and the novelty of our approach lies primarily in the determination of the finitely many switching points, we assume for simplicity that there is no error caused by the spatial discretization of the state equation to keep the discussion concise. Thus, we only use a higher-order interpolation in time. For that, we introduce the piecewise linear interpolation operator \(I_s^{(1)}\) in time and map the computed solutions to the approximations of the interpolation errors

Then we obtain the approximations

Since the space of the Lagrange multiplier \(\lambda \) of the cutting planes is finite-dimensional and thus not implicitly discretized by the discretization of the control space, we may choose \(\lambda _\rho \) as higher-order interpolating and consequently neglect the error terms in \(\lambda \), i.e.,

Finally, as mentioned in [39], the control u typically does not possess sufficient smoothness, due to the box and cutting plane constraints. We thus suggest, as in [39], based on the gradient equation

and the resulting projection formula

the choice of

and

with \({\tilde{\mu }}^+,{\tilde{\mu }}^-\ge 0\) a.e. on (0, T). The computable error estimate is thus given as

with \({\tilde{\chi }}=(I_s^{(1)}y_\rho ,{\tilde{u}},I_s^{(1)}p_\rho ,{\tilde{\mu }}^+,{\tilde{\mu }}^-,\lambda _\rho )\).

As in [29], one could split the error \(J(y,u)-J(y_\rho ,u_\rho )\) into (a) the error caused by the semi-discretization of the state equation in time, (b) the error caused by the additional spatial discretization of the state equation, which we would consider as zero again, and (c) the error caused by the control space discretization. This would allow to choose different time grids for the state equation and the control space, where the former has to be at least as fine as the latter [29]. Since we are mostly interested in the combinatorial switching constraints, so that our focus is on the controls, we decided not to split the error and thus not to consider a finer temporal grid for the state.

As discussed in Sect. 3, the given fixings may determine parts of the switching pattern of u in (\(\textrm{SPC}_{k}\)). In this case, we need to calculate the a posteriori error (\(\textrm{E}_{\eta }\)) only on the unfixed control variables \(u|_{\mathcal {I}}\), as well as on the Lagrange multipliers \(\mu ^+,\mu ^-\in L^2({\mathcal {I}})\) corresponding to the box constraints, since we explicitly eliminated the fixed control variables from the problem (\(\textrm{SPC}_{k}\)). Then, it is clear that the terms \({\tilde{L}}_{u}^\prime (\chi _\rho )({\tilde{u}}-u_\rho )\), \({\tilde{L}}_{\mu ^+}^\prime ({\tilde{\chi }})({\tilde{\mu }}^+-\mu _\rho ^+)\), \({\tilde{L}}_{\mu ^-}^\prime ({\tilde{\chi }})({\tilde{\mu }}^--\mu _\rho ^-)\), \({\tilde{L}}_{\mu ^+}^\prime (\chi _\rho )({\tilde{\mu }}^+-\mu _\rho ^+)\), and \({\tilde{L}}_{\mu ^-}^\prime (\chi _\rho )({\tilde{\mu }}^--\mu _\rho ^-)\) in the error estimator (\(\textrm{E}_{\eta }\)) tend to zero for an increasing number of fixings satisfying the assumptions of Theorem 3.1, since the non-fixed part of the time horizon vanishes in this case. On the other hand, the error terms \({\tilde{L}}_y^\prime (\chi _\rho )(I_s^{(1)} y_\rho -y_\rho )\) and \({\tilde{L}}_p^\prime (\chi _\rho )(I_s^{(1)} p_\rho -p_\rho )\) reflect the error \(J(Su_\rho ,u_\rho )-J(y_\rho ,u_\rho )\) in the cost functional caused by calculating the discretized state \(y_\rho =S_{sh}(u_\rho )\) rather than \(Su_\rho \). This error is also taken into account in the primal bounds throughout our branch-and-bound scheme; see Sect. 6.3 below.

In summary, in order to numerically compute a safe dual bound for the subproblem (SP), we first calculate a solution \(u_\rho \) of the fully discretized problem (\(\textrm{SPC}{_{k\rho }}\)) with objective value \(J(y_\rho ,u_\rho )\) by means of the ADMM method, as described in Sect. 5.1. Second, we use \(J(y_\rho ,u_\rho )-e+\eta \) as a dual bound, where e denotes the absolute error in the primal objective caused by the ADMM algorithm, see (6), and \(\eta \) the a posteriori error of the discretization of (\(\textrm{SPC}_{k}\)); compare (\(\textrm{E}_{\eta }\)).

6.3 A posteriori discretization error of primal bounds

Every feasible solution \(u\in D\), e.g., obtained by applying primal heuristics as described in Sect. 5.2, leads to a primal bound J(Su, u) for the original problem (P). However, this bound is again subject to discretization errors. To estimate the latter, we first need to solve the fully discretized equation (11) to get a state \(y_{sh}=S_{sh}(u)\) and then to estimate the a posteriori error \(\nu :=J(Su,u)-J(S_{sh}u,u)\) in the cost functional. For the latter, we can again use the DWR method, which was originally invented to estimate the error in the cost function caused by the discretization of the state equation, see, e.g., [3]. We may directly apply [3, Prop. 2.4] to get the approximation

with \(\langle \partial _t y_{sh}, p_{sh}\rangle _{J_l}=0\) for \(l=1,\ldots ,L\), where \(p=S^*(y)\) and \(p_{sh}=S_{sh}^*(y_{sh})\) denotes the adjoint corresponding to the state \(y=S(u)\) and \(y_{sh}=S_{sh}(u)\), respectively. Assuming again that there is no error caused by the spatial discretization, we may use the piecewise linear interpolation \(I_s^{(1)}p_{sh}\) of \(p_{sh}\) in time to obtain the computable a posteriori error

Then \(J(S_{sh}u,u)+\nu \) is a safe primal bound for (P).

6.4 Adaptive refinement strategy

The central feature of our branch-and-bound algorithm is the approximate computation of an optimal solution for (P) in function space. In the limit, this solution does not depend on any predetermined discretization of the time horizon. However, in practice, we need to discretize our subproblems (SP) in order to numerically compute dual bounds, as described in Sect. 6.2. The main idea of our approach is to use a coarse temporal grid at the beginning, when the branchings have not yet determined a significant part of the switching structure, and then to refine the subintervals (only) if necessary.

More specifically, as long as the time-mesh dependent dual bound \(J(y_\rho ,u_\rho )-e\) for (SP) is below the best known primal bound, we proceed with the given discretization. Otherwise, we cannot find a better solution for (SP) for the given discretization. We then must decide whether better solutions for (SP) may potentially exist when using a finer temporal grid. This is the case if and only if the time-mesh independent bound \(J(y_\rho ,u_\rho )-e+\eta \) is still below the primal bound PB. We thus have to refine the grid whenever

If even \(J(y_\rho ,u_\rho )-e+\eta \) exceeds the primal bound, we can prune the subproblem. Indeed, in this case we cannot find better solutions for the subproblem even in function space.

The adaptive refinement of the temporal grid is guided by the a posteriori error estimation of the discretization proposed in Sect. 6.2. The error estimator (\(\textrm{E}_{\eta }\)) can be easily split into its contribution on each subinterval \(J_l\), i.e.,

with the local error contributions \(\eta _l\) on \(J_l\) for \(l=1,\ldots ,L.\) Note that this splitting is directly possible since we assumed that there is no error caused by the spatial discretization of the state equation, and thus no further localization on each spatial mesh is needed. A popular strategy for mesh adaptation is to order the subintervals according to the absolute values of their error indicators in descending order, i.e., to find a permutation \(\varrho \) of \(\{1,\ldots ,L\}\) such that \(|\eta _{\varrho (1)}| \ge \cdots \ge |\eta _{\varrho (L)}|\), and then to refine the subintervals which make up a certain percentage \(\gamma >0\) of the total absolute error, i.e., the subintervals \(J_{\varrho (1)},\ldots ,J_{\varrho (L_\gamma )}\) with

The resulting subproblem (\(\textrm{SPC}{_{k\rho }}\)) with respect to the refined discretization again has to be solved by Algorithm 1. As a reoptimization strategy, the values of the prior discretized solution \((u_\rho ,v_\rho ,\lambda _\rho ,w_\rho ,\mu _\rho )\) returned by Algorithm 1 can be used to initialize the variables in Step 1. More precisely, the values of \((u_\rho ,w_\rho ,\mu _\rho )\) can be duplicated according to the refinement of the subintervals and \((v_\rho ,\lambda _\rho )\) can be kept unchanged. In this way, we produce a primal feasible solution \((u_\rho ,v_\rho ,w_\rho )\) for the new subproblem (\(\textrm{SPC}{_{k\rho }}\)), but note that \((\lambda _\rho ,\mu _\rho )\) is not feasible for the corresponding dual problem.

7 Numerical experiments

We now report the results of an extensive numerical evaluation of our branch-and-bound algorithm presented in the previous sections. The overall branch-and-bound method has been implemented in C++, using the DUNE-library [36] for the discretization of the PDE. The source code can be downloaded at https://github.com/agruetering/dune-bnb. For all experiments, we discretize the problems as described in Sect. 6.1. This means that the spatial discretization uses a standard Galerkin method with continuous and piecewise linear functionals, while the temporal discretization for the control, the state, and the desired state \(y_d \) uses piecewise constant functionals in time. The spatial integrals in the weak formulation of the state equation (9) and the adjoint equation (10), respectively, are approximated by a Gauss-Legendre rule with order 3. This means that all spatial integrals except for the one containing the form function \(\varphi \) are calculated exactly. The discretized systems, arising from the discretization of the state and adjoint equation, are solved by a sequential conjugate gradient solver preconditioned with AMG smoothed by SSOR. All computations have been performed on a 64bit Linux system with an Intel Xeon E5-2640 CPU @ 2.5 GHz and 32 GB RAM.

7.1 Algorithmic framework

We start the branch-and-bound algorithm with an equidistant time grid with 20 nodes and, if necessary, we refine the subintervals that account for \(\gamma =50\,\%\) of the total error; see Sect. 6.4. The choice of the time point \(\tau \) for the branching is crucial for the practical performance of the algorithm, since the implicit restrictions on the controls are highly influenced by the branching points; see Example 3.3 and Example 3.4. Thus, the quality of the dual bounds of each node in the branch-and-bound tree strongly depends on the branching decisions. As already mentioned in Sect. 3, it is natural to take the last computed relaxed control of the outer approximation algorithm into account, which we know up to a discretization of (0, T); see Sect. 6.1. As a branching point, we choose the point of the time grid where the control has the highest deviation from 0/1, i.e., where the distance to \(\{0,1\}\) multiplied by the length of the corresponding grid cell is maximal. This branching strategy corresponds to the choice of the variable with the most fractional value in finite-dimensional integer optimization. Finally, we use breadth-first search as an enumeration strategy since our computed primal bounds track the average of the relaxed solution over the given temporal grid of the discretization, i.e., solve the CIA problem over \(D(\sigma )\); compare Example 5.1. In depth-first search, the shape of the computed relaxed controls for the subproblems hardly changed, so that our primal heuristic always produced the same feasible solution and good primal bounds were found late. As a result, many nodes had to be examined before pruning. This effect is avoided by breadth-first search.

The results presented in [7] suggest to add only a few cutting planes before resorting to branching, because a significant increase in the dual bound was mostly obtained in the first cutting plane iterations. Moreover, we observed that the dual bounds got better with a decreasing Tikhonov parameter \(\alpha \), but the time needed to compute them increased with decreasing \(\alpha \). Thus, we investigate in Sect. 7.3 whether a good quality or a quick computation of the dual bounds have a greater influence on the overall performance.

The parabolic optimal control problems arising in each iteration of the outer approximation algorithm are solved by the ADMM algorithm; see Algorithm 1 in Sect. 5.1. As tolerances for the primal and dual residuals in the ADMM algorithm, we chose \(\varepsilon ^\text {rel}=\varepsilon ^\text {abs}=10^{-3}\) and required the absolute error of the discretization of (\(\textrm{SPC}{'_k}\)) to be less than \(\varepsilon ^\text {pr}=10^{-5}\). In order to guarantee the numerical stability of the ADMM algorithm, the penalty parameter of the cutting planes was set to \(\rho =\tfrac{1+\sqrt{5}}{2}\). The best choice of the penalty term \(\beta \) of the box constraints depending on the Tikhonov term \(\alpha \) is investigated in Sect. 7.3. The resulting linear system in Step 3 of Algorithm 1 is solved by the conjugate gradient method, preconditioned with \(P=(\alpha +\beta ) I + \rho G^\star G.\)

7.2 Instances

In all experiments, we focus on the case of an upper bound \(\sigma \) on the number of switchings, i.e., we consider the feasible set

as defined in Sect. 2.3. However, we assume that u is fixed to zero before the time horizon, so that we already count it as one switching if u is 1 at the beginning. Notwithstanding this slight modification, the most violated cutting plane for a given vector \(v\notin C_{D(\sigma )_ SP ,\Pi }\) can be computed in \(O(M+N)\) time as discussed in Sect. 4.1, using the separation algorithm presented in [8]. This separation algorithm is thus fast enough to allow to choose the intervals for the projection exactly as the intervals given by the discretization in time; compare Sect. 6.1.

We created instances of (P) with \(\Omega =(0,1)\), \(T=1\textrm{, and }\psi (x)=\exp (x)\sin (\pi \,x)+0.5.\) In order to obtain challenging instances, we produced the desired state \(y_d \) as follows: we first generated a control \(u_d :[0,T] \rightarrow \{0,1\}\) with a total variation \(|u_d |_{BV(0,T)}=\theta \) and chose the desired state \(y_d \textrm{as }S(u_d )\), such that \(u_d \) is the optimal solution for Problem (P) if we allow \(\theta \) switchings. More specifically, we randomly chose \(\theta \) jump points \(0< t_1< \cdots<t_{\theta } < T\) on the equidistant time grid with 320 nodes. Then, we chose \(u_d :[0,T]\rightarrow \{0,1\}\) as the binary control starting in zero and having the switching points \(t_1,\ldots ,t_{\theta }\). In this way, we generated non-trivial instances, where the constraint \(D(\sigma )\) strongly affects the optimal solution of (P) in case \(\sigma \ll \theta \).

7.3 Parameter tuning

Before testing the potential of our approach, we investigate the influence of some parameters on the overall performance. We first consider the Tikhonov term \(\alpha \) and the penalty term \(\beta \) of the box constraints; see Sect. 5.1. Afterwards, we investigate how time-consuming it is to solve the subproblems arising in the branch-and-bound algorithm, depending on when we stop the outer approximation algorithm for each subproblem (SP). Here, we resort to branching if the relative change of the bound is less than a certain percentage (RED) in three successive iterations. Finally, we vary the allowed relative deviation (TOL) of the objective value of the returned solution from the optimal value of (P); a subproblem in the branch-and-bound node is pruned when the remaining gap between primal and dual bound falls below this relative threshold. We start with RED \(=\) TOL \(=\) 1 %.

For all results presented in this subsection, we have chosen the same instance with \(\theta =8\) jump points and allowed \(\sigma =3\) switchings, since we observed the typical behavior of the algorithm with these settings. We always report the overall number of investigated subproblems (Subs), of cutting plane iterations (Cuts), and of ADMM iterations (ADMM). Moreover, the average number of fixings (\(\varnothing \) FixPoints) and the average percentage of control variables that are implicitly fixed (\(\varnothing \) FixIndices) are reported, where both averages are taken over all pruned subproblems. We also provide the overall run time (Time) in CPU hours.

The results for different values of \(\alpha \) and \(\beta \) can be found in Table 1. The main message of Table 1 is that a small value of \(\alpha \) is generally favorable for the branch-and-bound algorithm, since a smaller value of \(\alpha \) leads to stronger dual bounds and consequently, fewer fixings are needed on average to prune a subproblem. So, as long as no numerical issues arise with the ADMM algorithm and the DWR error estimator, one should choose \(\alpha =0.001\). But, with smaller value of \(\alpha \) it becomes more likely that the higher-order approximation of the unknown quantities (see Sect. 6.3) is too imprecise to estimate the error in the cost functional, so that the branch-and-bound algorithm returns wrong solutions. This was also observed in our experiments: in many instances, the obtained solutions for \(\alpha \in \{0.01,0.005\}\) switched three times and had very similar switching times for all values of \(\beta \). In contrast, the obtained solutions for \(\alpha =0.001\) frequently switched only twice and differed enormously from the others. By recalculating the objective on such a fine grid that all returned solutions are piecewise constant on it, it turned out that the solutions obtained for \(\alpha \in \{0.01,0.005\}\) were indeed better than the ones for \(\alpha =0.001\). Moreover, the primal heuristic even produced some of the better solutions within the branch-and-bound scheme for \(\alpha =0.001\), but due to the DWR error estimator, their time-mesh independent objective values were worse. For that reason, we choose \(\alpha =\beta =0.005\) in all subsequent experiments.

We next investigate the interplay between branching and outer approximation. Table 2 demonstrates that a good balance is important: a stronger focus on the outer approximation leads to fewer branching decisions needed to cut off a subproblem. However, this does not necessarily imply that fewer fixings are needed to prune a subproblem, since the branching points strongly depend on the shape of the relaxed solutions. Moreover, it is more time-consuming to solve each node due to the increased number of cutting plane iterations. On the other hand, it is also not beneficial to resort to branching too early because more subproblems need to be investigated then. We thus use RED = 2% in the following.

Finally, the impact of the relative allowed deviation from the optimal objective value on the performance of the branch-and-bound algorithm is shown in Table 3. As expected, a higher tolerance leads to an earlier pruning of the subproblems, as indicated by the number of fixings required to prune a subproblem, and the running time decreases significantly. At the same time, however, the best known primal bound (Obj) found by the algorithm obviously increases, so that ultimately the user has to decide which deviation is still acceptable. We choose TOL = 2% in the following, which we think is a reasonable optimality tolerance.

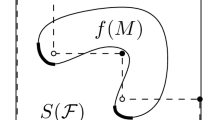

Complete branch-and-bound tree of an instance generated with \(\theta =3\) jump points and with \(\sigma =1\) allowed switchings. The path of the optimal solution is marked in bold and the branching decisions along the optimal path are listed. In the case of a single child node, the temporal discretization of the subproblem has been refined

7.4 Performance of the algorithm

Before reporting running times and other key performance indicators of our algorithm, we first illustrate the interplay between branching and adaptive refinement by an example. Figure 2 shows the complete branch-and-bound tree obtained for an instance with \(\theta =3\) jump points and only one allowed switching, i.e., \(\sigma =1\). Whenever a node has a single child node in the illustration, the discretization of the subproblem has been refined. The branch-and-bound tree shows that a large part of the generated subproblems can already be pruned without any refinement. Moreover, in relatively few branches the subproblems need to be refined multiple times in order to decide whether a solution of desired quality can be found in these branches. The branching decisions taken along the path leading to the returned solution illustrate that, e.g., the generated subproblem 16 was refined in order to choose the sixth fixing point as \(\tau _6=0.225\). This was not possible with the previous discretization of the problem. In particular, the fourth and fifth fixing point together have limited the switching point to be in the interval (0.2, 0.25]. The last branching decision in this tree serves to determine \(t=0.2375\) as the switching point of the returned solution.

Table 4 shows the performance of the branch-and-bound algorithm for various instances generated with \(\theta \in \{1,\ldots ,8\}\) and \(\sigma \in \{1,\ldots ,4\}\) for the total number of switching points. We were able to solve problems with up to four allowed switchings, but, as could be expected, the number of generated subproblems strongly increases in \(\sigma \). However, we note that the ratio between generated subproblems and total cutting plane iterations is not affected by \(\sigma \). While the branch-and-bound algorithm is able to solve problems with \(\sigma =3\) within 14 CPU hours, the algorithm does not terminate within 60 CPU hours for most instances with \(\sigma =4\) allowed switchings. However, the results of Table 4 show that the average number of subproblems in the branch-and-bound-tree remains relatively small for all instances, showing that the dual bounds computed by our algorithm are rather tight, and that the main challenge in terms of running times is the fast computation of these dual bounds.

Moreover, the reported results show that our approach to globally solve parabolic optimal control problems with dynamic switches by means of branch-and-bound, combined with an adaptive refinement strategy, works in practice. Whenever the maximal number of refinements of a grid cell within the branch-and-bound algorithm was larger than 4 in our experiments, a grid cell was refined this often in less than \(10\,\%\) of the subproblems. Here, the finest grid mesh size decreases with the number of allowed switching points. This means that, if more switchings are allowed, a finer temporal discretization is needed to detect the optimal positions of the switching points.

In summary, our proposed branch-and-bound method is an effective and robust algorithm to globally solve control problems of the form (P). A few pointwise fixings of the controls suffice to significantly truncate the set of feasible switching patterns. Moreover, thanks to the computation of tight dual bounds by means of outer approximation, relatively few subproblems need to be inspected and refined within the branch-and-bound algorithm.

Data Availability