Abstract

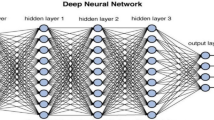

With the rapid development of artificial intelligence, the Internet of Things, 5G, and other technologies, a number of emerging intelligent applications have appeared, such as image recognition, voice recognition, autonomous driving, and intelligent manufacturing. These applications require efficient, intelligent processing systems for massive data computations, so there is an urgent need to deploy better DNNs faster. Compared with GPUs, FPGAs have a higher energy efficiency ratio, and compared with ASICs, they have a shorter development cycle and better flexibility. However, the FPGA is not a perfect hardware platform for computational intelligence either. This paper surveys the latest acceleration work on common DNNs and proposes three new directions for breaking through the bottlenecks of DNN implementation. To improve the computing speed and energy efficiency of edge devices, the most commonly used intelligent embedded approaches are model compression and optimized data movement across the entire system. As Moore’s Law gradually slows, the traditional von Neumann architecture, which separates computation from memory, runs into the “Memory Wall” problem, and the resulting data movement drives up power consumption. In-memory computing will be the right remedy in the post-Moore’s Law era. A more complete software/hardware co-design environment will let researchers focus on exploring deep learning algorithms and run those algorithms on hardware faster. These new directions open a relatively new paradigm in computational intelligence. They have attracted substantial attention from the research community and have demonstrated greater potential than traditional techniques.

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grant 61836010.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

You, Y., Chang, Y., Wu, W. et al. New paradigm of FPGA-based computational intelligence from surveying the implementation of DNN accelerators. Des Autom Embed Syst 26, 1–27 (2022). https://doi.org/10.1007/s10617-021-09256-8

DOI: https://doi.org/10.1007/s10617-021-09256-8