Abstract

A key feature of embodied education is the participation of the learners’ bodies and minds with the environment. Yet, little work has been done to review the state of embodied education with Artificial Intelligence (AI). The goal of this systematic review is to examine the state of the engagement triad of humans and AI in education, that is, the mind, body, and environment. Through a review of N = 38 articles retrieved from SCOPUS and Web of Science (WoS), we code and analyze which of the mind, body, and environment is present in each engagement reported per reviewed study. Further, we examine which entity, technology (which may include AI), human, or human + technology, is present in each engagement. We summarize the demographic and embodied trends in the reviewed studies. Findings of our review show that, among the body, mind, and environment triad, the mind is the most frequently present in the studies. The reviewed studies are most often concerned with the technical aspects of embodied AI in education, concentrating on algorithms and on the accuracy of facial expression, speech, and similar modalities. Far less attention has been paid to other important learner needs. The contribution of this work lies in presenting a blueprint of current research on embodied AI in education, identifying implications for research, and offering a classification that includes the environment-body-mind triad and three possible entities per triad, namely the human, technology/AI, or human + technology. Future work needs to examine the combinations in which the engagement triad and entities may be present and their impact on humans’ well-being and on research in the field overall.
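The classification described above can be pictured as a small coding record per engagement. The following is a minimal, hypothetical sketch, not the authors' coding instrument. It assumes that each engagement is coded by which triad elements (mind, body, environment) are present and that each present element is carried by exactly one entity (human, technology/AI, or human + technology). The names `Element`, `Entity`, `Engagement`, `presence_counts`, and `possible_combinations`, as well as the sample records, are invented for illustration and do not come from the reviewed studies.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass, field
from enum import Enum
from itertools import product


class Element(Enum):
    """The three parts of the engagement triad."""
    MIND = "mind"
    BODY = "body"
    ENVIRONMENT = "environment"


class Entity(Enum):
    """The three possible entities (hypothetical per-element assumption)."""
    HUMAN = "human"
    TECHNOLOGY = "technology/AI"
    HUMAN_PLUS_TECHNOLOGY = "human + technology"


@dataclass
class Engagement:
    """One coded engagement: maps each present triad element to its entity."""
    study_id: str
    coding: dict[Element, Entity] = field(default_factory=dict)


def presence_counts(engagements: list[Engagement]) -> Counter:
    """Count in how many engagements each triad element is present."""
    counts: Counter = Counter()
    for engagement in engagements:
        counts.update(engagement.coding.keys())
    return counts


def possible_combinations() -> list[dict[Element, Entity]]:
    """Enumerate every non-empty coding: each element is absent (None) or carried by one entity."""
    options = [None, *Entity]
    combos = []
    for choice in product(options, repeat=len(Element)):
        coding = {el: ent for el, ent in zip(Element, choice) if ent is not None}
        if coding:  # skip the coding in which no element is present
            combos.append(coding)
    return combos


if __name__ == "__main__":
    # Made-up records for illustration only; not data from the review.
    sample = [
        Engagement("A", {Element.MIND: Entity.TECHNOLOGY}),
        Engagement("B", {Element.MIND: Entity.HUMAN,
                         Element.BODY: Entity.HUMAN_PLUS_TECHNOLOGY}),
        Engagement("C", {Element.ENVIRONMENT: Entity.TECHNOLOGY}),
    ]
    counts = presence_counts(sample)
    print(counts[Element.MIND])           # 2
    print(len(possible_combinations()))   # 63
```

Under this assumption, each of the three elements has four states (absent or one of three entities), giving 4^3 − 1 = 63 non-empty codings. Enumerating them makes concrete the space of combinations that future work is asked to examine. If the entity were instead coded once per engagement rather than per element, the count would drop to 7 × 3 = 21.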

Data availability

Data sharing does not apply to this article as no datasets were generated or analyzed during the current study.

References

Aaron, E., Mendoza, J. P., & Admoni, H. (2011). Integrated dynamical intelligence for interactive embodied agents. In ICAART 2011: Proceedings of the 3rd International Conference on Agents and Artificial Intelligence, VOL 2 (Issue PG-296-301, pp. 296–301). NS -.

Al Moubayed, S., Beskow, J., Bollepalli, B., Hussen-Abdelaziz, A., Johansson, M., Koutsombogera, M., Lopes, J. D., Novikova, J., Oertel, C., Skantze, G., Stefanov, K., & Varol, G. (2014). Tutoring robots multiparty multimodal social dialogue with an embodied tutor. In Innovative and Creative Developments in Multimodal Interaction Systems (Vol. 425, Issue PG-80-+, pp. 80-+). NS -.

Anderson, M. L. (2003). Embodied cognition: A field guide. Artificial Intelligence, 149(1), 91–130.

Andolina, S., Santangelo, A., & Gentile, A. (2010). Adaptive voice interaction for 3D representation of cultural heritage site. In Proceedings of the International Conference on Complex, Intelligent and Software Intensive Systems (CISIS 2010) (Issue PG-729-733, pp. 729–733). https://doi.org/10.1109/CISIS.2010.139.

Benjamin, D. P., Lyons, D., & Lonsdale, D. (2006). Embodying a cognitive model in a mobile robot. Proceedings of Spie The International Society for Optical Engineering, 6384(PG-), https://doi.org/10.1117/12.686163.

Bresler, L. (2013). Knowing bodies, moving minds: Towards embodied teaching and learning (3.). Springer Science & Business Media.

Burden, D. J. (2008). Deploying embodied AI into virtual worlds. International Conference on Innovative Techniques and Applications of Artificial Intelligence, 103–115.

Canamero, L. (2021). Embodied robot models for interdisciplinary emotion research. IEEE Transactions on Affective Computing, 12(2 PG-340–351), 340–351. https://doi.org/10.1109/TAFFC.2019.2908162.

Chaplot, D. S., Dalal, M., Gupta, S., Malik, J., & Salakhutdinov, R. (2021). SEAL: Self-supervised embodied active learning using exploration and 3D consistency. In Advances in Neural Information Processing Systems 34 (NEURIPS 2021) (Vol. 34, Issue PG-). NS -.

Choi, Y., Kim, N., Park, J., & Oh, S. (2020). Viewpoint estimation for visual target navigation by leveraging keypoint detection. Int. Conf. Control, Autom. Syst, 2020-Octob(PG-1162-1165), 1162–1165. https://doi.org/10.23919/ICCAS50221.2020.9268215.

Cubero, C. G., Pekarik, M., Rizzo, V., & Jochum, E. (2021). The robot is present: Creative approaches for artistic expression with robots. Frontiers in Robotics and AI, 8(PG-). https://doi.org/10.3389/frobt.2021.662249.

Damiano, L., & Stano, P. (2021). A wetware embodied AI? Towards an autopoietic organizational approach grounded in synthetic biology. Frontiers in Bioengineering and Biotechnology, 9, 724023.

de Back, T. T., Tinga, A. M., Nguyen, P., & Louwerse, M. M. (2020). Benefits of immersive collaborative learning in CAVE-based virtual reality. International Journal of Educational Technology in Higher Education, 17, https://doi.org/10.1186/s41239-020-00228-9.

Debenham, J., & Simoff, S. (2012). “Believable” agents build relationships on the web. In Distributed Computing and Artificial Intelligence (Vol. 151, Issue PG-65-+, pp. 65-+). NS -.

Dickinson, B. C., Jenkins, O. C., Moseley, M., Bloom, D., & Hartmann, D. (2007). Roomba pac-man: Teaching autonomous robotics through embodied gaming. AAAI Spring Symp. Tech. Rep, SS-07-09, 35–39. https://www.scopus.com/record/display.uri?eid=2-s2.0-37349037861&origin=inward&txGid=9256517655484ce2b6cb0fbb4862b592.

Duan, J. F., Jian, S. Y. B., & Tan, C. O. (2021). SPACE: A simulator for physical interactions and causal learning in 3D environments. In 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW 2021) (Issue PG-2058-2063, pp. 2058–2063). https://doi.org/10.1109/ICCVW54120.2021.00233.

Duan, J., Yu, S., Tan, H. L., Zhu, H., & Tan, C. (2022). A survey of embodied AI: From simulators to research tasks. IEEE Transactions on Emerging Topics in Computational Intelligence, 6(2), 230–244.

Echeverria, V., Martinez-Maldonado, R., Yan, L. X., Zhao, L. X., Fernandez-Nieto, G., Gasevic, D., & Shum, S. B. (2021). HuCETA: A framework for human-centered embodied teamwork analytics. IEEE Pervasive Computing. https://doi.org/10.1109/MPRV.2022.3217454.

Fan, X. C., Yen, J., Miller, M., Ioerger, T. R., & Volz, R. (2006). MALLET - A Multi-Agent Logic Language for Encoding Teamwork. IEEE Transactions on Knowledge and Data Engineering, 18(1 PG-123–138), 123–138. https://doi.org/10.1109/TKDE.2006.13.

Figat, M., & Zielinski, C. (2023). Synthesis of robotic system controllers using robotic system specification language. IEEE Robotics and Automation Letters, 8(2), 688–695. https://doi.org/10.1109/LRA.2022.3229231.

Fitton, I. S., Finnegan, D. J., & Proulx, M. J. (2020). Immersive virtual environments and embodied agents for e-learning applications. PEERJ Computer Science, 6:e315. https://doi.org/10.7717/peerj-cs.315.

Fogel, A., Swart, M., Scianna, J., Berland, M., & Nathan, M. J. (2021). Design for remote embodied learning: The hidden village-online. In 29th International Conference on Computers in Education (ICCE 2021), VOL II (Issue PG-247–252, pp. 247–252). NS -.

Foglia, L., & Wilson, R. A. (2013). Embodied cognition. Wiley Interdisciplinary Reviews: Cognitive Science, 4(3), 319–325.

Francesconi, D., & Tarozzi, M. (2012). Embodied education: A convergence of phenomenological pedagogy and embodiment. Studia Phaenomenologica, 12, 263–288.

Gao, Q., Thattai, G., Gao, X., Shakiah, S., Pansare, S., Sharma, V., & Natarajan, P. (2023). Alexa arena: A user-centric interactive platform for embodied ai. ArXiv Preprint.

Griol, D., Molina, J. M., & Callejas, Z. (2014). An approach to develop intelligent learning environments by means of immersive virtual worlds. J. Ambient Intell. Smart Environ, 6(2 PG-237–255), 237–255. https://doi.org/10.3233/AIS-140255.

Hegna, H. M., & Ørbæk, T. (2021). Traces of embodied teaching and learning: A review of empirical studies in higher education. Teaching in Higher Education, 1–22.

Hughes, J., Abdulali, A., Hashem, R., & Iida, F. (2022). Embodied Artificial Intelligence: Enabling the next intelligence revolution (Vol. 1261, No. 1, p. 012001). IOP Conference Series: Materials Science and Engineering.

Husbands, P., Shim, Y., Garvie, M., Dewar, A., Domcsek, N., Graham, P., Knight, J., Nowotny, T., & Philippides, A. (2021). Recent advances in evolutionary and bio-inspired adaptive robotics: Exploiting embodied dynamics. Appl Intell, 51(9 PG-6467–6496), 6467–6496. https://doi.org/10.1007/s10489-021-02275-9.

Johal, W., Bruno, B., Olsen, J. K., Chetouani, M., Lemaignan, S., & Sandygulova, A. (2021). Robots for learning - learner centred design. In HRI ’21: Companion of the 2021 ACM/IEEE International Conference on Human-Robot Interaction (Issue PG-715-716, pp. 715–716). https://doi.org/10.1145/3434074.3444873.

Johnson, W. L. (2001). Pedagogical agent research at CARTE. AI Mag, 22(4 PG-85–94), 85–94. https://www.scopus.com/inward/record.uri?eid=2-s2.0-0035695995&partnerID=40&md5=6c1144a7bd966ded2c380f37e3fc91c6 NS -.

Kenny, P., Hartholt, A., Gratch, J., Traum, D., Marsella, S., & Swartout, B. (2007). The more the merrier: Multi-party negotiation with Virtual Humans. Proc Natl Conf Artif Intell, 2(PG-1970-1971), 1970–1971. https://www.scopus.com/inward/record.uri?eid=2-s2.0-36348955482&partnerID=40&md5=72d3eecf13bbafbb8b5f7d3fc15cbc74 NS -.

Koster, N., Wrede, S., & Cimiano, P. (2018). An ontology for modelling human machine interaction in smart environments. In Proceedings of Sai Intelligent Systems Conference (Intellisys) 2016, VOL 2 (Vol. 16, Issue PG-338-350, pp. 338–350). https://doi.org/10.1007/978-3-319-56991-8_25.

Kovacs, A., & Jain, T. (2020). Informed consent-said who? A feminist perspective on principles of consent in the age of embodied data. SSRN.

Kovalev, A. K., & Panov, A. I. (2022). Application of pretrained large language models in embodied artificial intelligence. Doklady Mathematics, 106(1), 85–90.

Krishnaswamy, N., & Pustejovsky, J. (2018). Deictic adaptation in a virtual environment. In Spatial Cognition XI, Spatial Cognition 2018 (Vol. 11034, Issue PG-180-196, pp. 180–196). https://doi.org/10.1007/978-3-319-96385-3_13.

Küpers, W. (2014). Phenomenology of the embodied organization: The contribution of Merleau-Ponty for organizational studies and practice. Springer.

Lee, E. S., Kim, J., Park, S., & Kim, Y. M. (2022). MoDA: Map style transfer for self-supervised domain adaptation of embodied agents. In Computer Vision, ECCV 2022, PT XXXIX (Vol. 13699, Issue PG-338-354, pp. 338–354). https://doi.org/10.1007/978-3-031-19842-7_20.

Leigh, J. (2016). An embodied perspective on judgements of written reflective practice for professional development in higher education. Reflective Practice, 17(1), 72–85.

Li, J. C., Tang, S. L., Wu, F., & Zhuang, Y. T. (2019). Walking with MIND: Mental Imagery eNhanceD Embodied QA. In Proceedings of the 27th ACM International Conference on Multimedia (MM’19) (Issue PG-1211-1219, pp. 1211–1219). https://doi.org/10.1145/3343031.3351017.

Li, X. H., Guo, D., Liu, H. P., & Sun, F. C. (2022). REVE-CE: Remote Embodied Visual Referring Expression in Continuous Environment. IEEE Robotics and Automation Letters, 7(2 PG-1494–1501), 1494–1501. https://doi.org/10.1109/LRA.2022.3141150.

Li, C., Zhang, R., Wong, J., Gokmen, C., Srivastava, S., Martín-Martín, R., & Fei-Fei, L. (2023). Behavior-1k: A benchmark for embodied ai with 1,000 everyday activities and realistic simulation. Conference on Robot Learning, 80–93.

Lindgren, R., & Johnson-Glenberg, M. (2013). Emboldened by embodiment: Six precepts for research on embodied learning and mixed reality. Educational Researcher, 42(8), 445–452.

Linson, A., Clark, A., Ramamoorthy, S., & Friston, K. (2018). The active inference approach to ecological perception: General inforation dynamics for natural and artifical embodied cognition. Frontiers in Robotics and AI, 5(PG-). https://doi.org/10.3389/frobt.2018.00021.

Liu, S. G., & Ba, L. (2021). Construction and implementation of embodied mixed-reality learning environments. In 2021 International Conference on Big Data Engineering and Education (BDEE 2021) (Issue PG-126-131, pp. 126–131). https://doi.org/10.1109/BDEE52938.2021.00029.

Macrine, S. L., & Fugate, J. (2021). Translating embodied cognition for embodied learning in the classroom. Frontiers in Education, 6, 712626.

McShane, N., McCreadie, K., Charles, D., Korik, A., & Coyle, D. (2022). Online 3D motion decoder BCI for embodied virtual reality upper limb control: A pilot study. In 2022 IEEE International Conference on Metrology for Extended Reality, Artificial Intelligence and Neural Engineering (Metroxraine) (Issue PG-697–702, pp. 697–702). https://doi.org/10.1109/MetroXRAINE54828.2022.9967577

Merriam-Webster (2023). embody. https://www.merriam-webster.com/dictionary/embodied.

Nelekar, S., Abdulrahman, A., Gupta, M., & Richards, D. (2022). Effectiveness of embodied conversational agents for managing academic stress at an Indian University (ARU) during COVID-19. Br J Educ Technol, 53(3 PG-491–511), 491–511. https://doi.org/10.1111/bjet.13174.

Pfeifer, R. (2001). Embodied artificial intelligence 10 years back, 10 years forward. Informatics (pp. 294–310). Springer.

Rathunde, K. (2009). Nature and embodied education. The Journal of Developmental Processes, 4(1), 70–80.

Rodemeyer, L. M. (2020). Levels of Embodiment. In Time and Body: Phenomenological and Psychopathological Approaches.

Shapiro, L., & Stolz, S. A. (2019). Embodied cognition and its significance for education. Theory and Research in Education, 17(1), 19–39.

Shu, X., & Gu, X. (2023). An empirical study of a smart education model enabled by the edu-metaverse to enhance better learning outcomes for students. Systems, 11, https://doi.org/10.3390/systems11020075. 2 PG-).

Sinha, T., & Malhotra, S. (2022). Embodied Agents to Scaffold Data Science Education. Lecture Notes in Computer Science, 13356 LNCS, 150–155. https://doi.org/10.1007/978-3-031-11647-6_26.

Skulmowski, A., & Rey, G. D. (2017). Measuring cognitive load in embodied learning settings. Frontiers in Psychology, 8, 1191.

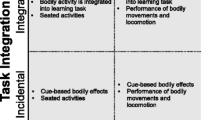

Skulmowski, A., & Rey, G. D. (2018). Embodied learning: Introducing a taxonomy based on bodily engagement and task integration. Cognitive Research: Principles and Implications, 3(1), 1–10.

Wachsmuth, I., & Knoblich, G. (2005). Embodied communication in humans and Machines - a research agenda. Artificial Intelligence Review, 24(3–4), 517–522. https://doi.org/10.1007/s10462-005-9015-5. PG-517–522.

Weihs, L., Salvador, J., Kotar, K., Jain, U., Zeng, K. H., Mottaghi, R., & Kembhavi, A. (2020). Allenact: A framework for embodied ai research. ArXiv Preprint.

Xu, J., Nagai, Y., Takayama, S., & Sakazawa, S. (2015). Developing embodied agents for education applications with accurate synchronization of gesture and speech. Lecture Notes in Computer Science, 9420(PG-1-22), 1–22. https://doi.org/10.1007/978-3-319-27543-7_1.

Yang, J. W., Ren, Z. L., Xu, M. Z., Chen, X. L., Crandall, D. J., Parikh, D., & Batra, D. (2019). Embodied amodal recognition: Learning to move to perceive objects. In 2019 IEEE/CVF International Conference on Computer Vision (ICCV 2019) (Issue PG-2040-2050, pp. 2040–2050). https://doi.org/10.1109/ICCV.2019.00213.

Yang, B. L., Xie, X. Y., Habibi, G., & Smith, J. R. (2021). Competitive physical human-robot game play. In HRI ’21: Companion of the 2021 ACM/IEEE International Conference on Human-Robot Interaction (Issue PG-242-246, pp. 242–246). https://doi.org/10.1145/3434074.3447168.

Zeng, K. H., Weihs, L., Farhadi, A., & Mottaghi, R. (2021). Pushing it out of the way: Interactive visual navigation. In 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2021 (Issue PG-9863-9872, pp. 9863–9872). https://doi.org/10.1109/CVPR46437.2021.00974.

Zhang, W., Chen, Z., & Zhao, R. (2021). A review of embodied learning research and its implications for information teaching practice. IEEE 3rd International Conference on Computer Science and Educational Informatization (CSEI), 27–34.

Acknowledgements

This study was funded by the Canada Research Chairs Program and the Canada Foundation for Innovation.

Ethics declarations

Conflict of interest

None.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Memarian, B., Doleck, T. Embodied AI in education: A review on the body, environment, and mind. Educ Inf Technol 29, 895–916 (2024). https://doi.org/10.1007/s10639-023-12346-8

DOI: https://doi.org/10.1007/s10639-023-12346-8