Abstract

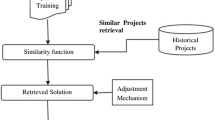

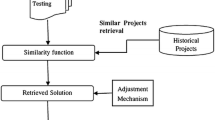

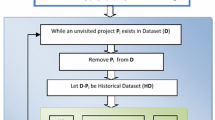

Among the many available effort estimation methods, analogy-based software effort estimation, which builds on case-based reasoning, is one of the most widely adopted in both industry and the research community. Solution adaptation is the final step of analogy-based estimation, employed to aggregate and adapt the solutions derived during the case-based reasoning process. Variants of solution adaptation techniques have been proposed in previous studies; however, the ranking of these techniques is not conclusive, since different studies rank them in different and conflicting ways. This paper aims to find a stable ranking of solution adaptation techniques for analogy-based estimation. Compared with existing studies, we evaluate 8 commonly adopted solution adaptation techniques using more datasets (12), more feature selection techniques (4), and more stable error measures (5), and we compare the results with a robust statistical test method based on the Brunner test. This comprehensive experimental procedure allows us to discover a stable ranking of the techniques applied, and to observe similar behaviors from techniques with similar adaptation mechanisms. In general, the linear adaptation techniques based on functions of size and productivity (e.g., the regression towards the mean technique) outperform the other techniques in the more robust experimental setting adopted in this study. Our empirical results show that project features with strong correlation to effort, such as software size or productivity, should be utilized in the solution adaptation step to achieve desirable performance. Designing a solution adaptation strategy for analogy-based software effort estimation requires careful consideration of those influential features to ensure that its predictions are relevant and accurate.
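To make the role of the solution adaptation step concrete, the following is a minimal sketch, not the authors' implementation, that contrasts an unadapted estimate (the mean effort of the retrieved analogues) with a simple linear size adaptation (each analogue's effort scaled by the ratio of target size to analogue size before averaging). The project data, the feature names `size` and `team`, and all function names are hypothetical and chosen only for illustration; features are left unnormalized to keep the example short.

```python
import math


def nearest_analogues(target, cases, k, features):
    """Return the k historical cases closest to the target project.

    Uses plain Euclidean distance over the given features. This is an
    illustrative choice; a real study would normalize features first.
    """
    def distance(case):
        return math.sqrt(sum((case[f] - target[f]) ** 2 for f in features))

    return sorted(cases, key=distance)[:k]


def unadapted_estimate(analogues):
    """Baseline: mean effort of the retrieved analogues, with no adaptation."""
    return sum(c["effort"] for c in analogues) / len(analogues)


def size_adapted_estimate(target, analogues):
    """Linear size adaptation: scale each analogue's effort by the
    target-to-analogue size ratio, then average the scaled efforts."""
    scaled = [c["effort"] * target["size"] / c["size"] for c in analogues]
    return sum(scaled) / len(scaled)


# Hypothetical historical projects (illustrative values only).
cases = [
    {"size": 100.0, "team": 4.0, "effort": 1000.0},
    {"size": 200.0, "team": 5.0, "effort": 2400.0},
    {"size": 400.0, "team": 9.0, "effort": 6000.0},
]
target = {"size": 150.0, "team": 5.0}

analogues = nearest_analogues(target, cases, k=2, features=["size", "team"])
print(unadapted_estimate(analogues))             # 1700.0
print(size_adapted_estimate(target, analogues))  # 1650.0
```

In this toy setting the two retrieved analogues have sizes 100 and 200, so the size-scaled efforts are 1500 and 1800, and the adapted estimate differs from the plain mean because it accounts for the target's size.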

Acknowledgments

This research was supported by JSPS KAKENHI Grant number 26330086, was conducted as a part of the JSPS Program for Advancing Strategic International Networks to Accelerate the Circulation of Talented Researchers, and was supported in part by the City University of Hong Kong research fund (Project number 7200354, 7004222, and 7004474).

Additional information

Communicated by: Martin Shepperd

Cite this article

Phannachitta, P., Keung, J., Monden, A. et al. A stability assessment of solution adaptation techniques for analogy-based software effort estimation. Empir Software Eng 22, 474–504 (2017). https://doi.org/10.1007/s10664-016-9434-8

DOI: https://doi.org/10.1007/s10664-016-9434-8