Abstract

Context

Supervised classification techniques have been applied successfully in many practical domains. A predictive model is judged on two important criteria, predictive accuracy and interpretability, which generally trade off against each other. Interpretability deserves greater emphasis in domains where expert knowledge must be incorporated into the predictive model.

Objective

The aim of this research is to propose a new classification model, called superposed naive Bayes (SNB), which transforms a naive Bayes ensemble into a simple naive Bayes model by linear approximation.

Method

To evaluate the predictive accuracy and interpretability of the proposed method, we conducted a comparative study against well-known classification techniques (rule-based learners, decision trees, regression models, support vector machines, neural networks, Bayesian learners, and ensemble learners) over 13 real-world public datasets.

Results

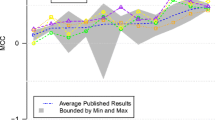

We analyzed the trade-off between accuracy and interpretability across classification techniques using a scatter plot of their relative accuracy ranks against their relative interpretability ranks. The experimental results show that the proposed method (SNB) produces a balanced output that satisfies both the accuracy and the interpretability criteria.

Conclusions

SNB offers a comprehensible predictive model based on a simple and transparent model structure, which provides an effective way to balance the trade-off between accuracy and interpretability.

References

Agterberg FP, Bonham-Carter GF, Wright DF (1990) Statistical pattern integration for mineral exploration. Computer Applications in Resource Estimation Prediction and Assessment for Metals and Petroleum, pp 1–21

Akaike H (1973) Information theory and an extension of the maximum likelihood principle. In: Second international symposium on information theory, pp 267–281

Allahyari H, Lavesson N (2011) User-oriented assessment of classification model understandability. In: 11th Scandinavian conference on artificial intelligence, pp 11–19

Aly M (2005) Survey on multiclass classification methods. Neural Netw 19:1–9

Bauer E, Kohavi R (1999) An empirical comparison of voting classification algorithms: bagging, boosting, and variants. Mach Learn 36(1-2):105–139

Bishop CM (2006) Pattern recognition and machine learning. Springer

Bonham-Carter GF, Agterberg FP, Wright DF (1988) Integration of geological datasets for gold exploration in Nova Scotia. Digital Geologic and Geographic Information Systems, pp 15–23

Bouckaert RR (2004) Naive Bayes classifiers that perform well with continuous variables. In: Australasian joint conference on artificial intelligence, pp 1089–1094

Breiman L (1996) Bagging predictors. Mach Learn 24(2):123–140

Breiman L (2001) Random forests. Mach Learn 45(1):5–32

Briand LC, Basili VR, Thomas WM (1992) A pattern recognition approach for software engineering data analysis. IEEE Trans Softw Eng 18(11):931–942

Burges CJC (1998) A tutorial on support vector machines for pattern recognition. Data Min Knowl Disc 2(2):121–167

Bury H, Wagner D (2008) Group judgement with ties. Distance-based methods. In: Aschemann H (ed) New approaches in automation and robotics. IntechOpen, London, pp 153–172

Carranza EJM (2004) Weights of evidence modeling of mineral potential: a case study using small number of prospects, Abra, Philippines. Nat Resour Res 13(3):173–187

Cestnik B (1990) Estimating probabilities: a crucial task in machine learning. In: Proceedings of the 9th European conference on artificial intelligence, ECAI '90, pp 147–149

Choetkiertikul M, Dam HK, Tran T, Ghose A (2015) Characterization and prediction of issue-related risks in software projects. In: Proceedings of the 12th working conference on mining software repositories, pp 280–291

Cohen WW (1995) Fast effective rule induction. In: Proceedings of the twelfth international conference on machine learning, ICML’95, pp 115–123

Dahal RK, Hasegawa S, Nonomura A, Yamanaka M, Masuda T, Nishino K (2008) GIS-based weights-of-evidence modelling of rainfall-induced landslides in small catchments for landslide susceptibility mapping. Environ Geol 54(2):311–324

Dejaeger K, Verbeke W, Martens D, Baesens B (2012) Data mining techniques for software effort estimation: a comparative study. IEEE Trans Softw Eng 38(2):375–397

Dejaeger K, Verbraken T, Baesens B (2013) Toward comprehensible software fault prediction models using Bayesian network classifiers. IEEE Trans Softw Eng 39(2):237–257

Domingos P, Pazzani M (1997) On the optimality of the simple Bayesian classifier under zero-one loss. Mach Learn 29(2):103–130

Fawcett T (2006) An introduction to ROC analysis. Pattern Recogn Lett 27(8):861–874

Fayyad U, Irani K (1993) Multi-interval discretization of continuous-valued attributes for classification learning. In: Proceedings of the 13th international joint conference on artificial intelligence, IJCAI’93, pp 1022–1029

Fayyad U, Piatetsky-Shapiro G, Smyth P (1996) From data mining to knowledge discovery in databases. AI Mag 17(3):37–54

Fenton NE, Neil M (1999) A critique of software defect prediction models. IEEE Trans Softw Eng 25(5):675–689

Fenton N, Neil M, Marsh W, Hearty P, Radliński Ł, Krause P (2008) On the effectiveness of early life cycle defect prediction with Bayesian nets. Empir Softw Eng 13(5):499–537

Freitas AA (2004) A critical review of multi-objective optimization in data mining: a position paper. ACM SIGKDD Explorations Newsletter 6(2):77–86

Freitas AA (2014) Comprehensible classification models: a position paper. ACM SIGKDD Explorations Newsletter 15(1):1–10

Freund Y, Schapire RE (1997) A decision-theoretic generalization of on-line learning and an application to boosting. J Comput Syst Sci 55(1):119–139

Friedman JH (2001) Greedy function approximation: a gradient boosting machine. Ann Stat 29(5):1189–1232

Friedman JH (2002) Stochastic gradient boosting. Comput Stat Data Anal 38(4):367–378

Friedman N, Geiger D, Goldszmidt M (1997) Bayesian network classifiers. Mach Learn 29(2-3):131–163

Ghotra B, McIntosh S, Hassan AE (2015) Revisiting the impact of classification techniques on the performance of defect prediction models. In: Proceedings of the 37th international conference on software engineering. ICSE’15, pp 789–800

Goldstein A, Kapelner A, Bleich J, Pitkin E (2015) Peeking inside the black box: Visualizing statistical learning with plots of individual conditional expectation. J Comput Graph Stat 24(1):44–65

Good IJ (1985) Weight of evidence: a brief survey. In: Bernardo JM, DeGroot MH, Lindley DV, Smith AFM (eds) Bayesian statistics 2: proceedings of the second Valencia international meeting, September 6/10, 1983. North Holland, New York, pp 249–269 (including discussion)

Goodman SN (1999) Toward evidence-based medical statistics. 2: the Bayes factor. Ann Intern Med 130(12):1005–1013

Guo L, Ma Y, Cukic B, Singh H (2004) Robust prediction of fault-proneness by random forests. In: IEEE 15th international symposium on software reliability engineering, ISSRE, pp 417–428

Hall T, Beecham S, Bowes D, Gray D, Counsell S (2012) A systematic literature review on fault prediction performance in software engineering. IEEE Trans Softw Eng 38(6):1276–1304

Halstead MH (1977) Elements of software science. Elsevier

Hastie T, Tibshirani R, Friedman J (2009) The elements of statistical learning, 2nd edn. Springer

Heckerman DE, Horvitz EJ, Nathwani BN (1991) Toward normative expert systems: the Pathfinder project. Methods Inf Med 31(2):90–105

Holte RC (1993) Very simple classification rules perform well on most commonly used datasets. Mach Learn 11(1):63–90

Hu Y, Zhang X, Ngai EWT, Cai R, Liu M (2013) Software project risk analysis using Bayesian networks with causality constraints. Decis Support Syst 56:439–449

Huysmans J, Dejaeger K, Mues C, Vanthienen J, Baesens B (2011) An empirical evaluation of the comprehensibility of decision table, tree and rule based predictive models. Decis Support Syst 51(1):141–154

Jain AK, Mao J, Mohiuddin KM (1996) Artificial neural networks: a tutorial. IEEE Comput 29(3):31–44

Jelihovschi E, Faria JC, Allaman IB (2014) ScottKnott: a package for performing the Scott-Knott clustering algorithm in R. Trends in Applied and Computational Mathematics 15(1):003–017

Jiang Y, Cukic B, Ma Y (2008a) Techniques for evaluating fault prediction models. Empir Softw Eng 13(5):561–595

Jiang Y, Cukic B, Menzies T, Bartlow N (2008b) Comparing design and code metrics for software quality prediction. In: Proceedings of the 4th international workshop on Predictor models in software engineering, pp 11–18

Jiang L, Zhang H, Cai Z (2009) A novel Bayes model: hidden naive Bayes. IEEE Trans Knowl Data Eng 21(10):1361–1371

John GH, Langley P (1995) Estimating continuous distributions in Bayesian classifiers. In: Proceedings of the 11th conference on uncertainty in artificial intelligence, pp 338–345

Kamei Y, Shihab E (2016) Defect prediction: accomplishments and future challenges. In: 23rd International conference on software analysis, evolution, and reengineering, SANER, vol 5, pp 33–45

Kamei Y, Shihab E, Adams B, Hassan AE, Mockus A, Sinha A, Ubayashi N (2013) A large-scale empirical study of just-in-time quality assurance. IEEE Trans Softw Eng 39(6):757–773

Kim S, Whitehead EJ Jr, Zhang Y (2008) Classifying software changes: clean or buggy? IEEE Trans Softw Eng 34(2):181–196

Kohavi R (1996) Scaling up the accuracy of Naive-Bayes classifiers: a decision-tree hybrid. In: Proceedings of the 2nd international conference on knowledge discovery and data mining. KDD96, pp 202–207

Kononenko I (1993) Inductive and Bayesian learning in medical diagnosis. Appl Artif Intell 7(4):317–337

Kotsiantis SB, Zaharakis I, Pintelas P (2007) Supervised machine learning: a review of classification techniques. Informatica 31:249–268

Kulesza T, Burnett M, Wong WK, Stumpf S (2015) Principles of explanatory debugging to personalize interactive machine learning. In: Proceedings of the 20th international conference on intelligent user interfaces, pp 126–137

Le Cessie S, Van Houwelingen JC (1992) Ridge estimators in logistic regression. Appl Stat 191–201

Lessmann S, Baesens B, Mues C, Pietsch S (2008) Benchmarking classification models for software defect prediction: a proposed framework and novel findings. IEEE Trans Softw Eng 34(4):485–496

Lewis DD (1998) Naive (Bayes) at forty: the independence assumption in information retrieval. In: European conference on machine learning, pp 4–15

Lipton ZC (2016) The mythos of model interpretability. In: 2016 ICML workshop on human interpretability in machine learning. WHI 2016

Madigan D, Mosurski K, Almond RG (1997) Graphical explanation in belief networks. J Comput Graph Stat 6(2):160–181

Malhotra R (2015) A systematic review of machine learning techniques for software fault prediction. Appl Soft Comput 27:504–518

Martens D, Vanthienen J, Verbeke W, Baesens B (2011) Performance of classification models from a user perspective. Decis Support Syst 51(4):782–793

McCabe TJ (1976) A complexity measure. IEEE Trans Softw Eng 2(4):308–320

Menzies T, Greenwald J, Frank A (2007) Data mining static code attributes to learn defect predictors. IEEE Trans Softw Eng 33(1):2–13

Menzies T, Krishna R, Pryor D (2016) The Promise Repository of Empirical Software Engineering Data; http://openscience.us/repo. North Carolina State University, Department of Computer Science

Mori T (2015) Superposed naive Bayes for accurate and interpretable prediction. In: Proceedings of the 14th IEEE international conference on machine learning and applications. ICMLA 2015, pp 1228–1233

Mori T, Tamura S, Kakui S (2013) Incremental estimation of project failure risk with Naive Bayes classifier. In: Proceedings of 7th ACM/IEEE international symposium on empirical software engineering and measurement. ESEM 2013, pp 283–286

Platt JC (1999) Probabilistic outputs for support vector machines and comparisons to regularized likelihood methods. Advances in Large Margin Classifiers, 10(3):61–74

Quinlan JR (1993) C4.5: programs for machine learning. Morgan Kaufmann, San Mateo

Ribeiro MT, Singh S, Guestrin C (2016) Why should I trust you?: Explaining the predictions of any classifier. In: Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining, pp 1135–1144

Ridgeway G, Madigan D, Richardson T, O'Kane J (1998) Interpretable boosted Naive Bayes classification. In: Proceedings of the 4th international conference on knowledge discovery and data mining. KDD98, pp 101–104

Rish I (2001) An empirical study of the naive Bayes classifier. In: IJCAI 2001 workshop on empirical methods in artificial intelligence, 3(22):41–46

Saaty TL (1990) How to make a decision: the analytic hierarchy process. Eur J Oper Res 48(1):9–26

Sakamoto Y, Akaike H (1978) Analysis of cross classified data by AIC. Ann Inst Stat Math 30(1):185–197

Schwarz G (1978) Estimating the dimension of a model. Ann Stat 6(2):461–464

Shepperd M, Song Q, Sun Z, Mair C (2013) Data quality: some comments on the NASA software defect datasets. IEEE Trans Softw Eng 39(9):1208–1215

Spiegelhalter DJ, Knill-Jones RP (1984) Statistical and knowledge-based approaches to clinical decision-support systems, with an application in gastroenterology. Journal of the Royal Statistical Society. Series A (General):35–77

Vandecruys O, Martens D, Baesens B, Mues C, De Backer M, Haesen R (2008) Mining software repositories for comprehensible software fault prediction models. J Syst Softw 81(5):823–839

Webb GI (2000) Multiboosting: a technique for combining boosting and wagging. Mach Learn 40(2):159–196

Webb GI, Boughton JR, Wang Z (2005) Not so naive Bayes: aggregating one-dependence estimators. Mach Learn 58(1):5–24

Wen J, Li S, Lin Z, Hu Y, Huang C (2012) Systematic literature review of machine learning based software development effort estimation models. Inf Softw Technol 54(1):41–59

Witten IH, Frank E, Hall MA, Pal CJ (2011) Data mining: practical machine learning tools and techniques, 3rd edn. Morgan Kaufmann

Yang Y, Webb GI (2009) Discretization for naive-Bayes learning: managing discretization bias and variance. Mach Learn 74(1):39–74

Zadrozny B, Elkan C (2002) Transforming classifier scores into accurate multiclass probability estimates. In: Proceedings of the eighth ACM SIGKDD international conference on Knowledge discovery and data mining, pp 694–699

Zhang H (2004) The optimality of naive Bayes. In: Proceedings of the 17th Florida artificial intelligence research society conference. FLAIRS2004, pp 562–567

Zimmermann T, Premraj R, Zeller A (2007) Predicting defects for Eclipse. In: Proceedings of the third international workshop on predictor models in software engineering, IEEE Computer Society, p 9

Zimmermann T, Nagappan N, Gall H, Giger E, Murphy B (2009) Cross-project defect prediction: a large scale experiment on data vs. domain vs. process. In: Proceedings of the 7th joint meeting of the European software engineering conference and the ACM SIGSOFT symposium on the foundations of software engineering, pp 91–100

Acknowledgments

The authors would like to thank Prof. William Riley Holden of Japan Advanced Institute of Science and Technology (JAIST) for his helpful comments and advice on the manuscript. The authors would also like to thank the anonymous reviewers for their invaluable suggestions.

Additional information

Communicated by: Tim Menzies

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix 1

The AIC for the contingency table of Table 1 is derived as follows (Sakamoto and Akaike 1978):

If mutual independence between Y and \( X_i \) is assumed, the AIC becomes:

The difference between the AIC of the dependence model (\( {AIC}_1^{(i)} \)) and that of the independence model (\( {AIC}_0^{(i)} \)) is given by
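For reference, these three quantities can be sketched in the standard contingency-table form. The notation is an assumption, since it is not defined in this appendix: Y is taken to be binary, \( X_i \) has k intervals, \( n_{yj} \) is the number of instances with class y in interval j, \( n_{y\cdot} \) and \( n_{\cdot j} \) are the marginal totals, and n is the number of instances. The parameter counts (2k − 1 for the dependence model, k for the independence model) are chosen so that the difference ends in the 2(k − 1) term referred to later in this appendix.

```latex
% Sketch under assumed notation; cells with n_{yj} = 0 contribute zero.
\begin{align*}
AIC_1^{(i)} &= -2 \sum_{y \in \{0,1\}} \sum_{j=1}^{k} n_{yj} \log \frac{n_{yj}}{n} + 2(2k - 1) \\
AIC_0^{(i)} &= -2 \sum_{y \in \{0,1\}} \sum_{j=1}^{k} n_{yj} \log \frac{n_{y\cdot}\, n_{\cdot j}}{n^{2}} + 2k \\
\Delta AIC^{(i)} &= AIC_1^{(i)} - AIC_0^{(i)}
  = -2 \sum_{y \in \{0,1\}} \sum_{j=1}^{k} n_{yj} \log \frac{n\, n_{yj}}{n_{y\cdot}\, n_{\cdot j}} + 2(k - 1)
\end{align*}
```

Under this form, a variable with a single interval (k = 1) has \( n_{yj} = n_{y\cdot} \) and \( n_{\cdot j} = n \), so every logarithm vanishes and the difference is zero.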

As with the MDL criterion (Fayyad and Irani 1993), the AIC-based discretization method starts with a single interval containing all data and splits intervals recursively. If \( \Delta {AIC}^{(i)} \) for a candidate split is greater than a given (negative) threshold, the independence model is selected as the model with the smaller AIC, and no further splitting is performed. The sum of \( \Delta {AIC}^{(i)} \) over all splits is \( {AIC}^{(i)} \), and its negation indicates the importance of the variable \( X_i \). Variables with positive \( {AIC}^{(i)} \) can be ignored, because the AIC of a single-interval variable is zero, as derived from Eq. 11.
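The recursive procedure can be sketched in Python as follows. This is a minimal illustration and not the authors' implementation: it assumes a binary class label, evaluates each candidate cut as a two-interval split (so the penalty term is 2(k − 1) with k = 2), and uses a hypothetical default threshold of −1.0. The function names `delta_aic` and `aic_discretize` are made up for this example.

```python
import math
from typing import List, Optional, Sequence, Tuple


def delta_aic(left: Tuple[int, int], right: Tuple[int, int]) -> float:
    """AIC(dependence) - AIC(independence) for one binary split (k = 2).

    left and right hold (count of class 0, count of class 1) on each side.
    """
    n = sum(left) + sum(right)
    class_totals = (left[0] + right[0], left[1] + right[1])
    log_ratio_sum = 0.0
    for side in (left, right):
        side_total = sum(side)
        for y in (0, 1):
            if side[y] > 0:  # empty cells contribute nothing
                log_ratio_sum += side[y] * math.log(
                    n * side[y] / (side_total * class_totals[y])
                )
    return -2.0 * log_ratio_sum + 2.0 * (2 - 1)  # penalty 2(k - 1), k = 2


def aic_discretize(
    xs: Sequence[float], ys: Sequence[int], threshold: float = -1.0
) -> Tuple[List[float], float]:
    """Return (sorted cut points, sum of delta AIC over accepted splits).

    threshold is an assumed value; a split is kept only if its delta AIC
    is not greater than the threshold.
    """
    pairs = sorted(zip(xs, ys))
    ones = [0]  # prefix counts of class 1 over the sorted data
    for _, y in pairs:
        ones.append(ones[-1] + y)

    def counts(lo: int, hi: int) -> Tuple[int, int]:
        positives = ones[hi] - ones[lo]
        return (hi - lo - positives, positives)

    cuts: List[float] = []
    total = 0.0

    def recurse(lo: int, hi: int) -> None:
        nonlocal total
        best: Optional[Tuple[float, int]] = None
        for m in range(lo + 1, hi):
            if pairs[m - 1][0] == pairs[m][0]:
                continue  # cannot cut between identical values
            d = delta_aic(counts(lo, m), counts(m, hi))
            if best is None or d < best[0]:
                best = (d, m)
        if best is None or best[0] > threshold:
            return  # independence model preferred: stop splitting here
        d, m = best
        total += d
        cuts.append((pairs[m - 1][0] + pairs[m][0]) / 2.0)
        recurse(lo, m)
        recurse(m, hi)

    recurse(0, len(pairs))
    return sorted(cuts), total
```

Calling `aic_discretize(values, labels)` returns the cut points together with the accumulated sum, whose negation plays the role of the variable-importance score described above. A variable for which no split is accepted returns an empty cut list and a sum of zero.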

The discretization process using BIC is almost the same as that based on AIC. \( \Delta {BIC}^{(i)} \), i.e., the difference between the BIC of the dependence model (\( {BIC}_1^{(i)} \)) and that of the independence model (\( {BIC}_0^{(i)} \)), is obtained by simply replacing the last term \( 2(k-1) \) in Eq. 13 with \( (k-1)\log n \), where n is the number of instances.

The sum of \( \Delta {BIC}^{(i)} \) over all splits is \( {BIC}^{(i)} \), and its negation indicates the importance of the variable \( X_i \).
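Because the two criteria differ only in the penalty term, the relation between them can be written directly. The line below simply restates the substitution described above, assuming natural logarithms:

```latex
% Replace the penalty 2(k - 1) by (k - 1) log n
\Delta BIC^{(i)} = \Delta AIC^{(i)} - 2(k - 1) + (k - 1)\log n
                 = \Delta AIC^{(i)} + (k - 1)(\log n - 2)
```

For \( k > 1 \) and \( n \ge 8 \), \( \log n > 2 \), so \( \Delta {BIC}^{(i)} \) exceeds \( \Delta {AIC}^{(i)} \). Under the same threshold, BIC therefore accepts a split less readily than AIC.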

Appendix 2

| Abbr. | Parameter settings in the Weka machine learning toolkit |
|---|---|
| OneR | `OneR -B 6` |
| JRip | `JRip -F 3 -N 2.0 -O 2 -S 1` |
| J48 | `J48 -C 0.25 -M 2` |
| NBTree | `NBTree` |
| LR | `Logistic -R 1.0E-8 -M -1` |
| SVM | `SMO -C 1.0 -L 0.001 -P 1.0E-12 -N 0 -M -V -1 -W 1 -K "weka.classifiers.functions.supportVector.PolyKernel -C 250007 -E 1.0"` |
| MLP | `MultilayerPerceptron -L 0.3 -M 0.2 -N 500 -V 0 -S 0 -E 20 -H a` |
| NBc | `NaiveBayes` |
| NBd | `NaiveBayes -D` |
| TAN | `BayesNet -D -Q weka.classifiers.bayes.net.search.local.TAN -- -S BAYES -E weka.classifiers.bayes.net.estimate.SimpleEstimator -- -A 0.5` |
| AODE | `FilteredClassifier -F "weka.filters.supervised.attribute.Discretize -R first-last" -W weka.classifiers.bayes.AODE -- -F 1` |
| HNB | `FilteredClassifier -F "weka.filters.supervised.attribute.Discretize -R first-last" -W weka.classifiers.bayes.HNB` |
| AdaBst | `AdaBoostM1 -P 100 -S 1 -I 100 -W weka.classifiers.trees.DecisionStump` |
| RF | `RandomForest -I 100 -K 0 -S 1` |

Appendix 3

Random seeds used in Weka for each cross-validation (CV) run:

| Dataset | 1st CV | 2nd CV | 3rd CV | 4th CV | 5th CV |
|---|---|---|---|---|---|
| MC2 | 869 | 280 | 820 | 326 | 961 |
| KC3 | 309 | 433 | 717 | 708 | 321 |
| MW1 | 154 | 509 | 564 | 850 | 493 |
| CM1 | 325 | 629 | 602 | 391 | 467 |
| PC1 | 111 | 501 | 663 | 151 | 200 |
| PC2 | 474 | 303 | 537 | 936 | 614 |
| PC3 | 298 | 566 | 445 | 44 | 381 |
| PC4 | 711 | 652 | 811 | 516 | 832 |
| PC5 | 543 | 569 | 505 | 684 | 978 |
| MC1 | 292 | 604 | 61 | 154 | 746 |
| JM1 | 172 | 283 | 92 | 966 | 398 |
| Bugzilla | 559 | 776 | 599 | 493 | 383 |
| Columba | 782 | 155 | 941 | 517 | 882 |
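To illustrate how these seeds and the parameter settings of Appendix 2 might be combined, the following Python sketch builds Weka command lines for five seeded cross-validation runs of J48 on MC2. It only constructs the argument lists and does not run them. Several details are assumptions that the appendices do not state: the number of folds (10 here), the jar location `weka.jar`, and the data file name `MC2.arff`. The helper `weka_cv_command` is hypothetical. The flags `-t` (training file), `-x` (number of folds), and `-s` (random seed) are Weka's general command-line evaluation options.

```python
from typing import List

# Seeds for dataset MC2, copied from the table above.
MC2_SEEDS = [869, 280, 820, 326, 961]


def weka_cv_command(
    classifier: str,
    options: List[str],
    arff_path: str,
    seed: int,
    folds: int = 10,              # assumed; the fold count is not given here
    weka_jar: str = "weka.jar",   # assumed location of the Weka jar
) -> List[str]:
    """Build (but do not run) a Weka cross-validation command line."""
    return [
        "java", "-cp", weka_jar, classifier,
        "-t", arff_path,          # training data
        "-x", str(folds),         # number of cross-validation folds
        "-s", str(seed),          # random seed for shuffling the data
        *options,                 # scheme-specific options from Appendix 2
    ]


# J48 with the settings listed in Appendix 2, one command per seed.
commands = [
    weka_cv_command(
        "weka.classifiers.trees.J48",
        ["-C", "0.25", "-M", "2"],
        "MC2.arff",               # hypothetical file name
        seed,
    )
    for seed in MC2_SEEDS
]
```

Each entry of `commands` could be passed to `subprocess.run` on a machine where Weka and the dataset are available. Keeping the seeds in a list makes it straightforward to repeat the same five runs for every classifier.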

About this article

Cite this article

Mori, T., Uchihira, N. Balancing the trade-off between accuracy and interpretability in software defect prediction. Empir Software Eng 24, 779–825 (2019). https://doi.org/10.1007/s10664-018-9638-1

DOI: https://doi.org/10.1007/s10664-018-9638-1