Abstract

Self-healing is becoming an essential feature of Cyber-Physical Systems (CPSs). CPSs with this feature are called Self-Healing CPSs (SH-CPSs). SH-CPSs detect and recover from errors caused by hardware or software faults at runtime, and they handle uncertainties arising from their interactions with their environments. It is therefore critical to test whether SH-CPSs still behave as expected under uncertainties. By testing an SH-CPS under various conditions and learning from the test results, reinforcement learning algorithms can gradually optimize their testing policies and apply these policies to detect failures, i.e., cases in which the SH-CPS fails to behave as expected. However, there is insufficient evidence about which reinforcement learning algorithms perform best at testing SH-CPS behaviors, including self-healing behaviors, under uncertainties. To this end, we conducted an empirical study to evaluate the performance of 14 combinations of reinforcement learning algorithms: each of two value function learning based methods for invoking operations was paired with each of seven policy optimization based algorithms for introducing uncertainties. Experimental results reveal that the 14 combinations achieved similar coverage of system states and transitions, and that the combination of Q-learning and Uncertainty Policy Optimization (UPO) detected the most failures. On average, the Q-learning and UPO combination discovered two times more failures than the other combinations and took 52% less time to find a failure. Regarding scalability, the time and space costs of the value function learning based methods grow as the number of states and transitions of the system under test increases. In contrast, increasing the system’s complexity has little impact on the policy optimization based algorithms.
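
The scalability remark about value function learning based methods can be illustrated with a minimal tabular sketch of the two update rules, Q-learning and SARSA. This is not the study's implementation: the state names, operation names, reward values, learning rate, and discount factor below are all hypothetical and chosen only for illustration. The sketch shows that a tabular learner stores one value per (state, operation) pair, which is one plausible reason its memory use grows with the number of states and transitions in the test model.

```python
from collections import defaultdict

ALPHA = 0.1  # learning rate (assumed value, not taken from the study)
GAMMA = 0.9  # discount factor (assumed value, not taken from the study)


def q_learning_update(q, state, action, reward, next_state, next_actions):
    """Off-policy update: bootstrap from the best-valued operation in next_state."""
    best_next = max((q[(next_state, a)] for a in next_actions), default=0.0)
    q[(state, action)] += ALPHA * (reward + GAMMA * best_next - q[(state, action)])


def sarsa_update(q, state, action, reward, next_state, next_action):
    """On-policy update: bootstrap from the operation actually invoked next."""
    target = reward + GAMMA * q[(next_state, next_action)]
    q[(state, action)] += ALPHA * (target - q[(state, action)])


# Hypothetical test model fragment: invoking "arm" moves Idle -> Armed.
q_table = defaultdict(float)
q_table[("Armed", "takeoff")] = 1.0  # pretend this value was learned earlier

q_learning_update(q_table, "Idle", "arm", reward=0.0,
                  next_state="Armed", next_actions=["takeoff", "disarm"])

# One table entry per visited (state, operation) pair.
print(len(q_table), q_table[("Idle", "arm")])
```

The two functions differ only in the bootstrap target: Q-learning uses the maximum value over the operations available in the next state, whereas SARSA uses the value of the operation that is actually selected next.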

Notes

UML: Unified Modeling Language (https://www.omg.org/spec/UML/)

Stereotype: an extension mechanism provided by UML. We defined a set of stereotypes, also called UML profiles, that extend UML class diagrams and state machines to specify the components, uncertainties, and expected behaviors of the SH-CPS under test.

OCL: Object Constraint Language (https://www.omg.org/spec/OCL/)

Other sensors include a barometer, an accelerometer, and a gyroscope. Due to space limitations, they are not shown in Fig. 1.

DresdenOCL (https://github.com/dresden-ocl/standalone) is used in TM-Executor to evaluate OCL constraints.

An episode is one execution of an SH-CPS from an initial state to a final state.

The test models are available at http://zen-tools.com/journal/TSHCPS_RL.html

Q represents Q-learning and S represents SARSA.

References

Ali S, Iqbal MZ, Arcuri A, Briand LC (2013) Generating test data from OCL constraints with search techniques. IEEE Trans Softw Eng 39(10):1376–1402

Arulkumaran K, Deisenroth MP, Brundage M, Bharath AA (2017) A brief survey of deep reinforcement learning. arXiv preprint arXiv:1708.05866

Benjamini Y, Hochberg Y (1995) Controlling the false discovery rate: a practical and powerful approach to multiple testing. J R Stat Soc Ser B Methodol:289–300

Brandstotter M, Hofbaur MW, Steinbauer G, Wotawa F (2007) Model-based fault diagnosis and reconfiguration of robot drives, 2007 IEEE/RSJ international conference on intelligent robots and systems, vol 2007, San Diego, CA, USA, pp 1203–1209. https://doi.org/10.1109/IROS.2007.4399092

Camilli M, Gargantini A, Scandurra P (2020) Model-based hypothesis testing of uncertain software systems. Softw Test Verifi Reliab 30(2):e1730

Derderian KA (2006) Automated test sequence generation for finite state machines using genetic algorithms. Brunel University, School of Information Systems, Computing and Mathematics, Ph.D. thesis. http://bura.brunel.ac.uk/handle/2438/3062

Duan Y, Chen X, Houthooft R, Schulman J, Abbeel P (2016) Benchmarking Deep Reinforcement Learning for Continuous Control. Proceedings of The 33rd International Conference on Machine Learning, in PMLR 48:1329–1338

Dunn OJ (1964) Multiple comparisons using rank sums. Technometrics 6(3):241–252

Groce A, Fern A, Pinto J, Bauer T, Alipour A, Erwig M et al (2012) Lightweight automated testing with adaptation-based programming, 2012 IEEE 23rd international symposium on software reliability engineering. Dallas, TX, USA 2012:161–170. https://doi.org/10.1109/ISSRE.2012.1

Güdemann M, Ortmeier F, Reif W (2006) Safety and dependability analysis of self-adaptive systems, second international symposium on leveraging applications of formal methods, verification and validation (isola 2006), vol 2006, Paphos, Cyprus, pp 177–184. https://doi.org/10.1109/ISoLA.2006.38

Henderson P, Islam R, Bachman P, Pineau J, Precup D, Meger D (2018) Deep reinforcement learning that matters. Proceedings of the AAAI Conference on Artificial Intelligence 32(1). Retrieved from https://ojs.aaai.org/index.php/AAAI/article/view/11694

Jaderberg M, Dalibard V, Osindero S, Czarnecki WM, Donahue J, Razavi A, et al. (2017) Population based training of neural networks. arXiv preprint arXiv:1711.09846

Jedlitschka A, Ciolkowski M, Pfahl D (2008) Reporting experiments in software engineering. In: Shull F, Singer J, Sjøberg DIK (eds) Guide to advanced empirical software engineering. Springer, London. https://doi.org/10.1007/978-1-84800-044-5_8

Ji R, Li Z, Chen S, Pan M, Zhang T, Ali S et al (2018) Uncovering unknown system behaviors in uncertain networks with model and search-based testing. In: IEEE 11th international conference on software testing, verification and validation (ICST), vol 2018, Västerås, Sweden, pp 204–214. https://doi.org/10.1109/ICST.2018.00029

Khadka S, Tumer K (2018) Evolution-guided policy gradient in reinforcement learning. arXiv preprint arXiv:1611.01224

Kitchenham BA, Pfleeger SL, Pickard LM, Jones PW, Hoaglin DC, El Emam K et al (2002) Preliminary guidelines for empirical research in software engineering. IEEE Trans Softw Eng 28(8):721–734

Kiumarsi B, Vamvoudakis KG, Modares H, Lewis FL (2017) Optimal and autonomous control using reinforcement learning: a survey. IEEE Trans Neural Netw Learn Syst 29(6):2042–2062

Kober J, Bagnell JA, Peters J (2013) Reinforcement learning in robotics: a survey. Int J Robot Res 32(11):1238–1274

Kruskal WH, Wallis WA (1952) Use of ranks in one-criterion variance analysis. J Am Stat Assoc 47(260):583–621

Lefticaru R, Ipate F (2007) Automatic state-based test generation using genetic algorithms, vol 2007. Ninth International Symposium on Symbolic and Numeric Algorithms for Scientific Computing (SYNASC 2007), Timisoara, Romania, pp 188–195. https://doi.org/10.1109/SYNASC.2007.47

Lehre PK, Yao X (2014) Runtime analysis of the (1+ 1) EA on computing unique input output sequences. Inf Sci 259:510–531

Lillicrap TP, Hunt JJ, Pritzel A, Heess N, Erez T, Tassa Y, et al. (2015). Continuous control with deep reinforcement learning. arXiv preprint arXiv:1509.02971

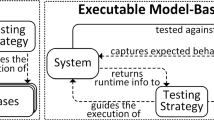

Ma T, Ali S, Yue T (2019a) Modeling foundations for executable model-based testing of self-healing cyber-physical systems. Softw Syst Model 18(5):2843–2873

Ma T, Ali S, Yue T, Elaasar M (2019b) Testing self-healing cyber-physical systems under uncertainty: a fragility-oriented approach. Softw Qual J 27(2):615–649

Mariani L, Pezze M, Riganelli O, Santoro M (2012) AutoBlackTest: automatic black-box testing of interactive applications. In: 2012 IEEE fifth international conference on software testing, verification and validation, vol 2012, Montreal, QC, Canada, pp 81–90. https://doi.org/10.1109/ICST.2012.88

Martens J, Grosse R (2015) Optimizing neural networks with Kronecker-factored approximate curvature. Proceedings of the 32nd International Conference on Machine Learning, in Proceedings of Machine Learning Research 37:2408–2417. Available from http://proceedings.mlr.press/v37/martens15.html

Minnerup P, Knoll A (2016) Testing automated vehicles against actuator inaccuracies in a large state space. IFAC-PapersOnLine 49(15):38–43

Mnih V, Kavukcuoglu K, Silver D, Rusu AA, Veness J, Bellemare MG et al (2015) Human-level control through deep reinforcement learning. Nature 518(7540):529

Mnih V, Badia AP, Mirza M, Graves A, Lillicrap T, Harley T, Silver D, Kavukcuoglu K (2016) Asynchronous methods for deep reinforcement learning. In: Proceedings of the 33rd international conference on machine learning, vol 48. PMLR, pp 1928–1937

Munos R, Stepleton T, Harutyunyan A, Bellemare M (2016) Safe and efficient off-policy reinforcement learning. arXiv preprint arXiv:1606.02647

OMG Specification (2006) Object constraint language V2.4. OMG file id: formal/14–02-03, https://www.omg.org/spec/OCL/2.4/PDF

OMG Specification (2016) Semantics of a foundational subset for executable UML models V1.2.1. OMG file id: formal/2016-01-05. https://www.omg.org/spec/FUML/1.1/PDF

OMG Specification (2017) Precise semantics of UML State Machines (PSSM). V1.0. OMG file id: formal/19–05-01. https://www.omg.org/spec/PSSM/1.0/PDF

Pascanu R, Bengio Y (2013) Revisiting natural gradient for deep networks. arXiv preprint arXiv:1301.3584

Pollard D (2000) Asymptopia: an exposition of statistical asymptotic theory. Available at http://www.stat.yale.edu/~pollard/Books/Asymptopia

Priesterjahn C, Steenken D, Tichy M (2013) Timed Hazard analysis of self-healing systems. In: Cámara J, de Lemos R, Ghezzi C, Lopes A (eds) Assurances for self-adaptive systems. Lecture notes in computer science, vol 7740. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-36249-1_5

Ramirez AJ, Jensen AC, Cheng BH, Knoester DB (2011) Automatically exploring how uncertainty impacts behavior of dynamically adaptive systems. In: 2011 26th IEEE/ACM international conference on automated software engineering (ASE 2011). Lawrence, KS, USA, pp 568–571. https://doi.org/10.1109/ASE.2011.6100127

Reichstaller A, Knapp A (2018) Risk-based testing of self-adaptive systems using run-time predictions. In: 2018 IEEE 12th international conference on self-adaptive and self-organizing systems (SASO), vol 2018, Trento, Italy, pp 80–89. https://doi.org/10.1109/SASO.2018.00019

Reichstaller A, Eberhardinger B, Knapp A, Reif W, Gehlen M (2016) Risk-based interoperability testing using reinforcement learning. In: Wotawa F, Nica M, Kushik N (eds) Testing software and systems. ICTSS 2016. Lecture notes in computer science, vol 9976. Springer, Cham. https://doi.org/10.1007/978-3-319-47443-4_4

Royston P (1995) Remark AS R94: a remark on algorithm AS 181: the W-test for normality. J R Stat Soc: Ser C: Appl Stat 44(4):547–551

Schulman J, Levine S, Abbeel P, Jordan M, Moritz P (2015) Trust region policy optimization. Proceedings of the 32nd International Conference on Machine Learning, in Proceedings of Machine Learning Research 37:1889–1897. Available from http://proceedings.mlr.press/v37/schulman15.html

Schulman J, Wolski F, Dhariwal P, Radford A, Klimov O (2017) Proximal policy optimization algorithms. arXiv preprint arXiv:1707.06347

Singh S, Jaakkola T, Littman ML, Szepesvári C (2000) Convergence results for single-step on-policy reinforcement-learning algorithms. Mach Learn 38(3):287–308

Spieker H, Gotlieb A, Marijan D, Mossige M (2017) Reinforcement learning for automatic test case prioritization and selection in continuous integration. In: Proceedings of the 26th ACM SIGSOFT international symposium on software testing and analysis (ISSTA 2017). Association for Computing Machinery, New York, NY, USA, pp 12–22. https://doi.org/10.1145/3092703.3092709

Steinbauer G, Mörth M, Wotawa F (2006) Real-time diagnosis and repair of faults of robot control software. In: Bredenfeld A, Jacoff A, Noda I, Takahashi Y (eds) RoboCup 2005: robot soccer world cup IX. RoboCup 2005. Lecture notes in computer science, vol 4020. Springer, Berlin, Heidelberg. https://doi.org/10.1007/11780519_2

Sutton RS, Barto AG (1998) Reinforcement learning: An introduction (Vol. 1, Vol. 1). MIT press, Cambridge

Vargha A, Delaney HD (2000) A critique and improvement of the CL common language effect size statistics of McGraw and Wong. J Educ Behav Stat 25(2):101–132

Veanes M, Roy P, Campbell C (2006) Online testing with reinforcement learning. In: Havelund K, Núñez M, Roşu G, Wolff B (eds) Formal approaches to software testing and runtime verification. FATES 2006, RV 2006. Lecture notes in computer science, vol 4262. Springer, Berlin, Heidelberg. https://doi.org/10.1007/11940197_16

Venkatasubramanian V, Rengaswamy R, Yin K, Kavuri SN (2003) A review of process fault detection and diagnosis: part I: quantitative model-based methods. Comput Chem Eng 27(3):293–311

Walkinshaw N, Fraser G (2017) Uncertainty-driven black-box test data generation. In: 2017 IEEE international conference on software testing, verification and validation (ICST), Tokyo, Japan, 2017, pp. 253–263. https://doi.org/10.1109/ICST.2017.30

Wang Z, Bapst V, Heess N, Mnih V, Munos R, Kavukcuoglu K, et al. (2016) Sample efficient actor-critic with experience replay. arXiv preprint arXiv:1611.01224

Whiteson S, Stone P (2006) Evolutionary function approximation for reinforcement learning. J Mach Learn Res 7(May):877–917

Wohlin C, Runeson P, Höst M, Ohlsson MC, Regnell B, Wesslén A (2012) Experimentation in software engineering. IEEE Trans Softw Eng SE-12(7):733–743, July 1986. https://doi.org/10.1109/TSE.1986.6312975

Wu Y, Mansimov E, Grosse RB, Liao S, Ba J (2017) Scalable trust-region method for deep reinforcement learning using kronecker-factored approximation. arXiv preprint arXiv:1708.05144

Zhang M, Ali S, Yue T (2019) Uncertainty-wise test case generation and minimization for cyber-physical systems. J Syst Softw 153 Elsevier. https://doi.org/10.1016/j.jss.2019.03.011

Acknowledgements

This work was supported by the MBT4CPS project (grant no. 240013/O70), funded by the Research Council of Norway. Tao Yue and Shaukat Ali are also supported by the Co-evolver project (grant no. 286898/LIS), funded by the Research Council of Norway under the category of Researcher Projects of the FRIPRO funding scheme. Tao Yue is also supported by the National Natural Science Foundation of China (grant no. 61872182).

Additional information

Guest Editor: Hélène Waeselynck

Appendices

Appendix 1 Evaluation Results for Effectiveness

Appendix 2 Evaluation Results for Efficiency

Appendix 3 Time and Space Costs of Reinforcement Learning Algorithms

Cite this article

Ma, T., Ali, S. & Yue, T. Testing self-healing cyber-physical systems under uncertainty with reinforcement learning: an empirical study. Empir Software Eng 26, 52 (2021). https://doi.org/10.1007/s10664-021-09941-z

DOI: https://doi.org/10.1007/s10664-021-09941-z