Abstract

In this work, a cooperative, bid-based model for problem decomposition is proposed, with application to discrete action domains such as classification. This represents a significant departure from models in which each individual constructs a direct input-outcome map, for example from the set of exemplars to the set of class labels, as is typical in the classification domain. In contrast, the proposed model focuses on learning a bidding strategy based on the exemplar feature vectors: each individual is associated with a single discrete action, and the individual with the maximum bid ‘wins’ the right to suggest its action. Thus, the number of individuals associated with each action is a function of the intra-action bidding behaviour. Credit assignment is designed to reward bidding strategies that are correct yet unique relative to the target actions. An advantage of the model over other teaming methods is its ability to automatically determine both the number of cooperative team members and the interaction between them. The resulting model shares several traits with learning classifier systems; as such, both approaches are benchmarked on nine large classification problems. Moreover, both evolutionary models are compared against the deterministic Support Vector Machine classification algorithm. Performance assessment considers the computational, classification, and complexity characteristics of the resulting solutions. The bid-based model is found to provide simple yet effective solutions that are robust to wide variations in class representation. Support Vector Machines and classifier systems tend to perform better on balanced datasets, albeit at the cost of black-box solutions.
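The winner-takes-all action selection described above can be illustrated with a minimal Python sketch. Each learner pairs one fixed action with a bid computed from the exemplar's feature vector, and the learner with the highest bid supplies the team's action. The `Learner` class, the hand-written linear bid functions (stand-ins for evolved programs), and the logistic squashing of the raw output are all illustrative assumptions, not the authors' implementation.

```python
import math
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class Learner:
    action: int  # the single discrete action (class label) this learner may suggest
    # Hypothetical stand-in for an evolved bidding program: features -> raw output
    bid_program: Callable[[Sequence[float]], float]

    def bid(self, x: Sequence[float]) -> float:
        # Squash the raw program output into (0, 1); the logistic form is an assumption.
        return 1.0 / (1.0 + math.exp(-self.bid_program(x)))


def suggest_action(team: Sequence[Learner], x: Sequence[float]) -> int:
    """Every learner bids on exemplar x; the highest bidder wins and suggests its action."""
    winner = max(team, key=lambda learner: learner.bid(x))
    return winner.action


team = [
    Learner(action=0, bid_program=lambda x: 2.0 * x[0] - 1.0),
    Learner(action=1, bid_program=lambda x: 2.0 * x[1] - 1.0),
    Learner(action=1, bid_program=lambda x: x[0] + x[1] - 1.5),
]
print(suggest_action(team, [0.9, 0.1]))  # → 0 (the first learner bids highest)
print(suggest_action(team, [0.1, 0.8]))  # → 1 (the second learner bids highest)
```

Two learners share action 1 in this toy team, which mirrors the point that the number of learners per action emerges from their bidding behaviour rather than being fixed in advance.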

Notes

Hereafter, we will use ‘class’ and ‘action’ interchangeably.

As opposed to the test data employed for assessing the generalization performance of the trained classifier.

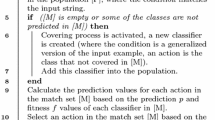

Compared to a parallel implementation, training overhead increases minimally, as Step 2(c) in Fig. 1 is executed \(|L|\) times more often, where \(L\) is the set of class labels.

An alternative approach might take the form of an auction-based model for credit assignment. Such models are based on the concept of ‘wealth’ [38]. However, it becomes increasingly difficult to establish robust mechanisms for deriving the ‘wealth’ property when the subset of exemplars from which wealth-based performance metrics are derived varies continuously, as is the case under the competitive coevolutionary paradigm that is central to scaling the BGP model to large problem domains.

For efficiency, micro-classifiers representing identical condition-action rules are recorded as a single macro-classifier. Each macro-classifier has an associated numerosity, which is used to weight calculations accordingly. Here, the algorithm is described in terms of micro-classifiers.
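The macro-classifier bookkeeping can be sketched in Python as follows, assuming rules are plain condition strings paired with an integer action. `Rule`, `to_macro`, `numerosity_weighted_mean`, and the per-rule prediction values are hypothetical names and numbers chosen for illustration; the sketch only shows that weighting each macro-classifier by its numerosity reproduces the same calculation over the underlying micro-classifiers. It is not the XCS update procedure itself.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Dict, Iterable


@dataclass(frozen=True)
class Rule:
    """A condition-action rule; frozen so identical rules hash to the same key."""
    condition: str  # e.g. a ternary string over {0, 1, #}
    action: int


def to_macro(micro: Iterable[Rule]) -> Counter:
    """Collapse identical micro-classifiers into macro-classifiers: Rule -> numerosity."""
    return Counter(micro)


def numerosity_weighted_mean(macro: Counter, value: Callable[[Rule], float]) -> float:
    """Weight each macro-classifier's value by its numerosity.

    The result equals the plain mean of the value over all micro-classifiers.
    """
    total = sum(macro.values())
    return sum(n * value(rule) for rule, n in macro.items()) / total


micro = [Rule("1#0", 1)] * 3 + [Rule("0##", 0)]
macro = to_macro(micro)
# Hypothetical per-rule values (e.g. reward predictions), for illustration only
prediction: Dict[Rule, float] = {Rule("1#0", 1): 10.0, Rule("0##", 0): 2.0}
print(len(macro), macro[Rule("1#0", 1)])  # → 2 3
print(numerosity_weighted_mean(macro, prediction.__getitem__))  # → 8.0
```

Storing four micro-classifiers as two macro-classifiers halves the number of rule evaluations here, while the numerosity weighting keeps the averaged quantity unchanged.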

Otherwise, the test data would have been used to build the model, compromising the independence of the test partition.

All datasets with more than two classes will be ‘unbalanced’, since each class can at best (i.e., when the number of exemplars belonging to each class is the same) account for \(\frac{1}{\text{number of classes}}\) of all exemplars.
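A short Python check of the best case described in this note: using exact fractions, a perfectly balanced dataset of \(k\) classes gives each class a share of exactly \(1/k\), which for \(k > 2\) is below one half, so every class is outnumbered by the remaining classes combined. The function name and the synthetic labels are illustrative only.

```python
from collections import Counter
from fractions import Fraction


def class_shares(labels):
    """Exact fraction of exemplars belonging to each class."""
    counts = Counter(labels)
    return {c: Fraction(k, len(labels)) for c, k in counts.items()}


# A perfectly balanced three-class dataset: 100 exemplars per class.
shares = class_shares([0, 1, 2] * 100)
print(max(shares.values()))  # → 1/3
```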

The removal of introns, which were found to account for between 60% and 90% of instructions in linear GP [51], was not performed.
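For readers unfamiliar with the term, structural introns in linear GP can be located with a single backward pass over the program that tracks which registers can still influence the output. The following Python sketch assumes straight-line code (no branches), register-only operands, and a hypothetical `Instr` representation; it illustrates the general idea only, and, as stated above, no such removal was applied in this work.

```python
from typing import List, NamedTuple, Set, Tuple


class Instr(NamedTuple):
    dest: int               # destination register
    op: str                 # operator symbol, e.g. "+", "-", "*"
    srcs: Tuple[int, ...]   # source registers


def effective_mask(program: List[Instr], output_regs: Set[int]) -> List[bool]:
    """Return True for effective instructions and False for structural introns."""
    live = set(output_regs)  # registers whose current value still reaches the output
    mask = [False] * len(program)
    for i in range(len(program) - 1, -1, -1):
        ins = program[i]
        if ins.dest in live:
            mask[i] = True
            live.discard(ins.dest)  # this write supplies the value later readers need...
            live.update(ins.srcs)   # ...so its operands become live instead
    return mask


program = [
    Instr(1, "*", (2, 3)),  # r1 = r2 * r3
    Instr(4, "+", (1, 1)),  # r4 = r1 + r1  (r4 is never read: intron)
    Instr(0, "+", (1, 2)),  # r0 = r1 + r2  (writes the output register)
    Instr(2, "-", (0, 3)),  # r2 = r0 - r3  (r2 is not read afterwards: intron)
]
mask = effective_mask(program, output_regs={0})
print(mask)  # → [True, False, True, False]
print(f"{mask.count(False) / len(mask):.0%} of instructions are introns")  # → 50%
```

Removing the `False` instructions would leave a shorter program with identical output, which is why intron removal is a common post-processing step even though it was omitted here.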

Given that 1,250,000 new learners are generated in Step 2 of the learning algorithm when training the learners of each action for 50,000 generations, roughly one in 127, 116, and 173 new learners is accepted into the population on THY when training for actions 0, 1, and 2, respectively.
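The figures in this note imply the following back-of-the-envelope quantities, sketched in Python. Only the 1,250,000 learners, the 50,000 generations, and the one-in-\(N\) ratios come from the text; the derived totals are approximations (the ratios are themselves rounded) and are not figures reported in the study.

```python
GENERATED_PER_ACTION = 1_250_000  # new learners generated per action (from the note)
GENERATIONS = 50_000              # training generations per action (from the note)

# New learners produced in each generation
per_generation = GENERATED_PER_ACTION // GENERATIONS

# "Roughly one in N" acceptance ratios on THY, keyed by action (from the note)
one_in = {0: 127, 1: 116, 2: 173}

# Implied approximate number of learners accepted over the whole run, per action
accepted = {action: round(GENERATED_PER_ACTION / n) for action, n in one_in.items()}
print(per_generation)  # → 25
print(accepted)        # → {0: 9843, 1: 10776, 2: 7225}
```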

Although a small mutation in a program’s genotype may yield a disproportionately large change in its phenotype.

References

J.R. Koza, Genetic Programming: On the Programming of Computers by Means of Natural Selection (MIT Press, Cambridge, MA, 1992)

W.B. Langdon, R. Poli, Foundations of Genetic Programming (Springer-Verlag, Berlin, 2002)

P.J. Angeline, J.B. Pollack, The evolutionary induction of subroutines, in Proceedings of the Fourteenth Annual Conference of the Cognitive Science Society (Lawrence Erlbaum, Hillsdale, NJ, 1992), pp. 236–241.

M. Brameier, W. Banzhaf, Evolving teams of predictors with linear genetic programming. Genet. Program. Evolvable Mach. 2(4), 381–407 (2001)

T. Soule, in Cooperative Evolution on the Intertwined Spirals Problem, ed. by E. Cantú-Paz, J.A. Foster, K. Deb, L.D. Davis, R. Roy, U.-M. O’Reilly, H.-G. Beyer, R. Standish, G. Kendall, S. Wilson, J. Wegener, D. Dasgupta, M.A. Potter, A.C. Schultz. Proceedings of the European Conference on Genetic Programming (Springer-Verlag, Berlin, 2003), pp. 434–442

R. Thomason, T. Soule, in Novel Ways of Improving Cooperation and Performance in Ensemble Classifiers, ed. by D. Thierens, H.-G. Beyer, J. Bongard, J. Branke, J.A. Clark, D. Cliff, C.B. Congdon, K. Deb, B. Doerr, T. Kovacs, S. Kumar, J.F. Miller, J. Moore, F. Neumann, M. Pelikan, R. Poli, K. Sastry, K.O. Stanley, T. Stutzle, R.A. Watson, I. Wegener. Proceedings of the Genetic and Evolutionary Computation Conference. (ACM Press, New York, NY, 2007), pp. 1708–1715

E.D. De Jong, J.B. Pollack, Ideal evaluation from coevolution. Evol. Comput. 12, 159–192 (2004)

S.G. Ficici, J.B. Pollack, Pareto Optimality in Coevolutionary Learning, ed. by J. Kelemen, P. Sosik. Proceedings of the 6th European Conference on Advances in Artificial Life (Springer-Verlag, Berlin, 2001), pp. 316–325

J. Noble, R.A. Watson, in Pareto Coevolution: Using Performance Against Coevolved Opponents in a Game as Dimensions for Pareto Selection, ed. by L. Spector, E.D. Goodman, A. Wu, W.B. Langdon, H.-M. Voigt, M. Gen, S. Sen, M. Dorigo, S. Pezeshk, M.H. Garzon, E. Burke. Proceedings of the Genetic and Evolutionary Computation Conference (Morgan Kaufmann, San Francisco, CA, 2001), pp. 493–500

M. Lemczyk, M.I. Heywood, in Training Binary GP Classifiers Efficiently: A Pareto-coevolutionary Approach, ed. by M. Ebner, M. O’Neill, A. Ekárt, L. Vanneschi, A.I. Esparcia-Alcázar. Proceedings of the European Conference on Genetic Programming (Springer-Verlag, Berlin, 2007), pp. 229–240

P. Lichodzijewski, M.I. Heywood, in Pareto-coevolutionary Genetic Programming for Problem Decomposition in Multi-class Classification, ed. by D. Thierens, H.-G. Beyer, J. Bongard, J. Branke, J.A. Clark, D. Cliff, C.B. Congdon, K. Deb, B. Doerr, T. Kovacs, S. Kumar, J.F. Miller, J. Moore, F. Neumann, M. Pelikan, R. Poli, K. Sastry, K.O. Stanley, T. Stutzle, R.A. Watson, I. Wegener. Proceedings of the Genetic and Evolutionary Computation Conference (ACM Press, New York, NY, 2007), pp. 464–471

A. McIntyre, M.I. Heywood, in Multi-Objective Competitive Coevolution for Efficient GP Classifier Problem Decomposition. Proceedings of the International Conference on Systems, Man and Cybernetics (IEEE Press, 2007), pp. 1930–1937

J.H. Holland, J.S. Reitman, Cognitive Systems Based on Adaptive Algorithms (Pattern Directed Inference Systems, 1978)

S. Wilson, Classifier fitness based on accuracy. Evol. Comput. 3(2), 149–175 (1995)

C.-C. Chang, C.-J. Lin, LIBSVM: A Library for Support Vector Machines, Version 2.85 (2007), Software available at http://www.csie.ntu.edu.tw/~cjlin/libsvm

E. Bauer, R. Kohavi, An empirical comparison of voting classification algorithms: bagging, boosting, and variants. Mach. Learn. 36(1–2), 105–139 (1999)

G. Folino, C. Pizzuti, G. Spezzano, GP ensembles for large-scale data classification. IEEE Trans. Evol. Comput. 10(5), 604–616 (2006)

H. Iba, in Bagging, Boosting and Bloating in Genetic Programming, ed. by W. Banzhaf, J. Daida, A.E. Eiben, M.H. Garzon, V. Honavar, M. Jakiela, R.E. Smith. Proceedings of the Genetic and Evolutionary Computation Conference (Morgan Kaufmann, San Francisco, CA, 1999), pp. 1053–1060

K. Imamura, T. Soule, R.B. Heckendorn, J.A. Foster, Behavioral diversity and a probabilistically optimal GP ensemble. Genet. Program. Evolvable Mach. 4(3), 235–253 (2003)

A.R. McIntyre, M.I. Heywood, in MOGE:GP Classification Problem Decomposition Using Multi-objective Optimization, ed. by M. Keijzer, M. Cattolico, D. Arnold, V. Babovic, C. Blum, P. Bosman, M.V. Butz, C. Coello Coello, D. Dasgupta, S.G. Ficici, J. Foster, A. Hernandez-Aguirre, G. Hornby, H. Lipson, P. McMinn, J. Moore, G. Raidl, R. Franz, C. Ryan, D. Thierens. Proceedings of the Genetic and Evolutionary Computation Conference (ACM Press, New York, NY, 2006), pp. 863–870

P.L. Lanzi, R.L. Riolo, Recent Trends in Learning Classifier Systems research. Advances in Evolutionary Computing: Theory and Applications (2003), pp. 955–988

M. Butz, S.W. Wilson, in An Algorithmic Description of XCS, ed. by P.L. Lanzi, W. Stolzmann, S.W. Wilson. IWLCS ’00: Revised Papers from the Third International Workshop on Advances in Learning Classifier Systems (Springer-Verlag, Berlin, 2001), pp. 253–272

C. Stone, L. Bull, For real! XCS with continuous-valued inputs. Evol. Comput. 11(3), 299–336 (2003)

S.W. Wilson, in Get Real! XCS With Continuous-Valued Inputs, ed. by P.L. Lanzi, W. Stolzmann, S.W. Wilson. Learning Classifier Systems, From Foundations to Applications (Springer-Verlag, Berlin, 2000), pp. 209–222

A.J. Bagnall, G.C. Cawley, in Learning Classifier Systems for Data Mining: A Comparison of XCS with Other Classifiers for the Forest Cover Data Set, ed. by D.C. Wunsch, M. Hasselmo, K. Venayagamoorthy, D. Wang. Proceedings of the International Joint Conference on Neural Networks, vol 3 (IEEE Press, 2003), pp. 1802–1807

E. Bernado-Mansilla, J.M. Garrell-Guiu, Accuracy-based learning classifier systems: models, analysis and applications to classification tasks. Evol. Comput. 11, 209–238 (2003)

C. Gathercole, P. Ross, in Dynamic Training Subset Selection for Supervised Learning in Genetic Programming, ed. by Y. Davidor, H.-P. Schwefel, R. Männer. Proceedings of the Third Conference on Parallel Problem Solving from Nature (Springer-Verlag, Berlin, 1994), pp. 312–321

F.H. Bennett III, J.R. Koza, J. Shipman, O. Stiffelman, in Building a Parallel Computer System for $18,000 that Performs a Half Peta-flop Per Day, ed. by W. Banzhaf, J. Daida, A.E. Eiben, M.H. Garzon, V. Honavar, M. Jakiela, R.E. Smith. Proceedings of the Genetic and Evolutionary Computation Conference (Morgan Kaufmann, San Francisco, CA, 1999), pp. 1484–1490

H. Juillé, J.B. Pollack, Massively parallel genetic programming. Adv. Genet. Program. 2, 339–357 (1996)

R. Curry, P. Lichodzijewski, M.I. Heywood, Scaling Genetic Programming to large datasets using hierarchical dynamic subset selection. IEEE Trans. Syst. Man. Cybern. B Cybern. 37(4), 1065–1073 (2007)

D. Song, M.I. Heywood, A.N. Zincir-Heywood, Training Genetic Programming on half a million patterns: An example from anomaly detection. IEEE Trans. Evol. Comput. 9(3), 225–239 (2005)

C.W.G. Lasarczyk, P. Dittrich, W. Banzhaf, Dynamic subset selection based on a fitness case topology. Evol. Comput. 12(2), 223–242 (2004)

D.-Y. Cho, B.-T. Zhang, in Genetic Programming with Active Data Selection, ed. by B. McKay, X. Yao, C.S. Newton, J.-H. Kim, T. Furuhashi. Simulated Evolution and Learning: Second Asia-Pacific Conference on Simulated Evolution and Learning (Springer-Verlag, London, UK, 1998), pp. 146–153

K. Tumer, D. Wolpert, A survey of collectives. Collectives and the Design of Complex Systems (2004), pp. 1–42

L. Panait, S. Luke, Cooperative Multi-agent Learning: The State of the art. Auton. Agent. Multi. Agent. Syst. 11(3), 387–434 (2005)

L. Panait, S. Luke, R.P. Wiegand, Biasing coevolutionary search for optimal multiagent behaviors. IEEE Trans. Evol. Comput. 10(6), 629–645 (2006)

M. Potter, K. de Jong, Cooperative coevolution: An architecture for evolving coadapted subcomponents. Evol. Comput. 8(1), 1–29 (2000)

P. Lichodzijewski, M.I. Heywood, in GP Classifier Problem Decomposition Using First-price and Second-price Auctions, ed. by M. Ebner, M. O’Neill, A. Ekárt, L. Vanneschi, A.I. Esparcia-Alcázar. Proceedings of the European Conference on Genetic Programming (Springer-Verlag, Berlin, 2007), pp. 137–147

M. Laumanns, L. Thiele, K. Deb, E. Zitzler, Combining convergence and diversity in evolutionary multiobjective optimization. Evol. Comput. 10(3), 263–282 (2002)

M. Brameier, W. Banzhaf, Linear Genetic Programming (Springer, New York, NY, 2007)

S.W. Wilson, in Generalization in the XCS Classifier System, ed. by J.R. Koza, W. Banzhaf, K. Chellapilla, K. Deb, M. Dorigo, D. Fogel, M. Garzon, D.E. Goldberg, H. Iba, R. Riolo. Genetic Programming 1998: Proceedings of the Third Annual Conference (Morgan Kaufmann, San Francisco, CA, 1998), pp. 665–674

A. Orriols-Puig, E. Bernado-Mansilla, in Bounding XCS’s Parameters for Unbalanced Datasets, ed. by M. Keijzer, M. Cattolico, D. Arnold, V. Babovic, C. Blum, P. Bosman, M.V. Butz, C. Coello Coello, D. Dasgupta, S.G. Ficici, J. Foster, A. Hernandez-Aguirre, G. Hornby, H. Lipson, P. McMinn, J. Moore, G. Raidl, R. Franz, C. Ryan, D. Thierens. Proceedings of the Genetic and Evolutionary Computation Conference (ACM Press, New York, NY, 2006), pp. 1561–1568

M.V. Butz, Documentation of XCS+TS C-Code 1.2. IlliGAL Technical Report 200323 (2003)

T. Kovacs, in Deletion Schemes for Classifier Systems, ed. by W. Banzhaf, J. Daida, A.E. Eiben, M.H. Garzon, V. Honavar, M. Jakiela, R.E. Smith. Proceedings of the Genetic and Evolutionary Computation Conference (Morgan Kaufmann, San Francisco, CA, 1999), pp. 329–336

M. Butz, K. Sastry, D.E. Goldberg, in Tournament Selection in XCS, ed. by E. Cantú-Paz, J.A. Foster, K. Deb, L.D. Davis, R. Roy, U.-M. O’Reilly, H.-G. Beyer, R. Standish, G. Kendall, S. Wilson, J. Wegener, D. Dasgupta, M.A. Potter, A.C. Schultz. Proceedings of the Genetic and Evolutionary Computation Conference (Springer-Verlag, Berlin, 2003), pp. 1857–1869

S. Hettich, S.D. Bay, The UCI KDD Archive (University of California, Dept. of Information and Comp. Science, Irvine, CA, 1999), http://kdd.ics.uci.edu

D.J. Newman, S. Hettich, C.L. Blake, C.J. Merz, UCI Repository of Machine Learning Databases (University of California, Dept. of Information and Comp. Science, Irvine, CA, 1998), http://www.ics.uci.edu/~mlearn/mlrepository.html

N. Japkowicz, in Why Question Machine Learning Evaluation Methods? (An Illustrative Review of the Shortcomings of Current Methods), ed. by C. Drummond, W. Elazmeh, N. Japkowicz. AAAI-2006 Workshop on Evaluation Methods for Machine Learning, Technical Report WS-06-06 (AAAI Press, Menlo Park, CA, 2006), pp. 6–11

G. Weiss, F. Provost, The Effect of Class Distribution on Classifier Learning. Technical Report ML-TR 43 (Department of Computer Science, Rutgers University, 2001)

R.-E. Fan, P.-H. Chen, C.-J. Lin, Working set selection using second order information for training support vector machines. J. Mach. Learn. Res. 6, 1889–1918 (2005)

M. Brameier, W. Banzhaf, A comparison of linear genetic programming and neural networks in medical data mining. IEEE Trans. Evol. Comput. 5(1), 17–26 (2001)

R.A. Watson, J.B. Pollack, in Coevolutionary Dynamics in a Minimal Substrate, ed. by L. Spector, E.D. Goodman, A. Wu, W.B. Langdon, H.-M. Voigt, M. Gen, S. Sen, M. Dorigo, S. Pezeshk, M.H. Garzon, E. Burke. Proceedings of the Genetic and Evolutionary Computation Conference (Morgan Kaufmann, San Francisco, CA, 2001), pp. 702–709

M. Lemczyk, M.I. Heywood, in Pareto-coevolutionary Genetic Programming Classifier, ed. by M. Keijzer, M. Cattolico, D. Arnold, V. Babovic, C. Blum, P. Bosman, M.V. Butz, C. Coello Coello, D. Dasgupta, S.G. Ficici, J. Foster, A. Hernandez-Aguirre, G. Hornby, H. Lipson, P. McMinn, J. Moore, G. Raidl, R. Franz, C. Ryan, D. Thierens. Proceedings of the Genetic and Evolutionary Computation Conference (ACM Press, New York, NY, 2006), pp. 945–946

Acknowledgements

This work was conducted while Peter Lichodzijewski held a Precarn Graduate Scholarship and a Killam Postgraduate Scholarship. Malcolm I. Heywood would like to thank the Natural Sciences and Engineering Research Council of Canada, the Mathematics of Information Technology and Complex Systems network, and the Canada Foundation for Innovation for their financial support.


Cite this article

Lichodzijewski, P., Heywood, M.I. Coevolutionary bid-based genetic programming for problem decomposition in classification. Genet Program Evolvable Mach 9, 331–365 (2008). https://doi.org/10.1007/s10710-008-9067-9


DOI: https://doi.org/10.1007/s10710-008-9067-9