Abstract

Task-based approaches with dynamic load balancing are well suited to exploiting parallelism in irregular applications. For such applications, the execution time of tasks often cannot be predicted because it depends on the input. A static assignment of tasks to execution resources therefore usually does not lead to the best performance. Dynamic load balancing is also beneficial in heterogeneous execution environments. In this article, a new adaptive data structure is proposed for storing and balancing a large number of tasks, allowing efficient and flexible task management. Dynamically adjusted blocks of tasks can be moved between execution resources, enabling efficient load balancing with low overhead that is independent of the actual number of tasks stored. We have integrated the new approach into a runtime system for executing task-based applications on shared address spaces. Runtime experiments with several irregular applications with different execution schemes show that the new adaptive runtime system achieves good performance even in situations where other approaches fail to achieve comparable results.
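The idea of moving whole blocks of tasks between execution resources can be illustrated with a minimal sketch. This is not the data structure proposed in the article: the names `BlockedTaskPool`, `steal_block`, `accept_block`, and `balance` are hypothetical, the block capacity is fixed here although the abstract describes dynamically adjusted blocks, and the code is single-threaded with no synchronization. It only shows why transferring one block costs the same regardless of how many tasks the pool holds.

```python
from collections import deque

# Hypothetical fixed size; the article adjusts block sizes dynamically.
BLOCK_CAPACITY = 4


class BlockedTaskPool:
    """Per-worker pool that stores tasks in blocks (sketch, no locking)."""

    def __init__(self, block_capacity=BLOCK_CAPACITY):
        self.block_capacity = block_capacity
        self.blocks = deque()  # each block is a plain list of tasks

    def put(self, task):
        # Open a new block when there is none or the newest one is full.
        if not self.blocks or len(self.blocks[-1]) >= self.block_capacity:
            self.blocks.append([])
        self.blocks[-1].append(task)

    def get(self):
        # The owner takes tasks from its newest block (LIFO order).
        if not self.blocks:
            return None
        task = self.blocks[-1].pop()
        if not self.blocks[-1]:
            self.blocks.pop()  # discard the block once it is empty
        return task

    def steal_block(self):
        # A thief removes the whole oldest block in a single deque operation,
        # so the cost does not grow with the number of stored tasks.
        return self.blocks.popleft() if self.blocks else None

    def accept_block(self, block):
        # Received blocks are placed at the old end of the pool.
        if block:
            self.blocks.appendleft(block)

    def __len__(self):
        return sum(len(block) for block in self.blocks)


def balance(victim, thief):
    """Move one block from victim to thief; return the number of tasks moved."""
    block = victim.steal_block()
    if block is None:
        return 0
    thief.accept_block(block)
    return len(block)
```

In this sketch, an idle worker would call `balance(busy_pool, own_pool)` and then continue with `own_pool.get()`. A real shared-memory runtime would additionally need locking or lock-free operations around the block transfer.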

References

Agrawal, K., He, Y., Leiserson, C.E.: Adaptive work stealing with parallelism feedback. In: Yelick, K.A., Mellor-Crummey, J.M. (eds.) Proceedings of the ACM SIGPLAN Symposium on Principles and Practice of Parallel Programming (22th PPOPP’2007), pp. 112–120. ACM, New york (2007)

Allen, E., Chase, D., Hallett, J., Luchangco, V., Maessen, J.-W., Ryu, S., Steele, G.L. Jr., Tobin-Hochstadt, S.: The Fortress Language Specification, version 1.0beta. Technical report, SUN, Mar (2007)

Banicescu, I., Hummel, S.F.: Balancing processor loads and exploiting data locality in n-body simulations. In: Supercomputing ’95: Proceedings of the 1995 ACM/IEEE Conference on Supercomputing (CDROM), p. 43. ACM, New York, NY, USA (1995)

Banicescu I., Velusamy V., Devaprasad J.: On the scalability of dynamic scheduling scientific applications with adaptive weighted factoring. Clust. Comput. J. Netw. Softw. Tools Appl. 6, 215–226 (2003)

Bellens, P., Perez, J.M., Badia, R.M., Labarta, J.: CellSs: A programming model for the cell BE architecture. In: Proceedings of the 2006 ACM/IEEE SC’06 Conference. IEEE (2006)

Blumofe, R., Joerg, C., Kuszmaul, B., Leiserson, C., Randall, K., Zhou, Y.: Cilk: An efficient multithreaded runtime system. In: Proceedings of the 5th Symposium on Principles and Practice of Parallel Programming (PPOPP’1995), pp. 55–69. ACM (1995)

Blumofe, R., Leiserson, C.: Scheduling multithreaded computations by work stealing. In: Proceedings of the 35th Annual Symposium on Foundations of Computer Science, pp. 356–368. IEEE Computer Society (1994)

Burton, F.W., Sleep, M.R.: Executing functional programs on a virtual tree of processors. In: FPCA ’81: Proceedings of the 1981 Conference on Functional Programming Languages and Computer Architecture, pp. 187–194. ACM, New York, NY, USA. (1981)

Cariño R., Banicescu I.: Dynamic load balancing with adaptive factoring methods in scientific applications. J. Supercomput. 44(1), 41–63 (2008)

Charles, P., Grothoff, C., Saraswat, V.A., Donawa, C., Kielstra, A., Ebcioglu, K., von Praun, C., Sarkar, V.: X10: An object-oriented approach to non-uniform cluster computing. In: Johnson, R., Gabriel, R.P. (eds.) Proceedings of the 20th Annual ACM SIGPLAN Conference on Object-Oriented Programming, Systems, Languages, and Applications (OOPSLA), pp. 519–538. ACM, New york (2005)

Callahan, D., Chamberlain, B.L., Zima, H.P.: The cascade high productivity language. In: 9th international workshop on high-level parallel programming models and supportive environments (HIPS’04), pp. 52–60. IEEE (2004)

Dinan, J., Larkins, D., Sadayappan, P., Krishnamoorthy, S., Nieplocha, J.: Scalable work stealing. In: SC ’09: Proceedings of the Conference on High Performance Computing Networking, Storage and Analysis, pp. 1–11. ACM (2009)

Duran, A., Corbalan, J., Ayguade, E.: An adaptive cut-off for task parallelism. In: SC’08 USB Key. ACM/IEEE, Austin, TX, Nov. 2008. Universitat Politecnica de Catalunya (2008)

Halstead, R.H. Jr.: Implementation of multilisp: Lisp on a multiprocessor. In: LFP ’84: Proceedings of the 1984 ACM Symposium on LISP and Functional Programming, pp. 9–17. ACM, New York, NY, USA. (1984)

Hanrahan P., Salzman D., Aupperle L.: A rapid hierarchical radiosity algorithm. ACM SIGGRAPH Comput. Graph. 25(4), 197–206 (1991)

Hendler, D., Shavit, N.: Non-blocking steal-half work queues. In: Proceedings of the Twenty-First Annual Symposium on Principles of Distributed Computing (PODC’02), pp. 280–289. ACM (2002)

Hippold, J., Rünger, G.: Task pool teams for implementing irregular algorithms on clusters of SMPs. In: Proceedings of IPDPS. Nice, France, CD-ROM (2003)

Hoare C.A.R.: Quicksort. Comput. J. 5(4), 10–15 (1962)

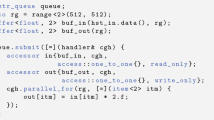

Hoffmann, R., Rauber, T.: Fine-grained task scheduling using adaptive data structures. In: Proceedings of Euro-Par 2008, vol. 5168 of LNCS, pp. 253–262. Springer (2008)

Kalé L.V., Krishnan S.: CHARM++. In: Wilson, G.V., Lu, P. (eds) Parallel Programming in C++ , chap. 5, pp. 175–214. MIT Press, Cambridge, MA (1996)

Kumar S., Hughes C.J., Nguyen A.: Carbon: Architectural support for fine-grained parallelism on chip multiprocessors. ACM SIGARCH Comput. Arch. News 35(2), 162–173 (2007)

Kumar V., Grama A., Vempaty N.: Scalable load balancing techniques for parallel computers. J. Parallel Distrib. Comput. 22(1), 60–79 (1994)

Polychronopoulos C., Kuck D.: Guided self-scheduling: A practical scheduling scheme for parallel supercomputers. IEEE Trans. Comput. C-36(12), 1425–1439 (1987)

Power Architecture editors, developerWorks, IBM: Just Like Being There: Papers from the Fall Processor Forum 2005: Unleashing the Power of the Cell Broadband Engine—A Programming Model Approach. IBM developerWorks (2005)

Reinders, J.: Intel Threading Building Blocks: Outfitting C++ for Multi-core Processor Parallelism. O’Reilly (2007)

Schloegel, K., Karypis, G., Kumar, V.: A Unified algorithm for load-balancing adaptive scientific simulations. In: Proceedings of Supercomputing’2000, pp. 75–75. IEEE (2000)

Singh, J.: Parallel Hierarchical N-Body Methods and their Implication for Multiprocessors. PhD thesis, Stanford University (1993)

Singh J.P., Gupta A., Levoy M.: Parallel visualization algorithms: Performance and architectural implications. IEEE Comput. 27(7), 45–55 (1994)

Singh J.P., Holt C., Tosuka T., Gupta A., Hennessy J.L.: Load balancing and data locality in adaptive hierarchical n-body methods: Barnes-hut, fast multipole, and radiosity. J. Parallel Distrib. Comput. 27(2), 118–141 (1995)

Woo, S.C., Ohara, M., Torrie, E., Singh, J.P., Gupta, A.: The SPLASH-2 programs: characterization and methodological considerations. In: Proceedings of the 22nd International Symposium on Computer Architecture, pp. 24–36. ACM, Santa Margherita Ligure, Italy (1995)

Wu, M., Li, X.-F.: Task-pushing: A scalable parallel GC marking algorithm without synchronization operations. In: Proceedings of the 21th IEEE International Parallel and Distributed Processing Symposium (IPDPS 2007). IEEE (2007)

Xu C., Lau F.C.: Load Balancing in Parallel Computers: Theory and Practice. Kluwer Academic Publishers, Dordrecht (1997)

Cite this article

Hoffmann, R., Rauber, T. Adaptive Task Pools: Efficiently Balancing Large Number of Tasks on Shared-address Spaces. Int J Parallel Prog 39, 553–581 (2011). https://doi.org/10.1007/s10766-010-0156-z

DOI: https://doi.org/10.1007/s10766-010-0156-z