Abstract

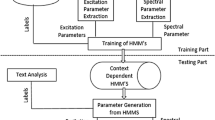

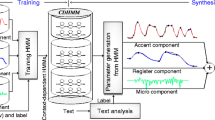

The HMM-based Bengali speech synthesis system (Bengali-HTS) generates highly intelligible speech, but its naturalness is inadequate even though it is trained on a large speech corpus. For interrogative, imperative and exclamatory sentences, the naturalness of the synthesized speech falls drastically. This paper proposes a method to overcome this problem by modifying the \(\hbox {F}_{0}\) contour of the synthetic speech based on the Fujisaki model. The Fujisaki model features of different types of Bengali sentences are analyzed for generating the \(\hbox {F}_{0}\) contour. Because these features depend on the prosodic word/phrase boundaries of the sentence, a two-layer supervised classification and regression tree is trained to predict those boundaries. The Fujisaki model then generates the \(\hbox {F}_{0}\) contour from the input text, using the predicted prosodic word/phrase boundaries and the segmental duration information from the HMM-based speech synthesis system. Moreover, the prosodic structure of the sentence, rather than its lexical structure, is used for HMM training. MOS and preference tests show that the proposed method significantly improves the overall quality of the synthesized speech compared with Bengali-HTS.
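The abstract names the Fujisaki model but does not state it. As a hedged illustration, the sketch below implements the commonly cited formulation (Fujisaki and Hirose, 1984). In this formulation, the logarithm of \(\hbox {F}_{0}\) is a base value plus phrase components (responses to impulse-like phrase commands) and accent components (responses to step-like accent commands):

\[ \ln F_0(t) = \ln F_b + \sum_{i=1}^{I} A_{p_i}\, G_p(t - T_{0i}) + \sum_{j=1}^{J} A_{a_j} \left[ G_a(t - T_{1j}) - G_a(t - T_{2j}) \right] \]

Here \(G_p(t) = \alpha^2 t e^{-\alpha t}\) and \(G_a(t) = \min[1 - (1 + \beta t)e^{-\beta t}, \gamma]\) for \(t \ge 0\), and both are zero for \(t < 0\). The constants \(\alpha = 3.0\,\mathrm{s}^{-1}\), \(\beta = 20.0\,\mathrm{s}^{-1}\) and \(\gamma = 0.9\) are typical literature values assumed here. They are not parameters reported in this paper. The function names and the 5 ms frame shift are likewise illustrative choices. In the proposed system, command timings would be derived from the predicted prosodic word/phrase boundaries and the HTS segmental durations. Here they are hand-set hypothetical values.

```python
import math
from typing import List, Tuple

# A phrase command is (onset time T0 in seconds, magnitude Ap).
PhraseCmd = Tuple[float, float]
# An accent command is (onset T1 in seconds, offset T2 in seconds, amplitude Aa).
AccentCmd = Tuple[float, float, float]


def phrase_response(t: float, alpha: float = 3.0) -> float:
    """Gp(t) = alpha^2 * t * exp(-alpha * t) for t >= 0, else 0."""
    if t < 0.0:
        return 0.0
    return alpha * alpha * t * math.exp(-alpha * t)


def accent_response(t: float, beta: float = 20.0, gamma: float = 0.9) -> float:
    """Ga(t) = min(1 - (1 + beta * t) * exp(-beta * t), gamma) for t >= 0, else 0."""
    if t < 0.0:
        return 0.0
    return min(1.0 - (1.0 + beta * t) * math.exp(-beta * t), gamma)


def fujisaki_f0(t: float, fb: float,
                phrases: List[PhraseCmd], accents: List[AccentCmd],
                alpha: float = 3.0, beta: float = 20.0, gamma: float = 0.9) -> float:
    """F0 in Hz at time t (seconds).

    Sums base, phrase and accent components in the log domain.
    The default alpha/beta/gamma are typical literature values (an assumption).
    """
    log_f0 = math.log(fb)
    for t0, ap in phrases:
        log_f0 += ap * phrase_response(t - t0, alpha)
    for t1, t2, aa in accents:
        # The accent command is a pulse: a step up at T1 and a step down at T2.
        log_f0 += aa * (accent_response(t - t1, beta, gamma)
                        - accent_response(t - t2, beta, gamma))
    return math.exp(log_f0)


def f0_contour(duration: float, frame_shift: float, fb: float,
               phrases: List[PhraseCmd], accents: List[AccentCmd]) -> List[float]:
    """Sample the contour once per frame (hypothetical helper for frame-level F0)."""
    n_frames = int(round(duration / frame_shift))
    return [fujisaki_f0(i * frame_shift, fb, phrases, accents) for i in range(n_frames)]


if __name__ == "__main__":
    # Hypothetical commands for a 1 s utterance; not values from the paper.
    phrases = [(0.0, 0.5)]
    accents = [(0.30, 0.60, 0.4)]
    contour = f0_contour(1.0, 0.005, 100.0, phrases, accents)
    print(len(contour), round(max(contour), 1))
```

The model works in the log domain, so commands scale \(\hbox {F}_{0}\) multiplicatively. As a result, the same accent amplitude produces a larger rise in Hz on a higher base frequency.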

About this article

Cite this article

Mukherjee, S., Mandal, S.K.D. \(\hbox {F}_{0}\) contour generation and synthesis using Bengali HMM-based speech synthesis system. Int J Speech Technol 18, 25–36 (2015). https://doi.org/10.1007/s10772-014-9247-3
