Abstract

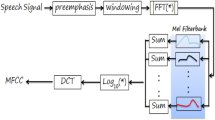

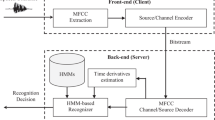

Conventional Hidden Markov Model (HMM) based Automatic Speech Recognition (ASR) systems generally use cepstral features as acoustic observations and phonemes as basic linguistic units. Mel-Frequency Cepstral Coefficients (MFCCs) are among the most powerful features currently used in ASR systems. Speech recognition is inherently complicated by the variability of the speech signal, both within and across speakers. This variability leads to several kinds of mismatch between acoustic features and acoustic models and hence degrades system performance. The sensitivity of MFCCs to this variability has motivated many researchers to investigate additional speech feature parameters that make the acoustic models more robust and thus improve system performance. Combining diverse acoustic feature sets has great potential to enhance the performance of ASR systems. This paper is part of ongoing research efforts to build an accurate Arabic ASR system for teaching and learning purposes. It addresses the integration of complementary features into standard HMMs to make them more robust and thus improve their recognition accuracy. The complementary features investigated in this work are voiced formants and pitch, in combination with conventional MFCC features. A series of experiments under various combination strategies was performed to determine which of these integrated features significantly improve system performance. The Cambridge HTK tools were used as the development environment. Experimental results showed that the error rate decreased, and the results appear very promising even without the use of language models.
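As a rough illustration of what integrating complementary features can look like, the sketch below appends a pitch value and formant frequencies to each frame's MFCC vector. This is simple frame-level concatenation, which is only one possible strategy; the abstract does not say which combination strategies the paper evaluates. The function name `combine_features`, the toy values, the convention that a pitch of 0 marks an unvoiced frame, and the zeroing of formants in unvoiced frames are all assumptions made for illustration.

```python
from typing import List, Sequence


def combine_features(
    mfcc: Sequence[Sequence[float]],
    pitch: Sequence[float],
    formants: Sequence[Sequence[float]],
) -> List[List[float]]:
    """Concatenate per-frame MFCC, pitch, and formant values into one vector per frame."""
    if not (len(mfcc) == len(pitch) == len(formants)):
        raise ValueError("all feature streams must have the same number of frames")

    combined = []
    for cepstra, f0, frame_formants in zip(mfcc, pitch, formants):
        # Assumption: the pitch tracker reports 0 for unvoiced frames.
        voiced = f0 > 0.0
        # Assumption: formants are kept only for voiced frames and zeroed otherwise.
        used = list(frame_formants) if voiced else [0.0] * len(frame_formants)
        combined.append(list(cepstra) + [f0] + used)
    return combined


# Toy data (hypothetical): 2 frames, 3 cepstral coefficients, 2 formants per frame.
mfcc = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
pitch = [120.0, 0.0]  # second frame is unvoiced
formants = [[700.0, 1200.0], [650.0, 1100.0]]

features = combine_features(mfcc, pitch, formants)
```

Each resulting vector has the MFCC dimension plus one pitch value plus the number of formants, so the HMM observation dimension would have to be enlarged to match.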


Acknowledgements

This work uses the speech database produced by a project previously funded by King Abdulaziz City for Science and Technology (KACST) in Saudi Arabia under grant number “AT – 25–113”.


Cite this article

Khelifa, M.O.M., Elhadj, Y.M., Abdellah, Y. et al. Constructing accurate and robust HMM/GMM models for an Arabic speech recognition system. Int J Speech Technol 20, 937–949 (2017). https://doi.org/10.1007/s10772-017-9456-7


DOI: https://doi.org/10.1007/s10772-017-9456-7