Abstract

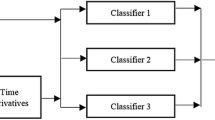

In this work, we develop a speech mode classification (SMC) model to improve the performance of a phone recognition system (PRS). We explore vocal tract system, excitation source, and prosodic features for developing the SMC model. These features are extracted from the voiced regions of the speech signal. Conversation, extempore, and read speech are considered as the three modes of speech. The vocal tract component of speech is represented using Mel-frequency cepstral coefficients (MFCCs). The excitation source features are captured through Mel power differences of spectrum in sub-bands (MPDSS) and residual Mel-frequency cepstral coefficients (RMFCCs) of the speech signal. The prosodic information is extracted from pitch and intensity. Speech mode classification models are developed using these features both independently and in fusion. Experiments are carried out on a Bengali speech corpus to analyze the accuracy of the speech mode classification models built with an artificial neural network (ANN), naive Bayes, support vector machines (SVMs), and k-nearest neighbor (KNN) classifiers. These four classification models are then combined using a maximum voting approach to obtain the best overall performance. The results show that the speech mode classification model developed using the fusion of vocal tract system, excitation source, and prosodic features yields the best performance, with an accuracy of 98%. Finally, the proposed speech mode classifier is integrated into the PRS, and the accuracy of the phone recognition system is observed to improve by 11.08%.
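
The maximum voting step that combines the four classifiers can be illustrated with a minimal Python sketch. The abstract does not say whether votes are taken per utterance or per segment, nor how ties are broken, so this sketch assumes one vote per classifier per utterance and breaks ties in favour of the label predicted by the earliest classifier in a chosen priority order (for example, the classifier with the highest standalone accuracy). The function names `max_vote` and `fuse_utterances`, the priority order, and the example predictions are all hypothetical, not taken from the paper.

```python
from collections import Counter
from typing import List, Sequence


def max_vote(predictions: Sequence[str]) -> str:
    """Maximum (majority) vote over the labels predicted for one utterance.

    `predictions` holds one speech-mode label per classifier, listed in an
    assumed priority order (e.g. most accurate classifier first). If two or
    more labels tie for the most votes, the tied label predicted by the
    earliest classifier in that order wins (an assumed tie-break rule).
    """
    if not predictions:
        raise ValueError("need at least one prediction")
    counts = Counter(predictions)
    top = max(counts.values())
    for label in predictions:  # scan classifiers in priority order
        if counts[label] == top:
            return label
    raise AssertionError("unreachable: some label must reach the top count")


def fuse_utterances(per_classifier: Sequence[Sequence[str]]) -> List[str]:
    """Apply max_vote utterance by utterance.

    per_classifier[k][i] is classifier k's label for utterance i.
    """
    if len({len(labels) for labels in per_classifier}) > 1:
        raise ValueError("every classifier must label the same utterances")
    # zip(*...) regroups the labels so each tuple holds all votes for one utterance
    return [max_vote(votes) for votes in zip(*per_classifier)]


# Hypothetical predictions from four classifiers, in an assumed priority order.
svm = ["read", "conversation", "extempore"]
ann = ["read", "extempore", "extempore"]
knn = ["conversation", "extempore", "read"]
nb = ["read", "conversation", "read"]

print(fuse_utterances([svm, ann, knn, nb]))
# ['read', 'conversation', 'extempore']
```

With four voters and three modes, a 2-2 split is possible (the second and third utterances above), which is why some tie-break rule has to be fixed in advance; a different rule, such as falling back to summed classifier confidences, would be an equally reasonable assumption.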

About this article

Cite this article

Tripathi, K., Rao, K.S. Improvement of phone recognition accuracy using speech mode classification. Int J Speech Technol 21, 489–500 (2018). https://doi.org/10.1007/s10772-017-9483-4

DOI: https://doi.org/10.1007/s10772-017-9483-4