Abstract

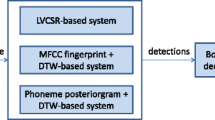

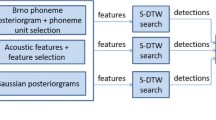

Spoken language processing is challenging in the multilingual and mixlingual scenarios found in linguistically diverse regions such as the Indian subcontinent. A common articulatory-based framework is explored for representing the phonemes of different languages. This framework is designed to handle features such as aspirated plosives, nasalized vowels, combined letters, and the unvoiced retroflex plosive, which are found in the majority of Indian languages. It is trained with two languages. Different strategies, such as transfer training and joint training, are studied to adapt English-trained neural networks with a smaller amount of Bangla data. It is observed that such training improves Query-by-Example Spoken Term Detection (QbE-STD) not only in a language of the same language family, such as Hindi, but also in other Indian languages such as Tamil and Telugu. While cross-lingual adaptation of neural networks with a language-specific softmax layer has been studied earlier in the context of speech recognition, this work presents an architecture that is language independent up to the softmax layer. It is observed that this architecture has higher accuracy for unseen languages, is more compact, and can be adapted more easily to new languages than the classic architecture based on phoneme posteriorgrams.
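To make the idea of a shared articulatory representation concrete, the following minimal sketch encodes a few phonemes as binary vectors over articulatory classes. The class list, the phoneme symbols, and the helper names `encode` and `shared_classes` are illustrative assumptions and are not the label set or code used in the paper; the point is only that an aspirated plosive or a nasalized vowel can be described with the same classes as its plain counterpart plus one extra attribute.

```python
# Hypothetical articulatory class inventory (an assumption for illustration,
# not the set of classes used by the authors).
ARTICULATORY_CLASSES = [
    "vowel", "plosive", "nasal", "velar", "retroflex", "bilabial",
    "voiced", "aspirated", "nasalized",
]

# Illustrative phoneme descriptions; the ASCII symbols are made up for this sketch.
PHONEMES = {
    "k":  {"plosive", "velar"},               # unvoiced velar plosive
    "kh": {"plosive", "velar", "aspirated"},  # aspirated counterpart of "k"
    "g":  {"plosive", "velar", "voiced"},     # voiced velar plosive
    "tt": {"plosive", "retroflex"},           # unvoiced retroflex plosive
    "m":  {"nasal", "bilabial", "voiced"},    # bilabial nasal
    "a":  {"vowel", "voiced"},                # plain vowel
    "an": {"vowel", "voiced", "nasalized"},   # nasalized vowel
}


def encode(phoneme):
    """Return a binary vector marking which articulatory classes apply."""
    attrs = PHONEMES[phoneme]
    return [1 if c in attrs else 0 for c in ARTICULATORY_CLASSES]


def shared_classes(p, q):
    """Return the articulatory classes two phonemes have in common."""
    return PHONEMES[p] & PHONEMES[q]


if __name__ == "__main__":
    for symbol in ("k", "kh", "a", "an"):
        print(symbol, encode(symbol))
    print(sorted(shared_classes("k", "kh")))
```

Under these assumptions, a phoneme that is absent from one language still maps onto classes that language already uses, which is the intuition behind applying such a representation to unseen languages.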

Acknowledgements

The authors would like to thank Dr. K. Samudravijaya (TIFR) and Dr. S. Lata (DEITY) for providing Hindi data and Dr. Suryakanth V. Gangashetty (IIIT, Hyderabad) for providing Telugu data. The first author would like to thank his manager Mr. Biren Karmakar and Mr. Vipin Tyagi, Executive Director, CDOT, New Delhi for their permission to carry out this research.

Cite this article

Popli, A., Kumar, A. Multilingual query-by-example spoken term detection in Indian languages. Int J Speech Technol 22, 131–141 (2019). https://doi.org/10.1007/s10772-018-09585-3

DOI: https://doi.org/10.1007/s10772-018-09585-3