Abstract

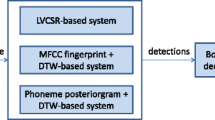

This study proposes a new approach to improving spoken term detection (STD) using acoustic word embeddings (AWEs). Our model combines convolutional neural networks (CNNs) with long short-term memory (LSTM) networks to capture sequential information and produce fixed-dimensional, word-level embeddings. We introduce a novel deep word discrimination loss that makes these embeddings more distinctive and thereby improves word differentiation. We also develop a matching scheme that pairs a neural network framework with a text-to-speech (TTS) technique to generate acoustic embeddings from text. These text-derived embeddings are crucial for effective cross-modal retrieval and audio indexing, especially for detecting unseen words. Experimental results show that our method outperforms traditional baselines on word discrimination tasks, achieving higher mean average precision (mAP) scores. Furthermore, our matching scheme significantly improves spoken term detection for both regular and unseen words, which could pave the way for future advances in audio indexing, cross-modal retrieval, and search.
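The abstract does not give the matching or evaluation code. The sketch below is illustrative only, not the authors' implementation, and rests on several assumptions:

- Every indexed speech segment and every query has already been mapped to a fixed-dimensional embedding by some encoder. A query can be a spoken example or a TTS rendering of a typed term.
- The encoder, the toy 2-D vectors, and the helper names (`cosine_similarity`, `rank_segments`, `average_precision`, `mean_average_precision`) are hypothetical.
- mAP is computed in one common retrieval-style way: rank the index by cosine similarity for each query, score the ranking with average precision, and average over queries. The paper's exact word-discrimination protocol may define AP differently, for example over same/different word pairs.

```python
import math
from typing import Dict, List, Sequence, Set

Vector = Sequence[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine similarity between two fixed-dimensional embeddings (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_segments(query: Vector, index: Dict[str, Vector]) -> List[str]:
    """Order indexed segment IDs from most to least similar to the query embedding."""
    return sorted(index, key=lambda seg_id: cosine_similarity(query, index[seg_id]), reverse=True)


def average_precision(ranked: List[str], relevant: Set[str]) -> float:
    """Mean of the precision values at each rank where a relevant segment appears."""
    if not relevant:
        return 0.0
    hits = 0
    precision_sum = 0.0
    for rank, seg_id in enumerate(ranked, start=1):
        if seg_id in relevant:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / len(relevant)


def mean_average_precision(
    queries: Dict[str, Vector],
    index: Dict[str, Vector],
    labels: Dict[str, str],
) -> float:
    """Average per-query AP; a segment is relevant if its word label equals the query word."""
    ap_values = []
    for word, query_emb in queries.items():
        relevant = {seg_id for seg_id, label in labels.items() if label == word}
        ap_values.append(average_precision(rank_segments(query_emb, index), relevant))
    if not ap_values:
        return 0.0
    return sum(ap_values) / len(ap_values)


# Hypothetical toy embeddings standing in for encoder outputs of indexed segments.
index = {
    "seg1": [1.0, 0.0],
    "seg2": [0.9, 0.1],
    "seg3": [0.0, 1.0],
    "seg4": [0.1, 0.9],
}
labels = {"seg1": "hello", "seg2": "hello", "seg3": "world", "seg4": "world"}
# Hypothetical query embeddings, e.g. from a spoken example or a TTS rendering of the typed term.
queries = {"hello": [1.0, 0.05], "world": [0.05, 1.0]}

if __name__ == "__main__":
    print(rank_segments(queries["hello"], index))  # prints ['seg1', 'seg2', 'seg4', 'seg3']
    print(mean_average_precision(queries, index, labels))  # prints 1.0
```

Cosine similarity is used here because it ignores embedding magnitude, a frequent choice for comparing AWEs. Any distance the trained embeddings were optimized for could be substituted. Because a text query and a spoken query both end up as vectors in the same space, the same ranking code serves query-by-example search and text-based search for unseen words.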

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Chantangphol, P., Sakdejayont, T. & Chalothorn, T. Enhancing spoken term detection with deep acoustic word embeddings and cross-modal matching techniques. Int J Speech Technol 27, 875–886 (2024). https://doi.org/10.1007/s10772-024-10145-1

DOI: https://doi.org/10.1007/s10772-024-10145-1