Abstract

In this paper, we propose a new term dependence model for information retrieval, which is based on a theoretical framework using Markov random fields. We assume two types of dependencies of terms given in a query: (i) long-range dependencies that may appear for instance within a passage or a sentence in a target document, and (ii) short-range dependencies that may appear for instance within a compound word in a target document. Based on this assumption, our two-stage term dependence model captures long-range and short-range term dependencies differently when more than one compound word appears in a query. We also investigate how query structuring with term dependence can improve the performance of query expansion using a relevance model. The relevance model is constructed using the retrieval results of the structured query with term dependence to expand the query. We show that our term dependence model works well, particularly when using query structuring with compound words, through experiments using a 100-gigabyte test collection of web documents mostly written in Japanese. We also show that the performance of the relevance model can be significantly improved by using the structured query with our term dependence model.

1 Introduction

The structured query approach has been used to include more meaningful phrases in a proximity search query to improve retrieval effectiveness (Croft et al. 1991; Metzler and Croft 2005). Phrase-based queries are known to perform effectively, especially with large-scale collections such as the Web (Mishne and de Rijke 2005; Metzler and Croft 2005). This is because larger collections, while containing more information, are in general noisier, and phrase-based structured queries can filter out many of the noisy documents. These methods can capture term dependencies that appear in a query. However, they did not distinguish different types of term dependencies such as: (i) long-range dependencies that may appear for instance within a passage or a sentence in a target document, and (ii) short-range dependencies that may appear for instance within a compound word in a target document. Capturing these kinds of complex dependencies should be promising for any language; however, we believe it is especially promising for languages such as Japanese, in which individual words are frequently composed into long compound words and the formation of an endless variety of compound words is allowed.

Another technique, pseudo-relevance feedback, has been commonly used to address important notions of synonymy and polysemy in information retrieval (Buckley et al. 1994; Xu and Croft 1996; Lavrenko and Croft 2001; Zhai and Lafferty 2001). It can significantly improve ad hoc retrieval results by expanding a query, assuming that all top-ranked documents retrieved in response to the query are relevant. Although pseudo-relevance feedback generally improves effectiveness by capturing the context of query terms in documents, it can occasionally add terms to the query that are not helpful. Recently, pseudo-relevance feedback was used to estimate multinomial models that were representative of a user’s interests, within the framework of probabilistic language models (Lavrenko and Croft 2001; Zhai and Lafferty 2001). Following Lavrenko and Croft (2001), we will use the term relevance models to describe such models. The combination of phrase-based query structuring and query expansion via the relevance model is promising, because each model has its own advantages: phrase-based query structuring can capture dependencies between query terms and the relevance model can handle mismatched vocabulary.

In this paper, we use the structured query approach using word-based units to capture compound words, as well as more general phrases, in a query. Our approach is based on a theoretical framework using Markov random fields (Metzler and Croft 2005). Our work is the first attempt, to the best of our knowledge, to explicitly capture both long-range and short-range term dependencies for information retrieval. We further investigate how query structuring with term dependence can improve the performance of query expansion via a relevance model. Our experiments were performed using the 100-gigabyte web test collections that were developed in the NTCIR Workshop Web Task (Eguchi et al. 2003, 2004; Yoshioka 2005) and mostly written in Japanese. This study is also the first attempt to thoroughly examine term dependencies in the Japanese language to formulate structured queries for web information retrieval.

The rest of this paper is structured as follows. Section 2 discusses problems of various kinds of term dependencies that appear in Japanese information retrieval, and research efforts to address the problems. Section 3 introduces the retrieval model that we use and the term dependence model using Markov random fields, which gives a theoretical framework to our investigation. Section 4 describes phrase-based query structuring with our two-stage term dependence model. Section 5 describes query expansion via a relevance model. Section 6 explains the test collections we use in this paper, and our experimental results. Section 7 concludes the paper.

2 Problem statement and related research efforts for Japanese information retrieval

We assume two types of dependencies of terms in a query: (i) long-range dependencies that may appear, for instance, within a passage or a sentence in a target document, and (ii) short-range dependencies that may appear, for instance, within a compound word in a target document. We believe that this assumption is realistic in any language; however, handling compound words may be different for different languages. Some languages, such as English, favor open compound words, in which multiple words are separated by spaces. In these languages, we must detect compound word boundaries; however, once a compound word is specified, the constituents of the compound word can be tokenized simply by the spaces. Some other languages usually use closed compound words, which are expressed without explicit word separators; typical examples are German, Swedish, Danish and Finnish. In these languages, we must find the constituents of a compound word, but detecting compound words should not be hard. In some East Asian languages that use ideograms, such as Japanese and Chinese, we must both segment individual words and detect compound words.

In this paper, we develop, in Sect. 4, a two-stage term dependence model that captures long-range and short-range dependencies differently. We assume that: (i) global dependencies occur between query components that are explicitly delimited by separators in a query; and (ii) local dependencies occur between constituents within a compound word when the compound word is specified in a query component. These correspond to long-range and short-range dependencies, respectively. We experiment, in Sect. 6, using a large-scale web document collection mostly written in Japanese; however, the two-stage term dependence model may be reasonable for other languages, if compound words and their constituents can be specified in a query, as mentioned above.

2.1 Problems in processing the Japanese language for query formulation

First, we consider what language units are appropriate for a query formulation process for the Japanese language. One question is, for example, how a compound noun with prefix or suffix words, such as “ozon-sō” (ozone layer), should be represented as a query. Possible ways are as follows.

1. The compound noun should be used as it is.
2. The compound noun should be decomposed into primitive words, such as “ozon” (ozone) and “sō” (layer).
3. The suffix and prefix should be removed from the compound noun, but the dominant constituent word, such as “ozon” (ozone), should be kept.
4. In addition to the original compound noun, the dominant constituent word should also be used; in the example, both “ozon-sō” (ozone layer) and “ozon” (ozone) are used.

Another question is whether or not other compound words, not containing a prefix or suffix, should be decomposed into their constituents; for example, whether a loan word expressed in katakana characters, “ozon-hōru” (ozone hole), should be decomposed into “ozon” (ozone) and “hōru” (hole); and whether a general compound noun, “kabushiki-tōshi” (stock investment), should be decomposed into “kabushiki” (stock) and “tōshi” (investment). Note that these compound words are written without a separator in the original Japanese script, unlike the romanized forms shown above. Thus, query terms input by a user are often expressed as such compound words, in which constituent words are not delimited by a separator. Possible ways are as follows.

1. The compound word should be used as it is.
2. The compound word should be decomposed into primitive words.
3. In addition to the original compound word, the primitive constituent words should also be used.

We will discuss these issues and empirically specify appropriate language units (hereafter, compound word models) for the query structuring with two-stage term dependence in Sect. 4.1.

Furthermore, web search engines usually support query expression with a delimiter, even for users who use the Japanese language, so that a user can express multiple concepts that should reflect the user’s information needs in a query, and the search engine can then construct a complex query. Using this function, the user can input a query consisting of multiple components, each of which is expressed as a compound word or a single word, such as “ozon-sō ozon-hōru jintai” (three components of ‘ozone layer’, ‘ozone hole’ and ‘human body’ with a space delimiter between each component), to search for the effects of destruction of the ozone layer and expansion of the ozone hole on the human body. This kind of query requires more global dependence between query components, such as between “ozon-sō” (ozone layer), “ozon-hōru” (ozone hole) and “jintai” (human body), and more local dependence between constituents within each compound word, in this case “ozon” (ozone) and “sō” (layer), or “ozon” (ozone) and “hōru” (hole). Note that this local dependence is tighter than global dependence, in general.

We will discuss the issue of how to formulate the query, taking into account both global dependence and local dependence, and develop appropriate models for this purpose, mainly in Sect. 4.2.

2.2 Research efforts for Japanese information retrieval: focusing on compound words and segmentation

Japanese text retrieval must handle several types of problems specific to the Japanese language, such as compound words and segmentation (Fujii and Croft 1993). To treat these problems, word-based indexing is typically achieved by applying a morphological analyzer, and character-based indexing has also been investigated. In earlier work, Fujii and Croft compared character unigram-based indexing and word-based indexing, and found their retrieval effectiveness comparable, especially when applied to text using kanji characters (Fujii and Croft 1993; see Footnote 1). Following this work, many researchers have investigated more complex character-based indexing methods, such as using overlapping character bigrams, sometimes mixed with character unigrams, for the Japanese language as well as for Chinese or Korean. Some researchers compared this kind of character-based indexing with word-based indexing, and found little difference between them in retrieval effectiveness (Fujii and Croft 1993; Jones et al. 1998; Chen and Gey 2002; Moulinier et al. 2002). The focus of these studies was rather on how to improve efficiency while maintaining effectiveness in retrieval. Some other researchers (1) used both phrases and their constituent words as well as individual words as an index (Ogawa and Matsuda 1997; Kando et al. 1998); or (2) made use of supplemental phrase-based indexing in addition to word-based indexing (Fujita 1999; see Footnote 2), where phrase detection on targeted documents is required in advance. However, we believe these kinds of approaches are not appropriate for languages such as Japanese, in which individual words are frequently composed into long compound words and the formation of an endless variety of compound words is allowed.

Meanwhile, the structured query approach does not require phrase detection on targeted documents in advance of searching (Croft et al. 1991; Metzler and Croft 2005). A few researchers have investigated this approach to retrieval for Japanese newspaper articles (Fujii and Croft 1993; Moulinier et al. 2002); however, they emphasized formulating a query using character n-grams and showed that this approach performed comparably in retrieval effectiveness with the word-based approach. We are not aware of any studies that have used structured queries to formulate queries reflecting Japanese compound words or phrases appropriately. We also have not seen any studies that used structured queries to effectively retrieve web documents written in Japanese. In this paper, we use the structured query approach using word-based units to capture, in a query, both term dependencies within a compound word and more general term dependencies.

3 Retrieval model and query language

3.1 Retrieval model

Indri is a search engine platform that can handle large-scale document collections efficiently and effectively (Metzler and Croft 2004; Strohman et al. 2005). The retrieval model implemented in Indri combines the language modeling (Croft and Lafferty 2003) and inference network (Turtle and Croft 1991) approaches to information retrieval. This model allows structured queries similar to those used in InQuery (Turtle and Croft 1991) to be evaluated using language modeling estimates within the network. Some of the query language operators supported in Indri are shown in Table 1, where the estimate of a document with respect to a query operator is referred to as a belief. Because we focus on query formulation rather than retrieval models, we use Indri as a baseline platform for our experiments. The efficiency of Indri operators is discussed in Strohman et al. (2005). Our approach described in Sect. 4 is not limited to the platform of Indri, but can be implemented in any system where ordered and unordered phrase operators, such as those in Table 1, are workable. How indexes for ordered/unordered phrase operations can be implemented efficiently in any system is discussed in Strohman (2007). For example, most web search engines already index high-order n-grams, and our methods can be implemented with that type of index.

3.2 Term dependence model via Markov random fields

Metzler and Croft (2005) developed a general, formal framework for modeling term dependencies via Markov random fields, and showed that the model is very effective in a variety of retrieval situations using the Indri platform. This section summarizes this term dependence model. Markov random fields (MRFs), also called undirected graphical models, are commonly used in statistical machine learning to model joint distributions succinctly. In Metzler and Croft (2005), the joint distribution \(P_{\Lambda}(Q, D)\) over queries Q and documents D, parameterized by \(\Lambda\), was modeled using MRFs, and for ranking purposes the posterior \(P_{\Lambda}(D|Q)\) was derived by the following ranking function, assuming a graph G that consists of a document node and query term nodes:

\[P_{\Lambda}(D|Q) \stackrel{rank}{=} \sum_{c \in C(G)} \lambda_c f(c) \qquad (1)\]

where \(Q=t_1, {\ldots}, t_n,\) C(G) is the set of cliques in an MRF graph G, f(c) is some real-valued feature function over clique values, and \(\lambda_c\) is the weight given to that particular feature function. The sign ‘\(\stackrel{rank}{=}\)’ indicates that the ranking of documents according to the left-hand side is equivalent to that according to the right-hand side.

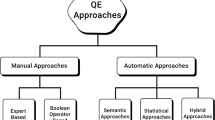

Full independence (fi; see Footnote 3), sequential dependence (sd), and full dependence (fd) are assumed as three variants of the MRF model. Figure 1 shows a graphical model representation of each. The full-independence variant makes the assumption that query terms are independent of each other. The sequential dependence variant assumes dependence between query terms that appear contiguously, while the full-dependence variant assumes that all query terms are in some way dependent on each other. To express these assumptions, the following specific ranking function was derived:

\[P_{\Lambda}(D|Q) \stackrel{rank}{=} \sum_{c \in T} \lambda_T f_T(c) + \sum_{c \in O} \lambda_O f_O(c) + \sum_{c \in O \cup U} \lambda_U f_U(c) \qquad (2)\]

where T is defined as the set of 2-cliques involving a query term and a document D, O is the set of cliques containing the document node and two or more query terms that appear contiguously within the query, and U is the set of cliques containing the document node and two or more query terms appearing noncontiguously within the query. Here, the constraint \(\lambda_T + \lambda_O + \lambda_U = 1\) can be imposed.

Fig. 1 Example Markov random field model for three query terms under various dependence assumptions: (left) full independence, (middle) sequential dependence, and (right) full dependence (Metzler and Croft 2005)

Table 2 provides a summary of the feature functions and Indri query language expressions proposed in Metzler and Croft (2005). In this table, \({{\tt{\#1(\cdot)}}}\) indicates exact phrase expressions. \({{\tt{\#uw}}N(\cdot)}\) is described in Table 1. Let us take an example query that consists of three words: “term dependence models”. The following are Indri query expressions for this example according to the sd and fd models, respectively, each of which is formulated in the form of “\({\tt{\#weight}} \ (\lambda_T\, {\tt{\#combine}}(\cdots f_{T}^{i} \cdots) \quad \lambda_O\, {\tt{\#combine}}(\cdots f_{O}^{i} \cdots) \quad \lambda_U\, {\tt{\#combine}}(\cdots f_{U}^{i} \cdots))\)”:

where \({{\tt{\#uw}}}N_{\ell}(\cdot)\) indicates phrase expressions in which the specified terms appear unordered within a window of \(N_{\ell}\) terms, and \(N_{\ell}\) is given by \((N_1 \times \ell)\) when \(\ell\) terms appear in the window. The window-size parameter \(N_1\) is determined empirically.
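As an illustration of how such expressions can be assembled, the following minimal Python sketch builds an Indri query string for the sd and fd variants from a list of query terms. It is a sketch under stated assumptions, not the formulation used in the experiments: the function name `build_mrf_query`, the default weights (0.8, 0.1, 0.1) and the default window parameter `n1=4` are hypothetical placeholders. Ordered features use exact phrases for contiguous term sets, and unordered features use windows of size \(N_1 \times \ell\) for every dependent term set.

```python
from itertools import combinations


def build_mrf_query(terms, variant, weights=(0.8, 0.1, 0.1), n1=4):
    """Return an Indri query string for the sd or fd variant.

    weights = (lambda_T, lambda_O, lambda_U). The default weights and n1
    are illustrative placeholders, not values taken from the paper.
    """
    lam_t, lam_o, lam_u = weights
    n = len(terms)
    if n < 2:
        # A single term has no dependencies to model.
        return f"#combine({' '.join(terms)})"
    if variant == "sd":
        # Sequential dependence: only adjacent term pairs are dependent.
        index_sets = [(i, i + 1) for i in range(n - 1)]
    elif variant == "fd":
        # Full dependence: every subset of two or more terms is dependent.
        index_sets = [c for size in range(2, n + 1)
                      for c in combinations(range(n), size)]
    else:
        raise ValueError(f"unknown variant: {variant}")

    ordered, unordered = [], []
    for idx in index_sets:
        phrase = " ".join(terms[i] for i in idx)
        # Contiguous term sets get an exact-phrase (ordered) feature.
        if idx[-1] - idx[0] == len(idx) - 1:
            ordered.append(f"#1({phrase})")
        # Every dependent term set gets an unordered-window feature.
        unordered.append(f"#uw{n1 * len(idx)}({phrase})")

    return (f"#weight({lam_t} #combine({' '.join(terms)}) "
            f"{lam_o} #combine({' '.join(ordered)}) "
            f"{lam_u} #combine({' '.join(unordered)}))")
```

Calling `build_mrf_query(["term", "dependence", "models"], "sd")` yields a query with two exact phrases and two unordered windows, while the `"fd"` variant additionally covers the noncontiguous pair and the full three-term set.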

4 Query structuring with two-stage term dependence

In order to process the Japanese language or some other East Asian languages that use ideograms, we must know what language units are appropriate for phrase-based query structuring. We will discuss this issue in Sect. 4.1. We will also discuss, in Sect. 4.2, the issue of how to formulate a query, taking into account both the global dependence between query components that are separated by delimiters, and the local dependence between constituents of a compound word when the compound word is specified in a query component.

4.1 Compound word models

Compound words containing prefix/suffix words may simply be treated in the same way as single words; alternatively, in addition to these, the constituent words qualified by the prefix/suffix words may also be used as query components. In either case, the prefix/suffix words themselves should not be used as independent query components, because each prefix/suffix word usually expresses a specific concept only when concatenated with the following or preceding word. Other compound words, which do not contain prefix/suffix words, may be used together with their constituent words as query components, because both the compound words and, often, their constituent words convey specific meanings by themselves.

We define the following models, sometimes distinguishing compound words containing the prefix/suffix words from other compound words.

1. dcmp1: Decomposes all compound words.
   - e.g., “ozon-sō” (in English, ‘ozone layer’) is decomposed into “ozon” and “sō”.
2. dcmp2: Decomposes all compound words and removes the prefix/suffix words.
   - e.g., “ozon-sō”, in which “sō” is a suffix noun, is converted into “ozon”.
3. cmp1: Composes each compound word as an exact phrase.
   - e.g., “ozon-sō” is used as an exact phrase (“\({\tt{\#1}}(ozon\ s\bar{o})\)” in the Indri query language, as shown in Table 1).
4. cmp2: Composes each compound word that contains prefix/suffix words as an exact phrase, and each other compound word as an ordered phrase with at most one term between each constituent word.
   - e.g., “ozon-sō”, which contains a suffix word, and “ozon-hōru” (in English, ‘ozone hole’), which does not contain prefix/suffix words, are expressed as phrases in different manners. In the Indri query language, the former is expressed as “\({\tt{\#1}}(ozon\ s\bar{o})\)”, the same as in cmp1; and the latter is expressed as “\({\tt{\#od}}2(ozon\ h\bar{o}ru)\)”.
5. pfx1: Composes each of the compound words containing prefix/suffix words as an exact phrase, and decomposes other compound words.
   - e.g., “ozon-sō” is used as an exact phrase, the same as in cmp1; on the other hand, “ozon-hōru” is decomposed into “ozon” and “hōru”.
6. pfx2: Composes each overlapping word-based bigram of the constituent words of the compound words containing prefix/suffix words as an exact phrase, and decomposes other compound words.
   - e.g., “dai-kyū-jō” (in English, ‘article nine’, such as of the Constitution), in which “dai” and “jō” are prefix and suffix words, respectively, is decomposed into a pair of exact phrases, “dai-kyū” and “kyū-jō” (“\({\tt{\#1}}(dai\ ky\bar{u})\)” and “\({\tt{\#1}}(ky\bar{u}\ j\bar{o})\)”, respectively, in the Indri query language).
7. pfx3: Linearly combines pfx1, pfx2 and dcmp2.

The combined model pfx3 was defined to investigate how each of the three component models contributes to retrieval effectiveness by changing weights for the component models. We discuss the details in Sect. 6.3.1.

We mainly assume pfx1 as the basic technique for expressing Japanese compound words in the rest of the paper, because we found some empirical evidence through experiments to support its use, as we describe in Sect. 6.3.1. More general term dependence models that we describe in Sect. 4.2 are grounded, in part, in the idea of the pfx1 compound word model.
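The compound word models defined above can be sketched as a small function that maps one query component to a list of Indri expressions. This is only an illustrative sketch: the function name `express_compound`, the `(word, kind)` input representation and the kind labels `"prefix"`, `"suffix"` and `"word"` are hypothetical, and pfx3 is omitted because its combination weights are set experimentally.

```python
AFFIX_KINDS = {"prefix", "suffix"}  # hypothetical labels for affix words


def express_compound(words, model):
    """Map one query component to Indri expressions under a given model.

    words is a list of (surface, kind) pairs, where kind is "prefix",
    "suffix" or "word"; this representation is an assumption made here.
    """
    surfaces = [w for w, _ in words]
    if len(words) == 1:
        # A single word is used as it is under every model.
        return surfaces
    has_affix = any(kind in AFFIX_KINDS for _, kind in words)
    phrase = " ".join(surfaces)
    if model == "dcmp1":
        return surfaces
    if model == "dcmp2":
        return [w for w, kind in words if kind not in AFFIX_KINDS]
    if model == "cmp1":
        return [f"#1({phrase})"]
    if model == "cmp2":
        return [f"#1({phrase})"] if has_affix else [f"#od2({phrase})"]
    if model == "pfx1":
        return [f"#1({phrase})"] if has_affix else surfaces
    if model == "pfx2":
        if not has_affix:
            return surfaces
        # Overlapping word bigrams, each as an exact phrase.
        return [f"#1({a} {b})" for a, b in zip(surfaces, surfaces[1:])]
    raise ValueError(f"unknown model: {model}")
```

For example, passing `[("ozon", "word"), ("sō", "suffix")]` with `"pfx1"` gives a single exact phrase, whereas `[("ozon", "word"), ("hōru", "word")]` with the same model is decomposed into its two constituent words.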

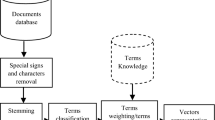

4.2 Two-stage term dependence model

In compound words, which appear often in Japanese, for instance, the dependencies between constituent words are tighter than more general term dependencies. Therefore, we consider that these term dependencies should be treated as global between the query components that make up a whole query, and as local within a compound word when the compound word appears in a query component. Metzler and Croft’s term dependence model, which we summarized in Sect. 3.2, gives a theoretical framework for this study, but must be enhanced when we consider more complex dependencies as mentioned above. We propose a two-stage term dependence model that captures term dependencies both between query components in a query and between constituents within a compound word. To achieve this model, we extend the term dependence model given in Eq. 2, on the basis of Eq. 1, as follows:

where

Here, Q consists of query components \(q_1 \ldots q_k \ldots q_m\), and each query component consists of individual terms \(t_1 \ldots t_n\). T(Q), O(Q) and U(Q) express the clique sets with (global) dependence between the query components that make up a whole query. T(Q) is defined as the set of 2-cliques involving a query component and a document D, O(Q) is the set of cliques containing the document node and two or more query components that appear contiguously within the whole query, and U(Q) is the set of cliques containing the document node and two or more query components appearing noncontiguously within the whole query. Moreover, \(T(q_k)\), \(O(q_k)\) and \(U(q_k)\) express the clique sets with (local) dependence between the individual terms that make up a query component \(q_k\), and can be defined similarly to T(Q), O(Q) and U(Q). We then define the feature functions \(f^{*}_{T}\), \(f^{*}_{O}\) and \(f^{*}_{U}\) so that some treatments of Japanese compound words are reflected, as we will describe later in this section, and so that the global dependence between query components is reflected as well. Hereafter, we assume that the constraint \(\lambda_T + \lambda_O + \lambda_U = 1\) is imposed independently of the query. When Q consists of two or more query components, each of which consists of a single word, Eq. 3 is equivalent to Eq. 2. The model given by Eq. 2 can be referred to as the single-stage term dependence model. When we ignore the dependencies between query components in \(f^{*}_{O}\) and \(f^{*}_{U}\), Eq. 3 represents dependencies only between constituent terms within each query component, which can be referred to as the local term dependence model; otherwise, Eq. 3 expresses the two-stage term dependence model.

According to Eq. 3, we assumed the following instances, considering special features of the Japanese language.

4.2.1 Two-stage term dependence model

1. glsd+ expresses the dependencies on the basis of the sequential dependence (see Sect. 3.2) both between query components and between constituent terms within a query component, assuming dependence between neighboring elements. The beliefs for the resulting feature terms/phrases for each of \(f^{*}_{T}\), \(f^{*}_{O}\) and \(f^{*}_{U}\) are combined as in Eq. 3. A graphical model representation of this model is shown in the middle of Fig. 2.
2. glfd+ expresses the dependencies between query components on the basis of the full dependence (see Sect. 3.2), assuming all the query components are in some way dependent on each other. It expresses the dependencies between constituent terms within a query component on the basis of the sequential dependence, even in this model. A graphical model representation of this model is shown in the right of Fig. 2.

Here, in \(f^{*}_{T}\), \(f^{*}_{O}\) and \(f^{*}_{U}\), each compound word containing prefix/suffix words is represented as an exact phrase and treated the same as the other words, on the basis of the empirical results reported in Sect. 6.3.1. Moreover, in \(f^{*}_{O}\), each general compound word (not containing prefix/suffix words) is expressed as an ordered phrase with at most one term between each constituent word. Let us take an example from the NTCIR-3 WEB topic set (Eguchi et al. 2003), which is written in Japanese. The title field of Topic 0015, as shown in Fig. 3, was described as three query components, “ozon-sō, ozon-hōru, jintai” (which mean ‘ozone layer’, ‘ozone hole’ and ‘human body’). A morphological analyzer converted this to “ozon” (‘ozone’ as a general noun) and “sō” (‘layer’ as a suffix noun), “ozon” (‘ozone’ as a general noun) and “hōru” (‘hole’ as a general noun), and “jintai” (‘human body’ as a general noun). The following are Indri query expressions for this example according to the glsd+ and glfd+ models, respectively, in the form used in Sect. 3.2.

4.2.2 Local term dependence models

3. lsd+ indicates the model obtained by ignoring the dependencies between query components in glsd+. A graphical model representation of this model is shown in the left of Fig. 2.
4. lfd+ indicates the model obtained by ignoring the dependencies between query components and applying the fd model to constituent terms within each query component.

In these models, each compound word containing prefix/suffix words is represented as an exact phrase and treated the same as the other words, in the same manner as in glsd+ and glfd+. The following is an example of an Indri query expression according to lsd+ on Topic 0015.

Our two-stage term dependence models are based on the framework of the Markov random field model in the following sense. When we suppose an MRF graph consisting of a document as a root node and all decomposed query terms as leaf nodes, ignoring query components, the set of all cliques that contain the document node and multiple query terms that appear contiguously can be enumerated, and then the following limitations can be applied to the clique set. We assume that (1) individual word-to-word dependencies across different query components (e.g., dependence between “hōru” and “jintai” in the case of Topic 0015) are disregarded; however, (2) word-to-word dependencies within each query component (e.g., dependence between “ozon” and “sō” or between “ozon” and “hōru”) and (3) component-to-component dependencies (e.g., dependence among all elements of two or more query components, for instance, among “ozon”, “hōru” and “jintai”) are kept, and the corresponding features are considered as in Eq. 3. When Metzler and Croft’s single-stage term dependence model, which was reviewed in Sect. 3.2, is simply applied to all decomposed query terms in a whole query, not only are the meaningless dependencies mentioned in (1) involved, but the number of combinations of the query terms (i.e., the number of cliques) also increases exponentially. Our two-stage term dependence models correspond to considering both (2) and (3), and our local term dependence models correspond to considering only (2); both are expected to improve retrieval effectiveness with reasonable efficiency. In Sect. 6.3.2, we investigate the effects of the models defined in this section.
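To make the efficiency argument concrete, the following Python sketch counts the multi-element dependency sets for the three query components of Topic 0015. It is a simple counting illustration under our own assumptions rather than part of the model definition: the function name `clique_counts` and the dictionary keys are hypothetical, the flat case assumes full dependence over all decomposed terms, and the local case assumes sequential dependence within each component.

```python
from itertools import combinations


def multi_element_subsets(n):
    """Count subsets of n elements that contain two or more elements."""
    return sum(1 for size in range(2, n + 1)
               for _ in combinations(range(n), size))


def clique_counts(components):
    """Count dependency sets (beyond single elements) under each scheme."""
    n_terms = sum(len(c) for c in components)
    return {
        # Full dependence over all decomposed terms, ignoring components.
        "flat_fd": multi_element_subsets(n_terms),
        # Full dependence between components (global stage of glfd+).
        "global_fd": multi_element_subsets(len(components)),
        # Sequential dependence between components (global stage of glsd+).
        "global_sd": max(len(components) - 1, 0),
        # Sequential dependence within each component (local stage).
        "local_sd": sum(len(c) - 1 for c in components),
    }


topic_0015 = [["ozon", "sō"], ["ozon", "hōru"], ["jintai"]]
counts = clique_counts(topic_0015)
```

For this topic, the flat full-dependence case yields 26 sets over five terms, whereas the global and local stages together yield 4 + 2 = 6 sets when full dependence is assumed between components, and 2 + 2 = 4 sets when sequential dependence is assumed between components.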

5 Query expansion via relevance models

Lavrenko and Croft (2001) formulated relevance models that explicitly incorporated relevance into the language modeling. Metzler et al. (2004) modified the relevance models as a pseudo-relevance feedback function in the framework of inference network-based retrieval models. In this paper, we follow this method of pseudo-relevance feedback, as briefly described below.

Given an initial query \(Q_{st}\), we retrieve a set of \(\#docs_{fb}\) documents and form a relevance model from them. We then form \(Q_{rm}\) by wrapping the \({{\tt{\#combine}}}\) operator of Indri around the most likely \(\#terms_{fb}\) terms from the relevance model that are not stopwords. Finally, an expanded query is formed that has the following form:

where \({{\tt{\#weight}}}\) indicates an Indri operator as described in Table 1. The parameter \(\nu\) controls the balance between the original query \(Q_{st}\) and the expanded query \(Q_{rm}\). In this paper, we formulate \(Q_{st}\) using the two-stage term dependence models, instead of using the term independence model, and reformulate \(Q_{new}\) using the relevance model-based pseudo-relevance feedback as above.
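The expansion step can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the exact formulation: the function name `expand_query` and its parameters are hypothetical, the relevance model is taken as a precomputed mapping from terms to probabilities rather than estimated from retrieved documents, and the two weights are assumed to be \(\nu\) and \(1 - \nu\).

```python
def expand_query(q_st, relevance_model, nu, n_terms, stopwords=frozenset()):
    """Combine a structured query with relevance-model expansion terms.

    relevance_model maps each term to its probability under the relevance
    model (assumed precomputed). The weights nu and 1 - nu are an
    assumption made for this sketch.
    """
    # Rank candidate terms by probability, most likely first.
    ranked = sorted(relevance_model.items(), key=lambda kv: kv[1], reverse=True)
    # Keep the n_terms most likely terms that are not stopwords.
    chosen = [term for term, _ in ranked if term not in stopwords][:n_terms]
    q_rm = f"#combine({' '.join(chosen)})"
    return f"#weight({nu:.2f} {q_st} {1.0 - nu:.2f} {q_rm})"
```

Here `n_terms` plays the role of \(\#terms_{fb}\), and `q_st` can be any structured query string, for instance one produced by a two-stage term dependence model.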

6 Experiments

An overview of the test collections we use is given in Sect. 6.1. Our experimental setup is described in Sect. 6.2. Using the NTCIR-3 WEB test collection as a training data set, we investigated the effects of the compound word models and the two-stage term dependence model, and attempted to optimize the parameters in these models using the training data set, as described in Sects. 6.3.1 and 6.3.2, respectively. Using the NTCIR-5 WEB Task data, we performed the experiments with the two-stage term dependence model for testing, as described in Sect. 6.3.3. Moreover, we experimented using pseudo-relevance feedback with the two-stage term dependence model over the training and testing data sets, as described in Sects. 6.4.1 and 6.4.2, respectively.

6.1 Data

We used a 100-gigabyte web document collection for experiments. The document collection consisted of web documents gathered from the .jp domain, which were thus mostly written in Japanese. This document collection, ‘NW100G-01’, was the same as that used for the NTCIR-3 Web Retrieval Task (‘NTCIR-3 WEB’) (Eguchi et al. 2003), for the NTCIR-4 Web Task (‘NTCIR-4 WEB’) (Eguchi et al. 2004), and for the NTCIR-5 Web Task (‘NTCIR-5 WEB’; see Footnote 4) (Yoshioka 2005).

We used the topics and the relevance judgment data of the NTCIR-3 WEB for training the system parameters (see Footnote 5). We used the topics and the relevance judgment data that were used in NTCIR-5 WEB (see Footnote 6) for testing. All the topics were written in Japanese. The numbers of topics can be seen in Table 3. A topic example can be seen in Fig. 3. A summary of the document collection is shown in Table 3. In the NW100G-01 collection, the proportion of the estimated number of pages in each language (Eguchi et al. 2003) is shown in Table 4. The title field of each topic gives 1–3 query components that are suggested by the topic creator to be similar to the query terms used in real Web search engines. This definition of the title is different from the one used by the TREC Web Track (Craswell and Hawking 2003) or the TREC Terabyte Track (Clarke et al. 2004) in the following ways: (i) the terms in the title field are listed in their order of importance for searching, and they are delimited by commas; (ii) each of these terms is supposed to indicate a certain concept, and so it sometimes consists of a single word, but it may also consist of a compound word; and (iii) the title field has an attribute (i.e., ‘CASE’ and ‘RELAT’) that indicates the kind of search strategies and can optionally be used as a Boolean-type operator (Eguchi et al. 2004). These were designed to prevent as far as possible retrieval effectiveness evaluation from being influenced by other effects, such as the performance of Japanese word segmentation, but also to reflect as far as possible the reality of user input queries for current Web search engines. In this paper, we only used the title fields of the topics. We did not use any query structure information provided as the attributes in the title field, as we thought that users of current search engines tend not to use Boolean-type operators, even if a search engine supports them.

6.2 Experimental setup

We used the texts that were extracted from and bundled with the NW100G-01 document collection. In these texts, all the HTML tags, comments, and explicitly declared scripts had been removed. We segmented each document into words using the morphological analyzer ‘MeCab version 0.81’ (Footnote 7). We did not use the part-of-speech (POS) tagging function of the morphological analyzer for the documents, because it requires more time (Footnote 8). On completion of the morphological analysis, all Japanese words were separated by spaces. We used Indri to build an index of the web documents in the NW100G-01 document collection from the segmented texts described above. We only used one-byte symbol characters as stopwords in the indexing phase, to enable querying even by phrases consisting of high-frequency words, similar to “To be or not to be” in English, and to understand the effectiveness of the phrase-based query structuring described in Sect. 4.2. Instead, in the querying phase, we applied several types of stopwords only to the term feature f T , and not to the ordered/unordered phrase features f O or f U , in Eqs. 2 and 3. For the types of stopwords we used, see Eguchi (2005).

In the experiments described in this paper, we only used the title fields of the topics, as described in Sect. 6.1. We performed morphological analysis using the MeCab tool described at the beginning of this section to segment each of the query components delimited by commas within each title field, and to add POS tags to the segmented words. Here, the POS tags (Footnote 9) are used to identify prefix and suffix words that appear in a query because, in the query structuring process, we make a distinction between compound words containing prefix/suffix words and other compound words, as described in Sects. 4.1 and 4.2. Note that we only used the POS information to determine the type of a compound word, that is, whether it contains prefix or suffix words; once the type was determined, we did not use the POS information itself for querying.

6.3 Experiments on two-stage term dependence model

6.3.1 Effects of compound word models

We investigated the effects of compounding or decomposing the query terms that were identified as constituent words of a compound word by the morphological analyzer, using the models described in Sect. 4.1, to empirically determine appropriate language units for the phrase-based query structuring that we discussed in Sect. 4.2. The experimental results using the NTCIR-3 WEB topic set are shown in Table 5. In this table, ‘AvgPrec a ’ indicates the mean average precision over all 47 topics, and ‘AvgPrec c ’ indicates the mean average precision over the 23 topics that include compound words in the title field. ‘%chg’ is the difference between the performance of a target method and that of a baseline method, divided by the baseline performance and expressed as a percentage. As the baseline, we used dcmp1, the result of retrieval by decomposing all compound words. In the experiments in this section we did not use stopword lists, for simplicity.
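The evaluation measures used throughout the tables can be sketched as follows. This is a minimal illustration of ‘%chg’ and of mean average precision over a topic subset; the function names, topic identifiers and per-topic values are hypothetical and are not taken from our experimental data.

```python
from statistics import mean

def pct_change(target: float, baseline: float) -> float:
    """Relative difference of target over baseline, in percent ('%chg')."""
    return (target - baseline) / baseline * 100.0

def mean_average_precision(ap_by_topic: dict, topics=None) -> float:
    """Mean of per-topic average precision, optionally over a topic subset."""
    selected = ap_by_topic.keys() if topics is None else topics
    return mean(ap_by_topic[t] for t in selected)

# Hypothetical per-topic average precision values, for illustration only.
baseline_ap = {"T1": 0.20, "T2": 0.10, "T3": 0.30}
target_ap = {"T1": 0.25, "T2": 0.10, "T3": 0.40}
compound_topics = ["T1", "T3"]  # topics whose title contains a compound word

# Counterpart of '%chg' on 'AvgPrec a' (all topics).
chg_all = pct_change(mean_average_precision(target_ap),
                     mean_average_precision(baseline_ap))
# Counterpart of '%chg' on 'AvgPrec c' (compound-word topics only).
chg_compound = pct_change(mean_average_precision(target_ap, compound_topics),
                          mean_average_precision(baseline_ap, compound_topics))
```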

The results for cmp1 show that the naive phrase search using compound words did not work well. Comparing pfx1 with cmp1 or cmp2, we can see that decomposing general compound words that do not contain prefix/suffix words worked better than leaving them intact. Furthermore, comparing pfx1 with dcmp1, we can see that using compound words containing prefix/suffix words as exact phrases worked better than decomposing them. Therefore, compounding the prefix/suffix words and decomposing other compound words, as in pfx1 or pfx2, works well. As for pfx3, we linearly combined pfx1, pfx2 and dcmp2 with weights, on the basis of Eq. 1, and optimized the weight for each of these features, changing each weight from 0 to 1 in steps of 0.1. In Table 5, we show the results using the optimized weights for the features of pfx1, pfx2 and dcmp2, (λ pfx1, λ pfx2, λ dcmp2) = (0.7, 0.3, 0.0), which maximized the mean average precision. From the fact that the weight for the feature dcmp2 was optimized to 0, and from the poor performance of dcmp2 alone shown in Table 5, it is apparent that the constituent words qualified by the prefix/suffix words contributed very little to retrieval effectiveness by themselves, without the prefix/suffix words. Moreover, the above model combining pfx1 and pfx2 did not improve retrieval effectiveness compared with pfx1 or pfx2 alone, in spite of the added complexity of the query. Based on these considerations and the fact that the performance of pfx1 and pfx2 was almost the same, we used the simpler pfx1 compound word model as the basic way of expressing Japanese compound words, and extended this idea to the query structuring using the more general term dependence models that we discussed in Sect. 4.2.
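The weight optimization described above can be sketched as an exhaustive grid search. The sketch assumes that the weights are constrained to sum to 1 (the reported optimum does, but this constraint is our assumption here), and `toy_map` is a hypothetical stand-in for running retrieval and computing the mean average precision.

```python
from itertools import product

def simplex_grid(n_weights: int, steps: int = 10):
    """Yield weight tuples on a 1/steps grid whose components sum to 1."""
    # Integer counts avoid floating-point drift when checking the sum.
    for counts in product(range(steps + 1), repeat=n_weights):
        if sum(counts) == steps:
            yield tuple(c / steps for c in counts)

def best_weights(evaluate, n_weights: int = 3, steps: int = 10):
    """Return (weights, score) maximizing evaluate(weights) over the grid."""
    scored = ((w, evaluate(w)) for w in simplex_grid(n_weights, steps))
    return max(scored, key=lambda pair: pair[1])

# Hypothetical scoring function; a real run would issue the weighted queries
# against the index and evaluate them with the relevance judgments.
def toy_map(weights):
    l_pfx1, l_pfx2, l_dcmp2 = weights
    return 0.20 * l_pfx1 + 0.19 * l_pfx2 + 0.05 * l_dcmp2

weights, score = best_weights(toy_map)
```

With three weights and a step of 0.1, the grid contains 66 candidate settings, so exhaustive evaluation remains cheap compared with the retrieval runs themselves.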

6.3.2 Experiments on two-stage term dependence model for training

We investigated the effects of query structuring using the local term dependence and the two-stage term dependence that we described in Sect. 4.2. These approaches are grounded in the empirical evidence through the experiments shown in Sect. 6.3.1, as well as in the theoretical framework explained in Sect. 3.2. Using the NTCIR-3 WEB test collection, we optimized each of the models, defined in Sect. 4.2, changing each weight of λ T , λ O and λ U from 0 to 1 in steps of 0.1, and changing the window size N 1 for the unordered phrase feature as 2, 4, 8, 50 or ∞ times the number of words specified in the phrase expression. Additionally, we used (λ T , λ O , λ U ) = (0.9, 0.05, 0.05) for each N 1 value above. The results of the optimization that maximized the mean average precision over all 47 topics (‘AvgPrec a ’) are shown in Table 6. This table includes the mean average precision over 23 topics that contain compound words in the title field as ‘AvgPrec c ’. ‘%chg’ was calculated on the basis of fi, the result of retrieval with the term independence model. After optimization, the glsd + model worked best when (λ T , λ O , λ U , N) = (0.9, 0.05, 0.05, ∞), while the glfd + model worked best when (λ T , λ O , λ U , N) = (0.9, 0.05, 0.05, 50). In the experiments in this section, stopword removal was only applied to the term feature f T , not to the phrase features f O or f U . The impact of stopwords on our models is discussed in Eguchi (2005).

For comparison, we naively applied Metzler and Croft’s single-stage term dependence model, using either the sequential dependence or the full dependence variants defined in Sect. 3.2, to decomposed words within each of the query components delimited by commas in the title field of a topic, and combined the beliefs about the resulting structure expressions using the \({{\tt{\#combine}}}\) operator shown in Table 1 (Footnote 10). We show the results of these as naive-lsd and naive-lfd, respectively, in Table 6. These are different from our local term dependence models, lsd + and lfd +, which are based on the empirical results reported in Sect. 6.3.1 and involve special treatment of Japanese compound words. The results in Table 6 suggest that Metzler and Croft’s model must be enhanced to handle the more complex dependencies that appear in Japanese queries. For reference, we also show the best results from NTCIR-3 WEB participation (Eguchi et al. 2003), as ntcir-3, at the bottom of Table 6. This shows that even our baseline system worked better than the ntcir-3 results.
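To make the naive-lsd baseline concrete, the following sketch builds an Indri-style query string by applying the sequential dependence variant inside each query component and joining the components with #combine. It uses the Indri operators #weight, #combine, #1 and #uwN; the function names, the placeholder tokens `w1` to `w4`, the default weights and the window multiplier are illustrative assumptions, not the exact expressions used in our runs.

```python
def sequential_dependence(words, lam=(0.9, 0.05, 0.05), window_mult=4):
    """Indri expression for one query component under sequential dependence."""
    if len(words) == 1:
        return words[0]  # a single word has no dependence features
    lam_t, lam_o, lam_u = lam
    pairs = [words[i:i + 2] for i in range(len(words) - 1)]  # adjacent words
    terms = " ".join(words)
    ordered = " ".join(f"#1({a} {b})" for a, b in pairs)
    # The window size is a multiple of the number of words in the phrase (2).
    unordered = " ".join(f"#uw{window_mult * 2}({a} {b})" for a, b in pairs)
    return (f"#weight( {lam_t} #combine({terms}) "
            f"{lam_o} #combine({ordered}) "
            f"{lam_u} #combine({unordered}) )")

def naive_lsd(components, **kwargs):
    """Combine the per-component expressions with #combine."""
    parts = " ".join(sequential_dependence(c, **kwargs) for c in components)
    return f"#combine( {parts} )"

# Two comma-delimited components: a three-word compound and a single word.
query = naive_lsd([["w1", "w2", "w3"], ["w4"]])
```

Because dependence features are only generated inside a component, the number of phrase expressions grows with the length of each component rather than with the length of the whole query.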

Our two-stage term dependence and local term dependence models worked well especially for queries that contain various compound words, such as Topic 0060: “sekai-ju”, “hoku ō shinwa” and “namae” (in English, ‘the World Tree’, ‘Norse mythology’ and ‘name’). These three query components are expressed using a compound word having a suffix word “ju”, another compound word having no prefix/suffix words, and a general noun, respectively. The way structured queries are formulated in this case is very similar to the example shown in Sect. 4.2. For this topic, the average precision values of the fi, lsd + and glsd + models were 0.2162, 0.5164 and 0.5503, respectively, so our local term dependence model lsd + worked well and our two-stage term dependence model glsd + worked best. Our two-stage term dependence models also worked for some, but not all, of the queries that do not contain compound words, such as Topic 0019: “ume”, “meisho” and “Tokyo” (in English, ‘plum tree’, ‘place of interest’ and ‘Tokyo’), where no compound words appear in the Japanese query. In such a case, the local term dependence models behave the same as the full independence model fi; the average precision of both the fi and lsd + models was 0.1202, and that of glsd + was 0.1318. The difference in performance between glsd + and glfd + depends on the topic.

6.3.3 Experiments on two-stage term dependence model for testing

For testing, we used the models optimized in Sect. 6.3.2. For evaluation, we used the relevance judgment data provided by the organizers of the NTCIR-5 WEB task. The results are shown in Table 7. In this table, ‘AvgPrec a ’, ‘AvgPrec c ’ and ‘AvgPrec o ’ indicate the mean average precision over all 35 topics, over the 22 topics that include compound words in the title field, and over the 13 topics that do not include compound words, respectively. ‘%chg’ was calculated on the basis of the result of retrieval with the term independence model (fi).

The results show that our two-stage term dependence models, especially the glfd + model, gave 13% better performance than the baseline (fi), which did not assume term dependence, and also better than the local term dependence models, lsd + and lfd +, which only assumed local dependence within a compound word. The advantage of glfd + over fi, lsd + and lfd + was statistically significant at the two-sided 5% level, where the Wilcoxon signed-rank test was used, in average precision over all the topics. The results of ‘AvgPrec c ’ and ‘AvgPrec o ’ imply that our models work more effectively for queries expressed in compound words.
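For readers who wish to reproduce this kind of paired comparison, the following is a minimal sketch of a two-sided Wilcoxon signed-rank test on per-topic average precision, using the normal approximation. It is a simplified illustration rather than the exact procedure behind the reported result: zero differences are dropped, no tie or continuity correction is applied to the variance, and the per-topic values are hypothetical. The approximation is better suited to samples of the size used here (35 topics) than to the six toy pairs shown.

```python
import math

def wilcoxon_signed_rank(x, y):
    """Two-sided Wilcoxon signed-rank test via the normal approximation.

    Returns (w_plus, p_value), where w_plus is the sum of the ranks of the
    positive differences x[i] - y[i].
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]  # drop zero differences
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        # Extend j over a run of tied absolute differences.
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # average of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean_w = n * (n + 1) / 4
    sd_w = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w_plus - mean_w) / sd_w
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return w_plus, p_value

# Hypothetical per-topic average precision for two systems.
glfd_ap = [0.30, 0.25, 0.40, 0.10, 0.55, 0.20]
fi_ap = [0.20, 0.27, 0.25, 0.06, 0.30, 0.15]
w_plus, p = wilcoxon_signed_rank(glfd_ap, fi_ap)
```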

6.4 Experiments on pseudo-relevance feedback with two-stage term dependence model

6.4.1 Experiments on pseudo-relevance feedback for training

We carried out preliminary experiments with a combination of phrase-based query structuring and pseudo-relevance feedback, using the NTCIR-3 WEB topic set, to investigate how this combination works. Pseudo-relevance feedback was implemented in Indri, based on Lavrenko’s relevance models (Lavrenko and Croft 2001), as described in Sect. 5. The results are shown in Fig. 4. In this graph, the horizontal axis indicates the number of feedback terms (#terms fb ), and the vertical axis shows the percentage increase in mean average precision compared to the mean average precision without phrase-based query structuring or pseudo-relevance feedback. For comparison, we naively applied the single-stage term dependence model in the same manner as naive-lsd in Sect. 6.3.2 (Footnote 11).

The explanatory note indicates which results used the naive applications of the single-stage term dependence model, naive-lsd, and the two-stage term dependence models, glfd + and glsd +. For baseline comparison, we also performed experiments with pseudo-relevance feedback using the term independence model (fi), which assumes that query terms are independent of each other. Here, the parameters were set as (λ T , λ O , λ U , N) = (0.9, 0.05, 0.05, 50) for the glfd + model, and (λ T , λ O , λ U , N) = (0.9, 0.05, 0.05, ∞) for the glsd + model, each of which maximized the mean average precision when pseudo-relevance feedback was not applied. The original query weight for pseudo-relevance feedback and the number of feedback documents were set as ν = 0.7 and #docs fb = 10. Figure 4 suggests that Metzler and Croft’s term dependence model should be enhanced to handle the more complex dependencies that appear in queries with compound words.
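The roles of ν, #docs fb and #terms fb can be illustrated with a simplified sketch of relevance-model expansion. Each candidate term is scored by summing P(w|D) P(Q|D) over the top-ranked documents, and the expanded query interpolates the original query (weight ν) with the selected feedback terms (weight 1 − ν). The unsmoothed estimate of P(w|D), the function names and the toy documents are our assumptions for illustration; the estimation actually used is the one described in Sect. 5 and implemented in Indri.

```python
from collections import Counter

def feedback_terms(top_docs, n_terms):
    """Rank candidate expansion terms from pseudo-relevant documents.

    top_docs: list of (tokens, query_likelihood), one entry per feedback
    document (#docs fb entries). P(w|D) is the unsmoothed relative frequency,
    which is a simplification. Returns the n_terms best (term, weight) pairs.
    """
    scores = Counter()
    for tokens, q_likelihood in top_docs:
        for term, tf in Counter(tokens).items():
            scores[term] += (tf / len(tokens)) * q_likelihood
    total = sum(scores.values())
    return [(t, s / total) for t, s in scores.most_common(n_terms)]

def expand_query(original, terms, nu):
    """Interpolate the original query (nu) with feedback terms (1 - nu)."""
    fb = " ".join(f"{w:.4f} {t}" for t, w in terms)
    return f"#weight( {nu:.1f} {original} {1 - nu:.1f} #weight( {fb} ) )"

# Two hypothetical feedback documents with their query likelihoods.
top_docs = [(["a", "b", "a", "c"], 0.6), (["a", "b"], 0.4)]
terms = feedback_terms(top_docs, n_terms=2)
expanded = expand_query("#combine(a d)", terms, nu=0.7)
```

In this sketch the original query string may be any structured expression, so the same interpolation applies whether the first-pass query is the fi query or a glsd + or glfd + query.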

We also experimented using the two-stage term dependence models glsd + and glfd + with pseudo-relevance feedback, varying #docs fb and #terms fb over 5, 10, 15, 20, 30, 40 and 50 each, and ν over 0.3, 0.5, 0.7 and 0.9. The results show that the glfd + model worked better than the glsd + model when combined with pseudo-relevance feedback. Specifically, the glfd + model worked 3.7% better than the glsd + model in average precision, averaged over all the parameter combinations (with a maximum of 17.4%). The mean average precision over all topics with optimized values for ν for each combination of (#docs fb , #terms fb ) is shown in Fig. 5. In this figure, the results for the term independence model, i.e., the fi model, are also shown as a baseline. Selected evaluation values are shown in Table 8, where ‘AvgPrec a ’, ‘AvgPrec c ’ and ‘AvgPrec o ’ indicate the mean average precision over all the 47 topics, that over 23 topics that include the compound words in the title field, and that over 24 topics that do not include the compound words, respectively. ‘%chg f ’ and ‘%chg t ’ were calculated on the bases of (i) no pseudo-relevance feedback, i.e., ν = 1.0, for each model; and (ii) the term independence model alone, i.e., the fi model without pseudo-relevance feedback, respectively. Figure 5 and Table 8 show that the phrase-based query structuring method works better than the baseline that does not assume term dependence at all, for almost every combination of (#docs fb , #terms fb ). The figure and table also show that, for a sufficient number of feedback documents, more feedback terms generally give more effective retrieval performance, but at the expense of searching cost.

6.4.2 Experiments on pseudo-relevance feedback for testing

We carried out experiments on the combination of phrase-based query structuring and pseudo-relevance feedback. For the phrase-based query structuring we used the glsd + and glfd + models with the optimal parameters. For evaluation, we used the relevance judgment data that were provided by the organizers of the NTCIR-5 WEB task. For the pseudo-relevance feedback, we used the top-ranked 5 and 10 documents (#docs fb ), and 5, 10 and 20 terms (#terms fb ) for feedback. We used the optimized value of the original query weight ν, which we obtained from training, in Sect. 6.4.1, corresponding to each pair of (#docs fb , #terms fb ). The results are shown in Table 9. In this table, ‘AvgPrec a ’, ‘AvgPrec c ’ and ‘AvgPrec o ’ indicate the mean average precision over all the 35 topics, that over 22 topics that include the compound words in the title field, and that over 13 topics that do not include the compound words, respectively. We performed significance tests on ‘AvgPrec a ’ on the bases of (i) no pseudo-relevance feedback, i.e., ν = 1.0, for each model; and (ii) the term independence model alone, i.e., the fi model without pseudo-relevance feedback, as shown in this table (Footnote 12). ‘%chg f ’ and ‘%chg t ’ were calculated on the bases of (i) and (ii) mentioned above, respectively.
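The way ν is carried over from training to testing can be sketched as a simple lookup: for each (#docs fb , #terms fb ) pair, keep the ν that gave the highest mean average precision on the training topics, and reuse it unchanged on the test topics. The function name and the scores in `train_map` are hypothetical.

```python
def best_nu_per_setting(train_map):
    """Map each (docs_fb, terms_fb) pair to the nu with the best training MAP.

    train_map: dict keyed by (docs_fb, terms_fb, nu) -> mean average precision
    measured on the training topics.
    """
    best = {}
    for (docs_fb, terms_fb, nu), score in train_map.items():
        key = (docs_fb, terms_fb)
        if key not in best or score > best[key][1]:
            best[key] = (nu, score)
    return {key: nu for key, (nu, _) in best.items()}

# Hypothetical training results for two feedback settings.
train_map = {
    (5, 10, 0.3): 0.21, (5, 10, 0.5): 0.24, (5, 10, 0.7): 0.23,
    (10, 20, 0.5): 0.26, (10, 20, 0.9): 0.25,
}
nu_for_test = best_nu_per_setting(train_map)
```

Fixing ν on the training topics in this way keeps the test topics untouched during parameter selection.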

As shown at the top of the results for the glfd + model (when ν = 1.0) in this table and at the top of the results of the fi model, the glfd + model alone worked 13% better than the fi model alone in mean average precision, in total. This is the same as reported in Table 7. The combination of the phrase-based query structuring and the pseudo-relevance feedback achieved statistically significant improvements over the phrase-based query structuring alone, under certain conditions of (#docs fb , #terms fb ). The results in Table 9 also show that, by combining with phrase-based query structuring, the pseudo-relevance feedback works effectively both for queries that include compound words and those that do not include compound words. Combining with the pseudo-relevance feedback, the glfd + model worked 9–15% better than the fi model, in mean average precision, under the same conditions of (#docs fb , #terms fb ) in the results shown in Table 9.

Table 10 shows example feedback terms for cases in which average precision increased and decreased using the glfd + model, under the condition that (#docs fb , #terms fb ) = (10, 20) and ν = 0.5. The table also indicates whether, and by how much, average precision increased or decreased compared with that without pseudo-relevance feedback. Topics 80 and 62 were the most successful cases, and we can see that their feedback terms include synonyms of query terms. For instance, “shikisai” and “karā” are synonyms of “iro” (color), which appears in the query of Topic 80. On the other hand, Topic 19 was the worst case, partially because some numeric words hurt retrieval effectiveness.

7 Conclusions

In this paper, we proposed new phrase-based query structuring methods, which are based on a theoretical framework using Markov random fields. Our two-stage term dependence model captures both the global dependence between query components explicitly delimited by separators in a query, and the local dependence between constituents within a compound word when the compound word appears in a query component. We found that query structuring using our two-stage term dependence model worked significantly better, by 13% in mean average precision, than the baseline that did not assume term dependence at all, and better than models that only assumed either global dependence or local dependence in the query. The experimental results also imply that our models work more effectively for queries expressed in compound words, which are often used in the Japanese language.

As another contribution of this paper, we investigated how query structuring with term dependence could improve the performance of query expansion via a relevance model. We demonstrated through a series of experiments that the combination of the term dependence model and the relevance model was more effective than either the term dependence model or the relevance model alone. When we tested the two-stage term dependence alone, as mentioned above, this model worked 13% better in mean average precision than the baseline with the term independence model. When we tested the two-stage term dependence with the relevance model, this model worked 8–12% better in mean average precision than the baseline with the two-stage term dependence alone. Consequently, we achieved a significant 22–27% gain in mean average precision from the term independence model without query expansion, when we combined the term dependence model and the relevance model.
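As a rough consistency check on these figures, assuming that the two relative gains compound multiplicatively and using the rounded percentages quoted above:

\[
(1 + 0.13)(1 + 0.08) \approx 1.22, \qquad (1 + 0.13)(1 + 0.12) \approx 1.27,
\]

which agrees with the reported overall gain of 22–27%. The check uses rounded values only, so it may differ slightly from a calculation based on the unrounded mean average precision values in the tables.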

The two-stage term dependence model may be reasonable for other languages, if query components can be specified in a query, for example “‘ozone hole’ ‘human body’”. In this paper we assumed that the query components were delimited by separators in a query. This model is also applicable if we perform phrase detection on a long query or natural language input and we consider the resulting phrases to be query components. The application to natural language-based queries in either Japanese or English, employing an automatic phrase detection technique, is worth pursuing as future work.

Notes

The Japanese language is mainly expressed in kanji, hiragana and katakana characters. Kanji is derived from ancient Chinese characters. English alphabetic words are also sometimes used in a Japanese text, especially as proper nouns.

This kind of approach was also employed for English (e.g., Mitra et al. 1997).

This is also referred to as the term independence model hereafter.

Query Term Expansion Subtask.

For the training, we used the relevance judgment data based on the page-unit document model (Eguchi et al. 2003) included in the NTCIR-3 WEB test collection.

The topics were a subset of those created for the NTCIR-4 WEB, Informational Retrieval Subtask. The relevance judgments were additionally performed by extension of the relevance data of the NTCIR-4 WEB. The task was motivated by the question “Which terms should be added to the original query to improve search results?” The objectives of this paper are different from those of that task; however, the data set is suitable for our experiments.

Regardless of whether the POS tagging function is chosen or not, the resulting segmentation is the same, in this case of the MeCab tool.

As suffix words, we used suffix nouns, suffix verbs and suffix adjectives; and as prefix words, we used nominal prefixes, verbal prefixes, adjectival prefixes and numerical prefixes, according to the part-of-speech system used in the MeCab tool.

If Metzler and Croft’s model is instead applied to all decomposed words in a whole query, ignoring boundaries across query components, the number of combinations of the words increases exponentially. Indeed, in our preliminary experiments using some Japanese queries, searching with this simple application did not complete within a feasible time.

We tested using both the sequential dependence model and the full dependence model. The results of these two models were almost the same in this context. Only the result using the sequential dependence model is shown, as naive-lsd, in Fig. 4.

The results of significance tests on ‘AvgPrec c ’ or ‘AvgPrec o ’ are not presented, since 22 or 13 topics make it difficult to achieve statistical significance.

References

Buckley, C., Salton, G., Allan, J., & Singhal, A. (1994). Automatic query expansion using SMART: TREC 3. In Proceedings of the 3rd text retrieval conference, Gaithersburg, MD (pp. 69–80).

Chen, A., & Gey, F. C. (2002). Experiments on cross-language and patent retrieval at NTCIR-3 Workshop. In Proceedings of the 3rd NTCIR workshop, Tokyo, Japan.

Clarke, C., Craswell, N., & Soboroff, I. (2004). Overview of the TREC 2004 terabyte track. In Proceedings of TREC 2004, Gaithersburg, MD.

Craswell, N., & Hawking, D. (2003). Overview of the TREC 2003 web track. In Proceedings of TREC 2003, Gaithersburg, MD (pp. 78–92).

Croft, W. B., & Lafferty, J. (Eds.). (2003). Language modeling for information retrieval. Norwell, MA: Kluwer Academic Publishers.

Croft, W. B., Turtle, H. R., & Lewis, D. D. (1991). The use of phrases and structured queries in information retrieval. In Proceedings of ACM SIGIR 1991, Illinois, USA (pp. 32–45).

Eguchi, K. (2005). NTCIR-5 query expansion experiments using term dependence models. In Proceedings of the 5th NTCIR workshop, Tokyo, Japan.

Eguchi, K., Oyama, K., Aizawa, A., & Ishikawa, H. (2004). Overview of the informational retrieval task at NTCIR-4 WEB. In Proceedings of the 4th NTCIR workshop, Tokyo, Japan.

Eguchi, K., Oyama, K., Ishida, E., Kando, N., & Kuriyama, K. (2003). Overview of the web retrieval task at the third NTCIR workshop. In Proceedings of the 3rd NTCIR workshop, Tokyo, Japan.

Fujii, H., & Croft, W. B. (1993). A comparison of indexing techniques for Japanese text retrieval. In Proceedings of ACM SIGIR 1993, Pittsburgh, PA (pp. 237–246).

Fujita, S. (1999). Notes on phrasal indexing: JSCB evaluation experiments at NTCIR ad hoc. In Proceedings of the first NTCIR workshop, Tokyo, Japan (pp. 101–108).

Jones, G. J. F., Sakai, T., Kajiura, M., & Sumita, K. (1998). Experiments in Japanese text retrieval and routing using the NEAT system. In Proceedings of ACM SIGIR 1998, Melbourne, Australia (pp. 197–205).

Kando, N., Kageura, K., Yoshioka, M., & Oyama, K. (1998). Phrase processing methods for Japanese text retrieval. SIGIR Forum, 32(2), 23–28.

Lavrenko, V., & Croft, W. B. (2001). Relevance based language models. In Proceedings of ACM SIGIR 2001, New Orleans, LA (pp. 120–127).

Metzler, D., & Croft, W. B. (2004). Combining the language model and inference network approaches to retrieval. Information Processing and Management, 40(5), 735–750.

Metzler, D., & Croft, W. B. (2005). A Markov random field model for term dependencies. In Proceedings of ACM SIGIR 2005, Salvador, Brazil (pp. 472–479).

Metzler, D., Strohman, T., Turtle, H., & Croft, W. B. (2004). Indri at TREC 2004: Terabyte track. In Proceedings of TREC 2004, Gaithersburg, MD.

Mishne, G., & de Rijke, M. (2005). Boosting web retrieval through query operations. In Proceedings of the 27th European conference on information retrieval research, Santiago de Compostela, Spain (pp. 502–516).

Mitra, M., Buckley, C., Singhal, A., & Cardie, C. (1997). An analysis of statistical and syntactic phrases. In Proceedings of RIAO 97, Montreal, Canada (pp. 200–214).

Moulinier, I., Molina-Salgado, H., & Jackson, P. (2002). Thomson legal and regulatory at NTCIR-3: Japanese, Chinese and English retrieval experiments. In Proceedings of the 3rd NTCIR workshop, Tokyo, Japan.

Ogawa, Y., & Matsuda, T. (1997). Overlapping statistical word indexing: A new indexing method for Japanese text. In Proceedings of ACM SIGIR 1997, Philadelphia, PA (pp. 226–234).

Strohman, T. (2007). Efficient processing of complex features for information retrieval. PhD thesis, University of Massachusetts, Amherst.

Strohman, T., Turtle, H., & Croft, W. B. (2005). Optimization strategies for complex queries. In Proceedings of ACM SIGIR 2005, Salvador, Brazil (pp. 219–225).

Turtle, H. R., & Croft, W. B. (1991). Evaluation of an inference network-based retrieval model. ACM Transactions on Information Systems, 9(3), 187–222.

Xu, J., & Croft, W. B. (1996). Query expansion using local and global document analysis. In Proceedings of ACM SIGIR 1996, Zurich, Switzerland (pp. 4–11).

Yoshioka, M. (2005). Overview of the NTCIR-5 WEB query expansion task. In Proceedings of the 5th NTCIR workshop, Tokyo, Japan.

Zhai, C., & Lafferty, J. (2001). Model-based feedback in the language modeling approach to information retrieval. In Proceedings of ACM CIKM 2001, Atlanta, GA (pp. 403–410).

Acknowledgements

We thank Donald Metzler and David Fisher for valuable discussions and comments. This work was supported in part by the Grants-in-Aid for Scientific Research (#19024055, #18650057 and #20300038) from the Ministry of Education, Culture, Sports, Science and Technology, Japan, and in part by the Center for Intelligent Information Retrieval. Any opinions, findings and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect those of the sponsor.

Cite this article

Eguchi, K., Croft, W.B. Query structuring and expansion with two-stage term dependence for Japanese web retrieval. Inf Retrieval 12, 251–274 (2009). https://doi.org/10.1007/s10791-009-9092-1

DOI: https://doi.org/10.1007/s10791-009-9092-1