Abstract

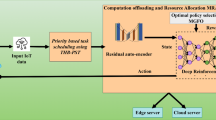

The Edge Cloud (EC) architecture provides compute power at the edge of the network to minimize latency for Internet of Things (IoT) applications. However, an EC has limited compute capacity compared with the back-end cloud (BC), so intelligent resource management becomes imperative in such a resource-constrained environment. In this study, we propose HRL-Edge-Cloud, a novel heuristic reinforcement learning-based multi-resource allocation (MRA) framework that jointly addresses the bottlenecks of wireless bandwidth and compute capacity at the EC and BC. We solve the MRA problem by accelerating the conventional Q-Learning algorithm with a heuristic method and applying a novel linear-annealing technique. Additionally, our proposed pruning principle achieves high resource utilization efficiency while maintaining a low rejection rate. We validate the effectiveness of the proposed method through extensive simulations in environments of three different scales. Compared with the baseline algorithm, HRL-Edge-Cloud achieves 240X, 95X and 2.4X reductions in runtime, convergence time and rejection rate, respectively, and a 2.34X improvement in operational cost efficiency on average, while satisfying the latency requirement.
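To make the combination of Q-Learning, a heuristic, and linear annealing concrete, the following is a minimal sketch under stated assumptions; it is not the HRL-Edge-Cloud algorithm itself, whose state space, reward function, and pruning principle are not given in this abstract. The toy environment, the reward values, the heuristic rule, the weight `xi`, and all function names are hypothetical. The sketch assumes that the heuristic biases the greedy action choice by adding a bonus to the Q-values, and that "linear annealing" refers to a linearly decaying exploration rate.

```python
import random

CAP = 3                              # hypothetical number of EC capacity units
ACTIONS = ("EC", "BC", "REJECT")     # hypothetical action set

def linear_epsilon(step, total_steps, eps_start=1.0, eps_end=0.05):
    """Linearly anneal the exploration rate from eps_start to eps_end."""
    frac = min(max(step / total_steps, 0.0), 1.0)
    return eps_start + frac * (eps_end - eps_start)

def heuristic(state):
    """Assumed rule of thumb: prefer the EC while it has free units, else the BC."""
    preferred = 0 if state > 0 else 1
    return [1.0 if a == preferred else 0.0 for a in range(len(ACTIONS))]

def choose_action(q_row, h_row, epsilon, rng, xi=1.0):
    """Epsilon-greedy choice whose greedy step maximises Q + xi * H."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_row))
    scores = [q + xi * h for q, h in zip(q_row, h_row)]
    return max(range(len(scores)), key=scores.__getitem__)

def env_step(state, action):
    """Toy dynamics with made-up rewards; the state is the number of free EC units."""
    if action == 0:                  # try to serve the request at the EC
        if state > 0:
            return state - 1, 1.0    # served at the edge, one unit consumed
        return state, -1.0           # no free unit: penalised, state unchanged
    reward = 0.3 if action == 1 else -1.0   # BC is served but slower; reject is penalised
    return min(state + 1, CAP), reward      # one EC unit frees up in the meantime

def q_update(q, state, action, reward, next_state, alpha=0.1, gamma=0.9):
    """Standard tabular Q-Learning update; the heuristic only affects action choice."""
    target = reward + gamma * max(q[next_state])
    q[state][action] += alpha * (target - q[state][action])

def train(total_steps=5000, seed=0):
    """Run the toy learner and return the learned Q-table."""
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(CAP + 1)]
    state = CAP
    for step in range(total_steps):
        eps = linear_epsilon(step, total_steps)
        action = choose_action(q[state], heuristic(state), eps, rng)
        next_state, reward = env_step(state, action)
        q_update(q, state, action, reward, next_state)
        state = next_state
    return q
```

In this sketch the heuristic leaves the update rule untouched and only steers which actions are tried, so a poor heuristic would slow learning rather than change what the table converges to. Calling `train()` returns a `(CAP + 1) x 3` table whose row-wise maxima give the learned greedy policy for the toy problem.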

Code Availability

The simulation code will be made publicly available after the community release of the COSMOS testbed. In the meantime, the code can be provided by the corresponding author on reasonable request.

Availability of data and materials

The authors confirm that all data, including the numerical results captured and analyzed during the validation of this study, are included in this article.

Acknowledgements

We thank Colin Skow (colinskow@gmail.com) for discussions and help with the world formulation.

Funding

This work is supported in part by the National Science Foundation (NSF) IRNC COSMIC (#2029295) and NSF PAWR COSMOS (#1827923).

Author information

Contributions

Both authors contributed to the conception and design of this study. The system model, problem formulation and algorithm design were carried out by Arslan Qadeer and analyzed by Myung J. Lee. The first draft of the manuscript was completed by Arslan Qadeer. Myung J. Lee read and approved the final manuscript.

Ethics declarations

Conflict of Interests

The authors declare no conflict of interests.

About this article

Cite this article

Qadeer, A., Lee, M.J. HRL-Edge-Cloud: Multi-Resource Allocation in Edge-Cloud based Smart-StreetScape System using Heuristic Reinforcement Learning. Inf Syst Front 26, 1399–1415 (2024). https://doi.org/10.1007/s10796-022-10366-2

DOI: https://doi.org/10.1007/s10796-022-10366-2