Abstract

Computational models of primary visual cortex have demonstrated that principles of efficient coding and neuronal sparseness can explain the emergence of neurones with localised oriented receptive fields. Yet, existing models have failed to predict the diverse shapes of receptive fields that occur in nature. The existing models used a particular “soft” form of sparseness that limits average neuronal activity. Here we study models of efficient coding in a broader context by comparing soft and “hard” forms of neuronal sparseness.

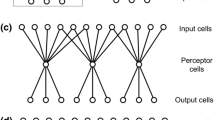

As a result of our analyses, we propose a novel network model for visual cortex. The model forms efficient visual representations in which the number of active neurones, rather than mean neuronal activity, is limited. This form of hard sparseness also economises cortical resources like synaptic memory and metabolic energy. Furthermore, our model accurately predicts the distribution of receptive field shapes found in the primary visual cortex of cat and monkey.

Notes

For an introduction to these models, see chapter ten of Dayan and Abbott (2003).

The distributions of receptive field shapes in the primary visual cortex of cat and monkey are very similar; for a comparison, see Ringach (2002).

Note that in the Sparsenet, even though the combination of feedforward and feedback is linear, the coding is ultimately a nonlinear operation on the input because feedback is present.

References

Atick JJ (1992) Could information theory provide an ecological theory of sensory processing? Network: Comput. Neural Syst. 3: 213–251.

Attneave F (1954) Some informational aspects of visual perception. Psychol. Rev. 61: 183–193.

Baddeley R (1996) An efficient code in V1? Nature 381: 560–561.

Barlow HB (1983) Understanding natural vision. Springer-Verlag, Berlin.

Baum EB, Moody J, Wilczek F (1988) Internal representations for associative memory. Biol. Cybern. 59: 217–228.

Bell AJ, Sejnowski TJ (1997) The independent components of natural images are edge filters. Vis. Res. 37: 3327–3338.

Bethge M, Rotermund D, Pawelzik K (2003) Second order phase transition in neural rate coding: binary encoding is optimal for rapid signal transmission. Phys. Rev. Lett. 90(8): 088104.

Buhmann J, Schulten K (1988) Storing sequences of biased patterns in neural networks with stochastic dynamics. In R Eckmiller, C von der Malsburg, eds. Neural Computers (Neuss 1987). Springer-Verlag, Berlin, pp. 231–242.

Chen SS, Donoho DL, Saunders MA (1998) Atomic decomposition by basis pursuit. SIAM J. Sci. Comput. 20(1): 33–61.

Dayan P, Abbott L (2003) Theoretical Neuroscience. MIT Press, Cambridge MA.

Donoho DL, Elad M (2002) Optimally sparse representation in general (nonorthogonal) dictionaries via \(l^{1}\) minimization. PNAS 100(5): 2197–2202.

Field DJ (1987) Relations between the statistics of natural images and the response properties of cortical cells. J. Opt. Soc. Am. A 4: 2379–2394.

Földiák P (1995) Sparse coding in the primate cortex. In MA Arbib, ed. The Handbook of Brain Theory and Neural Networks, MIT Press, Cambridge, MA, pp. 165–170.

Gardner-Medwin A (1976) The recall of events through the learning of associations between their parts. Proc. R. Soc. London. B 194: 375–402.

Gibson JJ (1966) The Perception of the Visual World. Houghton Mifflin, Boston, MA.

Hinton GE, Sallans B, Ghahramani ZJ (1997) A hierarchical community of experts. In M Jordan, ed. Learning in Graphical Models, Kluwer Academic Publishers, pp. 479–494.

Hopfield JJ (1982) Neural networks and physical systems with emergent collective computational abilities. Proc. Nat. Acad. Sci. (USA) 79: 2554–2558.

Hurri J, Hyvärinen A (2003) Simple-cell-like receptive fields maximize temporal coherence in natural video. Neural Comput. 15: 663–691.

Hyvärinen A, Oja E (2000) Independent component analysis: algorithms and applications. Neural Netw. 13: 411–430.

Jones JP, Palmer LA (1987) The two-dimensional spatial structure of simple receptive fields in the striate cortex. J. Neurophysiol. 58: 1187–1211.

Laughlin SB, Sejnowski TJ (2003) Communication in neuronal networks. Science 301: 1870–1874.

Lennie P (2003) The cost of cortical computation. Curr. Biol. 13(6): 493–497.

Li Z, Atick J (1994) Towards a theory of the striate cortex. Neural Comput. 6: 127–146.

Mallat S, Zhang Z (1993) Matching pursuit in time-frequency dictionary. IEEE Trans. Signal Process. 41: 3397–3415.

Olshausen BA, Field DJ (1996) Emergence of simple-cell receptive field properties by learning a sparse code for natural images. Nature 381: 607–609.

Olshausen BA, Field DJ (1997) Sparse coding with an overcomplete basis set: a strategy employed by V1? Vis. Res. 37: 3311–3325.

Palm G (1980) On associative memory. Biol. Cybern. 36: 19–31.

Palm G, Sommer FT (1992) Information capacity in recurrent McCulloch-Pitts networks with sparsely coded memory states. Network: Comput. Neural Syst. 3: 1–10.

Palm G, Sommer FT (1995) Associative data storage and retrieval in neural networks. In E Domany, JL van Hemmen, K Schulten, eds. Models of Neural Networks III. Springer, New York, pp. 79–118.

Pati Y, Rezaiifar R, Krishnaprasad P (1993) Orthogonal matching pursuits: recursive function approximation with applications to wavelet decomposition. In Proceedings of the 27th Asilomar Conference on Signals, Systems and Computers, pp. 40–44.

Perrinet L, Samuelides M, Thorpe S (2004) Sparse spike coding in an asynchronous feed-forward multi-layer neural network using matching pursuit. Neurocomputing 57: 125–134.

Peters A, Payne BR (1994) A numerical analysis of the geniculocortical input to striate cortex in the monkey. Cereb. Cortex 4: 215–229.

Rebollo-Neira L, Lowe D (2002) Optimised orthogonal matching pursuit approach. IEEE Signal Process. Lett. 9: 137–140.

Rehn M, Sommer FT (2006) Storing and restoring visual input with collaborative rank coding and associative memory. Neurocomputing 69(10–12): 1219–1223.

Ringach DL (2002) Spatial structure and symmetry of simple-cell receptive fields in macaque primary visual cortex. J. Neurophysiol. 88(1): 455–463.

Ruderman D (1994) The statistics of natural images. Network: Comput. Neural Syst. 5: 517–548.

Sallee P (2002) Statistical methods for image and signal processing. PhD Thesis, UC Davis.

Sallee P, Olshausen BA (2002) Learning sparse multiscale image representations. In TK Leen, TG Dietterich, V Tresp, eds. Advances in Neural Information Processing Systems, vol. 13. Morgan Kaufmann Publishers, Inc., pp. 887–893.

Sommer FT, Wennekers T (2005) Synfire chains with conductance-based neurons: internal timing and coordination with timed input. Neurocomputing 65–66: 449–454.

Stroud JM (1956) The fine structure of psychological time. In H Quastler, ed. Information Theory in Psychology. Free Press, pp. 174–205.

Teh Y, Welling M, Osindero S, Hinton GE (2003) Energy-based models for sparse overcomplete representations. J. Mach. Learn. Res. 4: 1235–1260.

Treves A (1991) Dilution and sparse coding in threshold-linear nets. J. Phys. A: Math. Gen. 24: 327–335.

Tsodyks MV, Feigelman MV (1988) The enhanced storage capacity in neural networks with low activity level. Europhys. Lett. 6: 101–105.

Van Essen DC, Anderson CH (1995) Information processing strategies and pathways in the primate retina and visual cortex. In SF Zornetzer, JL Davis, C Lau, eds. Introduction to Neural and Electronic Networks, 2nd ed. Orlando, Academic Press, pp. 45–76.

VanRullen R, Koch C (2003) Is perception discrete or continuous? Trends Cogn. Sci. 7: 207–213.

VanRullen R, Koch C (2005) Attention-driven discrete sampling of motion perception. Proc. Nat. Acad. Sci., USA 102: 5291–5296.

Willshaw DJ, Buneman OP, Longuet-Higgins HC (1969) Non-holographic associative memory. Nature 222: 960–962.

Willwacher G (1982) Storage of a temporal sequence in a network. Biol. Cybern. 43: 115–126.

Zetsche C (1990) Sparse coding: the link between low level vision and associative memory. In R Eckmiller, G Hartmann, G Hauske, eds., Parallel Processing in Neural Systems and Computers. Elsevier Science Publishers B.V. (North Holland).

Acknowledgment

We thank B. A. Olshausen for providing ideas, D. Ringach for the experimental data, and T. Bell, J. Culpepper, G. Hinton, J. Hirsch, C. v. d. Malsburg, B. Mel, L. Perrinet, D. Warland, L. Zhaoping and J. Zhu for discussions. Further, we thank the reviewers for helpful suggestions. Financial support was provided by the Strauss-Hawkins trust and KTH.

Additional information

Action Editor: Misha Tsodyks

Appendices

Appendix A: Derivation of the energy functions for hard-sparseness

Inserting \(b_i = a_i y_i, f(x) = ||x||_{L_0}\), and the definitions \(c=\Psi x, C = \Psi \Psi^T\) and \(P^y = \mbox{diag}(y)\), one can rewrite Eq. (2) (up to the constant \(\left\langle x,x\right\rangle\!/2\)) as

where \(\mbox{Tr}(P^y) = \sum_i y_i = ||y||\). Equation (12) requires interleaved optimisation in both variable sets \(a\) and \(y\). For fixed \(y\), the optimal analogue coefficients are \(a^* = \mbox{argmin}_a ||P^y C P^y a - P^y c||\). The solution can be computed as in Eq. (4) using the pseudoinverse. If one inserts Eq. (4) in Eq. (12), the resulting energy function is

which solely depends on the binary variables since the coefficients are optimised implicitly.
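As a sketch of the two energy functions referred to above, assume that Eq. (2) has the standard sparse-coding form of a squared reconstruction error plus a weighted sparseness term. The weight \(\lambda\) is a hypothetical name, and the identification \(||b||_{L_0} = \sum_i y_i\) assumes that \(a_i \neq 0\) for every active unit. Under these assumptions, the substitutions listed above give:

```latex
% Assumed form of Eq. (2); \lambda is a hypothetical name for the sparseness weight
E(b \mid x, \Psi) = \tfrac{1}{2}\, \| x - \Psi^T b \|^2 + \lambda f(b)

% Inserting b = P^y a, c = \Psi x, C = \Psi \Psi^T and dropping <x,x>/2
E(a, y \mid x, \Psi) = \tfrac{1}{2} \langle a, P^y C P^y a \rangle
                     - \langle c, P^y a \rangle + \lambda\, \mbox{Tr}(P^y)

% Inserting the optimal coefficients a^* = [P^y C P^y]^+ P^y c
E(y \mid x, \Psi) = -\tfrac{1}{2} \langle c, P^y [P^y C P^y]^+ P^y c \rangle
                  + \lambda\, \|y\|
```

The last line uses \(M^+ M M^+ = M^+\) for \(M = P^y C P^y\), so the quadratic term and the linear term combine into a single inner product.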

Using the identities for the pseudoinverse: \(A^+ = [A^T A]^+ A^T\) and \([A^T]^+ = (A^+)^T\) (see pseudoinverse in Wikipedia), the inner product in Eq. (13) can be written

The operator in the inner product on the RHS of Eq. (14) is a projection operator:

which projects onto the subspace spanned by the receptive fields of the active units \(\{i: y_i=1\}\). Equation (14) and definition (15) yield Eq. (5).
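The projection property can be checked numerically. The following is a minimal NumPy sketch, assuming that the operator has the form \(Q^y = [P^y \Psi]^+ P^y \Psi\), which is what the two pseudoinverse identities give when applied to the inner product \(\langle c, P^y [P^y C P^y]^+ P^y c\rangle\). The random dictionary, the dimensions, the choice of active units and the names `Q` and `TOL` are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
n_units, n_pixels = 12, 8                        # overcomplete toy dictionary
Psi = rng.standard_normal((n_units, n_pixels))   # rows play the role of receptive fields
x = rng.standard_normal(n_pixels)                # toy input patch

y = np.zeros(n_units)
y[[1, 4, 7]] = 1.0                               # three active units
P = np.diag(y)                                   # P^y = diag(y)
c = Psi @ x                                      # c = Psi x
C = Psi @ Psi.T                                  # C = Psi Psi^T

TOL = 1e-10  # relative cutoff below which singular values count as zero

# Inner product written with the pseudoinverse of P^y C P^y.
lhs = c @ P @ np.linalg.pinv(P @ C @ P, rcond=TOL) @ P @ c

# Assumed projector onto the span of the active receptive fields.
Q = np.linalg.pinv(P @ Psi, rcond=TOL) @ (P @ Psi)
rhs = x @ Q @ x

active_fields = Psi[y == 1]                      # shape (3, n_pixels)
is_projector = np.allclose(Q @ Q, Q) and np.allclose(Q, Q.T)
keeps_active = np.allclose(Q @ active_fields.T, active_fields.T)
```

Here `lhs` and `rhs` agree up to rounding, `Q` is idempotent and symmetric, and its trace equals the number of active units, as expected for an orthogonal projector onto a three-dimensional subspace.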

Another way to rewrite Eq. (13) is to insert \(P^y C P^y = [P^y (C-1\!\!1) P^y +1\!\!1] P^y =: C^y P^y\). \(C^y\) is a full rank matrix if the selected set of basis functions is linearly independent. This is guaranteed in the complete case and very likely to be fulfilled for sparse selections in overcomplete bases. Thus, for sparse \(y\) vectors we can replace the pseudoinverse in Eq. (13) by the ordinary inverse and use the power series expansion: \([C^y]^{-1} = 1\!\!1 - P^y (C-1\!\!1) P^y + [P^y (C-1\!\!1) P^y]^2 - \cdots \). Using the expansion up to the first order yields the approximations for Eqs. (5) and (4), respectively

With the definition \(T_{ij}:= - c_i C_{ij} c_j + 2 \delta_{ij} c_i^2\), Eq. (7) follows from Eq. (16).
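The steps of this second rewriting can be illustrated with a small NumPy sketch. It assumes unit-norm basis functions, so that \(C_{ii} = 1\) and the expansion is taken around the identity. The dimensions, the random dictionary and all variable names are illustrative assumptions. The sketch checks three algebraic facts: the factorisation \(P^y C P^y = C^y P^y\), the agreement of the pseudoinverse solution with the ordinary inverse of \(C^y\), and the equality between the first-order inner product and the quadratic form \(\sum_{ij} y_i T_{ij} y_j\).

```python
import numpy as np

rng = np.random.default_rng(1)
n_units, n_pixels = 6, 64
Psi = rng.standard_normal((n_units, n_pixels))
Psi /= np.linalg.norm(Psi, axis=1, keepdims=True)  # assumption: unit-norm fields, C_ii = 1
x = rng.standard_normal(n_pixels)

y = np.array([1.0, 0.0, 1.0, 0.0, 0.0, 1.0])       # sparse binary selection
P = np.diag(y)
I = np.eye(n_units)
c = Psi @ x
C = Psi @ Psi.T

Cy = P @ (C - I) @ P + I                           # C^y, full rank for independent fields

# Exact coefficients: pseudoinverse form versus ordinary inverse of C^y.
a_pinv = np.linalg.pinv(P @ C @ P, rcond=1e-10) @ P @ c
a_inv = np.linalg.solve(Cy, P @ c)

# Coefficients from the series for [C^y]^{-1} truncated after the first order.
a_first = (I - P @ (C - I) @ P) @ P @ c

# T_ij = -c_i C_ij c_j + 2 delta_ij c_i^2
T = -np.outer(c, c) * C + 2.0 * np.diag(c**2)
gain_first = y @ T @ y        # first-order estimate of <c, P^y [P^y C P^y]^+ P^y c>
gain_exact = c @ P @ a_pinv   # exact value of the same inner product
```

How close `gain_first` is to `gain_exact` depends on how small the overlaps \(C_{ij}\), \(i \neq j\), between the selected basis functions are; the series itself only converges if the spectral radius of \(P^y (C-1\!\!1) P^y\) is below one.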

Appendix B: Fitting of receptive fields

In the sparse regime, each basis function can be well fitted with a two-dimensional Gabor function in the image coordinates \(u, v\): \(h(u',v') = A\; \exp [-( \frac{u'}{\sqrt 2 \sigma_{u'}})^2 - ( \frac{v'}{\sqrt 2 \sigma_{v'}})^2] \cos ( 2 \pi f u' + \Phi )\), where \(u'\) and \(v'\) are translated and rotated image coordinates, \(\sigma_{u'}\) and \(\sigma_{v'}\) represent the widths of the Gaussian envelope, and \(f\) and \(\Phi\) are the spatial frequency and phase of the sinusoidal grating. Notation in Fig. 6: \(\mbox{width} := \sigma_{u'} f\) and \(\mbox{length} := \sigma_{v'} f\). To measure the asymmetry of a Gabor function, we split \(h\) along the \(v'\) axis into \(h_-\) and \(h_+\) and use: \(\mbox{Asym} := |\int h_+ ds - \int h_- ds |/ \int |h| ds\).
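The Gabor function and the asymmetry measure can be evaluated on a pixel grid as in the following NumPy sketch. It does not perform the fit itself; it only evaluates \(h\), the width and length, and Asym for given parameters. The translation `u0`, `v0`, the rotation angle `theta`, the grid and the parameter values are hypothetical choices, since the text only states that \(u'\) and \(v'\) are translated and rotated coordinates. The integrals are approximated by sums over grid points, and the constant area element cancels in the ratio.

```python
import numpy as np

def rotated_coords(u, v, u0=0.0, v0=0.0, theta=0.0):
    """Translate by (u0, v0) and rotate by theta (hypothetical parameter names)."""
    du, dv = u - u0, v - v0
    up = du * np.cos(theta) + dv * np.sin(theta)
    vp = -du * np.sin(theta) + dv * np.cos(theta)
    return up, vp

def gabor(up, vp, A, sigma_u, sigma_v, f, phi):
    """Gabor function h(u', v') with Gaussian envelope and cosine grating."""
    envelope = np.exp(-(up / (np.sqrt(2) * sigma_u)) ** 2
                      - (vp / (np.sqrt(2) * sigma_v)) ** 2)
    return A * envelope * np.cos(2 * np.pi * f * up + phi)

def asymmetry(h, up):
    """Asym = |sum h_+ - sum h_-| / sum |h|, with h split at u' = 0 (the v' axis)."""
    h_plus = h[up > 0].sum()
    h_minus = h[up < 0].sum()
    return abs(h_plus - h_minus) / np.abs(h).sum()

# Symmetric grid that contains u = 0 exactly.
grid = np.arange(-100, 101) / 100.0
u, v = np.meshgrid(grid, grid)
up, vp = rotated_coords(u, v)

sigma_u, sigma_v, f = 0.2, 0.3, 1.0
width, length = sigma_u * f, sigma_v * f           # axes used in Fig. 6

h_even = gabor(up, vp, 1.0, sigma_u, sigma_v, f, phi=0.0)
h_odd = gabor(up, vp, 1.0, sigma_u, sigma_v, f, phi=np.pi / 2)
asym_even = asymmetry(h_even, up)                  # even-symmetric field
asym_odd = asymmetry(h_odd, up)                    # odd-symmetric field
```

For the cosine-phase field the two halves cancel and Asym is zero up to rounding, while the sine-phase field with a narrow envelope gives a value close to one.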

Cite this article

Rehn, M., Sommer, F.T. A network that uses few active neurones to code visual input predicts the diverse shapes of cortical receptive fields. J Comput Neurosci 22, 135–146 (2007). https://doi.org/10.1007/s10827-006-0003-9
