Abstract

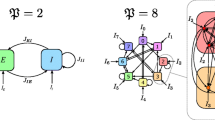

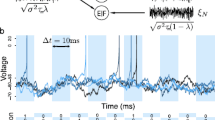

We present an approach for using kinetic theory to capture first and second order statistics of neuronal activity. We coarse grain neuronal networks into populations of neurons and calculate the population average firing rate and output cross-correlation in response to time varying correlated input. We derive coupling equations for the populations based on first and second order statistics of the network connectivity. This coupling scheme is based on the hypothesis that second order statistics of the network connectivity are sufficient to determine second order statistics of neuronal activity. We implement a kinetic theory representation of a simple feed-forward network and demonstrate that the kinetic theory model captures key aspects of the emergence and propagation of correlations in the network, as long as the correlations do not become too strong. By analyzing the correlated activity of feed-forward networks with a variety of connectivity patterns, we provide evidence supporting our hypothesis of the sufficiency of second order connectivity statistics.

Similar content being viewed by others

References

Abbott, L. F., & van Vreeswijk, C. (1993). Asynchronous states in networks of pulse-coupled oscillators. Physical Review E., 48, 1483–1490.

Apfaltrer, F., Ly, C., & Tranchina, D. (2006). Population density methods for stochastic neurons with realistic synaptic kinetics: Firing rate dynamics and fast computational methods. Network: Computation in Neural Systems, 17, 373–418.

Barna, G., Gröbler, T., & Érdi, P. (1998). Statistical model of the hippocampal CA3 region—II. The population framework: Model of rhythmic activity in the CA3 slice. Biological Cybernetics, 79, 309–321.

Binder, M. D., & Powers, R. K. (2001). Relationship between simulated common synaptic input and discharge synchrony in cat spinal motoneurons. Journal of Neurophysiology, 86, 2266–2275.

Brunel, N., & Hakim, V. (1999). Fast global oscillations in networks of integrate-and-fire neurons with low firing rates. Neural Computation, 11(7), 1621–1671.

Cai, D., Tao, L., Rangan, A., & McLaughlin, D. (2006). Kinetic theory for neuronal network dynamics. Communications in Mathematical Sciences, 4, 97–127.

Casti, A. R., Omurtag, A., Sornborger, A., Kaplan, E., Knight, B., Victor, J., et al. (2002). A population study of integrate-and-fire-or-burst neurons. Neural Computation, 14, 957–86.

Câteau, H., & Fukai, F. (2001). Fokker–Planck approach to the pulse packet propagation in synfire chain. Neural Networks, 14, 675–685.

Câteau, H., & Reyes, A. D. (2006). Relation between single neuron and population spiking statistics and effects on network activity. Physical Review Letters, 96, 058, 101.

Diesmann, M., Gewaltig, M. O., & Aertsen, A. (1999). Stable propagation of synchronous spiking in cortical neural networks. Nature, 402, 529–533.

Doiron, B., Rinzel, J., & Reyes, A. (2006). Stochastic synchronization in finite size spiking networks. Physical Review E., 74, 030, 903.

Dorn, J. D., & Ringach, D. L. (2003). Estimating membrane voltage correlations from extracellular spike trains. Journal of Neurophysiology, 89, 2271–2278.

Galán, R. F., Fourcaud-Trocmé, N., Ermentrout, G. B., & Urban, N. N. (2006). Correlation-induced synchronization of oscillations in olfactory bulb neurons. Journal of Neuroscience, 26, 3646–3655.

Gardiner, C. W. (2004).Handbook of stochastic methods for physics, chemistry and the natural sciences, 3rd edn. New York: Springer.

Gerstner, W. (2000). Population dynamics of spiking neurons: Fast transients, asynchronous states, and locking. Neural Computation, 12, 43–89.

Hasegawa, H. (2003). Dynamical mean-field theory of spiking neuron ensembles: Response to a single spike with independent noises. Physical Review E., 67, 041, 903.

Haskell, E., Nykamp, D. Q., & Tranchina, D. (2001). Population density methods for large-scale modeling of neuronal networks with realistic synaptic kinetics: Cutting the dimension down to size. Network: Computation in Neural Systems, 12(2), 141–174.

Hildebrand, E. J., Buice, M. A., & Chow, C. C. (2007). Kinetic theory of coupled oscillators. Physical Review Letters, 98, 054, 101.

Hohn, N., & Burkitt, A. N. (2001). Shot noise in the leaky integrate-and-fire neuron. Physical Review E., 63, 031, 902.

Huertas, M. A., & Smith, G. (2006). A multivariate population density model of the dlgn/pgn relay. Journal of Computational Neuroscience, 21, 171–89.

Ichimaru, S. (1973) Basic principles of plasma physics: A statistical approach. New York: Benjamin.

Jaynes, E. T. (1957) Information theory and statistical mechanics. Physical Review, 106, 62–79.

Knight, B. W. (2000). Dynamics of encoding in neuron populations: Some general mathematical features. Neural Computation, 12, 473–518.

Knight, B. W., Manin, D., & Sirovich, L. (1996). Dynamical models of interacting neuron populations. In E. C., Gerf, (Ed.), Symposium on robotics and cybernetics: Computational engineering in systems applications, cite scientifique Lille, France.

Litvak, V., Sompolinsky, H., Segev, I., & Abeles, M. (2003). On the transmission of rate code in long feedforward networks with excitatory-inhibitory balance. Journal of Neuroscience, 23, 3006–3015.

Ly, C., & Tranchina, D. (2008). Spike train statistics and dynamics with synaptic input from any renewal process: A population density approach. Neural Computation. doi:10.1162/neco.2008.03-08-743.

Masuda, N., & Aihara, K. (2002). Bridging rate coding and temporal spike coding by effect of noise. Physical Review Letters, 88, 248, 101.

Mattia, M., & Del Giudice, P. (2002). Population dynamics of interacting spiking neurons. Physical Review E., 66, 051, 917.

McFadden, J. A. (1965) The entropy of a point process. Journal of the Society for Industrial and Applied Mathematics, 13, 988–994

Meffin, H., Burkitt, A. N., & Grayden, D. B. (2004). An analytical model for the “large, fluctuating synaptic conductance state” typical of neocortical neurons in vivo. Journal of Computational Neuroscience, 16, 159–175

Moreno, R., de la Rocha, J., Renart, A., & Parga, N. (2002). Response of spiking neurons to correlated inputs. Physical Review Letters, 89, 288, 101

Moreno-Bote, R., & Parga, N. (2006). Auto- and crosscorrelograms for the spike response of leaky integrate-and-fire neurons with slow synapses. Physical Review Letters, 96, 028, 101.

Nicholson, D. (1992) Introduction to plasma theory. Malabar, Florida: Krieger.

Nirenberg, S., & Victor, J. (2007). Analyzing the activity of large populations of neurons: How tractable is the problem? Current Opinion in Neurobiology, 17, 397–400.

Nykamp, D. Q. (2005). Revealing pairwise coupling in linear-nonlinear networks. SIAM Journal on Applied Mathematics, 65, 2005–2032.

Nykamp, D. Q. (2007a) Exploiting history-dependent effects to infer network connectivity. SIAM Journal on Applied Mathematics, 68, 354–391.

Nykamp, D. Q. (2007b) A mathematical framework for inferring connectivity in probabilistic neuronal networks. Mathematical Biosciences, 205, 204–251.

Nykamp, D. Q., & Tranchina, D. (2000). A. population density approach that facilitates large-scale modeling of neural networks: Analysis and an application to orientation tuning. Journal of Computational Neuroscience, 8, 19–50.

Nykamp, D. Q., & Tranchina, D. (2001). A. population density approach that facilitates large-scale modeling of neural networks: Extension to slow inhibitory synapses. Neural Computation, 13, 511–546.

Omurtag, A., Kaplan, E., Knight, B., & Sirovich, L. (2000a) A population approach to cortical dynamics with an application to orientation tuning. Network: Computation in Neural Systems, 11, 247–260.

Omurtag, A., Knight, B. W., & Sirovich, L. (2000b) On the simulation of large populations of neurons. Journal of Computational Neuroscience, 8, 51–63.

Reyes, A. D. (2003). Synchrony-dependent propagation of firing rate in iteratively constructed networks in vitro. Nature Neuroscience, 6, 593–599.

de la Rocha, J., Doiron, B., Shea-Brown, E., Josić, K., & Reyes, A. (2007). Correlation between neural spike trains increases with firing rate. Nature, 448, 802–806.

de la Rocha, J., Moreno-Bote, R., & Câteau, H. (2008). Propagation of temporally correlated spike trains: A Fokker-Planck analysis (in preparation).

van Rossum, M. C. W., Turrigiano, G. G., & Nelson, S. B. (2002). Fast propagation of firing rates through layered networks of noisy neurons. Journal of Neuroscience, 22, 1956–1966.

Saad, Y., & Schultz, M. H. (1986) GMRES: A generalized minimal residual algorithm for solving nonsymmetric linear systems. SIAM Journal on Scientific and Statistical Computing, 7, 856–869.

Salinas, E., & Sejnowski, T. (2002). Integrate-and-fire neurons driven by correlated stochastic input. Neural Computation, 14, 2111–2155.

Schneidman, E., Berry, M. J. II, Segev, R., & Bialek, W. (2006). Weak pairwise correlations imply strongly correlated network states in a neural population. Nature, 440, 1007–1012.

Shadlen, M. N., & Newsome, W. T. (2001). Neural basis of a perceptual decision in the parietal cortex (area LIP) of the rhesus monkey. Journal of Neurophysiology, 86, 1916–1936.

Shea-Brown, E., Josić, K., de la Rocha, J., & Doiron, B. (2008). Correlation and synchrony transfer in integrate-and-fire neurons: Basic properties and consequences for coding. Physical Review Letters, 100, 108, 102.

Shinomoto, S., Shima, K., & Tanji, J. (2003). Differences in spiking patterns among cortical neurons. Neural Computation, 15, 2823–2842.

Shlens, J., Field, G. D., Gauthier, J. L., Grivich, M. I., Petrusca, D., Sher, A., et al. (2006). The structure of multi-neuron firing patterns in primate retina. Journal of Neuroscience, 26, 8254–8266.

Sirovich, L. (2008). Populations of tightly coupled neurons: The RGC/LGN system. Neural Computation, 20, 1179–1210.

Sirovich, L., Knight, B., & Omurtag, A. (2000). Dynamics of neuronal populations: The equilibrium solution. SIAM Journal on Applied Mathematics, 60, 2009–2028.

Sirovich, L., Omurtag, A., & Lubliner, K. (2006). Dynamics of neural populations: Stability and synchrony. Network: Computation in Neural Systems, 17, 3–29.

Stevens, C., & Zador, A. (1998). Input synchrony and the irregular firing of cortical neurons. Nature Neuroscience, 1, 210–207.

Svirskis, G., & Hounsgaard, J. (2003). Influence of membrane properties on spike synchronization in neurons: theory and experiments. Network: Computation in Neural Systems 14, 747–763.

Tang, A., Jackson, D., Hobbs, J., Chen, W., Smith, J. L., Patel, H., et al. (2008). A maximum entropy model applied to spatial and temporal correlations from cortical networks in vitro. Journal of Neuroscience, 28, 505–518.

Wang, S., Wang, W., & Liu, F. (2006). Propagation of firing rate in a feed-forward neuronal network. Physical Review Letters, 96, 018, 103.

Yu, S., Huang, D., Singer, W., & Nikolic, D. (2008). A small world of neuronal synchrony. Cereb Cortex. doi:10.1093/cercor/bhn047.

Acknowledgements

This work was supported in part by NSF grant DMS 0719724 (DQN) and NIH training grant R90 DK71500 (CYL). We thank Dan Tranchina, Brent Doiron, Michael Buice, Carson Chow, and Hide Câteau for helpful discussions.

Author information

Authors and Affiliations

Corresponding author

Additional information

Action Editor: Carson C. Chow

Appendices

Appendices

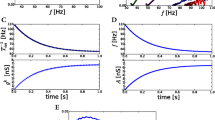

A Method for solving the kinetic theory equations

The first step in solving the kinetic theory equations (2) is to rewrite them in conservative form, i.e., divergence form \(\frac{\partial {\rho}}{\partial {t}} = - \nabla \cdot {\mathbf{J}}\), where J is a flux density. The terms describing the advection due to the leak current are already the divergence of a flux density. We need to rewrite the integrals corresponding to the voltage jumps in response to excitatory input. Recall that F A (x) is the complementary cumulative distribution function and f A (x) is the probability density of the random jump size A, so that \(\frac{\partial F_A}{\partial x}=-f_A(x)\). Applying this identity and the fundamental theorem of calculus, we obtain the following equalities. We rewrite the terms due to independent input as

The integrals involving F A are probability flux densities analogous to those defined in Nykamp and Tranchina (2000).

The jumps due to synchronous input are a little more complicated. There are many ways to write them in conservative form. Since the evolution of ρ is symmetric in v 1 and v 2, we kept the equation symmetric by dividing the diagonal jumps into a symmetric pair of horizontal and vertical jumps.

Although these integrals do not correspond to physical horizontal and vertical jumping of the voltage, we view their formulation simply as an intermediate step to developing a numerical scheme to solve the original Eq. (2).

As a result, we obtain the following integro-differential equation:

where

All second components are analogous by symmetry. The reset terms are defined in Eq. (3).

We do not have local conservative equality between the flux density across the threshold \((J_{\mathrm{ind}}^1(v_{\mathrm{th}},v_2,t)+J_{\mathrm{syn}}^1(v_{\mathrm{th}},v_2,t))\) and the reset J reset,1(v 2,t). Due to our definition of the flux density \(J_{\mathrm{syn}}^1(v_{\mathrm{th}},v_2,t)\), it includes the effect of synchronous inputs where the voltage of neuron 2 jumps from v 2 to higher voltages (and hence appears in the reset term J reset,1(v 2,t) at those higher voltage or even in J reset,3(t) if neuron 2 also crossed threshold). Nonetheless, one can verify that the system is globally conservative because the total flux across threshold equals the total reset:

To numerically solve the equations, we used the finite volume approach. We discretized the domain [0,v th] ×[0,v th] in (v 1,v 2) space into squares of size Δv ×Δv with Δv = 0.0125. We let v 1,j = v 2,j = (j − 1/2)Δv so that the point (v 1,j , v 2,k ) is the center of square (j,k). Each point ρ j,k(t) represented the integral of ρ(v 1,v 2,t) over the square (j,k). The divergence \(\nabla \cdot {\mathbf{J}}\) at the center of each square was approximated by differences in the integral of the flux along the boundary of the square, and we approximated the resulting integrals along each line segment with the midpoint rule. We linearly interpolated ρ j,k to estimate its value along the boundary for J leak. To estimate the value of the integrals for J ind and J syn, we used Simpson’s rule.

We reduced the dimension of the system of equations by exploiting the symmetry ρ j,k = ρ k,j. We discretized in time using the trapezoid method with Δt = 0.5 ms. At each time step, we solved the system of linear equations using GMRES (Saad and Schultz 1986). In this way, we used a numerical method that is second order accurate in both time and space.

Since we set \(v_{\mathrm{reset}} \!<\! E_\text r\), the advection flux J leak is non-zero along locations of voltage reset, preventing the J reset,1 and J reset,2 delta-function source terms from forming a delta-function in ρ(v 1,v 2,t). However, the double delta-function source due to the reset J reset,3 will form a delta-function component of ρ. The flux J reset,3 represents a finite probability per unit time that both neurons reset to (v reset,v reset). At that point, the voltage pair will advect along the line \(\{(v_1,v_2)|v_1\!=\!v_2, 0 \!<\! v_1 \!<\! E_\text r\}\) until either neuron receives an input. Hence, there will be finite probability that the voltages of a neuron pair reside along this line. The delta-function in ρ along this line will prevent second order convergence of the above numerical method if we apply it directly to Eq. (17). To ensure convergence, we divide ρ into two components, a smooth component ρ s and a delta function component along the diagonal with weight function ρ δ :

Plugging this expression into the original evolution Eq. (17) and then grouping the delta-function terms will result in coupled evolution equations for ρ s and ρ δ . Since ρ s and ρ δ are smooth, we can solve the resulting equations using our numerical scheme and achieve second order accuracy.

B Calculating connectivity statistics for different network classes

We calculate the expected number of inputs W 1 and the fraction of shared inputs β for networks with arbitrary outgoing degree distributions and incoming connections determined randomly. We examine the connectivity from a presynaptic population 1 with N 1 neurons onto a postsynaptic population 2 with N 2 neurons.

Let \(\hat{W}_{ij}=1\) if there is a connection from neuron j in population 1 onto neuron i in population 2; otherwise, let \(\hat{W}_{ij}=0\). Let \(d_j = \sum_{i=1}^{N_2} \hat{W}_{ij}\) be outgoing degree of neuron j in population 1, i.e., the number of connections from neuron j onto all neurons in population 2. Let the function f(k) be the outgoing degree distribution so that

for k = 1,2, ..., N 2. The expected total number of connections (out of N 1 N 2 possible) is \(N_1 \sum_{k=1}^{N_2} k f(k)\) so that the expected number of connections onto any neuron in population 2 is

If f(k) was given by a one-parameter family of distributions, prescribing W 1 would determine the particular distribution as a function of population sizes N 1 and N 2.

Neuron j in population 1 has d j connections onto neurons in population 2, which we assume are assigned randomly to neurons in population 2. Then, conditioned on this value of d j , the probability of a connection from neuron j onto any given pair (indexed by i 1 and i 2) of neurons in population 2 is

Multiplying by the probability distribution of d j (i.e., the outgoing degree distribution) and summing over all possible values of d j , we determine that the probability a given neuron j in population 1 is connected to a given pair of neurons in population 2 is

To calculate W 2, the total expected number of shared inputs from all N 1 neurons, we simply need to multiply by N 1:

Dividing by W 1 Eq. (21) gives the fraction of shared input parameter β in terms of the degree distribution

For a random network, the degree distribution is a binomial distribution

so that

agreeing with the results from Section 3.2.2. If the degree distribution is given by a power law with maximum degree d max ≤ N 2

the expressions for W 1 and β become

If the degree distribution is given by a Gaussian

the expressions for W 1 and β become

In our simulations, we set N 1 = N 2 = N and solved the first equation of Eq. (23), Eq. (24) or Eq. (25) for the p, γ or σ, respectively that gave the chosen W 1. Then,we used the second equation of Eq. (23), Eq. (24) or Eq. (25) to calculate β.

Rights and permissions

About this article

Cite this article

Liu, CY., Nykamp, D.Q. A kinetic theory approach to capturing interneuronal correlation: the feed-forward case. J Comput Neurosci 26, 339–368 (2009). https://doi.org/10.1007/s10827-008-0116-4

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10827-008-0116-4